
Hyper-V 3 Deep Dive: Network Virtualization

There's lots to like in the new Windows Server 2012, and even more if you compare Hyper-V alone against the competition. Let's start with the extensible switch and virtual switch functionality.

A new IT buzzword (hot on the heels of the now fairly worn "cloud") is software-defined networking (SDN). Driven by the need for data center networks to be more agile and policy-driven than traditional manual switch configuration and VLANs allow, SDN promises a world where your entire data center network is controlled via policy, and where VMs and networks are created and destroyed as the need arises. Microsoft's take on SDN comes in two products: the plumbing is built into Hyper-V in Windows Server 2012 in the form of network virtualization, while the management piece is in System Center Virtual Machine Manager 2012 SP1.

Cloud and SDN = Better Together

Businesses that want to move some of their workloads to a public cloud provider offering IaaS are often required to change the IP addresses of their VMs. For many, this is not an easy task: server IP addresses aren't just random numbers, because security, IPsec and firewall policies are often tied to specific IPs. Finding every place these addresses are referenced and making sure they're all changed is error-prone and time-consuming. It also makes it harder to move between cloud providers, or to link your internal network seamlessly to one or more external clouds.

Windows Server 2012 Hyper-V offers network virtualization (NV) as the solution to these issues. In essence, it does for networking what server virtualization already does for processor, memory and disk. Each VM on any hypervisor today runs on what it sees as a physical machine, but which is in fact just a share of the host's resources.

With NV, each virtual network behaves as if it were running on a dedicated physical network, even though all resources (switching, routing and IP addresses) are shared. This also enables BYOIP (Bring Your Own IP), as VMs can keep their IP addresses when they're moved within a data center or even to a public cloud.

For this to work, each VM is associated with two IP addresses: a Customer Address (CA), which is the address the VM itself sees and uses, and a Provider Address (PA), which is used on the physical network. With NVGRE, VMs on the same host can share a single PA. Ultimately, this means that VMs with identical IP addresses can coexist in the same cluster or even on the same host.
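To make the two-address idea concrete, here is a minimal Python sketch of a lookup table that maps a tenant's CA to a PA. Keying the table on the virtual subnet ID plus the CA is an assumption made for illustration; `LookupRecord` and `build_table` are hypothetical names, not part of Hyper-V or its PowerShell cmdlets, and the addresses are made up.

```python
from dataclasses import dataclass


# Hypothetical record type; not a Hyper-V or SCVMM API.
@dataclass(frozen=True)
class LookupRecord:
    vsid: int              # virtual subnet ID of the tenant network
    customer_address: str  # CA: the address the VM itself sees and uses
    provider_address: str  # PA: the address used on the physical network


def build_table(records):
    """Index records by (VSID, CA) so identical CAs in different tenants don't collide."""
    table = {}
    for r in records:
        key = (r.vsid, r.customer_address)
        if key in table:
            raise ValueError(f"duplicate CA {r.customer_address} in VSID {r.vsid}")
        table[key] = r.provider_address
    return table


records = [
    LookupRecord(5001, "10.0.0.5", "192.168.4.22"),  # tenant "Blue"
    LookupRecord(6001, "10.0.0.5", "192.168.4.22"),  # tenant "Red": same CA, same host, shared PA
]
table = build_table(records)
print(table[(5001, "10.0.0.5")])  # 192.168.4.22
```

Both tenants use 10.0.0.5 on the same host without conflict, because the virtual subnet ID is part of the key.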

Tenant isolation also becomes much easier, as policies can be defined centrally and applied cluster-wide or data center-wide. Today the most common choice for VM isolation is VLAN tagging, which was designed for static environments and means switches have to be reconfigured every time a new VLAN ID is added.

In contrast, NV requires no involvement from the networking team after the initial setup. Note that a virtual network can span multiple subnets if many VMs need to be connected to each other. You can mix VMs with and without NV on the same host, and broadcast traffic is never sent "everywhere": it always passes through NV, which makes sure it only reaches VMs that should see it. Windows Server 2012 itself has no GUI for configuring NV; it's all done through PowerShell. Microsoft provides a PowerPoint presentation with more information on NV and the packet flow here.
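The broadcast behavior can be pictured with a short Python sketch. It assumes, for illustration only, that a broadcast is delivered just to the other VMs in the sender's virtual subnet; the `VM` class and `broadcast_targets` function are hypothetical and say nothing about how Hyper-V implements this internally.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VM:
    name: str
    vsid: int  # virtual subnet the VM's network adapter belongs to (illustrative)


def broadcast_targets(sender, vms):
    """Return the names of VMs that should receive a broadcast from sender.

    Assumption: broadcasts are confined to the sender's virtual subnet
    instead of being flooded to every VM on the host.
    """
    return [vm.name for vm in vms if vm.vsid == sender.vsid and vm is not sender]


vms = [VM("blue-web", 5001), VM("blue-db", 5001), VM("red-web", 6001)]
print(broadcast_targets(vms[0], vms))  # ['blue-db']
```

The "red-web" VM never sees Blue's broadcast, even though it may sit on the same host.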

Figure 1. Creating VM Networks is a whole lot easier using the GUI in SCVMM 2012 SP1 than in PowerShell.

NV also means a VM can be live migrated between clusters, as its PA can be changed without changing the CA and without VM downtime. Because NV is completely transparent to VMs, all guest operating systems can take advantage of it.
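A minimal Python sketch of why a cross-cluster live migration doesn't disturb the guest: only the PA side of the mapping changes. The table layout and the `migrate` helper are hypothetical, and the sketch ignores how the updated mapping would be distributed to other hosts.

```python
# Hypothetical CA-to-PA table keyed by (VSID, CA); not a Hyper-V API.
lookup = {(5001, "10.0.0.5"): "192.168.4.22"}


def migrate(table, vsid, ca, new_pa):
    """Point an existing CA at a new provider address after a live migration.

    The key (VSID, CA) is untouched, so the guest keeps its IP address;
    only the PA the physical network uses to reach it changes.
    """
    key = (vsid, ca)
    if key not in table:
        raise KeyError(f"unknown CA {ca} in VSID {vsid}")
    old_pa = table[key]
    table[key] = new_pa
    return old_pa


old = migrate(lookup, 5001, "10.0.0.5", "172.16.8.40")  # host in another cluster
print(old, "->", lookup[(5001, "10.0.0.5")])  # 192.168.4.22 -> 172.16.8.40
```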

Behind the scenes, NV relies on one of two technologies: IP Rewrite, or GRE (Generic Routing Encapsulation), which is an IETF standard.

GRE wraps the CA packet inside a new packet addressed with the PAs. This outer header also carries a Virtual Subnet ID (VSID), so networking gear can apply per-tenant policies to the traffic. Another advantage is that all VMs on a host can share the same PA, because traffic can be identified by its VSID; this minimizes the number of IP and MAC addresses the infrastructure needs to track. However, NIC offloads won't work, because they rely on seeing the expected IP header. To overcome this limitation, several vendors have defined a draft standard for NVGRE, which will provide the benefits of GRE without the performance loss that comes from being unable to use NIC offloads.
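The encapsulation header itself is small enough to sketch. The Python below packs a GRE header the way the NVGRE specification (the draft mentioned above, later published as RFC 7637) lays it out: the Key Present bit set, protocol type 0x6558 (Transparent Ethernet Bridging), and a 32-bit Key field holding the 24-bit VSID followed by an 8-bit FlowID. NVGRE actually encapsulates the tenant's whole Ethernet frame, and the outer IP header carrying the PAs is left out here; the function names are illustrative only.

```python
import struct

GRE_KEY_PRESENT = 0x2000             # K bit in the first 16 bits of the GRE header
PROTO_TRANSPARENT_ETHERNET = 0x6558  # payload is a full Ethernet frame


def nvgre_header(vsid: int, flow_id: int = 0) -> bytes:
    """Pack the 8-byte GRE header used by NVGRE (outer IP header not included)."""
    if not 0 <= vsid < 2**24:
        raise ValueError("VSID must fit in 24 bits")
    if not 0 <= flow_id < 2**8:
        raise ValueError("FlowID must fit in 8 bits")
    key = (vsid << 8) | flow_id  # 24-bit VSID, then 8-bit FlowID
    return struct.pack("!HHI", GRE_KEY_PRESENT, PROTO_TRANSPARENT_ETHERNET, key)


def parse_vsid(header: bytes) -> int:
    """Recover the VSID, which is what lets hosts and switches tell tenants apart."""
    flags, proto, key = struct.unpack("!HHI", header[:8])
    if not flags & GRE_KEY_PRESENT or proto != PROTO_TRANSPARENT_ETHERNET:
        raise ValueError("not an NVGRE header")
    return key >> 8


hdr = nvgre_header(5001)
print(hdr.hex())        # 2000655800138900
print(parse_vsid(hdr))  # 5001
```

Because the VSID travels in every packet, a shared PA is enough for the receiving host to hand the frame to the right tenant.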

The other option is IP Rewrite, where the addresses in each packet are rewritten to the appropriate CA or PA as it enters or leaves a host. The main advantage here is that NIC offloads work as expected and network equipment doesn't need to change. On the other hand, each VM needs both a CA and its own PA, which makes IP address management more onerous. An in-depth technical look at NV can be found here.
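IP Rewrite can be sketched the same way. This simplified Python version assumes a single tenant and a hypothetical one-to-one CA/PA map; the host swaps addresses on the way out and restores them on the way in. Real packet rewriting would also have to fix up checksums, which is omitted. Because no extra header is added, the packet keeps an ordinary IP header, and because the reverse lookup needs a unique PA per VM, the number of PAs grows with the number of VMs.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Packet:
    src: str
    dst: str
    payload: bytes


# Hypothetical one-to-one mapping: with IP Rewrite each VM needs its own PA.
CA_TO_PA = {"10.0.0.5": "192.168.4.22", "10.0.0.6": "192.168.4.23"}
PA_TO_CA = {pa: ca for ca, pa in CA_TO_PA.items()}


def outbound(pkt):
    """Leaving the host: swap customer addresses for provider addresses."""
    return replace(pkt, src=CA_TO_PA[pkt.src], dst=CA_TO_PA[pkt.dst])


def inbound(pkt):
    """Arriving at the host: restore the customer addresses the VM expects."""
    return replace(pkt, src=PA_TO_CA[pkt.src], dst=PA_TO_CA[pkt.dst])


p = Packet("10.0.0.5", "10.0.0.6", b"hello")
on_wire = outbound(p)
print(on_wire.src, on_wire.dst)  # 192.168.4.22 192.168.4.23
assert inbound(on_wire) == p     # the round trip is transparent to the VM
```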

SCVMM for scale

All the technology required for configuring NV is built into Hyper-V, but unless you have a very small environment where configuring each individual host using PowerShell is appealing, you'll need the tools that System Center Virtual Machine Manager 2012 SP1 (SCVMM) offers.

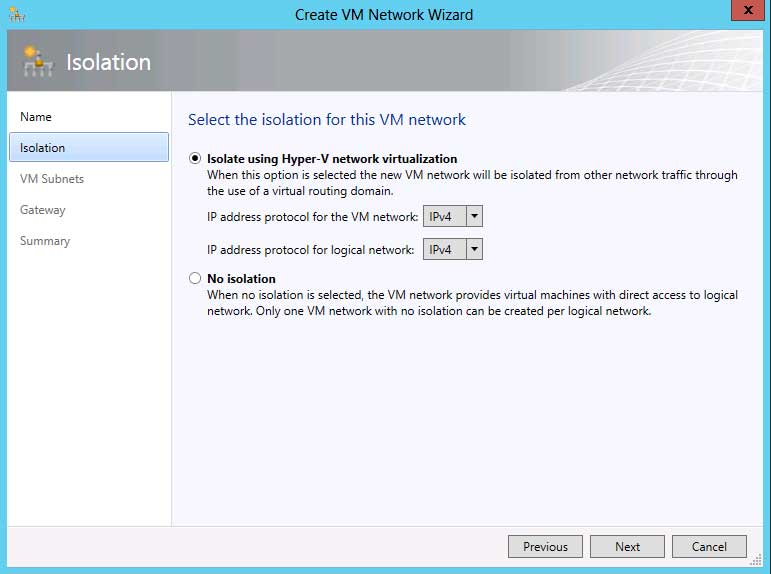

SCVMM 2012 SP1 introduces two new objects for working with NV: the VM Network and the logical switch. VM Networks are routing domains and can contain multiple virtual subnets, as long as they're all able to "talk" to each other. A VM Network can use one of four types of isolation: no isolation, Network Virtualization, VLAN or External. The first type is used for management networks, and for situations where SCVMM itself or other management tools run in VMs and need to communicate freely on all networks.

Network Virtualization isolation builds on the NV features of Windows Server 2012 described above. SCVMM holds the full tables that map CAs to PAs, while each host builds only a partial table. If a host is asked to send a packet to a VM or host it doesn't know about, it updates its table from SCVMM, which keeps the tables each host has to manage small in large environments.
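The on-demand behavior of the host tables resembles a cache in front of a central store. This Python sketch is an analogy under stated assumptions: `CentralStore` stands in for SCVMM and `HostTable` for one host's partial table, both hypothetical, and it does not model how SCVMM actually delivers or invalidates entries (for example after a live migration).

```python
class CentralStore:
    """Stand-in for the full CA-to-PA mapping held by SCVMM (hypothetical)."""

    def __init__(self, mappings):
        self._mappings = dict(mappings)
        self.queries = 0  # how often hosts had to ask

    def lookup(self, vsid, ca):
        self.queries += 1
        return self._mappings[(vsid, ca)]


class HostTable:
    """Partial table on one host: filled on demand, never holding the full map."""

    def __init__(self, store):
        self._store = store
        self._cache = {}

    def resolve(self, vsid, ca):
        key = (vsid, ca)
        if key not in self._cache:  # unknown destination: ask the central store
            self._cache[key] = self._store.lookup(vsid, ca)
        return self._cache[key]

    def __len__(self):
        return len(self._cache)


store = CentralStore({(5001, "10.0.0.5"): "192.168.4.22",
                      (5001, "10.0.0.6"): "192.168.4.23",
                      (6001, "10.0.0.5"): "192.168.4.30"})
host = HostTable(store)
host.resolve(5001, "10.0.0.6")
host.resolve(5001, "10.0.0.6")   # second call is served locally
print(len(host), store.queries)  # 1 1
```

The host ends up tracking one entry out of three, which is the point of partial tables in large environments.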

Figure 2. While creating VM networks is a few clicks in a wizard, it's imperative that proper planning is carried out for production networks. If you thought VM sprawl was bad, imagine VM Network sprawl!

If you're using VLAN isolation mode, you're relying on both physical switches and vSwitches being configured with the right VLANs. Essentially, this takes an existing VLAN-based setup and represents it in SCVMM. In this model you can have only one VLAN per VM network. External isolation relies on extensions installed in the Hyper-V extensible switch to manage the isolation.

The logical switch (similar to the Distributed Switch in vSphere) is a central place to define extensions and settings, which are then automatically replicated to all hosts. SCVMM also brings VSEM (Virtual Switch Extension Manager), which lets you manage switch extensions across all your hosts. These extensions, along with their data and settings, follow VMs as they're live migrated from host to host. SCVMM also ties VM placement to the provisioning of virtual networks, providing a dynamic and highly automated solution. Furthermore, SCVMM integrates per-VM bandwidth capping and reservation through policies.

The missing link

The next step is to link your on-premises data center(s) with a public cloud provider's network through a site-to-site (S2S) VPN. Once this is in place, you can move a VM (using System Center App Controller) from your network to a public cloud without having to alter its IP address configuration. The VM finds internal DNS servers and domain controllers using its normal IP setup, and internal clients looking for resources on the VM also find them just as they did before it moved. Windows Server 2012 contains a network virtualization gateway as part of the Unified Remote Access role, but larger networks are expected to take advantage of network appliances coming to market with this functionality. It can be built into load balancers, top-of-rack (ToR) switches or new stand-alone appliances.

Next time, we'll look at VM network enhancements, specifically SR-IOV, Receive Side Scaling and QoS.

About the Author

Paul Schnackenburg has been working in IT for nearly 30 years and has been teaching for over 20 years. He runs Expert IT Solutions, an IT consultancy in Australia. Paul focuses on cloud technologies such as Azure and Microsoft 365 and how to secure IT, whether in the cloud or on-premises. He's a frequent speaker at conferences and writes for several sites, including virtualizationreview.com. Find him at @paulschnack on Twitter or on his blog at TellITasITis.com.au.