Hyper-V on Hyper-Drive, Part 2: Memory, Storage, Networking

Now that we know how Hyper-V can take advantage of processors, it's a good time to look at how we can take advantage of memory, networking and disk resources without breaking the budget.

Last time, we looked at processors and the balance of virtual and logical processors in Hyper-V hosts. Now, let's look at how to choose a good mix of memory, networking and disk resources to match your budget.

Memory

Before Service Pack 1 was released for Windows Server 2008 R2, assigning memory to VMs and architecting host machines was difficult because only a fixed amount could be assigned to each VM, whether it needed it or not. SP1 introduced Dynamic Memory, and because it is such a game changer in the Hyper-V world, make sure all your hosts are running Windows Server 2008 R2 SP1.

It's also important to understand that Dynamic Memory in Hyper-V differs from ballooning in vSphere/ESXi because the hypervisor communicates with the VM to find out its memory needs. This allows intelligent choices to be made about allocating more or less memory to a VM, but it does require guest OSes that can "talk" to the hypervisor. Hence, Dynamic Memory is only supported with VMs running Windows Server 2008 SP2, 2008 R2 SP1, 2003 R2 SP2 and 2003 SP2, along with Windows 7 and Vista SP1. More information on supported platforms can be found here.

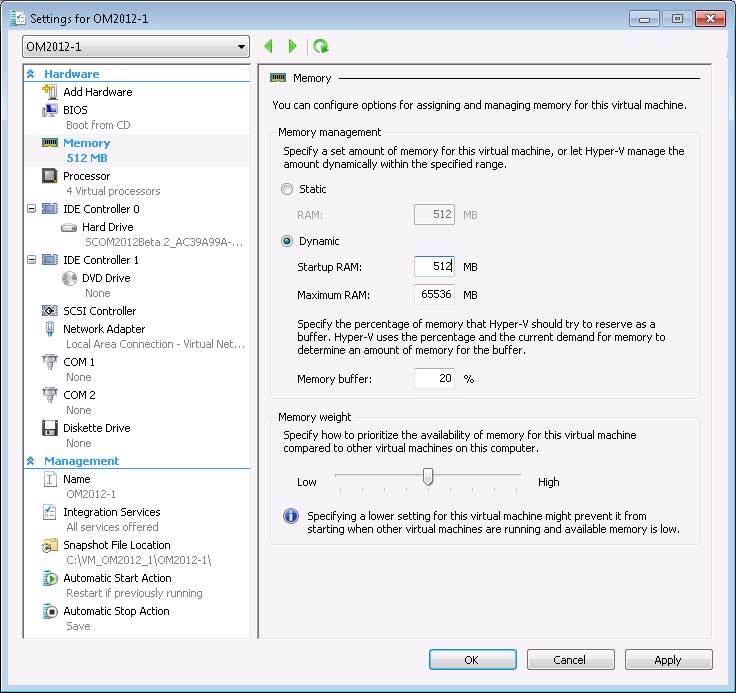

Some applications (Exchange and SQL Server are examples) do their own memory management, and Microsoft strongly recommends not using Dynamic Memory for VMs running these workloads. With Dynamic Memory, each VM is allocated a certain amount of start-up memory. Microsoft recommends 512 MB for Windows Server 2008/2008 R2, Vista and Windows 7, while Windows 2003 and XP should be assigned 128 MB.

The host, or parent partition, has a default memory reserve. You can alter this using a registry key located at HKLM:\SOFTWARE\Microsoft\Windows NT\CurrentVersion\Virtualization\MemoryReserve. This key is a REG_DWORD and you have to create it; the default decimal value is 32 (for 32 MB) and the maximum is 1024. If you follow best practices and run minimal services in the host, the default should work fine. Microsoft only supports backup, management and anti-malware agents in the parent partition.

Figure 1. Assigning the right amount of memory to running virtual servers is a whole lot easier with Dynamic Memory.

Bottom line: For efficient use of your hardware and to get good performance out of your VMs, ensure that the total memory needed by your VMs stays lower than the overall amount of RAM in your hosts.
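As a rough illustration of that bottom line, here is a minimal Python sketch of a memory budget for one host. The VM names, the expected peak figures, the 32 GB host and the 2 GB allowance for the parent partition are all made-up assumptions for the example; only the 512 MB and 128 MB start-up values come from the recommendations above.

```python
from dataclasses import dataclass


@dataclass
class VmMemory:
    name: str
    startup_mb: int        # start-up memory assigned to the VM
    expected_peak_mb: int  # hypothetical estimate of the VM's peak demand


def memory_budget(host_ram_mb, parent_allowance_mb, vms):
    """Return (start-up total, peak total, headroom at peak) in MB."""
    startup_total = sum(vm.startup_mb for vm in vms)
    peak_total = sum(vm.expected_peak_mb for vm in vms)
    headroom = host_ram_mb - parent_allowance_mb - peak_total
    return startup_total, peak_total, headroom


# Illustrative figures only, not measurements from a real host.
vms = [
    VmMemory("web01", 512, 4096),
    VmMemory("file01", 512, 8192),
    VmMemory("legacy2003", 128, 2048),
]
startup_total, peak_total, headroom = memory_budget(32768, 2048, vms)
print(f"Start-up: {startup_total} MB, peak: {peak_total} MB, headroom: {headroom} MB")
```

A negative headroom would mean the VMs could collectively ask for more RAM than the host can supply, which is the situation to avoid.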

Storage

Storage is always a tricky part of server design and no less so in the virtual world. Ensuring that applications and VMs get the IOPS (Input/Output Operations per Second) they require is crucial, and virtualization has made it even harder. In the old world, we could assess this need on a per-server basis, but now we may have many servers with different IOPS profiles running on the same physical host.
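One simple way to reason about mixed IOPS profiles on a single host is to add up the peak demand of each VM and compare it with what the storage back end can deliver. The sketch below does only that; the VM names, the per-VM figures and the 3,000 IOPS back-end capability are hypothetical, and a real assessment would also look at read/write mix and latency.

```python
def total_iops(profiles):
    """Sum the peak IOPS of every VM sharing the same storage."""
    return sum(profiles.values())


# Hypothetical peak IOPS per VM on one host.
vm_iops = {"sql01": 1200, "exch01": 800, "file01": 300, "web01": 150}

required = total_iops(vm_iops)
backend_iops = 3000  # assumed capability of the underlying disks or SAN
utilization = required / backend_iops

print(f"Required: {required} IOPS, back end used: {utilization:.0%}")
```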

Some applications have specific storage enhancements (Exchange Server 2010, for instance, has several tricks to optimize the performance of the underlying disk subsystem for sequential IO, as does SQL Server). All of these optimizations are lost when you move to virtualized disks, as the "disk" the VM sees is actually just a large file on a drive or a SAN. There are a few ways to compensate for this. One is to use pass-through disks (raw disks in the VMware world), where the VM has full access to a physical disk. The drawback is that there's no way of backing up the disk from outside of the VM. The other option is to decrease the latency and increase the speed of the disks, which generally means a more expensive SAN with more spindles and/or SSDs. SSDs have excellent performance for random read IO, making them eminently suited for storing VHD files, but of course their cost per gigabyte is high.

There are two types of VHD disks that you can attach to a VM: fixed size or dynamic. The former means that a 100 GB VHD is created as a 100 GB file initially; the latter starts off as a small file (while still appearing as a 100 GB drive to the VM) but grows as data is added. The benefit of dynamic disks is better utilization of your storage hardware, as only the storage actually used is consumed, but you have to be careful that you don't oversubscribe the underlying storage and run out of space as virtual disks grow. The golden rule used to be that fixed disks gave better IO performance, but the gap is closing and in Hyper-V 2008 R2 the difference is minimal. For a more in-depth exploration, see this white paper from Microsoft; the relevant section starts at page 25. Be aware that some workloads, such as Exchange, aren't supported on dynamically expanding disks.
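To make the oversubscription risk concrete, the following Python sketch compares the maximum sizes of a set of dynamic VHDs with the capacity of the volume that holds them. The file names, sizes and the 500 GB volume are invented for the example.

```python
from dataclasses import dataclass


@dataclass
class DynamicVhd:
    name: str
    max_gb: int   # size the VM sees
    used_gb: int  # space the file currently occupies


def oversubscription_report(volume_gb, vhds):
    """Summarize provisioned versus actual use on one volume."""
    provisioned = sum(v.max_gb for v in vhds)
    used = sum(v.used_gb for v in vhds)
    return {
        "provisioned_gb": provisioned,
        "used_gb": used,
        "free_gb": volume_gb - used,
        # True when the disks could outgrow the volume if they all fill up.
        "oversubscribed": provisioned > volume_gb,
    }


# Illustrative figures only.
report = oversubscription_report(
    500,
    [
        DynamicVhd("web01.vhd", 100, 30),
        DynamicVhd("file01.vhd", 300, 120),
        DynamicVhd("app01.vhd", 200, 45),
    ],
)
print(report)
```

In this example the volume still has plenty of free space today, yet the disks could grow to 600 GB on a 500 GB volume, so free space needs to be monitored.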

Networking

To achieve a well-performing Hyper-V platform, don't forget the networking subsystem. If you have five, ten or more VMs on a host, don't expect them to fit all their connectivity needs through a couple of Gigabit NICs. As always, it pays to know your workloads. If you're going to virtualize busy file servers, make sure to allow enough virtual network cards for the task. Hyper-V supports up to eight synthetic network interfaces in each VM (along with four emulated NICs, but these are not recommended where performance matters). 10 Gb Ethernet is starting to become affordable and is a great way of increasing bandwidth.
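A back-of-the-envelope calculation can show how quickly a couple of Gigabit NICs run out. This Python sketch estimates how many physical NICs a host would need for a given set of VM bandwidth peaks. The per-VM figures and the 70 percent utilization ceiling are assumptions chosen for illustration, not Microsoft guidance.

```python
import math


def nics_needed(vm_peak_mbps, nic_mbps=1000, max_utilization=0.7):
    """Estimate the NICs required to carry the combined VM peak traffic."""
    total = sum(vm_peak_mbps)
    usable_per_nic = nic_mbps * max_utilization
    return math.ceil(total / usable_per_nic)


# Hypothetical peak bandwidth (Mbps) for eight VMs on one host.
demand = [400, 400, 250, 150, 100, 100, 50, 50]

gigabit = nics_needed(demand)                 # Gigabit NICs
ten_gig = nics_needed(demand, nic_mbps=10000)  # 10 Gb NICs
print(f"Gigabit NICs: {gigabit}, 10 Gb NICs: {ten_gig}")
```

The estimate covers VM traffic only; management, live migration and any iSCSI storage traffic would need NICs of their own.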

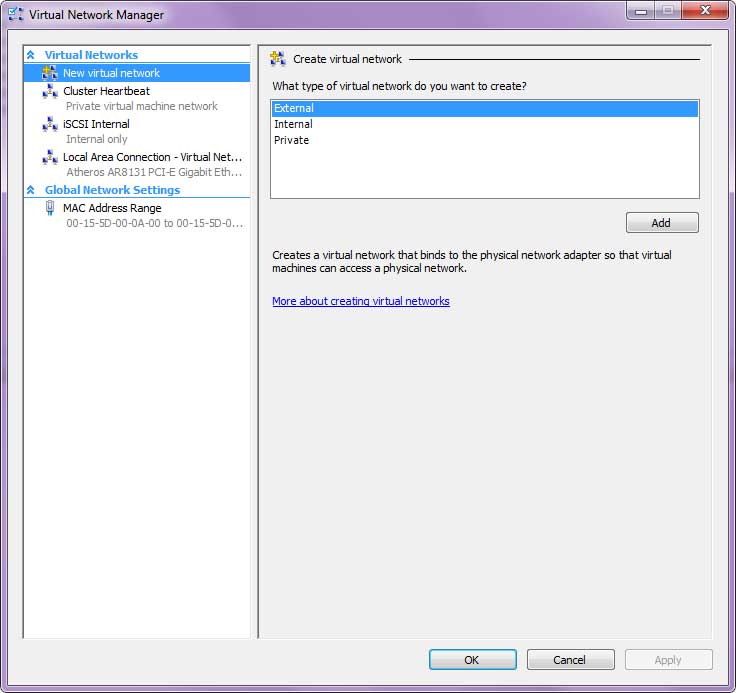

Figure 2. Creating virtual networks is easy in Hyper-V, but we'll have to wait for Hyper-V in Server 8 for true virtual network switch functionality.

NIC teaming is another area where it pays to tread carefully. Officially, Microsoft doesn't support NIC teaming, but some of the vendors/OEMs do. Check with the manufacturer of your NIC to find out whether they support it.

If you're going to use iSCSI for your storage, make sure to allow network cards for this connectivity as well. Use Jumbo Frames on these NICs and disable File Sharing and DNS services on them. Ensure that your NICs support the performance-enhancing features available in Windows Server 2008 R2, such as TCP Chimney Offload and Virtual Machine Queues (VMQ). When using TCP Chimney Offload, you have to enable it both in the OS and in the properties of the driver for each NIC.

Next time, we'll look at some tricks for improving the performance of your VMs.

About the Author

Paul Schnackenburg has been working in IT for nearly 30 years and has been teaching for over 20 years. He runs Expert IT Solutions, an IT consultancy in Australia. Paul focuses on cloud technologies such as Azure and Microsoft 365 and how to secure IT, whether in the cloud or on-premises. He's a frequent speaker at conferences and writes for several sites, including virtualizationreview.com. Find him at @paulschnack on Twitter or on his blog at TellITasITis.com.au.