My Own Private Cloud, Part 1

In this two-part article, we'll look at the hardware I chose for the private cloud I built during some downtime, as well as the software that makes the solution tick.

I've been working with Microsoft Hyper-V since the first version was in beta, on a variety of hardware platforms in my own business, with my clients and at the college where I teach, but I've never had my own in-house cluster (or cloud). Teaching, researching and learning on my Windows Server 2008 R2 laptop (albeit one with 16 GB of RAM and a quad-core CPU) works, but it doesn't give my students the exposure to clustering they need. Over the next month the cluster will move to the college, and I'll use it remotely when I'm not on campus.

So it was time to build a two-node cluster, with a third machine as a domain controller. In this column I'll talk about my hardware choices, the reasoning behind them and how I structured my storage.

First Things First: Hardware

My first step was to contact one of the major hardware vendors for a quote on a couple of rack-mounted servers. I rang my rep at Dell, who recommended two Dell PowerEdge R610s, each with 32 GB of RAM, 2 x 160 GB HDDs and an on-board quad-port Intel NIC. While these are excellent, reliable platforms (most of the clients I support through my business run Dell servers), I wondered whether they were worth the cost. The main benefits I could see were reliable hardware and built-in iDRAC Enterprise (remote access card); the downside was the loud fans. Rack-mounted servers aren't really suited to sitting next to your office chair in a home office.

The other option, which turned out to cost about half as much, was to build my own servers from desktop-class hardware. After a bit of research, I settled on Intel Socket 1155 motherboards (model DH67CLB3) with Core i5-2400 processors and four 8 GB Corsair Vengeance DDR3 sticks per server. Be careful if you select Core i5 processors for a home lab: not all models support all virtualization technologies. In particular, make sure the model you choose supports Extended Page Tables (EPT), Intel's implementation of Second Level Address Translation (SLAT).
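If you already have a candidate machine on hand, one way to confirm EPT support is to look at the CPU feature flags. The sketch below assumes a Linux environment (for example, a live USB boot) where the kernel exposes flags in /proc/cpuinfo; on Intel CPUs, `vmx` indicates VT-x and `ept` indicates Extended Page Tables. The function names are hypothetical, and on Windows the Sysinternals Coreinfo utility can report similar information.

```python
"""Check whether an Intel CPU advertises VT-x and Extended Page Tables (EPT).

Assumes a Linux system exposing CPU feature flags in /proc/cpuinfo.
Function names are illustrative, not part of any existing tool.
"""
from pathlib import Path


def parse_cpu_flags(cpuinfo_text: str) -> set[str]:
    """Collect feature flags from the 'flags' and 'vmx flags' lines."""
    flags: set[str] = set()
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        # Newer kernels list VMX sub-features (including ept) on a separate
        # 'vmx flags' line, so both lines are read.
        if sep and key.strip() in ("flags", "vmx flags"):
            flags.update(value.split())
    return flags


def supports_slat(flags: set[str]) -> bool:
    """True if the CPU reports VT-x (vmx) and EPT, Intel's form of SLAT."""
    return {"vmx", "ept"} <= flags


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")
    if cpuinfo.exists():
        flags = parse_cpu_flags(cpuinfo.read_text())
        print("VT-x + EPT (SLAT):", "yes" if supports_slat(flags) else "no")
    else:
        print("/proc/cpuinfo not found; this sketch assumes Linux.")
```

This only covers Intel processors; AMD CPUs report their equivalents under different flag names.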

I also splurged on Intel I350-T4 quad-port gigabit PCIe network cards for each server. These cards support SR-IOV (Single Root I/O Virtualization), which will become important because Hyper-V in Windows Server 2012 (known as Windows Server 8 during its beta) takes advantage of this technology. Storage ended up being two 2 TB Hitachi drives per server in a RAID 1 mirror, all housed in whisper-quiet Antec Atlas server cases.
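The capacity arithmetic behind a RAID 1 mirror is simple: you get the space of one drive, because every drive holds the same copy. The sketch below is a hypothetical helper for sanity-checking a storage plan with identical drives; it ignores controller and file-system overhead, and it also shows why a "2 TB" drive (decimal units, as vendors count) appears smaller when Windows reports it in binary units.

```python
"""Usable capacity of a RAID array built from identical drives.

A sketch for sanity-checking storage plans; only common levels are covered
and controller/file-system overhead is ignored.
"""

TB = 10**12   # drive vendors use decimal terabytes
TiB = 2**40   # Windows reports sizes in binary units (labelled "TB")


def usable_bytes(level: str, drives: int, drive_bytes: int) -> int:
    """Return usable capacity in bytes for RAID 0, 1, 5 or 10."""
    if level == "0":
        return drives * drive_bytes          # striping, no redundancy
    if level == "1":
        if drives < 2:
            raise ValueError("RAID 1 needs at least 2 drives")
        return drive_bytes                   # every drive holds the same copy
    if level == "5":
        if drives < 3:
            raise ValueError("RAID 5 needs at least 3 drives")
        return (drives - 1) * drive_bytes    # one drive's worth of parity
    if level == "10":
        if drives < 4 or drives % 2:
            raise ValueError("RAID 10 needs an even number of drives, at least 4")
        return (drives // 2) * drive_bytes   # striped mirror pairs
    raise ValueError(f"unsupported RAID level: {level}")


if __name__ == "__main__":
    # Two 2 TB drives in a RAID 1 mirror, as in each server above.
    cap = usable_bytes("1", drives=2, drive_bytes=2 * TB)
    print(f"{cap / TB:.2f} TB decimal, {cap / TiB:.2f} TiB binary")
    # prints: 2.00 TB decimal, 1.82 TiB binary
```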

Figure 1. The building blocks for one Hyper-V server.

Lots of Storage Options

Storage is the next conundrum, with several options available. Building a cluster that offers high availability requires shared storage (until Hyper-V in Windows Server 2012, which offers other options). A Fibre Channel SAN would be really nice, but again, it's out of my budget.

That leaves iSCSI, with two free options on the market. Microsoft has made its iSCSI Software Target 3.3 available as a free download, which is an excellent option for small production deployments or test labs. It can be installed on any Windows Server 2008 R2 or Windows Server 2008 R2 SP1 machine, essentially turning it into a SAN that fully supports Live Migration.

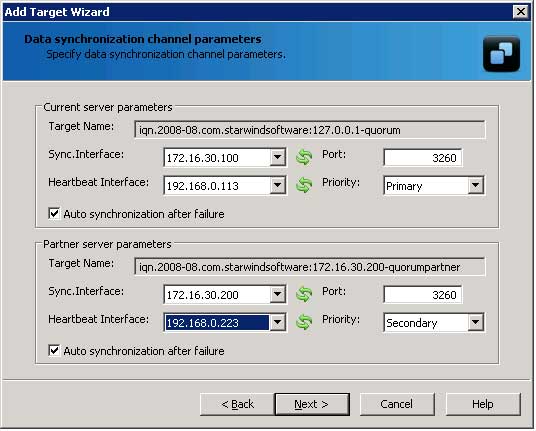

Another option I've used in the past is StarWind Software's iSCSI SAN Free Edition. It has some benefits over Microsoft's offering, including deduplication of stored data, caching and Continuous Data Protection (CDP) features, along with support for a broader range of underlying operating systems.

Both of these solutions require a third box with a number of drives in it to provide the actual storage, and that box represents a single point of failure.
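To put the single-point-of-failure concern into rough numbers, here is a back-of-the-envelope availability comparison. It assumes, purely for illustration, that every box fails independently and has the same hypothetical availability (0.99 below is a placeholder, not a measured figure), and it ignores the network switch and failover time. A cluster backed by one storage box needs the storage box and at least one host to be up; storage replicated across both hosts only needs one host to survive.

```python
"""Rough availability comparison, assuming independent failures.

The 0.99 per-box figure is a hypothetical placeholder, not a measured
value; the switch, software faults and failover time are ignored.
"""


def parallel(*avail: float) -> float:
    """Availability when any one surviving component is enough."""
    all_down = 1.0
    for a in avail:
        all_down *= 1.0 - a
    return 1.0 - all_down


def series(*avail: float) -> float:
    """Availability when every component must be up at once."""
    result = 1.0
    for a in avail:
        result *= a
    return result


if __name__ == "__main__":
    a = 0.99  # hypothetical availability of any single box
    # Two clustered Hyper-V hosts sharing one separate iSCSI storage box.
    separate_san = series(parallel(a, a), a)
    # Two hosts, each holding a synchronously replicated copy of the data.
    replicated = parallel(a, a)
    print(f"single storage box:  {separate_san:.4f}")
    print(f"replicated on hosts: {replicated:.4f}")
```

Under these assumptions the shared storage box caps the whole cluster at roughly the availability of that one box, no matter how many hosts you add.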

Figure 2. In any small to medium clustered Hyper-V installation, StarWind's Native SAN is an excellent fault-tolerant solution.

Casting around for other options, I found StarWind's Native SAN for Hyper-V. This product runs on the virtualization hosts themselves, providing iSCSI target software as well as real-time synchronisation of the data between the two nodes. This means you can build a two-node Hyper-V cluster with replicated data storage without needing two extra servers or high-end SAN hardware. Whilst not free, StarWind offers a license for MCTs (Microsoft Certified Trainers). To round off the foundation for my cloud, I built a third box with 8 GB of RAM and added a 24-port gigabit switch.
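The general idea behind real-time synchronisation between two nodes can be illustrated with a toy model: a write is acknowledged only after both nodes hold it, and if the partner is down, the surviving node keeps serving and remembers which blocks the partner missed so it can catch up later. This is a simplified sketch of synchronous mirroring in general, not StarWind's actual implementation; the class and method names are hypothetical.

```python
"""Toy model of synchronous two-node block mirroring.

Illustrates the general idea only: a write is acknowledged after both
nodes hold it. This is NOT StarWind's implementation; class and method
names are hypothetical.
"""
from __future__ import annotations


class Node:
    def __init__(self, name: str) -> None:
        self.name = name
        self.blocks: dict[int, bytes] = {}
        self.online = True
        self.partner: Node | None = None
        self.dirty: set[int] = set()  # blocks written while partner was down

    def write(self, lba: int, data: bytes) -> bool:
        """Write a block locally and to the partner; return True once acked."""
        if not self.online:
            raise RuntimeError(f"{self.name} is offline")
        self.blocks[lba] = data
        if self.partner is not None and self.partner.online:
            self.partner.blocks[lba] = data  # synchronous copy before the ack
        else:
            self.dirty.add(lba)  # remember what the partner is missing
        return True

    def read(self, lba: int) -> bytes | None:
        return self.blocks.get(lba)

    def resync(self) -> int:
        """Copy blocks the partner missed; return how many were sent."""
        if self.partner is None or not self.partner.online:
            return 0
        for lba in self.dirty:
            self.partner.blocks[lba] = self.blocks[lba]
        sent = len(self.dirty)
        self.dirty.clear()
        return sent


def make_pair() -> tuple[Node, Node]:
    a, b = Node("hv1"), Node("hv2")
    a.partner, b.partner = b, a
    return a, b


if __name__ == "__main__":
    hv1, hv2 = make_pair()
    hv1.write(0, b"boot")
    print(hv2.read(0))    # b'boot': the mirror already has it
    hv2.online = False    # simulate losing the second host
    hv1.write(1, b"log")  # service continues on hv1
    hv2.online = True
    print(hv1.resync())   # 1: one block copied back to hv2
```

Real products also have to handle network partitions (both nodes believing the other is down), which this sketch deliberately leaves out.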

Next time, we'll look at the software that turned this pile of expensive electronics and metal into a cloud platform.

About the Author

Paul Schnackenburg has been working in IT for nearly 30 years and has been teaching for over 20 years. He runs Expert IT Solutions, an IT consultancy in Australia. Paul focuses on cloud technologies such as Azure and Microsoft 365 and how to secure IT, whether in the cloud or on-premises. He's a frequent speaker at conferences and writes for several sites, including virtualizationreview.com. Find him at @paulschnack on Twitter or on his blog at TellITasITis.com.au.