How-To

Hyper-V Deep Dive, Part 5: NUMA, Scalability, Monitoring Improvements

Now that you know what's new in networking in Hyper-V, let's look at scalability, NUMA and VM monitoring.

We've already looked at quite a bit of Hyper-V so far in the first four parts, with a focus on the improvements in network functionality. Let's switch to something different and look at scalability improvements, NUMA considerations, and VM monitoring.

Scalability

In the ongoing competition with VMware, Microsoft's hypervisor in version 2008/2008 R2 was a bit lacking in the numbers game, offering only four virtual CPUs per VM, “only” 64 GB of memory per VM and “only” 1,000 VMs in a 16-node cluster.

In this release Microsoft focused on making sure that any physical server that you have today can be virtualized successfully (think big SQL clusters). Each VM can have up to 64 virtual processors and 1 TB of memory; a cluster can be scaled to 64 nodes with a total maximum of 8,000 VMs. Each VM can have up to 260 virtual hard disks attached (four IDE and 256 SCSI) and each of those virtual hard drive files can be up to 64 TB (the theoretical limit is 1.3 PB) with the new VHDX file format. Each VM can also have up to eight synthetic network adapters (and another four legacy emulated NICs) and up to four virtual Fibre Channel adapters for connecting to a FC SAN (we'll cover it later in this series). These scalability limits have all been tested by Microsoft under production load.

Before we look at the scalability for a host server, an explanation of processor count is in order. In the Hyper-V world a core on a CPU counts as a logical processor (LP), and if the CPU is using Intel Hyper-Threading (making it appear that each core is actually two), each core is counted as two LPs. Each VM is assigned one or more virtual CPUs (vCPUs). Earlier versions limited the ratio of vCPUs to LPs; that limit no longer applies in Windows Server 2012.
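The LP arithmetic is easy to get wrong when you're sizing hosts, so here's a minimal sketch of it in Python. The function name and parameters are made up for illustration; Hyper-V exposes nothing by this name.

```python
def logical_processors(sockets: int, cores_per_socket: int, hyper_threading: bool) -> int:
    """Count LPs as described above: one per core, or two per core with Hyper-Threading."""
    threads_per_core = 2 if hyper_threading else 1
    return sockets * cores_per_socket * threads_per_core


# A hypothetical two-socket, eight-core server with Hyper-Threading enabled.
print(logical_processors(sockets=2, cores_per_socket=8, hyper_threading=True))  # 32
```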

In Server 2012 each Hyper-V host can have up to 320 logical processors, with up to 1,024 VMs running concurrently and a maximum of 2,048 virtual processors across all VMs. A host can have up to 4 TB of RAM. These figures hold true across all three editions of Server 2012 that offer Hyper-V: Standard, Datacenter and the free Hyper-V Server.
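If you're planning a big host, a quick sanity check against these limits might look something like the sketch below. The limits come straight from the figures above; the dictionary keys and the function name are hypothetical.

```python
# Per-host limits for Hyper-V in Windows Server 2012, as quoted in the text.
HOST_LIMITS = {
    "logical_processors": 320,
    "running_vms": 1024,
    "total_vcpus": 2048,
    "memory_tb": 4,
}


def over_limit(plan: dict) -> list:
    """Return the names of any planned values that exceed the host limits."""
    return [key for key, limit in HOST_LIMITS.items() if plan.get(key, 0) > limit]


# A hypothetical plan that is fine on LPs and RAM but too dense on VMs and vCPUs.
plan = {"logical_processors": 64, "running_vms": 1200, "total_vcpus": 2400, "memory_tb": 2}
print(over_limit(plan))  # ['running_vms', 'total_vcpus']
```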

All features are also common across the different editions of Windows Server 2012. Standard, for instance, now comes with Failover Clustering. The difference between the editions is licensing: Standard comes with licensing for two Windows VMs and Datacenter comes with unlimited VM licensing. So if you need to run four Windows VMs on a particular host you can assign two Standard licenses to that server to cover VM licensing. Hyper-V Server, being free, doesn't come with any VM licensing, which makes it an ideal platform for VDI solutions or Linux VMs.
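As a rough illustration of the Standard licensing math, the sketch below counts only the two-VMs-per-license rule described above. It deliberately ignores processor counts and every other real licensing term, so treat it as arithmetic, not licensing advice; the names are hypothetical.

```python
import math

VMS_PER_STANDARD_LICENSE = 2  # two Windows VMs per Standard license, per the text


def standard_licenses_for_vms(windows_vms: int) -> int:
    """Standard licenses needed to cover VM rights only (assumption: nothing else counts)."""
    if windows_vms < 0:
        raise ValueError("VM count cannot be negative")
    return math.ceil(windows_vms / VMS_PER_STANDARD_LICENSE)


print(standard_licenses_for_vms(4))  # 2
```

Past a certain number of VMs per host, Datacenter's unlimited VM licensing becomes the simpler choice.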

How's your NUMA?

These scalability numbers bring another issue into sharp focus: Non-Uniform Memory Access (NUMA). In the world of Big Iron servers (320 logical processors is a server with 16 physical CPUs, each with 10 cores, running Hyper-Threading, and likely several TB of RAM), each CPU doesn't have fast access to all the memory. The system is instead divided into NUMA nodes, each with a particular number of CPU cores and a particular amount of memory, which are cache coherent for fast access. If a thread on one processor needs access to data stored in the memory of another NUMA node, access is significantly slower. Because of the limits in Server 2008 R2 (4 vCPUs and 64 GB per VM) this wasn't an issue for VMs (although there is a setting to allow a VM to span NUMA nodes).

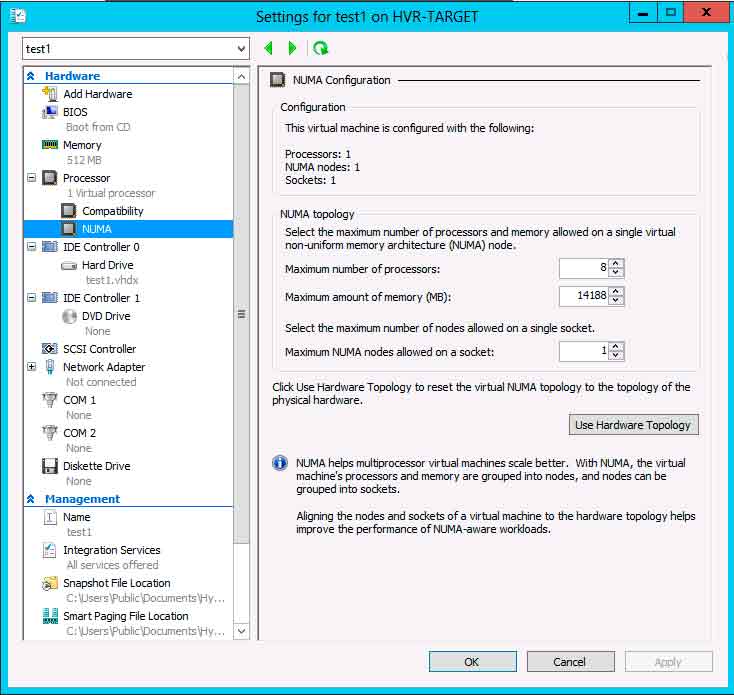

In 2012, however, it's critical that VMs are aware of and work with the boundaries of NUMA nodes for performance not to suffer. This is handled by Hyper-V being aware of NUMA and also projecting the NUMA topology into VMs so that NUMA-aware applications (such as SQL Server and IIS) can optimize their performance; this is called Virtual NUMA. Note that if your VM is using Dynamic Memory, Virtual NUMA is disabled, and that Virtual NUMA only kicks in if your host has more than 8 LPs and more than 64 GB of memory.

Figure 1. If you have clusters with big host machines make sure you investigate your optimal NUMA topologies.

Hyper-V uses the ACPI Static Resource Affinity Table (SRAT) to present NUMA topology to the VM; this is an industry standard, which means that any OS that's NUMA-aware should be able to use Virtual NUMA. Hyper-V automatically detects NUMA topology on a host and configures settings accordingly, but if you have a cluster with servers of different generations, CPU counts and memory sizes, consult your hardware manuals to figure out the NUMA node size for each host. You should then manually set the NUMA node settings to match the smallest NUMA node in your cluster, which ensures that Live Migrating a VM to a different node won't leave it on a host with a smaller NUMA configuration. Performance issues arise if the Virtual NUMA node projected into a VM is larger than the actual physical NUMA node size on the host.
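Picking the "smallest NUMA node in your cluster" can be sketched as follows. This assumes you've already collected each host's NUMA node size by hand (from the hardware manuals, as suggested above); the host names, the `NumaNode` type and the function are all hypothetical. Taking the minimum of processors and memory independently is a conservative assumption of this sketch, not something Hyper-V does for you.

```python
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class NumaNode:
    logical_processors: int  # LPs per physical NUMA node
    memory_gb: int           # memory per physical NUMA node


def smallest_numa_node(hosts: Mapping[str, NumaNode]) -> NumaNode:
    """Return a node size that fits inside every host's physical NUMA node."""
    if not hosts:
        raise ValueError("need at least one host")
    nodes = list(hosts.values())
    return NumaNode(
        logical_processors=min(n.logical_processors for n in nodes),
        memory_gb=min(n.memory_gb for n in nodes),
    )


# Hypothetical mixed-generation cluster.
cluster = {"host-old": NumaNode(8, 64), "host-new": NumaNode(16, 128)}
print(smallest_numa_node(cluster))
```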

If for some reason your manual changes to NUMA settings result in poor performance you can use the handy Use Hardware Topology button to go back to Hyper-V's automatically calculated settings on a host.

Performance is King

Another change to accommodate large systems is Hyper-V Early Launch: Hyper-V starts before the OS and presents only the first 64 LPs to the parent partition (essentially the first VM to run on a host). This avoids Hyper-V being hamstrung by the scalability limits of Windows Server itself. Third-party management tools that haven't been updated will misreport the number of cores in your server, as they'll only see what the parent partition sees, but there are new APIs to get the actual physical host's processor count.

With VMs now supporting many vCPUs another issue comes to the fore: gang scheduling, the process of grouping threads of execution together for good multi-CPU performance. In the physical world the OS can assume that if it detects a certain number of CPU cores at startup they'll always be available for scheduling threads of execution, but in a virtualized environment this no longer holds true. Because Microsoft has control over Windows, it has included components in the kernel so that the hypervisor can tell the VM that it's running in a virtual environment. Windows Server 2008 and later therefore doesn't need gang scheduling and can easily scale up the number of vCPUs, whereas Windows Server 2003 VMs can only have two vCPUs. Microsoft has also included these functions in its contribution to the Linux kernel.

Note that this is a distinct difference between vSphere and Hyper-V. In the VMware world the recommendation is always to minimize the number of vCPUs and only assign them when a VM is really going to use them. In Hyper-V (with Linux and Windows Server 2008+ VMs) assigning many vCPUs doesn't “cost” anything, and if the VM doesn't use them, other VMs can.

Patching a Cluster (or, 'There goes my weekend....')

As cluster sizes grow, it's no longer practical to manually drain VMs from one node, install all authorized patches, reboot the server and then repeat the process for the next node. While SCVMM 2012 offers orchestrated patching, Windows Server takes it a step further by providing Cluster Aware Updating (CAU) for any type of cluster, not just Hyper-V. By default only Windows Updates are installed, through your normal WSUS or SCCM infrastructure, but Microsoft hotfixes and even non-Microsoft patches can be added with a bit of PowerShell magic and a file share. Third-party vendors can also extend the CAU feature. A cluster can be configured to be self-updating with a clustered role (previously known as clustered service) providing the coordination to automatically download and install updates on a scheduled basis.

You can also schedule cluster-wide tasks that can run on all nodes in a cluster, on only selected nodes, or on the node that owns a particular resource (such as a VM). Clusters can now start even if no DC is reachable, which is handy for branch office scenarios where the DC might be a VM on a node in the cluster; Hyper-V clusters also support Read Only Domain Controllers (RODC).

In Windows Server 2012 the default cluster configuration is Dynamic quorum management, which automatically adjusts the number of votes required for quorum as nodes go offline, providing greater resiliency. In a situation where a node has failed (unplanned downtime) and VMs that were hosted on that node are automatically restarted on other nodes in the cluster, it's now possible to assign four different levels of Failover Priority to each VM: High, Medium and Low, along with Do Not Failover. This makes it possible to cater for a guest cluster made up of a database VM and a Web front-end VM where the former needs to be running before the latter can start up properly.
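To make the priority levels concrete, here's a small sketch of how restart ordering could work after a node failure: highest priority first, with "Do Not Failover" VMs left alone. This models the idea only and is not the cluster service's actual algorithm; the VM names and the function are hypothetical.

```python
# Lower number = restarted earlier. "Do Not Failover" VMs are never restarted.
PRIORITY_ORDER = {"High": 0, "Medium": 1, "Low": 2}
DO_NOT_FAILOVER = "Do Not Failover"


def restart_order(vms):
    """vms is a list of (name, priority) pairs; returns names in restart order."""
    eligible = []
    for name, priority in vms:
        if priority == DO_NOT_FAILOVER:
            continue
        if priority not in PRIORITY_ORDER:
            raise ValueError(f"unknown priority: {priority}")
        eligible.append((name, priority))
    # sorted() is stable, so VMs with equal priority keep their input order.
    return [name for name, priority in sorted(eligible, key=lambda vm: PRIORITY_ORDER[vm[1]])]


failed_node_vms = [("web", "Medium"), ("db", "High"), ("test", DO_NOT_FAILOVER), ("batch", "Low")]
print(restart_order(failed_node_vms))  # ['db', 'web', 'batch']
```

Here the database VM gets High and the Web front end gets Medium, which matches the scenario above where the former needs to be up first.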

Draining a node by Live Migrating running VMs is now a right-click option in the UI.

The new Affinity and Anti-Affinity rules are especially useful in scenarios where VMs use guest clustering to provide HA for applications. They let you define that two VMs should always be located on the same host (for high-speed access between an application and a database, for instance) or that two VMs should never be hosted on the same host (two members of the same Exchange DAG, for example).
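The logic behind the two rule types can be sketched as a simple placement check. This is only an illustration of what the rules mean, not how the cluster stores or enforces them; the VM names, host names and function are hypothetical.

```python
def placement_violations(placement, affinity, anti_affinity):
    """placement maps VM name -> host name; the rules are lists of (vm_a, vm_b) pairs."""
    violations = []
    for a, b in affinity:
        if placement[a] != placement[b]:
            violations.append(f"{a} and {b} should share a host")
    for a, b in anti_affinity:
        if placement[a] == placement[b]:
            violations.append(f"{a} and {b} must not share a host")
    return violations


placement = {"app": "node1", "sql": "node2", "dag1": "node3", "dag2": "node3"}
print(placement_violations(
    placement,
    affinity=[("app", "sql")],         # application next to its database
    anti_affinity=[("dag1", "dag2")],  # two DAG members kept apart
))
```

In this example both rules are broken, so the check reports two violations.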

VM Monitoring

At the end of the day, your business doesn't care about the state of your underlying hardware, nor about your hypervisor or the OS inside a VM; it's the applications and their health that are important. System Center 2012 Operations Manager keeps tabs on the health of your hardware, hosts and VMs through Management Packs, and guest clustering can provide HA for the applications that support it. Applications that can't be clustered and that don't have an OM Management Pack, however, can take advantage of the new VM Monitoring feature.

VMs have to run either Windows Server 2008 R2 or 2012 with the 2012 Integration Components and the Failover Cluster feature installed. A COM component runs in the VM and communicates via the heartbeat service with the Virtual Machine Management Service in the parent. The inbox feature can check the state of designated services and watch for particular event IDs once per minute. If the state is critical, the VM is gracefully shut down and restarted; the second time it happens, the VM is Live Migrated to another host.
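The escalation described above boils down to a two-step policy, which can be sketched like this. The function and the action strings are hypothetical, and treating a third or later critical state the same as the second is an assumption of this sketch, since the text only covers the first two.

```python
def recovery_action(critical_count: int) -> str:
    """Map how many times a monitored VM has gone critical to the recovery step."""
    if critical_count <= 0:
        return "none"
    if critical_count == 1:
        return "shut down and restart VM"
    # Assumption: anything past the first occurrence escalates to a move.
    return "live migrate VM to another host"


for count in range(3):
    print(count, recovery_action(count))
```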

Because VM-to-parent-partition communication is a potential source of security and privacy issues, only a single bit is changed to communicate that an application is in a critical state. VM Monitoring is also extensible by third parties. Symantec has already demonstrated Symantec Application HA for Hyper-V, which adds more proactive management and can restart applications and even restore VMs from a backup image, in addition to the troubleshooting steps offered natively.

Next time, we'll cover security and replication improvements.
