As you may know by now, I am a big-time VirtualBox fan. Version 3 has been released, which is a large update for the platform. The updated functionality includes:

- Up to 32 vCPUs per guest (Rockstar).

- Experimental graphics support for Direct3D 8 and 9.

- Support for OpenGL 2.

- Toolbar for use in seamless and full-screen modes.

These features round out an already robust product that, in most categories, is on par with any Type 2 hypervisor. If you haven't checked out VirtualBox, it may be time to do so. The footprint is small (63 MB), the price is right (free) and it's quick.

One of the new features is the toolbar for use in full-screen or seamless modes. I use seamless mode frequently. Seamless mode basically puts the guest on top of your current host operating system for an easy transition between systems. With the new toolbar, a floating control panel allows you to switch back and forth easily (prior versions required a keystroke) between the host and the guest. The toolbar also allows virtual media to be assigned without leaving the full-screen or seamless session. Figure 1 below shows the toolbar appearing on a VM in seamless mode:

Figure 1. The new VirtualBox toolbar allows easy access to VM configuration while in full-screen or seamless mode.

VirtualBox 3 is a free download from the Sun Web site.

Posted on 07/06/2009 at 12:47 PM | 2 comments

As an administrator, one of the best things I can do is always compare what I am doing to what someone else is doing. This allows me to broaden my perspective and have answers for virtually every scenario that can arise in administering a virtual infrastructure. In the course of reading a quality blog post by Scott Lowe, I found myself with a blog post of my own for the same topic.

In Scott's piece, he focuses on NFS for use with VMware environments. In fact, I candidly sought out Scott earlier in the year to see how many implementations actually use NFS. I'm a big fan of vStorage VMFS, so before I swore off NFS, I wanted to quantify where it stood in the market. Scott's comments echoed those of readers here at the Everyday Virtualization blog: NFS is quite prevalent in the market for VMware implementations.

So, with Scott's material and reader interest, I thought it a great time to share my thoughts on the matter. My central point is that above all, there needs to be a clear delineation of what the storage system will serve. I see this breaking down into these main questions:

- Will the storage system be provided only for a virtualization environment?

- Does the collective IT organization have other systems that require SAN space?

- Will tiers of storage be utilized?

- Who will manage the storage -- virtualization administrators or storage administrators?

If these questions can be answered, a general direction can be charted out to determine what storage protocol makes the most sense for an implementation. In my experience, virtual environments are a big fish in a pool of big storage -- but definitely not the only game in town.

If the storage is not directly administered by the virtualization administrators, the opportunity to decide the storage protocol can be limited.

So many factors are in play, including cost, supported platforms and equipment, what's already in place, performance and administration requirements, that it is impossible to make a blanket recommendation. I'd nudge you in the direction of iSCSI or Fibre Channel so you can take advantage of the vStorage VMFS file system. To its credit, NFS will likely have the lowest-cost options, as well as the potential for the broadest range of storage devices.

What factors go into determining your storage environment? My experience is that heterogeneous systems that require SAN space can be aggregated onto one SAN, and that generally ends up being a Fibre Channel environment. Share your thoughts on the factors that decide the storage protocol for a virtual implementation.

Posted by Rick Vanover on 07/06/2009 at 12:47 PM | 6 comments

One of the strongest enabling elements of virtualization is that there are plenty of offerings in the free space. This frequently allows organizations to build their business case and get comfortable with virtualization technologies. Recently at TechMentor, one of my sessions focused on when the free offerings are not enough. This was targeted toward small organizations that are at the decision point of introducing revenue solutions. Somewhat surprising is that VMware's free offering in the Type 1 hypervisor space and accompanying management is comparatively dull. Since the unmanaged ESXi was introduced, the only new enhancement available to ESXi on version 4 is thin provisioning of the virtual hard disks.

This is further complicated by VMware's request that Veeam Software remove functionality for free ESXi installations from Veeam Backup. In addition, the remote command line interface (RCLI) is read-only for an unmanaged ESXi installation.

I had hoped that vSphere would give some hope for an enhanced offering for the free hypervisor, but this was not the case. The hypervisor market is a very fluid space right now, so anything can happen. The free ESXi still has use cases in many environments; it is just a tougher sell when planning a solution that has a lesser offering than the competition. Let me know how the free offering from VMware fits or clashes with your use of virtualization.

Posted by Rick Vanover on 07/06/2009 at 12:47 PM | 9 comments

Last week, I was at TechMentor in Orlando and a very interesting point was brought up in the virtualization track. For thin provisioning, who do you want doing it? Should the storage system or the virtualization platform administer this disk-space-saving feature? The recommendation was to use the storage system to provide thin provisioning on the LUN if supported. As a fan of virtualization in general, and with vSphere's thin provisioning of VMDK files being one of my most anticipated features, I stopped and thought a bit about this recommendation. Then it started to sound similar to the debate over software RAID versus hardware RAID: We clearly want hardware controllers administering RAID arrays.

While it is not quantified, there is overhead to having a thin-provisioned disk with vSphere. The storage array may have overhead for a thin-provisioned LUN as well. I mentioned earlier how thin-provisioning monitoring is important with vSphere, but should we just go the hardware route? Of course this is assuming that the storage system supports thin-provisioning for ESX and ESXi installations.

What is your preference on this practice? I am inclined to determine that a hardware-administered (storage system) solution is going to be cleaner. Share your thoughts below or shoot me a message with your opinion.

Posted by Rick Vanover on 06/30/2009 at 12:47 PM | 3 comments

For VMware VI3 and vSphere environments, there is no clear guidance on how storage should be sized. The one firm piece of information is the configuration maximums document. For both platforms, the guidance is virtually the same for VMFS-3 volumes: a single logical unit number (LUN) cannot exceed 2 TB. With extents, a volume can span up to 32 LUNs for a total of 64 TB. This became relevant to me while reading a post by Duncan Epping at Yellow-Bricks.com. He's right -- it just depends.

For most environments, sizing scenarios that flirt with the maximums are rare. There are plenty of planning points in determining the right size, however. One is the allocation unit size chosen when the VMFS-3 volume is formatted, which determines how big a single file on the volume can be, explained here. With this information, if you know you will have a VM with a 600 GB VMDK virtual disk, then an allocation unit of 4 MB or higher is required.
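The 600 GB example can be checked with a few lines of Python. This is only a sketch: the table is the commonly cited VMFS-3 mapping of allocation unit to maximum file size (1 MB to 256 GB, 2 MB to 512 GB, 4 MB to 1 TB, 8 MB to 2 TB), rounded to whole gigabytes, and the function name is made up for illustration. Check the numbers against the configuration maximums document for your version before relying on them.

```python
# Maximum single-file size (GB) per VMFS-3 allocation unit (MB).
# ASSUMPTION: rounded, commonly cited limits; verify for your release.
MAX_FILE_GB_BY_BLOCK_MB = {1: 256, 2: 512, 4: 1024, 8: 2048}


def min_block_size_mb(vmdk_size_gb):
    """Return the smallest allocation unit (MB) whose file limit fits the VMDK."""
    for block_mb in sorted(MAX_FILE_GB_BY_BLOCK_MB):
        if vmdk_size_gb <= MAX_FILE_GB_BY_BLOCK_MB[block_mb]:
            return block_mb
    raise ValueError("VMDK is larger than the biggest file VMFS-3 can hold")


# 600 GB is over the 512 GB limit of a 2 MB unit, so a 4 MB unit is needed.
print(min_block_size_mb(600))  # prints 4
```

Because the allocation unit is chosen at format time, it is worth running this kind of check against the largest virtual disk you expect, not the average one.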

Another factor that can influence how to size a LUN is the desired number of VMs per LUN. If you want a nice, even number like 10, 15 or 20 VMs per LUN, you can take Duncan's advice and determine an average size and multiply accordingly. This is where a curve ball comes into play. Many organizations are now deploying Windows Server 2008 as a VM, and the storage requirement is higher compared to a Windows Server 2003 system. This will skew the average as new VMs are created.

The hardest factor to use as a determination for sizing is I/O contention for VMs. This becomes a layer of abstraction that is incredibly difficult to manage in aggregate per LUN, per host and per I/O-intensive VM basis. VMware has plans for that and more with their roadmap technology PARDA. Duncan again is right on point with a recommendation that very large VMs use a raw device mapping (RDM); this may be applicable to I/O-intensive VMs as well.

Finally, I think it is very important to reserve the right to change the sizing strategy. For example, when a VI3 or vSphere environment is initially implemented, it would make sense for 400 or 500 GB LUNs to hold 10 average VMs. If the environment grows to over 1,000 VMs, do you want to manage 100 LUNs? It is pretty easy for the VMware administrator, but consider earning back the respect of your SAN administrator and consolidating to larger LUNs and returning the smaller LUNs back to the SAN. This can keep your management footprint reasonable with the SAN administrator.
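The trade-off between LUN count and LUN size is simple arithmetic, sketched below in Python. The 40 GB average VM size and the 20 percent headroom are assumptions chosen so that 10 VMs land in the 400 to 500 GB range mentioned above; the function name is hypothetical, and the 2 TB cap is the VMFS-3 single-LUN limit from the configuration maximums.

```python
import math

MAX_LUN_GB = 2048  # single-LUN limit for VMFS-3 (2 TB)


def lun_plan(total_vms, vms_per_lun, avg_vm_gb, headroom_pct=20):
    """Return (lun_count, lun_size_gb) for an average-based sizing plan."""
    lun_count = math.ceil(total_vms / vms_per_lun)
    # Integer percentage keeps the arithmetic exact for whole-GB inputs.
    lun_size_gb = math.ceil(vms_per_lun * avg_vm_gb * (100 + headroom_pct) / 100)
    if lun_size_gb > MAX_LUN_GB:
        raise ValueError("plan exceeds the 2 TB single-LUN limit")
    return lun_count, lun_size_gb


# ASSUMPTION: 40 GB average VM. Compare 10 VMs per LUN with 30 VMs per LUN.
print(lun_plan(1000, 10, 40))  # many small LUNs
print(lun_plan(1000, 30, 40))  # fewer, larger LUNs
```

Rerunning the plan as the average VM size drifts upward (for example, as Windows Server 2008 guests replace Windows Server 2003 guests) is one way to keep the sizing strategy honest over time.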

The answer still is that it depends, but these are my thoughts on the topic based on experience. Share your comments on LUN sizing below or shoot me a message with your strategy.

Posted by Rick Vanover on 06/29/2009 at 12:47 PM | 4 comments

In a recent discussion, the topic of Type 1 and Type 2 hypervisors came up. To some, this is an arbitrary distinction that doesn't matter much as there's already an inherent understanding of what the requirements are for a virtualization solution.

Simply put, the distinction between Type 1 and Type 2 has to do with whether an underlying operating system is present. Virtualization Review editor Keith Ward touched on part of this topic in a post about KVM virtualization in regards to Red Hat.

I'm convinced there's no formal standards-based definition of Type 1 and Type 2 criteria. However, I did like this very succinct piece of literature from IBM. While it doesn't have an exhaustive list of hypervisors and their types, it does give good definitions. The material describes a Type 1 hypervisor as running directly on the hardware with VM resources provided by the hypervisor. The IBM Systems Software Information Center material further states that a Type 2 hypervisor runs on a host operating system to provide virtualization services.

Some are obvious, such as VMware ESXi and Citrix XenServer being Type 1 hypervisors. My beloved Sun VirtualBox, VMware Server and Microsoft Virtual PC are all Type 2 hypervisors.

With that said, it's unclear where Hyper-V fits into the mix. Information like this Microsoft virtualization team blog post pulls Hyper-V closer to the Windows Server 2008 base product.

The relevance of Type 1 and Type 2 distinction is academic, in my opinion, but something I wanted to share here. Your thoughts? Drop me a note or share a comment below.

Posted by Rick Vanover on 06/24/2009 at 12:47 PM | 8 comments

One of the more highly anticipated features of vSphere is the ability to thin-provision VMs for their storage. I first made mention of this during the end of the private beta program, and expressed concern about the practice side of thin-provisioning. Basically, we can get in trouble with thin-provisioning storage, as so many factors are involved with the behavior on disk. Not to pick on Windows, but when a service pack or new version of Internet Explorer is installed to a VM the storage footprint can go up a good bit. Multiply that by hundreds of VMs, and the storage footprint can be significant.

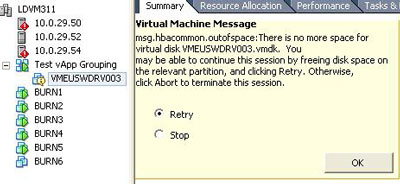

For vSphere environments, this is of grave importance: If the volume fills up, the VM will pause. If you follow my Twitter feed, you will have seen many references I have made to my current work with vSphere, including deliberate efforts to cause errors so I can practice the resolution. Today, I had a thin provisioned LUN run out of space and the VM paused. Fig. 1 shows the message.

Figure 1. What happens when a thin-provisioned disk runs full.

The obvious answer is to watch your storage very closely. The default alarm settings with vSphere allow administrators to provide monitoring and alerting based on usage of the datastores.

More than anything, this is a heads-up to everyone to configure the alarm to notify very aggressively on datastore usage. There are also plenty of scripting options available, including using Enhanced Storage VMotion to convert VMs from fully provisioned to thin-provisioned disks. Share your comments on the risks of thin provisioning below.
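To illustrate what "aggressive" alerting on datastore usage might look like, here is a minimal Python sketch of the threshold logic only. It does not call any vSphere API; the datastore names, sizes and the 70/85 percent thresholds are all made-up values, and in practice the capacity and free-space figures would come from whatever reporting tool you already use.

```python
def datastore_alerts(datastores, warn_pct=70, crit_pct=85):
    """Return (name, used_pct, level) for each datastore over a threshold.

    datastores is a list of (name, capacity_gb, free_gb) tuples.
    ASSUMPTION: thresholds are illustrative, not vSphere defaults.
    """
    alerts = []
    for name, capacity_gb, free_gb in datastores:
        used_pct = round(100 * (capacity_gb - free_gb) / capacity_gb, 1)
        if used_pct >= crit_pct:
            alerts.append((name, used_pct, "critical"))
        elif used_pct >= warn_pct:
            alerts.append((name, used_pct, "warning"))
    return alerts


# Hypothetical sample data: (name, capacity in GB, free space in GB).
sample = [("lun01", 500, 200), ("lun02", 500, 100), ("lun03", 500, 25)]
for alert in datastore_alerts(sample):
    print(alert)
```

With thin-provisioned disks, the warning threshold needs to leave enough room for growth events such as a service pack rollout, so erring on the low side is the safer choice.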

Posted by Rick Vanover on 06/22/2009 at 12:47 PM | 4 comments

Earlier this month when I checked in with VirtualBox, I was intentionally priming for the upcoming version 3 release. Currently in beta, version 3 offers what I believe is the highest virtual CPU count for a VM among mainstream hypervisors using common Intel and AMD processors: 32-way symmetric multiprocessing (SMP).

Version 3 is due out soon, probably this month, and I am very excited to give it a try. I have been a longtime VirtualBox user for select virtualization platforms and will keep you updated on the new version when it is released. The beta user guide, available at the VirtualBox Web site, has a full list of the new features.

Posted by Rick Vanover on 06/19/2009 at 12:47 PM | 13 comments

This week, I had a chance to talk with Cisco about the new Unified Computing System (UCS) architecture. For me, this was an opportunity to get a deep dive into the architecture and understand what may not be obvious initially.

First of all, the UCS is most beneficial to the largest environments. There can be scenarios where mid-size environments make sense, but there are many factors. Generally speaking, the UCS framework makes financial sense in the 2- to 3-blade chassis range. This is based on port costs for the blade chassis of the competition plus actual switching and interface costs on the blades. This is where it gets interesting. The costs for traditional blades can get high for the 10 Gb Ethernet switches and Fibre Channel switches needed to accommodate the I/O for blade chassis. In the case of UCS, this I/O is consolidated to a component called the UCS 6100 Fabric Interconnect (you can see it here).

The component is the hub for all communication in the UCS realm. Here, blades are connected to the Interconnect with a Fabric Extender that resides on the blades. While the Interconnect is relatively expensive, the Fabric Extenders are around $2,000 each (list price). The Fabric Interconnect is available with 20 or 40 ports that can accommodate 10 or 20 UCS blade chassis, respectively. The Fabric Extender is the incremental cost in this configuration. Compared to traditional blade architecture, this is the main benefit and is cost recovered in the 2- to 3-blade chassis range.

The UCS is a high-featured framework, but not without limitations. One thing we cannot do is connect a general-purpose server (non-UCS server) directly to the Fabric Interconnect. The Interconnect is not a single switch (even though it might look like one in the image), but a conduit of all network and storage I/O. So, if I wanted to put a 1U server (not like any of us have one-offs!) in the same rack as a UCS blade, it would need its own cabling.

The UCS platform is also a framework, meaning that other vendors could make blade servers to go into the Cisco chassis and take advantage of the service profile management stack. Partners have expressed interest, though none are currently doing this. The service profile provisioning process manages everything from MAC addresses for Ethernet interfaces and worldwide names for storage adapters to the boot order of the servers. This management stack and provisioning tool is included with the platform at no additional cost.

For many virtualization administrators, we have not yet transitioned to blades for one reason or another. The UCS platform will soon offer a rack-mount server with the forthcoming C-Series.

Where does this fit into your virtual plan? Hardware platform selection is one of the more passionate topics that most virtualization administrators hold. Share your thoughts below on the UCS framework and how you view it.

Posted by Rick Vanover on 06/17/2009 at 12:47 PM | 1 comment

Last month when vSphere was released to general availability, we were collectively relieved that it was finally made available. What seemed like a very long launch going back to previews, announcements, beta programs and partner activity had finally come to fruition. Now that it is here, people like me are considering what the next steps are and how to upgrade to vSphere.

We all have surely seen the comparison chart by now that shows which editions of vSphere provide which features. This starts with the free version of ESXi and goes all the way up to the Enterprise Plus edition that includes the Cisco Nexus 1000V vNetwork distributed switch.

The good news is that for your current processors under subscription, you can go to vSphere at no cost under most circumstances. For example, if you have processors covered by current Support and Subscription Services (SnS) from a prior sale at the Enterprise level for VI3, you are entitled to Enterprise in vSphere. From the comparison chart, you will see that vSphere Enterprise will not include third-party multipathing, host profiles or the vNetwork distributed switch. Enterprise is also limited to six cores per processor, which is good for today's technology only. The Advanced and Enterprise Plus offerings of vSphere accommodate twelve cores per processor. Further, vSphere Enterprise will only be for sale until Dec. 15, 2009.

So, what does all of this mean? Well, if you purchased VI3 Enterprise, you will have to make a decision for future purchases with vSphere. If you want to maintain Distributed Resource Scheduler (DRS), VMotion and Storage VMotion, new purchases made after Dec. 15, 2009 must be Enterprise Plus. The vSphere Advanced offering has the higher processor core limit but does not provide DRS or Storage VMotion.

I think customers are being pushed upward with this situation, especially since the next tier up is required in order to maintain DRS and Storage VMotion functionality. Chances are, the largest organizations would want the Nexus 1000V anyway -- but it would have been nice to have an additional option or additional time to make budgetary funds ready.

VMware has made accommodations for virtually every scenario through the vSphere Upgrade Center Web site. Most notable is that Enterprise SnS customers are entitled to upgrade these processors to Enterprise Plus for only $295 per processor -- again by Dec. 15, 2009.

This is the big picture of the pricing and licensing side of moving to vSphere. Has this cramped your budget or not fit into your cycles? That is a frequent comment I have heard from other administrators and decision makers. Let me know if it has for your environment or share a comment below.

Posted by Rick Vanover on 06/15/2009 at 12:47 PM | 3 comments

In case you didn't notice, I am a big fan of VMware vStorage VMFS. I recently pointed out some notes about slight version differences with vSphere's incremental update to the clustered file system for VMs. VMFS has other features of interest to me, like the coordinator-less architecture for file locking and smart blocking.

For many other clustered file system solutions, file lock management can be IP-based. VMFS, by its architecture, has all file locks performed on the disk with a distributed lock manager. At first, that may sound like a bad idea, and it would be on a disk volume holding a large number of small files. VMFS volumes, however, contain a small number of very large files (virtual disks). This makes the on-disk overhead of managing the locks negligible from the storage side, but very flexible from the management side. Further, when the lock management is being done on-disk, this takes away the possibility of split-brain scenarios where locking coordination can be interrupted or contended.

Comparatively, this is one of the main reasons I prefer VMFS storage to a Microsoft virtualization solution that is reliant on Microsoft Clustering Services (MSCS). Hyper-V uses MSCS to create a clustered service for each logical unit number (LUN) assigned to the host, and the current practice is to assign one VM to each LUN. Windows Server 2008 R2 introduces Cluster Shared Volumes, yet still relies on MSCS. MSCS isn't available for the free Hyper-V offering, as MSCS requires Windows Server 2008 Enterprise or higher.

Because VMFS is coordinator-less, it has no requirement for MSCS or vCenter Server to manage the access. For this reason, administrators can move VMs between managed and unmanaged (ESXi free) environments with ease.

Besides the file system being smart on locking, it is also smart on block sizes. When a VMFS volume is formatted, an allocation unit size of 1 MB, 2 MB, 4 MB or 8 MB is selected. This permits virtual disk files (VMDKs) to be up to 256 GB, 512 GB, 1 TB or 2 TB, respectively. VMFS sneaks back into cool by provisioning a sub-block on the file system for the smaller data elements of a VMFS volume. On a traditional file system with an 8 MB allocation unit, a 2 KB file (like a .VMX file) would consume a full 8 MB. VMFS uses smaller sub-blocks to minimize internal fragmentation.
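The savings from sub-blocks are easy to put in numbers. The Python sketch below compares the space a 2 KB file consumes in a full 8 MB allocation unit against a sub-block. The 64 KB sub-block size is an assumption for illustration (it is not stated above), and the helper name is made up.

```python
import math


def allocated_kb(file_kb, unit_kb):
    """Space consumed when a file is stored in whole allocation units."""
    return math.ceil(file_kb / unit_kb) * unit_kb


BLOCK_KB = 8 * 1024   # 8 MB allocation unit, from the example in the text
SUB_BLOCK_KB = 64     # ASSUMPTION: sub-block size chosen for illustration
VMX_KB = 2            # small .VMX file, from the example in the text

full_block = allocated_kb(VMX_KB, BLOCK_KB)
sub_block = allocated_kb(VMX_KB, SUB_BLOCK_KB)
print(f"full block: {full_block} KB allocated, {full_block - VMX_KB} KB wasted")
print(f"sub-block:  {sub_block} KB allocated, {sub_block - VMX_KB} KB wasted")
```

The wasted space per small file drops by roughly the ratio of the two unit sizes, which matters because every VM brings several small configuration and log files along with its large virtual disks.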

Is this too much information? Probably, but I can't get enough of it. Send me your comments on VMFS or leave a note below.

Posted by Rick Vanover on 06/10/2009 at 12:47 PM | 7 comments

Although Oracle is heavily focused on the business side of the recently announced acquisition of Sun Microsystems, the VirtualBox platform continues to receive updates. There are a lot of questions as to what will happen after Sun's acquisition is complete, such as what will the virtualization strategy be? Or who will be the steward of Java? One thing is for sure: Until the business end of things is complete, we really don't know anything.

I pipe up with content on VirtualBox from time to time because that is the product I use most on my notebook for virtualization needs, and I follow the product line closely. Even though the acquisition is underway, there have been three updates to VirtualBox recently. Here are some of the new features of version 2.2.4:

- Fixes from the major 2.2.0 release

- Bi-directional OVF support

- Guest VM memory limit now at 16 GB

- Mac OS X host support

The OVF support is a hidden jewel with the current version of the product. I'm a big fan of cross-virtual platform options, and with the OVF support we have options to work with other platforms and VirtualBox. Mainstream conversion tools such as PlateSpin Migrate and VMware Converter do not have VirtualBox support. Now with the OVF functionality, VMs can be imported or exported to OVF specifications. The export process provides eight fields for defining the OVF virtual machine. Simply having OVF is not a free pass between platforms; some work would need to occur to inject drivers.

VirtualBox can be a host on Windows, Intel Mac OS X, Linux and Solaris operating systems and is still free. More information on VirtualBox can be found on the Sun Web site.

Posted by Rick Vanover on 06/08/2009 at 12:47 PM | 6 comments