Splunk Inc. today announced several updates to its cloud service for analyzing machine-generated Big Data.

Splunk Cloud now features what the company calls the industry's first 100 percent uptime service-level agreement (SLA), lower prices and a free online sandbox in which developers and users can play around with data files.

The SLA is possible through built-in high availability and redundancy in the Software as a Service (SaaS) offering's single-tenant cloud architecture. Splunk Cloud also avoids system-wide outages by delivering dedicated cloud environments to individual customers, the company said, and uses Splunk software to monitor the service. "This is why we're the only machine-data analytics service to offer a 100 percent uptime SLA," the company said. The service can scale from service plans starting at 5GB per day to plans allowing 5TB per day.

The cloud service provides the functionality found in the company's on-premises Splunk Enterprise platform for operational intelligence. Users can search, analyze and visualize machine-generated data from any source, such as Web sites, applications, databases, servers, networks, sensors, mobile devices, virtual machines, telecommunications equipment and so on. The data can be analyzed to provide business insights, monitor critical applications and infrastructure, respond to and avoid cyber-security threats, and learn about customer behavior and buying habits, among other scenarios.

The service also provides access to more than 500 of the company's apps -- such as for enterprise security, VMware and Amazon Web Services (AWS) cloud -- to use out-of-the-box, customizable alerts, reports and dashboards.

Using the Splunk Cloud Sandbox (source: Splunk Inc.)

From the home screen, users can navigate to search and interact with data; correlate different data streams and analyze trends; identify and alert on patterns, outliers and exceptions; and rapidly visualize and share insights through the reports and dashboards.

Customers also get access to the Splunk developer platform and its RESTful APIs and SDKs.

Security is enhanced, the company said, by the individual environments that don't mix data from other customers. Also, Splunk Cloud instances operate in a Virtual Private Cloud (VPC), so all data moving around in the cloud is isolated from other traffic.

Also new in the updated service is access to an online sandbox in which users can "mess around" with analytics and visualizations on their own data files or pre-populated example data sets supplied by Splunk. Using the sandbox requires a short account registration and e-mail verification. Within a few minutes, you can start the sandbox and use a wizard to upload your data files, although this must be one at a time.

After a data file is uploaded, you're presented with a search interface that provides tabs to show events, display statistics or build visualizations. For example, it took only a minute or so to upload an IIS log file and see "events" that were culled from the data, primarily time stamps in my useless test case.
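To make the idea of "events" culled from a log a little more concrete, here is a minimal sketch of pulling time stamps out of an IIS log in the W3C extended format. It is not how Splunk performs its extraction; the function name `parse_iis_events` and the sample log lines are made up for illustration.

```python
from datetime import datetime

def parse_iis_events(lines):
    """Yield (timestamp, record) for each entry in a W3C extended log."""
    field_names = []
    for line in lines:
        line = line.strip()
        if not line:
            continue
        if line.startswith("#Fields:"):
            # The directive names the columns used by the entries that follow.
            field_names = line[len("#Fields:"):].split()
            continue
        if line.startswith("#"):
            continue  # other directives such as #Software or #Version
        values = line.split()
        if len(values) != len(field_names):
            continue  # skip malformed entries
        record = dict(zip(field_names, values))
        if "date" not in record or "time" not in record:
            continue
        stamp = datetime.strptime(
            record["date"] + " " + record["time"], "%Y-%m-%d %H:%M:%S"
        )
        yield stamp, record

# Hypothetical sample data, not taken from a real server.
SAMPLE_LOG = [
    "#Software: Microsoft Internet Information Services 7.5",
    "#Version: 1.0",
    "#Fields: date time cs-method cs-uri-stem sc-status",
    "2014-08-05 11:31:02 GET /index.html 200",
    "2014-08-05 11:31:07 GET /missing.html 404",
]

events = list(parse_iis_events(SAMPLE_LOG))
for stamp, record in events:
    print(stamp.isoformat(), record["cs-uri-stem"], record["sc-status"])
```

Each log entry becomes one event keyed by its time stamp, which is roughly what the sandbox's events tab showed for the test file.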

Along with the search tab, the sandbox provides tabs for reports, alerts, dashboards and for Pivot analysis.

The sandbox account lasts for 15 days, but up to three sandboxes can be set up over the lifetime of a single account.

Splunk Cloud itself has a 33 percent discounted price resulting from "operational efficiency."

The service is now available in the United States and Canada, with a standard plan starting at $675 per month for up to 5GB per day. Scaling to the 5TB per day limit switches to an annual billing plan.
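As a back-of-the-envelope check on those numbers, the sketch below works out what the entry plan costs per gigabyte. It assumes a 30-day month and a customer who uses the full 5GB every day. It also assumes, which the announcement does not state, that $675 is the price after the 33 percent discount, so the implied earlier price is only an estimate.

```python
MONTHLY_PRICE_USD = 675.0   # standard plan price from the announcement
DAILY_LIMIT_GB = 5.0        # plan allowance per day
DAYS_PER_MONTH = 30         # assumption: a 30-day month
DISCOUNT = 0.33             # assumption: $675 already reflects this discount

def cost_per_gb(monthly_price, daily_gb, days=DAYS_PER_MONTH):
    """Price per gigabyte if the daily allowance is fully used."""
    return monthly_price / (daily_gb * days)

def price_before_discount(discounted_price, discount):
    """Implied list price before a fractional discount was applied."""
    return discounted_price / (1.0 - discount)

per_gb = cost_per_gb(MONTHLY_PRICE_USD, DAILY_LIMIT_GB)
implied_old_price = price_before_discount(MONTHLY_PRICE_USD, DISCOUNT)

print(f"Cost per GB at full use: ${per_gb:.2f}")
print(f"Implied price before discount: ${implied_old_price:.2f}")
```

Under those assumptions the plan works out to $4.50 per gigabyte, and the pre-discount price would have been a little over $1,000 per month.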

"We’ve been seeing organizations start to move their mission-critical applications to the cloud, but many still see availability and uptime as a significant barrier," the company quoted 451 Research analyst Dennis Callaghan as saying. "By guaranteeing 100 percent uptime in its SLA, Splunk Cloud should help ease some of the performance monitoring and visibility concerns associated with applications and infrastructure running in the cloud."

Posted by David Ramel on 08/05/2014 at 11:31 AM

Scientists from three companies have combined to tackle the problem of cloud-to-cloud networking, emerging with technology that speeds up connection time from days to seconds.

The research that resulted in "breakthrough elastic cloud-to-cloud networking" technology was sponsored by the U.S. government's DARPA CORONET program, which studies rapid reconfiguration of terabit networks.

The cloud computing revolution has greatly changed the way organizations access applications, resources and data with a new dynamic provisioning model that increases automation and lowers operational costs. However, experts said in an announcement, "The traditional cloud-to-cloud network is static, and creating it is labor-intensive, expensive and time-consuming."

That was the problem addressed by scientists from AT&T, IBM and Applied Communication Sciences (ACS). Yesterday they announced proof-of-concept technology described as "a major step forward that could one day lead to sub-second provisioning time with IP and next-generation optical networking equipment, [enabling] elastic bandwidth between clouds at high-connection request rates using intelligent cloud datacenter orchestrators, instead of requiring static provisioning for peak demand."

The prototype -- which uses advanced software-defined networking (SDN) concepts, combined with cost-efficient network routing in a carrier network scenario -- was implemented on the open source OpenStack cloud computing platform that accommodates public and private clouds. It elastically provisioned WAN connectivity and placed VMs between two clouds in order to load balance virtual network functions.

According to an IBM Research exec, the prototype provides technology that the partnership now wants to provide commercially in the form of a cloud system that monitors a network and automatically scales up and down as needed by applications. A cloud datacenter will send a signal to a network controller describing bandwidth needs, said Douglas M. Freimuth, and IBM's orchestration expertise comes into play by knowing when and how much bandwidth to request among which clouds.

Today, Freimuth noted, a truck has to be sent out to set up new network components while administrators handle WAN connectivity, which requires physical equipment to be installed and configured. By using cloud intelligence to requisition bandwidth from pools of network connectivity when it's needed by an application, this physically intensive process could be done virtually. Setting up cloud-to-cloud networks could be done in seconds rather than days, he said.
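The idea of requisitioning bandwidth from a shared pool can be illustrated with a toy model. This is only a sketch of the concept, not the AT&T, IBM and ACS prototype; the `BandwidthPool` class, the cloud names and the capacity figures are all hypothetical.

```python
class BandwidthPool:
    """Toy model of a shared pool of WAN capacity between clouds."""

    def __init__(self, capacity_gbps):
        self.capacity_gbps = capacity_gbps
        self.allocations = {}  # (source, destination) -> allocated Gbps

    @property
    def available_gbps(self):
        return self.capacity_gbps - sum(self.allocations.values())

    def request(self, source, destination, gbps):
        """Grant extra bandwidth for a link if the pool has room."""
        if gbps <= 0 or gbps > self.available_gbps:
            return False
        link = (source, destination)
        self.allocations[link] = self.allocations.get(link, 0) + gbps
        return True

    def release(self, source, destination):
        """Return a link's bandwidth to the pool; report how much was freed."""
        return self.allocations.pop((source, destination), 0)

pool = BandwidthPool(capacity_gbps=100)
granted = pool.request("cloud-a", "cloud-b", 40)  # fits in the pool
denied = pool.request("cloud-a", "cloud-c", 80)   # only 60 Gbps remain
pool.release("cloud-a", "cloud-b")                # demand drops, capacity returns
retry = pool.request("cloud-a", "cloud-c", 80)    # now it fits

print(granted, denied, retry, pool.available_gbps)
```

The point of the model is that capacity is granted and returned by software in response to demand, rather than being installed for peak load ahead of time.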

The innovation means organizations will spend less because they more effectively share network resources through virtualized hardware, and operating costs are reduced by cloud-controlled automated processes, among other benefits, Freimuth said.

"For you and me, as individuals, more dynamic cloud computing means new applications we never dreamed could be delivered over a network -- or applications we haven't even dreamed of yet," Freimuth concluded.

Posted by David Ramel on 07/30/2014 at 2:09 PM

GigaSpaces Technologies and RackWare each released new tools this week in 3.0 versions of their products that handle cloud orchestration and disaster recovery, respectively.

Cloudify 3.0 from GigaSpaces has been completely re-architected for "intelligent orchestration" of cloud applications, the company said. This intelligence provides a new feedback loop that automatically fixes problems and installs updates without human intervention. The company said this automatic reaction to problem events and implementation of appropriate corrective measures eliminate the boundary between monitoring and orchestration.

The new Cloudify version provides building blocks for custom workflows and a workflow engine, along with a modeling language to enable automation of any process or stack. A new version planned for release in the fourth quarter of this year will feature monitoring and custom policies that can be used to automatically trigger corrective measures, providing auto-healing and auto-scaling functionality.

GigaSpaces said the new release is more tightly integrated with the open source OpenStack platform, which it said is rapidly becoming the de facto private cloud standard. "The underlying design of Cloudify was re-architected to match the design principles of OpenStack services, including the rewriting of the core services in Python and leveraging common infrastructure building blocks such as RabbitMQ," the company said.

It also has plug-ins to support VMware vSphere and Apache CloudStack, while plug-ins for VMware vCloud and IBM SoftLayer are expected soon. Its open plug-in architecture can also support other clouds, and plug-ins are also expected soon for services such as Amazon Web Services (AWS), GCE Cloud and Linux containers such as the increasingly popular Docker.

Meanwhile, if things go south in the cloud and automatic corrective measures aren't enough, RackWare introduced RackWare Management Module (RMM) 3.0 for cloud-based disaster recovery for both physical and virtual workloads. The product release coincides with a new research report from Forrester Research Inc. that proclaims "Public Clouds Are Viable for Backup and Disaster Recovery."

The system works by cloning captured instances from a production server out to a local or remote disaster recovery site, synchronizing all ongoing changes. In the event of a failover, the company said, a synchronized recovery instance takes over workload processing. The company said this system provides significant improvement over traditional, time-consuming tape- or hard disk-based backup systems. After the production system outage is corrected and the server is restored, the recovery instance synchronizes everything that changed during the outage back to the production server to resume normal operations.

"By utilizing flexible cloud infrastructure, protecting workloads can be done in as little as one hour and testing can be as frequent as needed," the company said. "The solution brings physical, virtual, and cloud-based workloads to the level of protection that mission-critical systems protected by expensive and complex high-availability solutions enjoy."

In addition to the new ability to clone production servers and provide incremental synchronization for changes in an OS, applications or data, the company said RMM 3.0 can span different cloud infrastructures such as AWS, Rackspace, CenturyLink, VMware, IBM SoftLayer and OpenStack.

Posted by David Ramel on 07/30/2014 at 2:14 PM

Oracle Corp. yesterday unveiled the Oracle Data Cloud, a Data as a Service (DaaS) offering that's initially serving up marketing and social information to fuel "the next revolution in how applications can be more useful to people."

The new product capitalizes on Oracle's February acquisition of the BlueKai Audience Data Marketplace, which has been combined with other Oracle data products.

The BlueKai service -- claiming to be the world's largest third-party data marketplace -- draws on data from more than 200 data providers to provide information such as buyers' past purchases and intended purchases, customer demographics and lifestyle interests, among many others.

Initial offerings in the cloud include Oracle DaaS for Marketing and Oracle DaaS for Social.

The marketing product -- available via a new subscription model -- "gives marketers access to a vast and diverse array of anonymous user-level data across offline, online and mobile data sources," Oracle said. "Data is gathered from trusted and validated sources to support privacy and security compliance." It uses data from more than 1 billion global profiles to help organizations with large-scale prospecting and delivery of targeted advertising across online, mobile, search, social and video mechanisms.

The social product -- in limited availability, currently bundled with the Oracle Social Cloud -- "delivers categorization and enrichment of unstructured social and enterprise data, providing unprecedented intelligence on customers, competitors, and market trends." It uses data from the more than 700 million social messages produced each day on millions of social media and news data sites. The service allows for sophisticated text analysis to extract useful and contextual information from the unstructured data.

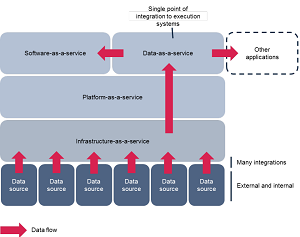

Oracle exec Thomas Kurian yesterday provided more details in a webcast in which he explained how DaaS fits into the overall Oracle strategy, a key tenet of which is combining data from internal and external sources for more useful applications.

"By combining a unified view of data from multiple applications, and enriching data from within a company with data from outside of the company's boundaries, and then bringing that data into a variety of different applications, we believe that applications can be much more useful to organizations, and that people will find that they can make decisions with these applications in a much more useful way," Kurian said.

DaaS in the as-a-service stack: Data abstracted from applications. (source: Ovum)

Kurian provided several example use cases:

- Marketers can not only use a marketing automation system, but also know whom to target with marketing campaigns.

- Salespeople can not only use a salesforce automation application, but also know which people to target as leads or prospects.

- Recruiters can not only use a recruiting application to hire people, but also know which people are the best candidates for a particular job.

- Supply chain people can know which vendors to work with because they have a better view of those vendors' profiles and delivery practices.

The news elicited positive reactions from some industry analysts, who emphasized the competitive advantage the Oracle Data Cloud might be able to provide.

"IDC sees [DaaS] as an emerging category that addresses the needs of businesses in real time to tap into a wide array of external data sources and optimize the results to drive unique insights and informed action," Oracle quoted IDC analyst Robert Mahowald as saying. "Oracle Data as a Service is addressing this need with a suite of data solutions that focus on scale, data portability, and security that help customers gain a competitive advantage through the use of data."

Ovum analyst Tom Pringle echoed that sentiment in a quote provided by Oracle: "With Oracle's comprehensive and distinctive framework of data ingestion, value extraction, rights management, and data activation, Oracle Data Cloud can help enterprises across multiple industries and channels generate competitive advantage through the use of data." Oracle also provided a detailed analysis of DaaS written by Pringle.

Oracle said it plans to extend the DaaS framework to other business lines -- sales, for example -- and to vertical data solutions.

Posted by David Ramel on 07/23/2014 at 10:25 AM

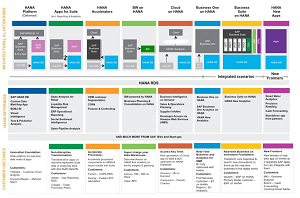

Surprising some industry pundits, enterprise software vendor SAP SE yesterday announced the backing of two cloud-based open source community initiatives, along with the release of cloud-based development tools for its HANA platform.

The German maker of traditional ERP and CRM solutions is sponsoring the OpenStack Infrastructure as a Service (IaaS) and Cloud Foundry Platform as a Service (PaaS) projects.

"Well, this is surprising," opined longtime open source observer Steven J. Vaughan-Nichols about the announcement. SAP's focus on the cloud shouldn't be too surprising, though, as Jeffrey Schwartz noted earlier this year that the company "took the plunge, making its key software offerings available in the cloud as subscription-based services." And, obviously, proprietary solutions have long been shifting to open source -- not too many are going it alone these days.

In fact, SAP is joining archrival Oracle Corp. in becoming an OpenStack sponsor. The Register seemed taken aback by that "perplexing" news when it reported "SAP gets into OpenStack bed with ... ORACLE?" Another rival, Salesforce.com Inc., was rumored by The Wall Street Journal to be joining OpenStack last year ("Salesforce.com to Join OpenStack Cloud Project"), but that didn't happen. SAP seems to be getting a jump on its CRM competitor in that regard.

Started in 2010 by an unlikely partnership between Rackspace Hosting and NASA, the OpenStack project provides a free and open source cloud OS to control datacenter resources such as compute, storage and networking.

What is HANA? (source: SAP SE)

"SAP will act as an active consumer in the OpenStack community and make contributions to the open source code base," SAP said. "In addition, SAP has significant expertise in managing enterprise clouds, and its contributions will focus on enhancing OpenStack for those scenarios."

Meanwhile, SAP had an existing relationship with Cloud Foundry, as last December it announced "the code contribution and availability of a Cloud Foundry service broker for SAP HANA" along with other open source contributions. SAP HANA is the company's own PaaS offering. "The service broker will allow any Cloud Foundry application to connect to and leverage the in-memory capabilities of SAP HANA," the company said yesterday.

Now the company is jumping fully onboard with the Cloud Foundry project, started in 2011 by VMware Inc. and EMC Corp. and now the open source basis of the PaaS offering from Pivotal Software Inc., a spin-off collaboration between the two companies.

SAP said it's "actively collaborating with the other founding members to create a foundation that enables the development of next-generation cloud applications."

To foster that development of next-generation cloud applications on top of the HANA platform, SAP also yesterday announced new cloud-based developer tools, including SAP HANA Answers, a portal providing developers with information and access to the company's cloud expertise. Developers using the company's HANA Studio IDE -- based on the popular Eclipse tool -- can access the portal directly through a plug-in.

"SAP HANA Answers is a single point of entry for developers to find documentation, implement or troubleshoot all things SAP HANA," the company said. The site features a sparse interface for searching for information. (Update: I originally reported the site contained no content, and SAP corrected me by pointing out that it just provides the search interface, and searching for "Hadoop," for example, brings up the related information. I apologize for the error.)

Another HANA Cloud Platform tool, the SAP River Rapid Development Environment, was announced as a beta release last month. "This development environment, provisioned in the Web, intends to bring simplification and productivity in how developers can collaboratively design, develop and deploy applications that deliver amazing user experiences," the company said at the time.

Yesterday, SAP exec Bjoern Goerke summed up the news. "The developer and open source community are key to breakthrough technology innovation," Goerke said. "Through the Cloud Foundry and OpenStack initiatives, as well as new developer tools, SAP deepens its commitment to the developer community and enables them to innovate and code in the cloud."

And raises a few eyebrows in the process.

Posted by David Ramel on 07/23/2014 at 6:48 AM

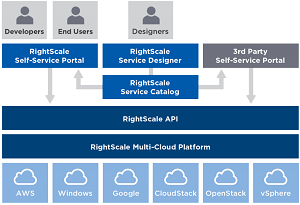

RightScale Inc. announced a new self-service portal that lets enterprises set up cloud services brokerages to provide developers and other users with instant access to cloud infrastructure.

Using RightScale Self-Service, IT departments can define a curated catalog of different resources that can be used for scenarios such as application development, testing, staging and production; demonstrations by sales and others; training; batch processing; and digital marketing, the company said.

RightScale Self-Service allows users to automatically provision instances, stacks or complex multi-tier applications from a catalog defined by IT.

Such catalogs can feature "commonly used components, stacks, and applications and an easy-to-use interface for developers and other cloud users to request, provision, and manage these services across public cloud, private cloud, and virtualized environments," said RightScale's Andre Theus in a blog post.

The company said the self-service portal can help companies bridge the divide between developers and central IT departments and control "shadow IT" issues. These problems occur because the ability to provide infrastructure and platform services has lagged behind development practices such as Agile, continuous delivery and continuous integration.

The RightScale Ecosystem (source: RightScale Inc.)

Developers and other users might have to wait for weeks or months for such resources to be provided, RightScale said. This creates a divide between IT and these staffers. When users get impatient and use public cloud services to instantly gain access to such requested infrastructure and platform resources themselves, a "shadow IT" problem can develop, with IT having no control or visibility into these outside services being used.

"By offering streamlined access to infrastructure with a self-service portal, IT can now meet the business needs of their organizations," the company said in a white paper (registration required to download). "At the same time, a centralized portal with a curated catalog enables IT to create a sanctioned, governed, and managed process for cloud use across both internal and external resource pools.

"Cloud architects and security and IT teams can implement technology and security standards; control access to clouds and resources; manage and control costs; and ensure complete visibility and audit trails across all their cloud deployments."

As quoted by the company, RedMonk analyst Stephen O'Grady agreed. "Developers love the frictionless availability of cloud, but enterprises crave visibility into their infrastructure, which is challenged by the widespread cloud adoption," O'Grady said. "RightScale Self-Service is intended to serve as a way to provide both parties what they need from the cloud."

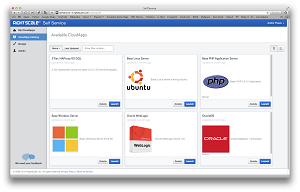

The RightScale Self-Service Catalog (source: RightScale Inc.)

What the parties need, RightScale said, are capabilities in four areas: standardization and abstraction; automation and orchestration; governance and policies; and cost management.

Under standardization and abstraction, users can design the catalog applications to meet company standards regarding security, versioning, software configuration and so on.

Under automation and orchestration, users can define workflows to provision multi-tier apps, take snapshots and roll back to them if needed, and integrate with IT service management systems or continuous integration services.

While the orchestration capabilities are enabled through a cloud-focused workflow language that lets users automate ordered steps and use RESTful APIs to integrate with other services and systems, RightScale also features a public API to facilitate its integration with other systems.

Under governance and policies, the portal provides policy-based management of infrastructure and resources. IT teams can segregate responsibilities by role. For example, different rules can be set for users who are allowed to define catalog items and for others who only have permission to access and launch them.

Under cost management, the portal can display hourly costs and total costs of services, and IT teams can set usage quotas to keep projects under budget.
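The cost management idea reduces to simple arithmetic: multiply each deployment's hourly rate by its running hours, add the results and compare the sum with a quota. The sketch below illustrates that calculation only. It does not use the RightScale API, and the deployment names, rates and quota are hypothetical.

```python
def deployment_cost(hourly_rate_usd, hours_running):
    """Total cost of one deployment so far."""
    return hourly_rate_usd * hours_running

def check_quota(deployments, quota_usd):
    """Return (total cost, True if the total is within the quota)."""
    total = sum(
        deployment_cost(rate, hours) for _name, rate, hours in deployments
    )
    return total, total <= quota_usd

# Hypothetical project: (name, hourly rate in USD, hours running)
DEPLOYMENTS = [
    ("web-tier", 0.50, 200),
    ("db-tier", 1.25, 200),
]

total_usd, under_budget = check_quota(DEPLOYMENTS, quota_usd=300.0)
print(f"Total so far: ${total_usd:.2f}; within quota: {under_budget}")
```

Here the two tiers cost $100 and $250, so the project is $50 over its $300 quota, which is the kind of condition a usage quota is meant to flag.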

RightScale Self-Service also integrates with the company's Cloud Analytics product, so companies can do "what if" analysis on various deployments, clouds and purchase options -- for example, to measure on-demand cost vs. pre-purchased cost. It also integrates with the company's Cloud Management product to administer applications.

In addition to providing on-demand access to cloud resources and offering the technology catalog, the self-service portal lets companies support major private and public clouds such as Amazon Web Services (AWS), Microsoft Azure, Google Cloud Platform, OpenStack and VMware vSphere environments.

Regarding the latter, Dan Twing, president and COO of analyst firm Enterprise Management Associates, said, "Treating VMware vSphere like a cloud and providing a governance framework for enterprise cloud usage is a simple, powerful concept that will have deep impact on how enterprises innovate using the full power of cloud computing."

Posted by David Ramel on 07/16/2014 at 8:32 AM

Eight months ago, Satya Nadella -- before he was named Microsoft CEO -- announced a cloud-based partnership with Cisco: "Microsoft and Cisco’s Application Centric Infrastructure, hello cloud!"

"Through this expanded partnership, we will bring together Microsoft’s Cloud OS and Cisco’s Application Centric Infrastructure (ACI) to deliver new integrated solutions that help customers take the next step on their cloud computing journey," Nadella wrote at the time.

Well, the companies took another step on their own journey yesterday with the networking giant's announcement that "Cisco Doubles Down on Datacenter with Microsoft."

But the language stayed pretty much the same.

"By providing our customers and partners with greater sales alignment and even deeper technology integration, we will help them transform their datacenters and accelerate the journey to the cloud," said Cisco exec Frank Palumbo.

The three-year agreement, announced at the Microsoft Worldwide Partner Conference in Washington, D.C., will see the companies investing in combined sales, marketing and engineering resources.

Cisco announced integrated products and services that will focus on the Microsoft private cloud, migration of servers, service providers and SQL Server 2014.

"Cisco and Microsoft sales teams will work together on cloud and datacenter opportunities, including an initial program focused on the migration of Windows 2003 customers to Windows 2012 R2 on the Cisco UCS platform," Cisco said.

A bevy of other offerings to be integrated includes Cisco Nexus switching, Cisco UCS Manager with System Center integration modules, Cisco PowerTool, Windows Server 2012 R2, System Center 2012 R2, Windows PowerShell and Microsoft Azure. Future releases will integrate Cisco ACI and Cisco InterCloud Fabric.

Further details were offered by Cisco's Jim McHugh. "At Cisco we believe our foundational technologies -- with UCS as the compute platform, Nexus as the switching platform, and with UCS Manager and System Center management integration -- provide customers an optimal infrastructure for their Microsoft Windows Server workloads of SQL, SharePoint, Exchange and Cloud."

Microsoft's Stephen Boyle also weighed in on the pact. "Enterprise customers worldwide are betting on Microsoft and Cisco to realize the benefits of our combined cloud and datacenter technologies," Boyle said. "Now, together, we're strengthening our joint efforts to help customers move faster, reduce costs and deliver powerful new applications and services for their businesses."

And now, eight months after that first ACI announcement, you can expect the companies' joint journey along with their customers to the cloud to progress even further.

"This greater alignment between Cisco and Microsoft will set the stage for deeper technology integration," said Cisco's Denny Trevett. "Together we will deliver an expanded portfolio of integrated solutions to help customers modernize their datacenters and accelerate their transition to the cloud."

So get ready for your trip, because you're going.

Posted by David Ramel on 07/16/2014 at 12:47 PM

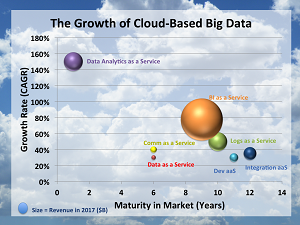

It's a perfect fit and it isn't a new trend, but Big Data's migration to the cloud seems to be accelerating recently.

The advantages of running your Big Data analytics in the cloud rather than on-premises -- especially for smaller companies with constrained resources -- are numerous and well-known. Oracle Corp. summed up some of the major business drivers in an article titled "Trends in Cloud Computing: Big Data's New Home":

- Cost reduction

- Reduced overhead

- Rapid provisioning/time to market

- Flexibility/scalability

"Cloud computing provides enterprises cost-effective, flexible access to Big Data's enormous magnitudes of information," Oracle stated. "Big Data on the cloud generates vast amounts of on-demand computing resources that comprehend best practice analytics. Both technologies will continue to evolve and congregate in the future."

In fact, they will evolve to the tune of a $69 billion private cloud storage market by 2018, predicted Technology Business Research. That's why the Big Data migration to the cloud is picking up pace recently -- everybody wants a piece of the multi-billion-dollar pie.

As Infochips predicted early last year: "Cloud will become a large part of Big Data deployment -- established by a new cloud ecosystem."

Infochips illustrates the trend. (source: Infochips)

The following moves by industry heavyweights in just the past few weeks show how that ecosystem is shaping up:

IBM last week added a new Big Data service, IBM Navigator on Cloud, to its IBM Cloud marketplace. With a reported 2.5 billion gigabytes of data being generated every day, IBM said the new Big Data service will help organizations more easily secure, access and manage data content from anywhere and on any device.

"Using this new service will allow knowledge workers to do their jobs more effectively and collaboratively by synchronizing and making the content they need available on any browser, desktop and mobile device they use every day, and to apply it in the context of key business processes," the company said.

The new service joined other recent Big Data initiatives by IBM, such as IBM Concert, which offers mobile, cloud-based, Big Data analytics.

Google Inc. last month announced Google Cloud Dataflow, "a fully managed service for creating data pipelines that ingest, transform and analyze data in both batch and streaming modes."

The new service is a successor to MapReduce, a programming paradigm and associated implementation created by Google that was a core component of the original Hadoop ecosystem. MapReduce was limited to batch processing and came under increasing criticism as Big Data tools became more sophisticated.

"Cloud Dataflow makes it easy for you to get actionable insights from your data while lowering operational costs without the hassles of deploying, maintaining or scaling infrastructure," Google said. "You can use Cloud Dataflow for use cases like ETL, batch data processing and streaming analytics, and it will automatically optimize, deploy and manage the code and resources required."
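For readers unfamiliar with the MapReduce paradigm mentioned above, the classic word count illustrates its shape: a map step emits key/value pairs, a shuffle step groups them by key and a reduce step combines each group. This is a single-process sketch in plain Python, not Google's or Hadoop's implementation, and the function names and sample documents are made up for illustration.

```python
from collections import defaultdict

def map_phase(documents):
    """Emit a (word, 1) pair for every word in every document."""
    for document in documents:
        for word in document.lower().split():
            yield word, 1

def shuffle(pairs):
    """Group the emitted values by key."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups

def reduce_phase(groups):
    """Combine each key's values into a single count."""
    return {key: sum(values) for key, values in groups.items()}

# Hypothetical input documents.
DOCUMENTS = ["big data in the cloud", "the cloud scales"]

counts = reduce_phase(shuffle(map_phase(DOCUMENTS)))
print(counts)
```

The whole input has to be available before the reduce step can finish, which is why the model suits batch jobs better than continuous streams.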

EMC Corp. on Tuesday acquired TwinStrata, a Big Data cloud storage company. The acquisition gives traditional storage vendor EMC access to TwinStrata's CloudArray cloud-integrated storage technology.

That was just one of a recent spate of moves to help EMC remain competitive in the new world of cloud-based Big Data. For example, when the company announced an upgrade of its VMAX suite of data storage products for big companies, The Wall Street Journal reported: "Facing Pressure from Cloud, EMC Turns Data Storage into Service."

The same day, EMC announced "a major upgrade to EMC Isilon OneFS, new Isilon platforms and new solutions that reinforce the industry's first enterprise-grade, scale-out Data Lake." But wait, there's more: EMC also yesterday announced "significant new product releases across its Flash, enterprise storage and Scale-Out NAS portfolios" to help organizations "accelerate their journey to the hybrid cloud."

EMC's plethora of Big Data/cloud announcements makes it clear where the company is placing its bets. As financial site Seeking Alpha reported: "EMC Corporation: Big Data and Cloud Computing Are the Future."

That was in March, and the future is now.

Posted by David Ramel on 07/10/2014 at 2:37 PM

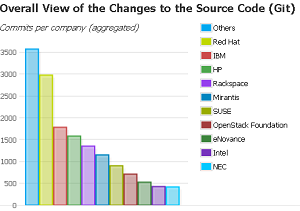

Enterprise Linux giant Red Hat is pushing further into the OpenStack arena with the release of Red Hat Enterprise Linux OpenStack Platform 5.

The company touts integration with VMware infrastructure as a key selling point of its distribution of the OpenStack cloud OS/platform. That's just one of "hundreds" of reported improvements to the OpenStack core components: compute, storage and networking. Other enhancements include three-year support and new features such as configuration and installation enhancements designed to help enterprises adopt OpenStack technology.

OpenStack is an open source project originally created by Rackspace Hosting and NASA, now under the direction of a consortium of industry heavyweights, including Red Hat. In fact, Red Hat -- which says it's "all in on OpenStack" -- has been the No. 1 contributor to recent OpenStack distributions, the last being Icehouse, released in April. As with Linux, the vendor makes money by bundling its distribution with services, support and extra features realized as part of the company's development model of "participate, stabilize, deliver."

Red Hat leads individual contributors to the open source OpenStack project. (source: OpenStack.org)

The distribution -- which went into beta in May -- is based on Red Hat Enterprise Linux 7, released just a couple of months ago. Its improved support of VMware infrastructure touches on virtualization, management, networking and storage functionalities.

"Customers may use existing VMware vSphere resources as virtualization drivers for OpenStack Compute (Nova) nodes, managed in a seamless manner from the OpenStack Dashboard (Horizon)," Red Hat said in an announcement. The new product also "supports the VMware NSX plugin for OpenStack Networking (Neutron) and the VMware Virtual Machine Disk (VMDK) plugin for OpenStack Block Storage (Cinder)."

Red Hat's OpenStack offering -- aimed at advanced cloud users such as telecommunications companies, Internet service providers and cloud hosting providers -- comes with a three-year support lifecycle, backed by a partner ecosystem with more than 250 members.

New features include better workload placement via server groups. Companies can choose to emphasize the resiliency of distributed apps by spreading them across cloud resources, or ensure lower communications latency and improved performance by locating them closer together.
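To make the two placement choices concrete, here is a minimal sketch in Python. It is a toy model, not the OpenStack API: the `place` function, the policy labels and the host and instance names are all hypothetical, chosen only to show the difference between spreading instances apart and keeping them together.

```python
def place(instances, hosts, policy):
    """Assign each instance to a host under a toy placement policy.

    "anti-affinity" puts every instance on a different host (resiliency).
    "affinity" puts every instance on the same host (lower latency).
    These labels and this function are illustrative assumptions only.
    """
    if policy == "affinity":
        # Co-locate everything on the first available host.
        return {name: hosts[0] for name in instances}
    if policy == "anti-affinity":
        # Each instance needs its own host, so there must be enough hosts.
        if len(instances) > len(hosts):
            raise ValueError("not enough hosts to keep instances apart")
        return dict(zip(instances, hosts))
    raise ValueError(f"unknown policy: {policy}")


web_tier = ["web-1", "web-2", "web-3"]          # hypothetical instances
hosts = ["host-a", "host-b", "host-c"]          # hypothetical compute hosts

spread = place(web_tier, hosts, "anti-affinity")
packed = place(web_tier, hosts, "affinity")
```

With the spread placement, losing one host takes down only one instance; with the packed placement, the instances talk to each other without crossing hosts, but one host failure takes down all of them.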

Red Hat also said the interoperability of heterogeneous networking stacks is enhanced through the new Neutron modular plugin architecture. That lets enterprises more easily add new components to their OpenStack deployments and provide better mix-and-match networking solutions.

A more technical enhancement is the use of the "para-virtualized random number generator device" that came with Red Hat Enterprise Linux 7, so guest applications can use better encryption and meet new cryptographic security requirements.

Red Hat said that in the coming weeks it will make generally available an OpenStack distribution based on its previous Linux distribution, version 6. The company will maintain dual versions so customers can best complement the OS they're using.

According to an IDG Connect survey commissioned last year by Red Hat, that overall customer base is expected to grow. The survey said 84 percent of respondents indicated they had plans to adopt OpenStack technology.

Posted by David Ramel on 07/09/2014 at 1:49 PM

Yes, Jon William Toigo makes some excellent points about lack of cloud storage management tools in his recent post, "Some Depressing but Hard Truths About Cloud Storage," but there has been some good news lately: dropping cloud storage prices.

Sure, the ongoing cloud storage price wars among companies such as Google Inc., Apple Inc. and Microsoft might be consumer-oriented, but cheaper cloud storage for the public at large surely translates into lower costs for enterprises.

The latest volley came this week from Microsoft, which increased the amount of free storage on its OneDrive (formerly SkyDrive) service and slashed prices for paid storage. The move follows similar initiatives from competitors.

In March, Google cut prices for its Google Drive service.

Shortly after, Amazon also got in on the act, posting lower prices effective in April.

Enterprise-oriented Box at about the same time decreased the cost of some of its services, such as its Content API.

Earlier this month, Apple announced price reductions for its iCloud storage options and previewed a new iCloud Drive service coming later this year with OS upgrades.

Just a couple of weeks ago, IBM lowered the price of object storage in its SoftLayer cloud platform.

Interestingly, "Dropbox refuses to follow Amazon and Google by dropping prices," CloudPro reported recently. Coincidentally, The Motley Fool today opined that "Microsoft May Have Just Killed Dropbox."

In addition to price wars, the cloud storage rivals are also improving other aspects of their services in order to remain competitive. For example, in the Microsoft announcement a couple of days ago, the company also increased the free storage limit from 7GB to 15GB. And if you subscribe to Office 365, you get a whopping 1TB of free storage. In paid storage, the new monthly prices will be $1.99 for 100GB (previously $7.49) and $3.99 for 200GB (previously $11.49), the company said.
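For a sense of how deep those OneDrive cuts are, the arithmetic can be sketched in a few lines of Python. The prices are the ones quoted above; the `percent_cut` helper and the variable names are just illustrative.

```python
def percent_cut(old_price, new_price):
    """Return the price reduction as a percentage of the old price."""
    return round((old_price - new_price) / old_price * 100, 1)


# Monthly OneDrive prices quoted above: plan size in GB -> (old, new).
plans = {100: (7.49, 1.99), 200: (11.49, 3.99)}

cuts = {gb: percent_cut(old, new) for gb, (old, new) in plans.items()}
savings = {gb: round(old - new, 2) for gb, (old, new) in plans.items()}

print(cuts)     # {100: 73.4, 200: 65.3}
print(savings)  # {100: 5.5, 200: 7.5}
```

In other words, the 100GB plan drops by roughly 73 percent and the 200GB plan by roughly 65 percent.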

And, of course, Amazon and Google both last week announced dueling solid-state drive (SSD) offerings.

So as Jon William Toigo ably explained, enterprise cloud storage management is a headache and it's not getting enough attention. But if these consumer trends continue apace in the enterprise arena, lower TCO and increased ROI should lessen the pain.

Posted by David Ramel on 06/25/2014 at 12:59 PM

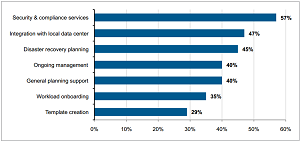

Enterprises moving to the cloud is a given, most will agree, but new research suggests it's a bumpy ride, with "staggering" project failure rates.

Even among the most well-known Infrastructure as a Service (IaaS) providers, unexpected challenges derail many cloud implementations. These challenges include complicated pricing and hidden costs that erase expected cost savings, performance problems and more, according to the report: "Casualties of Cloud Wars: Customers Are Paying the Price."

The research was conducted by Enterprise Management Associates Inc. (EMA) and commissioned by cloud infrastructure providers Iland and VMware Inc. EMA recently surveyed more than 400 professionals around the world to gauge their IaaS implementation experiences with major vendors such as Amazon.com Inc., Microsoft and Rackspace.

We need help! Respondents report what type of external support they require when operating a public cloud. (source: iland)

"The promise of cloud remains tantalizingly out of reach, and in its place are technical headaches, pricing challenges and unexpectedly high operational costs," the report stated. Iland, in a statement announcing the study, said, "Respondents reported staggering failure rates across 'tech giant' IaaS implementations and identified the support and functionality needed to overcome top challenges."

As part of the survey, respondents were asked which public cloud services they considered, which were adopted, and which stalled or failed.

Rackspace had the highest stalled/failed rate, named by 63 percent of respondents, followed by Amazon (57 percent), Microsoft Azure (44 percent) and, finally, VMware vCloud-based service providers, scoring the "best" with a 33 percent reported failure rate. Iland is a VMware-based cloud provider (and remember, Iland and VMware commissioned the study).

"Eighty-eight percent of respondents experienced at least one unexpected challenge," the survey said. "The most common challenge in the United States was support, while performance and downtime topped [Europe] and [Asia-Pacific] lists, respectively."

The unexpected challenges were primarily found in six areas (with the percentage of respondents listing each):

- Pricing -- 38 percent

- Performance -- 38 percent

- Support -- 36 percent

- Downtime -- 35 percent

- Management of cloud services -- 33 percent

- Scalability -- 33 percent

On pricing, the report stated: "While cost savings may be a key benefit of cloud, the current pricing models under which they operate are difficult to understand. A cursory glance at the pricing schemes of major public cloud providers demonstrates the need for the customer to carefully analyze pricing models and their own IT needs before committing to an option."

On performance, the report stated: "Different clouds are architected with different back-ends, and some are more susceptible to 'noisy neighbor' syndrome than others. For customers who are sensitive to variations in performance, this can impact the cloud experience."

Moving from challenges to solutions, respondents were asked what factors would make cloud services more accessible to their organizations. The responses were:

- Better management dashboard -- 52 percent

- More flexible virtual machine (VM) scaling -- 47 percent

- Easier resource scalability -- 48 percent

- Better VMware vSphere integration -- 45 percent

- More transparent pricing -- 43 percent

- Simpler onboarding -- 37 percent

- Certainty of geographic location of workloads -- 35 percent

"Finally, respondents were nearly unanimous in their agreement that high-quality, highly available phone-based support was critical to their cloud implementations," the study said. "This is notable, in part, because phone-based support is far from standard among public cloud companies, and more often than not, is accompanied by a high-cost support contract."

Despite the problems, the study revealed that almost 60 percent of respondents indicated an interest in adding cloud vendors. In contrast, fewer than 20 percent plan to quit the cloud because of security, cost, compliance or complexity issues.

Top factors influencing cloud adoption were listed as disaster recovery capabilities, cost, rapid scalability and deployment speed.

"Companies cannot afford to turn their backs on cloud computing, as it represents a key tool in the race for innovation across industries and around the world," the report stated in its summary.

Posted by David Ramel on 06/25/2014 at 12:49 PM

Amid a battle for cloud supremacy involving alternating price cuts and feature introductions, both Amazon and Google introduced solid-state drive (SSD) storage options for their services this week.

Amazon Web Services (AWS) yesterday announced an SSD-backed storage option for its Amazon Elastic Block Store (Amazon EBS), just one day after Google announced a new SSD persistent disk product for its Google Cloud Platform.

The moves are just the latest in a continuing struggle, as illustrated by research released earlier this month by analyst firm Gartner Inc. showing perennial cloud leader Amazon was being chased by Google and Microsoft in the Infrastructure as a Service (IaaS) market.

In fact, this week's alternating SSD announcements mirrored a surprisingly similar scenario in March, when Google lowered its cloud service prices and AWS followed up the very next day with its own price reduction. (RightScale conducted a price comparison after the moves, if you're interested.)

One difference in this week's sparring is that Google positioned its SSD product as a high-performance option, while the AWS offering targets general-purpose use, as it already had an existing high-performance service.

Google said its persistent disk product was a response to customers asking for a high input/output operations per second (IOPS) solution for specific use cases. The SSD persistent disks are now in a limited preview, available by application, with a default 1TB quota. SSD pricing is $0.325 per gigabyte per month, while standard persistent disk storage (the old-fashioned kind with spinning platters) costs $0.04 per gigabyte per month.
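To put those two Google rates side by side, here is a rough Python sketch of the monthly bill for a given disk size. It uses only the per-gigabyte prices quoted above and ignores any other charges; the function name, the disk-type labels and the 500GB example size are assumptions for illustration.

```python
# Per-gigabyte monthly rates quoted above for Google persistent disks.
RATE_PER_GB = {"standard": 0.04, "ssd": 0.325}


def monthly_cost(size_gb, disk_type):
    """Return the monthly storage cost in dollars for one disk."""
    return round(size_gb * RATE_PER_GB[disk_type], 2)


size_gb = 500  # hypothetical disk size
standard_cost = monthly_cost(size_gb, "standard")
ssd_cost = monthly_cost(size_gb, "ssd")

print(standard_cost)  # 20.0
print(ssd_cost)       # 162.5
```

At these list prices the SSD option costs roughly eight times as much per gigabyte, which matters mainly when capacity rather than IOPS drives the bill.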

"Compared to standard persistent disks, SSD persistent disks are more expensive per GB and per MB/s of throughput, but are far less expensive per IOPS," Google said. "So, it is best to use standard persistent disks where the limiting factor is space or streaming throughput, and it is best to use SSD persistent disks where the limiting factor is random IOPS." Included in the Google announcement was news of another new product: HTTP load balancing, also in limited preview.

In the AWS announcement the next day, the company introduced a General Purpose (SSD) volume type to join its existing higher-performance Provisioned IOPS (SSD) service and the lower-grade Magnetic (formerly called Standard) volumes. The storage volumes can be attached to the company's Elastic Compute Cloud (EC2) instances.

"The General Purpose (SSD) volumes introduced today are designed to support the vast majority of persistent storage workloads and are the new default Amazon EBS volume," AWS said. "Provisioned IOPS (SSD) volumes are designed for I/O-intensive applications such as large relational or NoSQL databases where performance consistency and low latency are critical."

AWS said the new offering was designed for "five nines" availability and can burst up to 3,000 IOPS, targeting a variety of workloads including personal productivity, small or midsize databases, test/development environments, and boot volumes.
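For context, "five nines" is conventionally read as 99.999 percent availability. Assuming that reading and a 365-day year, a quick Python sketch shows how little downtime such a target allows. The function name is illustrative, and the figure is a design-target calculation, not a statement about any AWS service-level agreement.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes in a non-leap year


def allowed_downtime_minutes(availability_percent):
    """Return the yearly downtime budget, in minutes, for an availability target."""
    return MINUTES_PER_YEAR * (1 - availability_percent / 100)


five_nines = allowed_downtime_minutes(99.999)
three_nines = allowed_downtime_minutes(99.9)

print(round(five_nines, 2))   # about 5.26 minutes per year
print(round(three_nines, 1))  # about 525.6 minutes per year
```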

The new AWS EBS product costs $0.10 per gigabyte per month at its Virginia and Oregon datacenters, while the higher-performance Provisioned IOPS (SSD) service costs $0.125 per gigabyte per month. Note that the companies' pricing schemes vary in some details, so listed prices here aren't necessarily directly comparable.

I don't know how AWS has been able to counter its challenger's announcements within 24 hours of release, but I can't wait to see what's next.

Posted by David Ramel on 06/18/2014 at 12:19 PM