The time has come for virtualization admins to take a step back and re-evaluate their environments. We have been on a virtualization mission for the past few years and some of us haven't stopped to take a breath and fine-tune or refine these new environments that we're building and that we believe will yield all the benefits that come with virtualizing our servers.

We have done a great job of taking our existing physical environments and virtualizing them, but with that many of us have also imported the same processes, procedures and approaches that were used to build our physical server environments. One of the things I want to focus on this time is the agent-based approach to anything virtual.

You see, back in the physical server world, an agent-based approach to a software deployment was acceptable: The physical server was an isolated container, self-sufficient from a compute, network and -- to some extent -- storage perspective. Placing an agent for backup purposes or for antivirus was a practice we had refined for years and which served us well.

As we virtualized, we brought the same concept into the virtualized environment. I cannot begin to tell you how many times I have advised customers against doing so.

In a virtualized environment, you have a multitude of virtual containers with one thing in common: They all share physical resources. You can have VMs on physical hosts that share compute, network and storage resources. When you take an agent-based approach to anything on this type of platform, you immediately create a bottleneck, and you create unnecessary duplication. This method limits your ability to scale your environment and hinders your ability to successfully virtualize more of it, especially the tier-1 applications that you may have resisted virtualizing to this day.

If you still use an agent to back up your virtual machines, then you are not taking advantage of the VMware vStorage APIs for Data Protection (VADP), which allow backup software to plug in and offer backup and restore the right way for a virtualized environment. Don't worry about consistent versus non-consistent backups -- all respectable backup solutions should be able to plug into Microsoft's Volume Shadow Copy Service and provide the desired level of backup and restore. If you own the software but have not upgraded to the version that supports these APIs, I strongly urge you to invest the time in doing so. If you own software that does not support these APIs, ask your vendor for a road map, and if one does not exist or is too far out, maybe it is time to find a vendor that can react much more quickly to the changing world we live in.

Let's move on to antivirus. I have discussed antivirus many times when tackling VDI, but I want to urge you to revisit your antivirus approach even on server virtualization. Again, having an agent-based antivirus solution will grill the underlying storage from an IO perspective. Every time a scan runs, every time an update to the definition files or the AV engine occurs, it all translates into very intense pressure on storage. As storage is the most critical component that directly impacts a VM's performance, you are faced with a dilemma.

You can, of course, take the antivirus vendors' approach of "well, you can randomize scans, and you can set different time schedules for updating definitions, etc." Still, all these recommendations assume that we still have maintenance windows and after-hours cycles to perform these tasks. The reality of the matter is that outage and maintenance windows are shrinking so much that they don't even exist at some enterprises. Randomizing scans and updates is a temporary workaround that does not scale properly, so what is the fix? Well, some organizations have decided to reinforce their storage with more spindles, insert SSDs into the mix, etc. Basically, those organizations have thrown hardware at a software problem.
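To put rough numbers on why randomizing scans does not scale, here is a minimal back-of-the-envelope sketch. The 1,500-VM count is the customer environment mentioned in this post; the 30-minute scan duration and the window lengths are purely hypothetical values picked for illustration, so plug in your own. The function name is also just an illustrative choice.

```python
import math


def min_concurrent_scans(vm_count: int, scan_minutes: float, window_minutes: float) -> int:
    """Lower bound on how many scans must overlap, even with perfect staggering.

    Total scan time (vm_count * scan_minutes) has to fit inside the window,
    so the average overlap is that total divided by the window length, and
    the peak overlap can never be lower than the average.
    """
    if window_minutes <= 0 or scan_minutes <= 0:
        raise ValueError("durations must be positive")
    if scan_minutes > window_minutes:
        raise ValueError("a single scan does not fit in the window")
    return math.ceil(vm_count * scan_minutes / window_minutes)


# Hypothetical inputs: 30-minute scans, shrinking maintenance windows.
for window_hours in (8, 4, 1):
    overlap = min_concurrent_scans(1500, 30, window_hours * 60)
    print(f"{window_hours}h window: at least {overlap} scans overlapping")
# 8h window: at least 94 scans overlapping
# 4h window: at least 188 scans overlapping
# 1h window: at least 750 scans overlapping
```

However cleverly the schedule is randomized, the overlap on the shared storage grows as the window shrinks, which is why staggering is only a workaround.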

My question to everyone is: Why are you not leveraging VMware vShield Endpoint? For VDI, it's a given, but let's examine vShield from a server virtualization perspective. I have a customer with over 1,500 VMs and growing. It's worse than having VDI, and my customer has an AV agent inside every VM. Why?

vShield Endpoint is not expensive, and I promise if you do the math it will be a fraction of the price you are paying for the additional storage. vShield Endpoint injects a driver inside of every VM. This driver is a Virtual SCSI enhanced driver, if you will, which offloads the IO traffic to a vShield Endpoint appliance. The appliance inspects the IO traffic going in and coming out of the VM for malware. This approach allows you to scale in a virtual world. Remember, it is all about shared resources; the concept of an isolated, dedicated set of resources no longer exists. So, our way of thinking has to arrive at the same point where the technology is today.

You have to start correlating things together -- storage teams and virtualization teams cannot remain siloed. You also have to understand how your technologies affect your teams. How do certain processes in a virtualized environment affect storage and vice versa? This spans the network and compute teams as well. We have to break the silo barriers and work together. Otherwise, you defeat the purpose of a virtualized environment.

Now granted, we still find agents to be necessary. I understand that. For some solutions, use an agent. What can you do? However, stay updated on what is happening and try to avoid the agent-based approach whenever possible. I am still dealing with customers that leveraged agents so much in ESX that they are finding it hard to migrate to ESXi. Your thoughts? Post them here or e-mail me.

Posted by Elias Khnaser on 11/15/2011 at 12:49 PM (1 comment)

If I were to summarize it in a few words, I would say it this way: VMware ThinApp Factory is the P2V for applications. The idea behind the ThinApp Factory is simplifying the application virtualization process -- specifically, the annoying, cumbersome and somewhat complicated sequencing process.

ThinApp Factory is billed as an appliance that you install in your VMware infrastructure. You can then point an installed application or an MSI package to this appliance and it will "auto-magically" spin up the right VM with the right operating system based on a set of pre-qualifying questions you've answered. ThinApp Factory will then sequence the application properly, presenting you with a fully packaged EXE file which you can then use.

With the ThinApp Factory, VMware aims to simplify the process of application virtualization. I would not be surprised if the ThinApp Factory at release included an assessment feature that would also analyze the applications and determine which ones are good candidates for virtualization and which are not. Based on that analysis, it could then virtualize the good candidate applications.

If executed properly, ThinApp Factory could be a game-changer in the world of application virtualization. The application virtualization concept is of interest at every enterprise, simply due to the sheer number of applications that are available that enterprises want to get a better handle on, especially in the virtualized world.

If only VMware would release a pure ThinApp client for mobile devices (iOS, Android, etc.). Now, that would be the ultimate combination: an automated application virtualization tool and a mobile device client to run these newly virtualized applications. I would be a happy camper.

Your thoughts? Post them here or e-mail me.

Posted by Elias Khnaser on 11/03/2011 at 12:49 PM (3 comments)

Up in the "cloud" it's all about services. A service is defined as an application with all its dependencies and requirements. For example, if you are deploying Microsoft Exchange, you will need a certain number of servers to play different roles. You may or may not be deploying Outlook Web Access, etc. The collective pre-requisites for Exchange to work properly for enterprise users are called a service.

In the cloud era, it is all about deploying services, managing services and monitoring services. We don't want to manage a server as a standalone entity but rather we want to manage it in context with the service that it belongs to.

Now, what is the most complicated part of deploying a service? I can summarize by saying it is gathering all the necessary requirements for that application and then putting it all together. F5's iApps, available in version 11 of its BIG-IP, takes an application-centric perspective on things. So, if you are trying to deploy a Citrix XenApp or XenDesktop load-balanced Web Interface and Access Gateway for internal and external users, you would need a large amount of information, a specific subset of configuration and then an accurate implementation. When all is said and done, you also want to add monitoring capabilities to this new load-balancing solution.

F5 streamlines the process by supporting a number of applications, each through what it calls an iApp. An iApp is the equivalent of a service as described earlier. When you choose to deploy XenApp or Exchange, an iApp walks you through a series of "goal-oriented" questions that determine how the service is deployed. Are you using XenApp for LAN, WAN or remote access? Are you using Outlook Web Access? And so on. It then deploys all the requirements for this service, along with monitoring, in a few minutes.

Basically, F5 has taken all the documentation needed to configure and deploy an application, created an iApp out of it and made it easy to deploy, manage and monitor. Furthermore, iApps are portable. This means if you build an iApp that is not available as part of the product, you can share it with others or even download iApps from others for your use.

Now these technologies and concepts are not earth-shattering or game-changing. They are, however, necessary for the age of cloud, where deployment, provisioning, management and monitoring are expected in a streamlined and automated manner, and where applications are being considered as a single entity rather than a collection of technologies.

I find iApps extremely helpful but I want to get your perspective on them if you have used them or are familiar with them. Post your thoughts here.

Posted by Elias Khnaser on 11/01/2011 at 12:49 PM (0 comments)

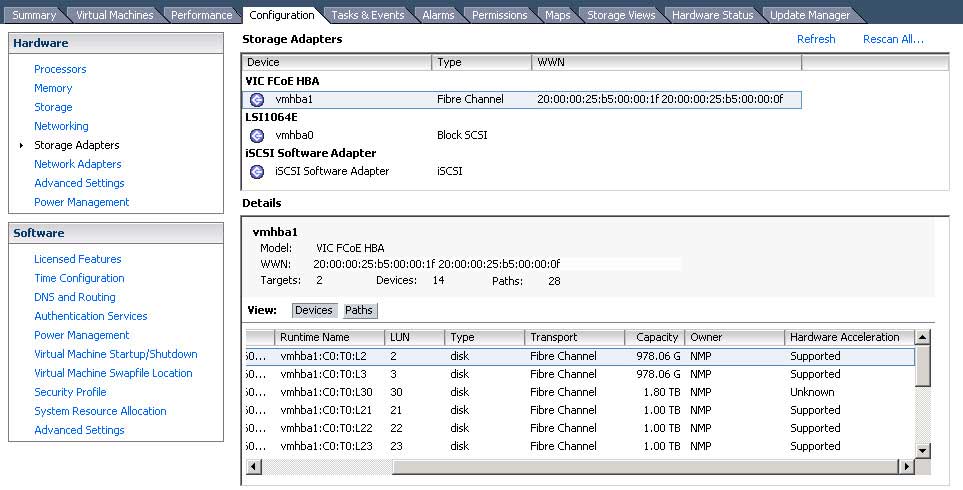

Lately I have been getting a lot of questions on how to determine if vSphere APIs for Array Integration (VAAI) is actually functioning on all datastores. There are tons of articles on how to check if it is enabled, but I could not find one on checking if it is functioning.

VAAI is always enabled by default. So, unless you have explicitly disabled it, there is little value in checking whether or not it is enabled. However, checking if it is functioning is something I find useful. With that, I decided to share this with everyone in case you had not seen it.

In the vSphere client, select an ESXi host, click Configuration | Storage Adapters and select your HBA. As shown in Fig. 1, the column to examine is the last one, under the heading Hardware Acceleration. If you see "Supported," that means VAAI is functioning for that particular datastore. This is just a quick and easy way of determining if VAAI is working properly.

Figure 1. Configuring the HBA Adapter.

Now, Fig. 1 also shows some entries that say "Unknown." This means one of two things: Either that LUN has not been provisioned into a datastore yet, or that there is a Raw Device Mapping (RDM) attached to one or more VMs.
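If you jot down what the Hardware Acceleration column shows for each device, the reading rules above can be captured in a few lines. This is only a minimal sketch: the function name and the device identifiers are hypothetical, and any status other than the two discussed in this post is simply flagged as not covered here.

```python
def interpret_hardware_acceleration(status: str) -> str:
    """Translate a Hardware Acceleration column value into what this post says it means."""
    normalized = status.strip().lower()
    if normalized == "supported":
        return "VAAI is functioning for this device"
    if normalized == "unknown":
        return "LUN not yet provisioned into a datastore, or attached to a VM as an RDM"
    return "status not covered in this post; investigate further"


# Hypothetical values copied by hand from the vSphere client.
observed = {
    "example-device-01": "Supported",
    "example-device-02": "Unknown",
}

for device, status in observed.items():
    print(f"{device}: {status} -> {interpret_hardware_acceleration(status)}")
```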

I hope this helps! And let me know if you're having other problems I can help you with in this blog by writing to me here.

Posted by Elias Khnaser on 10/27/2011 at 12:49 PM (10 comments)

Microsoft Hyper-V 3 is shaping up to be an impressive release with a feature lineup worthy of a standing ovation. I am sure you have heard by now about the major features of Hyper-V 3, especially around virtual networking and Cisco's support of Hyper-V 3 via its Nexus 1000V. However, a feature that has not been given its true credit is Hyper-V 3's support of SMB 2.2, the latest newcomer to the IP storage arena and a direct challenger of the increasingly popular file-level protocol NFS.

For a while now, others and I have been wondering why Microsoft does not extend Hyper-V support to SMB. Lo and behold, the company has announced its support for SMB 2.2. SMB 2.2 promises some significant enhancements that would make for a perfect companion to Hyper-V 3. For those of you who are not aware, SMB 2.0, introduced with Windows Vista and Windows Server 2008, significantly improves features, performance and stability over SMB 1.0, found in earlier versions.

The most noticeable change between SMB 1.0 and 2.0 (and later 2.1) is the performance over the network. Versions 2.0 and newer are less chatty, faster and much more reliable -- one more reason to migrate to Windows Server 2008 and to upgrade from Windows XP to Windows 7. That being said, let's come back to the issue at hand: the new enhancements in SMB 2.2 that make it a perfect companion to Hyper-V 3:

Unified SAN/SMB Copy Offload--My favorite feature of the new SMB 2.2 protocol allows your array controllers to execute large data copies on behalf of Hyper-V 3. Instead of executing them through the hypervisor, you offload them to the physical array, where they should be in the first place. It's very similar to what vSphere offers today.

Remote VSS--Volume Shadow Copy is the basis for almost every management tool that interacts with Windows today, from backups and restores to continuous availability. Remote VSS will extend this feature to SMB 2.2.

Witness Protocol--Another great feature of SMB 2.2 deals with HA, resiliency and load balancing. Witness instructs a client to redirect to a different node when it detects a node failure. It also dynamically and seamlessly load balances between nodes for optimal performance.

Cluster Client Failover--This feature allows VMs which are part of a Microsoft cluster to seamlessly detach and attach to different storage connection points.

MPIO--Allows for multiple Ethernet connections to the NAS storage. Think of it as link aggregation.

SMB 2.2 not only enables Hyper-V 3 to challenge VMware vSphere as an enterprise-level virtual infrastructure, but it also challenges NFS 3, the current preferred file-level storage. Frankly, I would position SMB 2.2 as a direct challenger to NFS 4 from a feature standpoint. It rivals NFS 4 features and in my opinion surpasses NFS 3. The irony of the matter is that it looks like SMB 2.2 will make its debut in the virtualization world far sooner than NFS 4, which has been around for a while now but still lacks virtualization support.

Microsoft finally has a product worthy of an enterprise virtual infrastructure, but I have said enough in this post. So, what are your thoughts?

Posted by Elias Khnaser on 10/25/2011 at 12:49 PM (3 comments)

Mark Templeton's shopping spree continues. The latest company to pop up in the Citrix shopping cart is Dropbox-like company ShareFile. From a strategic standpoint, this acquisition is a great move for Citrix. If integrated correctly, Citrix can turn ShareFile (I hope they don't call it XenFile or XenShare or XenSomething) into an enterprise cloud storage play. Every company I am talking to likes the Dropbox approach for storage for its users. The caveat, however, is that companies want to offer a Dropbox-like service on premise, not on someone else's cloud. So, if Citrix gets it right, the acquisition can be a home run for the company.

The acquisition would serve multiple purposes. First, it would allow Citrix to offer its customers yet another service that is in demand these days at the enterprise level. It also gives XenApp and XenDesktop a better solution and approach to user data. Apple's iCloud can sync all your settings and make them available on any device. Citrix should be able to take the same approach as Apple as far as ShareFile is concerned and make your settings and files available on any device without hassle. It should just work.

I just hope that Citrix does not simply acquire ShareFile and make it a standalone product. I also hope that Citrix offers an on-premise version of ShareFile. While the cloud-based, SaaS model is good, I think many enterprises will be more enticed to use the product if they can deploy it locally.

That being said, and while I applaud the acquisition, at some point Citrix has to give the credit card a breather and focus significant effort on integrating all these different toys it keeps buying. The road to successful products starts with tighter integration.

Posted by Elias Khnaser on 10/20/2011 at 12:49 PM (0 comments)

While recording my VMware vSphere 5 Training course this past summer, I mentioned that I had developed a process for building an effective naming convention for enterprises. Server and desktop virtualization significantly increase the number of named objects within our enterprises, so an effective naming convention that describes what an object is, its location, function and purpose is critical. Doing so allows us to identify objects quickly, and it also provides a structured, logical way of searching for objects using keywords.

Even as I developed these guidelines, I did not think they would interest folks very much. But I started receiving near-daily tweets and e-mails requesting a copy of the document. So, I've decided to publish it and share it with everyone in today's blog.

Here are my basic guidelines for the object naming convention:

- A name should identify the device's location and its purpose/function/service.

- A name should be simple yet still be meaningful to system administrators, system support, and operations.

- The standard needs to be consistent. Once set, the name should not change.

- Avoid special characters; only use alphanumeric characters.

- Avoid using numeric digits, except for the ending sequence number.

- Avoid the use of specific product or vendor names, as those can be subject to change. (There are some generally accepted exceptions: Oracle, SMS, SQL, CTX, VMW)

Here are some basic recommendations:

- The name should begin with a rigid header portion that identifies the location and optionally a type identifier. These should be followed by a delimiter to signify the end of the header portion. This delimiter shall be a "-" (dash) unless the system does not recognize a "-". In this case, replace the dash with another suitable agreed-upon character (e.g., $ or #).

- Allow for a variable section that completes the identification (function, service, purpose, application).

- End the name with a unique ID, a sequence number, which can be multi-purpose.

- Allow for flexibility. Since technology is constantly evolving, this standard must also be able to evolve. When necessary, this standard can be modified to account for technological, infrastructure, and/or business changes.

- There must be enforcement, along with accurate and current documentation for all devices.

Here is an example of the proposed naming convention standard:

Structure:

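| Header | | | | | Variable | | | | | | | |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| G | G | L | T | - | A | A | A | B | B | B | # | # |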

Header portion

GG -- Geographical location

L -- Location should be generic and not vendor- or building-specific to facilitate moves, building name changes due to mergers, acquisitions or dissolution of business, etc.

T -- Type

- -- Dash is a required delimiter to signify the end of the header portion

Variable portion:

AAA -- Function/Service/Purpose

BBB -- Application

(Unique ID)

## -- 2 digit sequence #
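The structure above can be sketched as a small helper that assembles a name from its parts. This is a minimal sketch, not a finished tool: the function and parameter names are hypothetical, and the length limits (a function code of two or three letters, an optional three-letter application code) are my reading of the layout and of the examples in this post.

```python
def _letters(label: str, value: str, allowed_lengths: set) -> str:
    """Validate that a part is ASCII letters of an allowed length; return it upper-cased."""
    bad_chars = bool(value) and not (value.isascii() and value.isalpha())
    if len(value) not in allowed_lengths or bad_chars:
        raise ValueError(f"{label} must be letters only, length in {sorted(allowed_lengths)}: {value!r}")
    return value.upper()


def build_name(geo: str, location: str, function: str, sequence: int,
               type_code: str = "", application: str = "", delimiter: str = "-") -> str:
    """Assemble GG + L + optional T + delimiter + AAA + optional BBB + ##."""
    if len(delimiter) != 1 or delimiter.isalnum():
        raise ValueError("delimiter must be a single non-alphanumeric character")
    if not 1 <= sequence <= 99:
        raise ValueError("sequence must fit in two digits (1-99)")
    header = (_letters("geo", geo, {2})
              + _letters("location", location, {1})
              + _letters("type", type_code, {0, 1}))      # type is optional
    variable = (_letters("function", function, {2, 3})
                + _letters("application", application, {0, 3}))  # application is optional
    return f"{header}{delimiter}{variable}{sequence:02d}"


print(build_name("CH", "D", "DC", 1))                      # CHD-DC01
print(build_name("CH", "C", "CTX", 1, application="JDE"))  # CHC-CTXJDE01
print(build_name("NY", "D", "SQL", 12, type_code="V"))     # NYDV-SQL12
```

Generating names through one function like this is also a cheap way to get the enforcement the guidelines call for, since a name that breaks the rules never gets created.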

Values Defined:

Geographic:

CH -- Chicago

NY -- New York

LN -- London

SY -- Sydney

MA -- Madrid

SI -- Singapore

MU -- Mumbai

Location:

D -- Main Data Center

C -- COLO Data Center

T -- Test Area (should be used for test machines that are to permanently stay in the test area)

Type (optional):

V -- Virtual

C -- Cluster server

P -- Physical

O -- Outsourced or vendor supported system

Delimiter (required):

- A "-" (dash) will be used unless the system does not recognize a "-" at which point an agreed upon character can be substituted. This could be a $ or # or other character.

Variable portion - AAA

Identify the primary purpose of the device:

DC -- Domain Controller

FS -- File Server

PS -- Print Server

ORA -- Oracle database

SQL -- SQL database

DB -- other database(s)

EXH -- Microsoft Exchange

CTX -- Citrix Server

ESX -- VMware ESX Server

Variable portion - BBB

Identify the Application on this server. If the server is for a specific application, then an application identifier should be the second part of this portion of the name, preceded by the service:

JDE -- JDEdwards

DYN -- Dyna

EPC -- Epic

This area of the name offers a lot of flexibility to handle identifiers for specific purposes, functions, and/or applications. It is challenging to select identifiers that are meaningful and consistent and are not subject to frequent change. Here are some examples based on the guidelines I propose above:

CHD-DC01 -- Chicago Office, Data Center, Domain Controller, sequence # 1

CHD-FS01 -- Chicago Office, Data Center, File Server, sequence # 1

CHD-EXH01 -- Chicago Office, Data Center, Microsoft Exchange, sequence # 1

CHD-ESX01 -- Chicago Office, Data Center, VMware ESX Server, sequence # 1

CHC-CTXJDE01 -- Chicago Office, COLO Data Center, Citrix Server, JDEdwards Application, sequence # 1

CHC-WEB01 -- Chicago Office, COLO Data Center, Web Server, sequence # 1

Unique ID / sequence number

## This is a 2 digit sequence number
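Going the other way, a name can be checked and decoded against the value tables defined above. The following is a rough sketch under a few assumptions: the function name is hypothetical, the tables are copied from this post (with WEB added because it shows up only in the examples), and the split between function and application codes is done by trying a three-letter function code first, then a two-letter one.

```python
import re

# Lookup tables copied from the "Values Defined" section of this post.
GEO = {"CH": "Chicago", "NY": "New York", "LN": "London", "SY": "Sydney",
       "MA": "Madrid", "SI": "Singapore", "MU": "Mumbai"}
LOCATION = {"D": "Main Data Center", "C": "COLO Data Center", "T": "Test Area"}
TYPE = {"V": "Virtual", "C": "Cluster server", "P": "Physical",
        "O": "Outsourced or vendor supported system"}
FUNCTION = {"DC": "Domain Controller", "FS": "File Server", "PS": "Print Server",
            "ORA": "Oracle database", "SQL": "SQL database", "DB": "other database(s)",
            "EXH": "Microsoft Exchange", "CTX": "Citrix Server",
            "ESX": "VMware ESX Server",
            "WEB": "Web Server"}  # WEB appears only in the examples, not in the table
APPLICATION = {"JDE": "JDEdwards", "DYN": "Dyna", "EPC": "Epic"}

# GG, L, optional T, one delimiter (-, $ or #), 2-6 letters, 2-digit sequence.
NAME_RE = re.compile(r"([A-Z]{2})([A-Z])([A-Z]?)[-$#]([A-Z]{2,6})([0-9]{2})")


def parse_name(name: str) -> dict:
    """Decode a name into its parts, raising ValueError if it breaks the convention."""
    match = NAME_RE.fullmatch(name.strip().upper())
    if match is None:
        raise ValueError(f"{name!r} does not fit the GGL[T]-AAA[BBB]## pattern")
    geo, location, type_code, variable, sequence = match.groups()
    if geo not in GEO or location not in LOCATION:
        raise ValueError(f"{name!r} has an unknown geographic or location code")
    if type_code and type_code not in TYPE:
        raise ValueError(f"{name!r} has an unknown type code {type_code!r}")
    # Try a 3-letter function code first, then a 2-letter one.
    for length in (3, 2):
        function, application = variable[:length], variable[length:]
        if function in FUNCTION and (not application or application in APPLICATION):
            break
    else:
        raise ValueError(f"{name!r} has an unknown function/application code")
    return {
        "geographic": GEO[geo],
        "location": LOCATION[location],
        "type": TYPE.get(type_code),          # None when the optional type is omitted
        "function": FUNCTION[function],
        "application": APPLICATION.get(application),  # None when omitted
        "sequence": int(sequence),
    }


print(parse_name("CHC-CTXJDE01"))
```

A check like this can run as part of a provisioning workflow, so a name that does not decode cleanly is rejected before the object is created.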

I would love to hear your comments on this naming convention and if you have ideas to improve it.

Posted by Elias Khnaser on 10/13/2011 at 12:49 PM (11 comments)

Some of you wrote some interesting comments -- some with questions -- to my last post, "Why vSphere is King of Hypervisors." Instead of isolating the discussion to the comments section, I figured I would reply here, as those questions merit some answers that everyone should see. Without further ado...

Does VMware vSphere provide enough value for the price?

The answer is a resounding "Yes!" VMware vSphere 5 provides enough value for the asking price. I think what many IT professionals are still struggling to come to terms with is that virtualization is not just another piece of software, it is not just another application. Virtualization is the new infrastructure, so carefully selecting it is not a matter of price -- it is a matter of flexibility and agility. Selecting a virtual infrastructure is a decision you make after examining the supporting products for this infrastructure, after designing your strategy and where you are trying to go from a business standpoint. It is not about IT, it is about which infrastructure to deploy that allows IT to respond to the business's needs effectively.

Let me give you an example: In the old days when I worked in IT in corporate America, we would get HP, Cisco and many other manufacturer briefings. They would come in once a quarter, once a year, etc., and do a deep dive with us into what their new platform offered and why we should continue to use them. I remember when HP (or was it Compaq? now, it doesn't matter), for example, introduced the ability for us to hot-swap memory while the server was powered on (not that I ever even tried it). The first thing that came to my mind was, "This is cool; can I add more memory to my SQL Server (yeah, right) without powering it down? No interruption in service?" I automatically connected the technical feature with a business use case for it. What I am trying to say is that we chose infrastructure that was flexible and allowed us to offer something different to the business -- in this case, no downtime.

Some of us are still treating virtualization as a server consolidation play. Virtualization has moved beyond server consolidation and containment and more into the cloud, into a highly virtualized, highly automated and orchestrated infrastructure that provides services on demand. If you subscribe to this school of thought, then you can't look at the hypervisor isolated from everything else.

The hypervisor is at the core of many other services that complement it and serve your goals. Again, when making this decision consider security: How are you going to address security in a virtualized world? How are you going to address antivirus? What about virtual networking? That is important, no? That is the handoff that connects everything; you had best make sure that your virtual infrastructure has strong support from networking vendors and strong support from the hypervisor vendor as well. Should I bring up storage? If networking is important for connectivity, the world revolves around storage. In a virtualized world, it is all about storage and storage integration. That will govern your performance and your ability to be agile. I can go on for a while and talk about orchestration and automation, about monitoring, etc.

Now, some of you will say, all of them have this support. Do they really? Make sure you demo all these products and see how well they integrate together. Many companies go through acquisitions and some go through several, so how well are these products integrated after those events? Furthermore, what are the company's plans for these products? How will they evolve? What is its vision and where is it planning on taking you? Ask yourself: Are they innovators or imitators?

Let's move on to another question: Am I forced to make poor choices because of VMware's new licensing model?

We all beat up on VMware very hard when it released its initial licensing model. I was not at a loss for words, and for those that claim I am advertising for VMware, please read my blog on licensing before VMware revised it. No, I was not very nice. That being said, VMware has changed its licensing and has positively responded to its customers. What else do you want? The new licensing model is a fair model. Again, we have to examine why VMware changed its licensing model to start with. The old one was based on cores, and CPU chip manufacturers were about to overrun VMware's licensing models with more cores per CPU. It is the natural hardware evolution, so the licensing had to change. VMware seemed to get it wrong the first time around, but addressed it adequately the second time around.

Now, if you are having a hard time with the new licensing model, it is more than likely because you have not subscribed to the cloud model yet and are still treating virtualization as though we're in a version 1.0 world, where virtualization was all about being a server consolidation play. VMware's strategic vision is cloud. As a result, this licensing model is closely aligned with the new unit of measure in the cloud -- VMs.

If you do not subscribe to this vision or your organization is not interested in it, then maybe you are right: You should look at an alternate hypervisor that suits your needs and vision, and there are plenty of great ones out there.

Do other hypervisors provide good capabilities for less cost?

Absolutely, there are other hypervisors out there that provide fantastic performance, great capabilities and features at a less expensive price point. You are spot on: Microsoft Hyper-V, Citrix XenServer and Red Hat Enterprise Virtualization are a few that I can name that I have extensive experience with. I can say without a shred of doubt that these can deliver excellent virtualization infrastructure if what you are looking for is a simple server consolidation play.

Microsoft Hyper-V 3.0, for example, will now have support from Cisco with the Nexus 1000V, so these features will slowly make their way to all hypervisors. The question is, can you wait and how long? The bigger question is, will your business wait?

Can VMware keep its lead in technology without ratcheting up costs every release? I feel like in a year we'll be talking about how we need Enterprise Plus Plus Plus licenses per vCPU divided by vRAM, times VM count.

Yes, absolutely. VMware cannot keep raising the price of vSphere; it just does not work or make any sense. It's why you'll see add-on products like vCloud Director, vCenter Operations and others that complement vSphere in a tightly integrated way.

In VMware's defense, it introduced Enterprise Plus once. There haven't been that many Enterprise Plus license models introduced, and if I were a betting man, I would bet that VMware will start adding more features into these editions without a price change. It might be a model similar to Citrix's Subscription Advantage, where, if you have support, you get the updated version for free.

What is VMware's strategic vision?

One reader commented that he has asked VMware many times for its vision and in return has received a wishy-washy answer. For starters, you probably asked your VMware sales rep. Some of them are good and can deliver the message and answer your questions, while some are just order takers, the "what would you like today, sir?" kind of rep. I would not pose this question to my sales rep. One way to understand the vision of VMware, or any other tech company for that matter, is to attend conferences like VMworld. Another, easier way is to seek out an executive briefing where you get to interact with non-sales people and hear the company's strategic vision from them.

VMware as a company is focused on the cloud -- all its efforts, all its products are aligned to conquer the cloud space. At VMworld 2011, it was very clear from the product announcements, positioning and messaging that all VMware cares about is the cloud. As a result, its virtual infrastructure is no longer positioned or suited for customers who are looking only for server consolidation and that is it. If that is what you are looking for, then I am telling you: vSphere is not the product for your business. If you are looking beyond that, then vSphere is most certainly your only choice. It's just my honest opinion.

What about RHEV?

I applaud Red Hat's efforts in this space and I think the acquisition of Qumranet in 2008 was the right move and has significantly improved its virtual infrastructure. I just don't see it having a significant effect. Red Hat in my mind will always spell Linux and while it could virtualize Windows very well, it is going to be very difficult in my opinion to persuade someone away from VMware to RHEV. I think Hyper-V and XenServer have more mindshare and are better positioned to take advantage of a virtual infrastructure swap. Although RHEV has some really cool features coming out soon, I just don't see Red Hat changing the landscape very much.

In my closing notes, I just want to address some accusations that were thrown about regarding VirtualizationReview.com being biased in VMware's favor. While I typically don't respond to these types of comments, they were written against my blog, so I feel I have to answer them:

This publication is a very respectable one. I have never been asked to write anything in anyone's favor. Doing so would affect my credibility and integrity and I would not do it for anyone, not even VirtualizationReview.com. My thoughts are my own. I have written countless blogs on Citrix XenDesktop, XenApp, PVS, NetScaler, Web Interface, Microsoft RemoteFX, etc. I am a technologist, interested in good technology. I am opinionated; therefore, sometimes you will see pieces like this where I talk about how I see things. I have an open mind and welcome your comments, so join the conversation. (And thanks to those who have joined already, even those whose opinions differ greatly from my own.)

Posted by Elias Khnaser on 10/11/2011 at 12:49 PM (1 comment)

Every now and then a report surfaces comparing vSphere to its hypervisor competitors. Everyone focuses on performance, how long a live migration takes and how many concurrent live migrations can be run at the same time. But that's not enough, so they move on to memory management and "monster" VMs.

Before I continue, it is worth noting that I love VMware products and am a recipient of the VMware vExpert award. Even so, I also appreciate products from Citrix, Microsoft and others. In fact, I am an old-time XenApp fellow and a huge supporter of XenDesktop. I also think Microsoft App-V and Hyper-V are fantastic products.

Now, all that being said, when I am advising customers on a virtualization strategy, I set emotions aside and make my recommendations based not just on features and pricing, but also, and most especially, on company focus and strategic vision. That is why I recommend vSphere 9 times out of 10. Features, performance and stability certainly have a lot to do with it, as do the supporting products in vSphere's realm.

VMware has a very clear strategic vision of where it wants to go and how it wants to get there. It is focused on the cloud, and every product it has fits perfectly into that strategy and is a building block leading up to it. vSphere as a virtual infrastructure is complete from every angle. It ties in very well with, and has broad support from, all storage vendors, and it has lots of support from networking and compute vendors.

I'm not saying that other hypervisors don't have support for these components. Take XenServer, for instance: Sure, it has StorageLink, and Hyper-V also has APIs for storage. The question is, how many storage arrays support these? On the network side, you will notice that Cisco announced that it will extend Nexus 1000V support to Hyper-V 3. I am not going to go into how long this took. I am a strong believer that eventually all hypervisors will be on par from a feature and performance standpoint. It is the plugins and additional software that make all the difference in the world.

On another note, vSphere is a complete infrastructure deployment that addresses how security should work in a virtual environment with vShield, and it addresses antivirus in a virtual environment with vShield Endpoint. We can't keep using agent-based approaches in a virtual world. Agent-based technology was perfect in a physical world because each server is an isolated stack of compute, storage and networking. In the cloud era, having an agent-based approach in VMs defeats the purpose of the cloud, where shared resources are the building blocks.

Let's shift to vCloud Director. It integrates perfectly with vSphere, is easy to deploy and use and has fantastic third-party support. vCloud works for your internal, on-premise clouds, but can also be used for your hybrid clouds. Again, we're talking one management console, one interface and excellent community support. Citrix and Microsoft have competing products, but do they integrate as well? How comprehensive is their community support? I am not trying to rub it in Citrix's or Microsoft's face right now; I am just explaining why we make certain decisions.

Let's look at vCenter Operations. Sure, this was an acquisition, but it also has been integrated perfectly into the virtual infrastructure. What is so special about vCOPS? It is a different way of looking at metrics, an easy way of taking a look at them and making sense of them. For years, we have had monitoring systems that all gave us the same data. For the most part they all plugged into perfmon, gathered the same counters and put them in cool graphs. Did we ever use our monitoring tools? Come on, be honest. We looked at them, and when everything was green, we said, "perfect" and moved on. Today, we can measure health, we can measure trends, we can measure capacity. We have moved into proactive monitoring.

The point I'm trying to make is that it does not matter anymore if a hypervisor performs a bit better or worse than another. What matters is how we are going to use this hypervisor today and how we can use it tomorrow. Here's an example: When I'm engaged in a desktop virtualization project, in 9 out of 10 cases I recommend VMware vSphere for the virtual infrastructure, but in 9 out of 10 cases I also recommend Citrix XenDesktop for the broker. Why? For starters, vSphere is likely already the infrastructure in the environment. It has great storage support, which is imperative for desktop virtualization. It has an antivirus solution that is well suited to virtual desktops, which is critical, and it has remarkable networking support. So, by marrying best-of-breed software, you can leverage all the features, bells and whistles and arrive at an environment that runs very well.

Now that is just one example of many I can give, but what about strategic vision? What else is VMware working on that is not here yet? Another example: Who does not love Dropbox? In the age of cloud, an enterprise-like Dropbox solution would be welcomed by users and organizations alike. We should expect to see something from VMware in this area in the next year. Another strategic move: VMware has embraced "social business" by acquiring SocialCast. It is highlighting and emphasizing the era of social networking in the workforce, and we will see social media integrated throughout the VMware product line where it makes sense.

So you see, it is not just about hypervisor pricing, and it is not an apples-to-apples comparison anymore. In the age of virtualization, the hypervisor is the foundation, but it is all the added amenities that support the transformation into IT as a Service.

Posted by Elias Khnaser on 10/03/2011 at 12:49 PM, 10 comments

The cloud has most certainly perplexed folks -- I have never seen a disruptive technology cause so much confusion in the IT world. Today, I want to discuss the different faces of the cloud, not just public clouds, but also private clouds and internal clouds. Wait, what is an internal cloud now? So let's get on the same page, shall we?

We are going to break them down into three categories:

Public Cloud -- This type of cloud is often hosted by a service provider of some sort and comes in many different flavors of "xyz as a Service": IaaS, PaaS, SaaS, and more. I've written on these types of clouds here.

Private Cloud -- This type of cloud, contrary to popular belief, does not mean a cloud that you build yourself on- or off-premise. It refers to your own private chunk of a public cloud. In other words, you go to a service provider and, instead of subscribing to the shared public cloud model that they offer, you request that they carve out hardware and dedicate it to you, while they continue to own it, manage it, etc. You get the benefits of public clouds, but you pay a premium for hardware isolation.

Internal Cloud -- This type of cloud refers to a cloud that you build yourself from a hardware and software standpoint. It could be on-premise or off-premise in a co-location facility somewhere, but you are responsible for hardware procurement and support as well as software procurement and support. This type of cloud is typically a highly virtualized, highly automated and orchestrated datacenter with self-service portals, catalogs, charge-backs and show-backs, etc.
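To make the distinction concrete, here is a minimal Python sketch of the three definitions above. The names `CloudType` and `classify_cloud` are made up for illustration and do not come from any product. The sketch assumes only two questions matter: who owns the hardware, and whether it is dedicated to you. Note that location (on- or off-premise) is deliberately not an input.

```python
from enum import Enum


class CloudType(Enum):
    PUBLIC = "public"
    PRIVATE = "private"
    INTERNAL = "internal"


def classify_cloud(you_own_hardware: bool, hardware_dedicated_to_you: bool) -> CloudType:
    """Classify a cloud using the three definitions in this post (hypothetical helper)."""
    if you_own_hardware:
        # You procure and support the stack yourself, wherever it is racked.
        return CloudType.INTERNAL
    if hardware_dedicated_to_you:
        # The provider owns and manages it, but carves out hardware just for you.
        return CloudType.PRIVATE
    # Provider-owned, shared with other tenants.
    return CloudType.PUBLIC


if __name__ == "__main__":
    print(classify_cloud(you_own_hardware=False, hardware_dedicated_to_you=True).value)
```

A co-located rack that you bought and manage yourself comes out as internal, not private, which is exactly the mix-up these definitions are meant to avoid.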

It's important as we move into the cloud era that we use the same terminology so that we are on the same page when discussing these topics. It also helps when you discuss the cloud with your friendly neighborhood value-added partner.

Posted by Elias Khnaser on 09/29/2011 at 12:49 PM, 7 comments

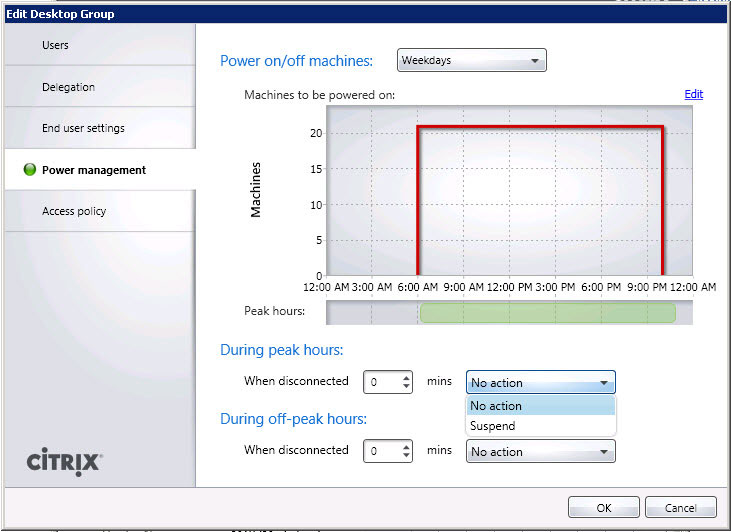

Here's a PowerShell tip using the Set-BrokerDesktopGroup cmdlet to make sure the disconnect power state for VM desktop groups doesn't go right into Suspend mode.

PowerShell fever is sweeping through the industry. I don't know who convinced Citrix, VMware and others that PowerShell fashion is now "in." It seems there is a race among the vendors to see who can add more in PowerShell -- if you ask me, the idea behind a GUI is that it should automate and simplify my life, so going to a command-line-based scripting interface is counterproductive and very distracting.

Sorry for the rant. Now, here's a PowerShell tip: When you build your "Random-pooled" desktop groups, you will notice that your Disconnect timer has a single action called "Suspend" that you can configure (see Fig. 1). Suspend means that after a user session has been disconnected and the disconnect timer has expired, the VM will go into "Suspend" mode.

Figure 1. Without a GUI option for restarting VMs from Suspend mode, as seen here, we'll have to turn to PowerShell to shut them down and start them back up.

For many of us, Suspend is not a desirable state. Instead, we typically want to restart the VMs when the disconnect timer expires, but that setting does not exist, not even with PowerShell. So, what is the solution? It's actually a pretty easy one: Set the action to "Shutdown" and then let the power management rules dictate how many VMs are powered on. This way, at least you take it out of suspend and into a usable VM.

To activate the Shutdown setting, open PowerShell and invoke the XenDesktop Set-BrokerDesktopGroup cmdlet this way:

Set-BrokerDesktopGroup yourdesktopgroupname -OffPeakExtendedDisconnectAction Shutdown

Set-BrokerDesktopGroup yourdesktopgroupname -PeakDisconnectAction Shutdown

Set-BrokerDesktopGroup yourdesktopgroupname -PeakExtendedDisconnectAction Shutdown

These commands set the action taken when the disconnect timer expires during peak hours, and when the extended disconnect timer expires during peak and off-peak hours. Pay attention to these settings as they could make all the difference in the world when you are deploying XenDesktop and don't want to be left in a state of suspense.

Editor's Note: The article title and description were corrected after the editors introduced some errors. We apologize for the inconvenience to the writer as well as readers.

Posted by Elias Khnaser on 09/26/2011 at 12:49 PM, 4 comments

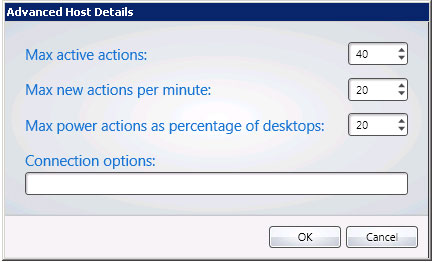

I was recently helping a customer troubleshoot an issue with XenDesktop 5.5 (yes, this also applies to XenDesktop 5) in which VMs were not registering with the controller fast enough.

Here is the scenario: My customer is a school district that has deployed VDI using XenDesktop 5.5 in their labs. The labs consist of 30 workstations, typical of lab environments.

When users log off their sessions, the default behavior of XenDesktop is to reboot the VM and bring it back in a pristine state, ready for the next user to connect. This process was taking too long. By the time the machines had all rebooted and come back to a “Ready” state, more than 20 minutes would pass.

They could change the log off behavior of XenDesktop so that it does not reboot when users log off, but that was not desirable. They wanted the VM to reboot and flush out everything from the previous session, except they wanted it to do so much faster.

What I noticed as we were troubleshooting was that XenDesktop would reboot only a specific number of VMs at a time. XenDesktop was pacing the reboots so that they didn't overwhelm the system. It's a great idea, but we had great hardware that could handle more than the default configured values.

What we ended up doing was modifying the default behavior, so that XenDesktop would direct the hypervisor to boot and reboot more VMs at a time. That way, a lab of 30 would come back to a “Ready” state much faster. The trick is to modify the values which XenDesktop passes to the hypervisor. Here's how:

- Open Desktop Studio, click on Configuration and then click on Hosts.

- Right-click your host connection and choose Change Details, then click Advanced.

Fig. 1 shows the screen where you can modify the values passed on to the hypervisor. We raised the max active actions value to 40.

Figure 1. Changing the default behavior of XenDesktop so it boots and reboots many more VMs at a time.

In your environment, you should experiment with these settings to arrive at the combination that best suits your deployment. There is no single correct setting in this case; it takes trial and error to get the right mix.
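Before you start experimenting, a back-of-the-envelope model can show why the cap on concurrent actions matters so much for a lab of 30. The Python sketch below is not how XenDesktop schedules power actions internally. It simply assumes reboots run in waves no larger than the max active actions value, and that every reboot takes the same number of minutes. The function name and the 4-minute reboot time are made-up illustrations, and the model ignores any other throttles and hardware contention.

```python
def minutes_until_ready(vm_count: int, max_active_actions: int, minutes_per_reboot: float) -> float:
    """Rough estimate of how long a pool takes to return to Ready.

    Assumes reboots are processed in waves of at most max_active_actions VMs
    and that each wave takes minutes_per_reboot (both simplifying assumptions).
    """
    if vm_count < 0 or max_active_actions < 1 or minutes_per_reboot < 0:
        raise ValueError("vm_count and minutes_per_reboot must be >= 0, max_active_actions >= 1")
    # Integer ceiling division: number of waves needed to cover every VM.
    waves = (vm_count + max_active_actions - 1) // max_active_actions
    return waves * minutes_per_reboot


if __name__ == "__main__":
    # Hypothetical numbers: a 30-seat lab, 4 minutes per reboot.
    for cap in (5, 10, 40):
        print(f"cap={cap}: ~{minutes_until_ready(30, cap, 4)} minutes")
```

Under these assumptions, once the cap is at least the size of the lab, the whole pool comes back in a single wave. Raising it further gains nothing in this model, and in practice the ceiling is whatever your hosts and storage can absorb.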

Posted by Elias Khnaser on 09/22/2011 at 12:49 PM, 3 comments