Oracle's recent acquisition of Xsigo raised a lot of questions. Many concluded that this was Oracle's way of hitting back at VMware's acquisition of Nicira, but I don't believe that is true, as Xsigo and Nicira do very different things.

Xsigo's technology aims at virtualizing I/O and reducing cable count, whereas Nicira lets you build complete virtual networks in software. They do share one similarity: both reduce the need for proprietary networking equipment.

Xsigo is comparable to HP's Virtual Connect, although in my opinion Xsigo's solution is more extensive and more powerful.

In a nutshell, Xsigo is a top-of-rack solution that standardizes and virtualizes the connection between the servers and the top-of-rack switch using either InfiniBand or 10Gb Ethernet. The advantage, in my mind, is that it future-proofs the environment. The top-of-rack switch accepts 10Gb cards, Fibre Channel cards, regular Ethernet cards and so on, and when new technology surfaces, you just add another module (blade) to that switch without needing to recable or change adapters at the server level.

Xsigo also virtualizes the server-side cards. Instead of having ten Ethernet NICs, four Fibre Channel cards and two 10Gb NICs, you now have one or two adapters on the server side and virtualize all the rest. You can then carve the I/O between the server and the top-of-rack switch into multiple virtual Fibre Channel, Ethernet and other connections.

Xsigo is a truly elegant solution, but I am not overly excited that Oracle has acquired it, given Oracle's uncertain track record with acquisitions. I am concerned about Xsigo's future at Oracle.

So you can imagine why I'm astonished when analysts compare Xsigo with Nicira; these companies produce very different products.

What are your thoughts on how Oracle will leverage Xsigo?

Posted by Elias Khnaser on 08/01/2012 at 4:59 PM

If you are just starting to dabble with Windows Server 2012, and specifically with Hyper-V 3.0, you may find it a bit confusing, since there are three different ways of installing the Hyper-V role. You can install it on Windows Server 2012 Server Core, on the Minimal Server Interface, or on the regular full-blown installation of Windows Server 2012.

Server Core is a very lightweight installation with access limited to the command line and PowerShell. The Minimal Server Interface gives you limited access to the MMC and Control Panel. The full Windows Server 2012 installation provides full GUI access.

Server Core is the most efficient of the three, and that's what we'll focus on here.

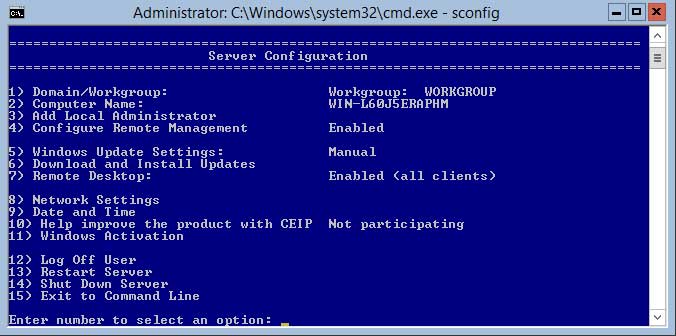

To get started, open a command prompt, type sconfig (see Fig. 1) and select option 7. We need to enable Remote Desktop, which is disabled by default; you will be prompted to enable it by typing "E" for enable.

Figure 1. Options for sconfig.

If you have not configured your network settings yet, option 8 will help you do that; network configuration is a prerequisite for installing Hyper-V. Sconfig also lets you change the hostname and adjust the date and time if you need to.

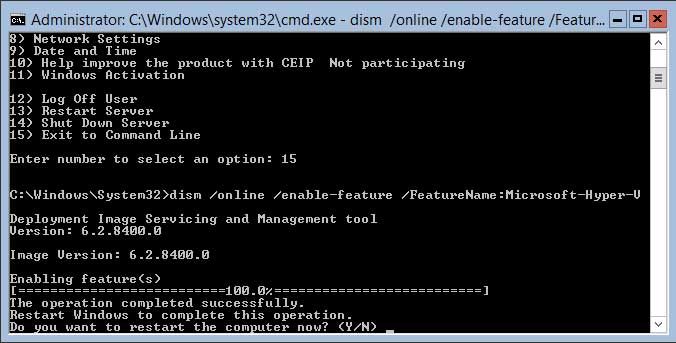

Once you have met all the prerequisites, enter the following command to install the Hyper-V role:

dism /online /enable-feature /FeatureName:Microsoft-Hyper-V

The installation will begin (see Fig. 2), and once it succeeds you will be prompted to restart the server to complete the setup.

Figure 2. Hyper-V installation.

Another handy option is the Minimal Server Interface, which gives you a GUI but no Start menu, desktop, Internet Explorer or Windows Explorer. The Microsoft Management Console and a subset of Control Panel are present, however. In this configuration, you can launch Hyper-V Manager from a command line by typing virtmgmt.msc.

Hope this was helpful!

Posted by Elias Khnaser on 07/30/2012 at 12:49 PM

It is no big secret that I am a huge believer in cloud computing, so much so that I think enterprise-owned or -managed datacenters or infrastructures will be the exception in the next 10 years, with enterprise datacenters existing only for very specific use cases.

From a technical and cost perspective, cloud computing is a great model. But one of the things I failed to factor into my relentless excitement about cloud computing is the fact that now that we have taken computing into the clouds, we have little to no control over natural disasters and our ability to recover from them.

Now, I'm not talking about earthly natural disasters; those we can protect against to some degree by building in redundancy, geo-replication and so on. Instead, I'm talking about unearthly ones, such as solar flares and other space weather that could disrupt and destroy our systems.

At a recent customer meeting, while I was raving about the benefits of cloud computing and preaching like the good apostle that I am, one executive caught me off guard and asked about solar flares and the risk they could pose to cloud computing. I had been talking about the cloud unchallenged for the last 35 minutes, so how dare he throw out that question! My mind was working at 1,000 MPH and I did not really have a good answer. My response was basically that if a solar flare is powerful enough to knock out your cloud data, we have bigger things to worry about.

In any event, I was not satisfied with my answer, and he honestly got me thinking: not only had this guy stumped me, he had also made me doubt my cloud faith. So I began researching, and to my surprise I had answered his question pretty accurately. A sigh of relief rushed through my entire body ("Relax, Eli, you are still 'the king'!") and my ego was restored.

On a more serious note, I found out later that this executive had read a CNN report on the top four things that could bring down the Internet, and he equated the Internet with cloud computing. It's a valid point, and the truth is that solar flares have disrupted our communications systems and the Internet in the past. That being said, a solar flare capable of bringing down cloud computing and the Internet as a whole would have to be so powerful that it would essentially fry all electronics on Earth.

Riddle me this: if a solar flare of such power strikes, do you believe your private on- or off-premises datacenter will survive it? Do you really think your backup tapes will be of any value? And lastly, would you even be thinking about your job, your data and your clouds?

I have maintained for years that the Earth is a single point of failure, so while our extravagant disaster recovery plans can protect us against earthly disasters, at some point you just have to throw in the towel and say this is the best we can do.

With my faith in cloud computing restored, I am now researching other risks to cloud computing that are a little less "doomsday" and a lot more practical, and I must admit I am finding challenges I never thought of. I'd like to start a conversation about the potential risks to cloud computing, so please share your insights and thoughts, but steer clear of the obvious.

Posted by Elias Khnaser on 07/23/2012 at 12:49 PM

Registering a virtual machine with the hypervisor is handy when moving VMs between hosts, changing storage locations or even creating virtual machines manually. Hyper-V 3 in Windows Server 2012 adds some advanced new options for registering virtual machines and importing them into Hyper-V.

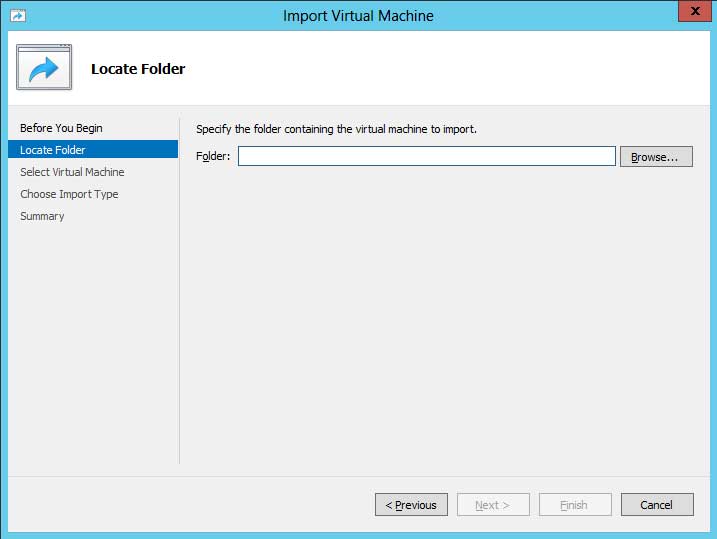

To get started, launch Hyper-V Manager, select your host in the left pane, right-click it and select Import Virtual Machine. The Import Virtual Machine wizard launches with the "Before You Begin" welcome screen; click Next to continue.

The next screen (Fig. 1) prompts you to specify the current location of the VM files you want to import. Enter the correct path and click Next to continue. You will then be prompted to select the virtual machine you wish to import from that location, which matters if the location holds more than one virtual machine. Select your VM and click Next to continue.

Figure 1. Locate Folder.

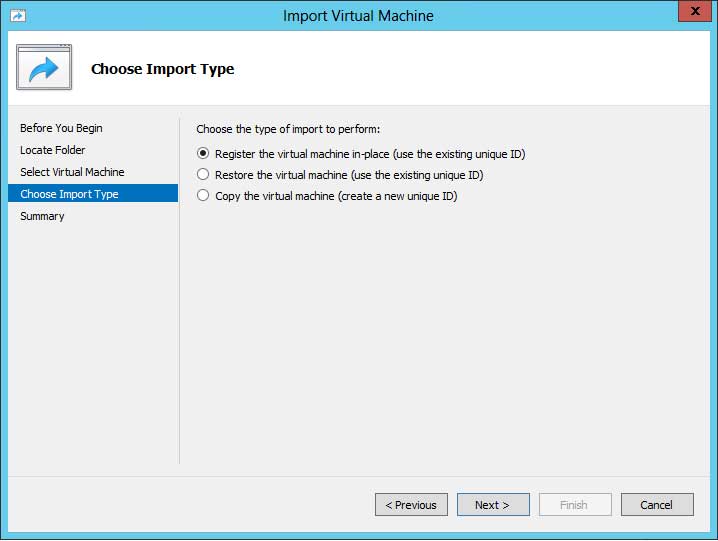

Next up is the import type (Fig. 2), which is essentially the heart of the import process.

Figure 2. Choose Import Types.

Make your selection only after you understand what each option does:

Register -- This option assumes that the VM and its files are already in the right place and that you simply need to register the VM's unique ID with Hyper-V. This comes in handy if, for example, you bring up a new Hyper-V host, present existing storage to it and need to manually register the VMs on that storage. The VM's unique ID is preserved and does not change with this option.

Restore -- This option is handy if your VM files are stored on a file share or even a removable disk, and you need to register the VM's unique ID with Hyper-V but also move the files from their current location to a more appropriate one. This option copies the files and registers the VM, all while preserving the VM's unique ID.

Copy -- This comes in handy when you are manually copying VM files to create a new VM. Essentially, you are using the "template" approach, except you are doing it manually. In this case, the Copy option generates a new unique ID for the VM created by the copy process.
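For readers who script their imports instead of clicking through the wizard, the three choices above map fairly directly onto the Hyper-V PowerShell module's Import-VM cmdlet. The Python sketch below only builds the argument list for each import type; it assumes the -Register, -Copy, -GenerateNewId, -VirtualMachinePath and -VhdDestinationPath parameters of Import-VM (check Get-Help Import-VM on your own host), and the configuration path and destination folder are hypothetical examples.

```python
from enum import Enum
from typing import List, Optional


class ImportType(Enum):
    """The three choices on the wizard's import-type page."""
    REGISTER = "register"  # files stay where they are, unique ID kept
    RESTORE = "restore"    # files copied to a new location, unique ID kept
    COPY = "copy"          # files copied, new unique ID generated


def import_vm_args(import_type: ImportType, config_path: str,
                   destination: Optional[str] = None) -> List[str]:
    """Return an Import-VM argument list matching the chosen import type.

    config_path -- path to the VM's configuration file (example values only)
    destination -- folder the files are copied to (RESTORE and COPY only)
    """
    args = ["Import-VM", "-Path", config_path]
    if import_type is ImportType.REGISTER:
        # Register in place: no files move and the existing VM ID is reused.
        return args + ["-Register"]
    if destination is None:
        raise ValueError("RESTORE and COPY need a destination folder")
    # Both RESTORE and COPY copy the configuration and virtual disks.
    args += ["-Copy",
             "-VirtualMachinePath", destination,
             "-VhdDestinationPath", destination]
    if import_type is ImportType.COPY:
        # Only COPY asks Hyper-V to assign a brand-new unique ID.
        args.append("-GenerateNewId")
    return args


# Hypothetical paths, for illustration only.
print(" ".join(import_vm_args(ImportType.COPY,
                              r"D:\Export\VM1\vm-config.xml", r"E:\VMs")))
```

The point of the sketch is the unique-ID distinction the wizard draws: Register and Restore keep the existing ID, and only Copy adds -GenerateNewId.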

Have you been using Hyper-V on Windows Server 2008? If so, do you find this new feature helpful? With the posts I will be writing, I would like to gauge the community's pulse and see what impact some of these new features have on your day-to-day job.

Posted by Elias Khnaser on 07/18/2012 at 12:49 PM

Lately, I have been coming across many customers who are not leveraging the full potential of Citrix Provisioning Services simply because they have not configured the product properly. Basically, they are running it with all the defaults set during installation.

One of those defaults, if not modified, will limit Citrix Provisioning Services to only 160 devices. These devices can be physical or virtual, but if you are planning on using the product for VDI, for instance, and will be supporting more than 160 devices, I suggest you keep reading.

During the initial configuration of Provisioning Services, you are prompted to configure the number of UDP ports to make available to target devices. If left unchanged, the default is 20 ports. These are the ports on the PVS server that devices connect to in order to stream virtual disks.

Also by default, the advanced settings configure the server for eight threads per UDP port. Translation: each of the 20 UDP ports configured earlier can support up to eight devices, so the total number of devices this PVS server can support is:

20 UDP ports x 8 threads per port = 160 devices

Now, I am sure you are asking, "Will the 161st device get a denial of service?" Not exactly. The 161st device will not find an open port or thread and will keep requesting a connection to the PVS server. This means you will see a lot of network retransmits and increased CPU utilization on the server, which in turn affects the devices that are already connected and streaming.
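The capacity rule above is plain multiplication, and the 161st-device problem falls straight out of it. Here is a minimal Python sketch, assuming (as described above) that each UDP port serves at most threads_per_port devices at a time; the function names are hypothetical and not part of the PVS product.

```python
def pvs_capacity(udp_ports: int, threads_per_port: int) -> int:
    """Maximum number of target devices one PVS server can stream to."""
    if udp_ports <= 0 or threads_per_port <= 0:
        raise ValueError("ports and threads must both be positive")
    return udp_ports * threads_per_port


def devices_without_slot(devices: int, udp_ports: int,
                         threads_per_port: int) -> int:
    """Devices that find no free port/thread and keep retrying the connection."""
    return max(0, devices - pvs_capacity(udp_ports, threads_per_port))


# Defaults: 20 UDP ports from the configuration wizard, 8 threads per port.
print(pvs_capacity(20, 8))               # 160
print(devices_without_slot(161, 20, 8))  # 1
```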

To avoid this situation, you need to figure out how many devices you intend to support per PVS server. Best practice calls for anywhere from 700 to 1,000 devices per 1Gb physical NIC on the server. Assuming you want to support 1,000 devices, the math would look like this:

50 UDP ports x 20 threads per port = 1,000 devices
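Working backwards from a target device count is just as simple: divide by the threads per port and round up to get the number of UDP ports, then apply the 700 to 1,000 devices-per-NIC guideline above to check the NIC count. In this sketch the helper names are hypothetical, and the 700 default is an assumption (the conservative end of that range) that you should tune to your environment.

```python
def ceil_div(a: int, b: int) -> int:
    """Integer ceiling of a / b for positive integers."""
    return -(-a // b)


def ports_needed(target_devices: int, threads_per_port: int) -> int:
    """UDP ports required so that ports x threads covers every device."""
    return ceil_div(target_devices, threads_per_port)


def nics_needed(target_devices: int, devices_per_nic: int = 700) -> int:
    """1Gb NICs required at the given devices-per-NIC guideline."""
    return ceil_div(target_devices, devices_per_nic)


target, threads = 1000, 20
ports = ports_needed(target, threads)
print(f"{ports} UDP ports x {threads} threads per port = {ports * threads} devices")
print("1Gb NICs at 700 devices/NIC:", nics_needed(target))
print("1Gb NICs at 1,000 devices/NIC:", nics_needed(target, 1000))
```

Rounding up matters: with the default eight threads per port, even 161 devices already need a 21st port.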

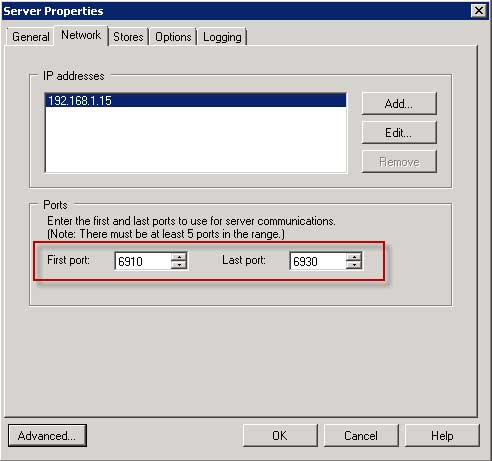

To change the default values, open the Citrix Provisioning Services Console and expand the farm, the site and the servers node, highlight the server in question and go to Properties. Once the Properties window is open, select the Network tab and then increase the number of available UDP ports (see Fig. 1).

Figure 1. Increase the number of available UDP ports.

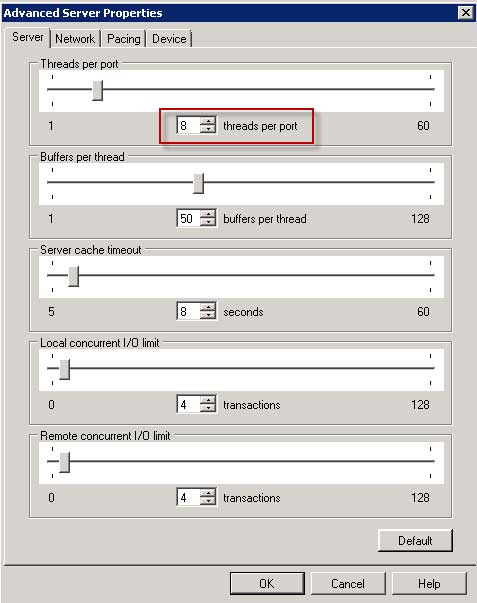

To change the number of threads per port, click the Advanced button in the lower left-hand corner; a window pops up that lets you increase the number of threads per port (see Fig. 2).

Figure 2. Pop-up to increase the number of threads per port.

The advanced properties let you fine-tune the performance of Provisioning Services. In a future post I will discuss the other values and how you can modify them. For now, if you are a Citrix PVS administrator, intimate knowledge of these values is crucial to running a successful infrastructure. Simply put, with a few exceptions, the default values are almost never good enough; experience has shown that they are set lower than they should be.

If you have experience with these values, please share with the community in the comments section which values you have configured and what your environment looks like.

Posted by Elias Khnaser on 07/16/2012 at 12:49 PM

Now that Dell has acquired Quest and is already touting the idea of an end-to-end, single-throat-to-choke desktop virtualization solution, does it make sense for Citrix to respond by acquiring Nutanix? Citrix is already in the hardware business with NetScaler and Branch Repeater, so the concept is not foreign to the company at all, and the Nutanix appliance would fit perfectly alongside Citrix solutions such as VDI-in-a-Box and XenDesktop.

Desktop virtualization has seen slow adoption primarily because of cost, which has admittedly come down. While I am seeing an incredible uptick in desktop virtualization projects in the enterprise, cost remains a deciding factor, followed very closely by complexity. If Citrix were to buy Nutanix, it would instantly have a better, simpler solution than Dell's approach (i.e., "Let's bundle everything we have and sell it"), which removes the complexity of ordering the solution but preserves existing technical support challenges and creates new, even more complex ones within the same organization.

While that is one scenario, some analysts have taken it further and cryptically hinted that the Dell acquisition could be a catalyst for HP buying Citrix and for Cisco buying EMC and VMware, which are anything but new ideas. I personally think it is a stretch of the imagination to expect a seismic shift in the IT landscape just because Dell bought Quest. Citrix acquiring Nutanix would be a more than adequate, well-measured response.

Now, of course, Citrix could simply ignore the whole thing and continue building good solutions. I firmly believe that a turnkey hardware appliance solution would be a great value-add and a significant differentiator for Citrix. I would have speculated that VMware might buy Nutanix, but Citrix is more likely since it is already in the hardware business. I am certain, however, that if Citrix bought Nutanix, Cisco and VMware would counter with a VMware View hardware appliance; as a matter of fact, I would not be surprised at all to see that happen.

What are your thoughts? Would you be interested in buying Citrix or VMware "in a box" as a combined software/hardware appliance?

Posted by Elias Khnaser on 07/11/2012 at 12:49 PM

Michael Dell should give that credit card a breather and explain to us the overall vision for his company. Where do all these acquisitions fit? What is the story, Michael? When I look at Citrix, VMware, Cisco, EMC and others, I see a story and I understand what they are doing. When I look at Dell, I see acquisitions, but no one is taking the time to tell us a story or dazzle us with some thought leadership. I am sure someone at Dell understands where all these pieces fit and what the company is trying to accomplish.

When Dell bought Wyse, I applauded. It was a match made in heaven, and I knew exactly where Wyse fit in and why Dell would want it. Wyse is the number one thin client manufacturer in the world, Dell is in the PC hardware business and desktop virtualization is hot. Acquiring Wyse made Dell number one overnight in a market where it had very little share. Wyse's business model is also one Dell understands, and it gels well with current company objectives. Couple that with Dell's great relationships with Citrix and VMware, and it just made sense.

The Quest acquisition, on the other hand, confuses me. I get that PC sales are slowing and margins are disappearing. I understand the need to diversify, and I certainly understand the need to turn the company around. Software can be very profitable, but why Quest? How does this acquisition fit into Dell's overall vision? Does the company seek to be an end-to-end datacenter provider? If so, buying Quest does very little to accomplish that, so what is the objective?

If we start with desktop virtualization (and the only reason I start there is Dell's recent acquisition of Wyse), what is the company thinking? Will Dell use vWorkspace to go up against Citrix and VMware? That would be a bad business decision. vWorkspace is a good product, but it doesn't even register as a competitor in the marketplace today. What is the plan? Bundle Dell servers, storage, networking, SSL VPN, Wyse thin clients and vWorkspace and deliver end-to-end desktop virtualization? That is a very thin and short-lived proposition.

When I compare Quest's virtualization solutions with what Dell currently has in its portfolio, I see a lot of duplication; for example, AppAssure conflicts with vRanger, vKernel and vFoglight. Now, don't get me wrong: Quest has some great software. My question is, where does it fit in with Dell? Quest's One Identity and Access Management offering is cool, but as Gabe Knuth writes at BrianMadden.com, is Dell thinking of pairing it with SonicWALL to go up against Citrix CloudGateway and VMware Horizon? And don't the Windows Server Management solutions become something of a duplicate offering, considering Wyse's WSM? The database management solutions are definitely a value-add, but I am still not able to piece this acquisition together.

Dell, are you an end-to-end datacenter provider? A virtualization management solutions provider? Are you getting into the end-user virtualization space, end-to-end? Meaning apps, data and desktops from a software and hardware perspective?

Posted by Elias Khnaser on 07/09/2012 at 12:49 PM

I can't seem to stop writing about Microsoft. As I have been touting for a while now, the company is in high innovation mode, striking on multiple fronts, and it seems to be in a hurry: acquiring where it needs to, improving where it needs to and building where it must.

Just last week I was discussing whether the company would build or buy a tablet, and then Microsoft unveiled Surface. I still think it will acquire RIM or Nokia. I am leaning toward the latter, as its current stock price is ideal, but we will see.

On the heels of Windows Server 2012, with all the new features of Hyper-V 3, a new SQL Server, the new App-V 5 and an enhanced cloud strategy in which Azure now also focuses on IaaS instead of just PaaS, Microsoft has finally admitted that SharePoint's social capabilities are not good enough for the enterprise. It recognizes that this is an area it desperately needs to improve and that developing something from the ground up would take time, so it acquired Yammer without hesitation.

Where will it fit? Everywhere! For starters, Yammer will layer on top of SharePoint and extend its features to make it more social and enterprise-friendly. After that, Microsoft will go after SkyDrive for the enterprise and extend collaboration features to files. In the words of fellow analyst Jason Maynard, "Files are to collaboration what photos are to Facebook," and honestly I could not have summarized it better.

Microsoft has recognized that both e-mail and file sharing a la SharePoint are not good enough anymore for today's enterprises. Yammer will bring that much-needed collaboration and breathe life into Microsoft's products, including Office.

But what else can Microsoft do with Yammer? Well, how about integration with Lync? That would be a perfect combination. Not only could you collaborate on files in SkyDrive and SharePoint, but you could also launch Lync meetings from within Yammer. It's very similar to how Citrix will integrate Podio with the GoTo family and how Cisco will integrate WebEx with Quad.

Microsoft's move reinforces a notion I have been circulating that collaboration platforms are likely to be the next desktop, where aggregation of resources and applications happens and where collaboration is native. I think Yammer was absolutely an inevitable step for Microsoft and I applaud the acquisition. I also think we are not done seeing consolidation -- Salesforce.com and possibly SAP, IBM and Oracle are due for similar social acquisitions as well.

The Yammer acquisition clearly validates that the enterprise is ready for social business and that desktop virtualization, collaboration and cloud data are slowly converging into a true end-to-end enterprise consumerization strategy.

What are your thoughts on the Yammer acquisition? Is your organization ready for the social enterprise?

Posted by Elias Khnaser on 06/27/2012 at 12:49 PM

IntelliCache is a Citrix XenServer technology that was developed to ease the I/O traffic that VDI environments place on shared storage. It is used in conjunction with XenDesktop Machine Creation Services (MCS).

In a nutshell, IntelliCache caches blocks of data accessed from shared storage locally. For example, if your master Windows 7 image resides in shared storage, the first VM that launches on a particular host will get its bits from that shared storage. At that point IntelliCache kicks in and saves those blocks; for Windows 7, IntelliCache will reserve about 200MB. The subsequent VMs that launch on this host will no longer need to connect to shared storage; instead, the VMs will get their bits from the locally cached copy, and so on.

As new blocks are accessed, they are read once from shared storage and then cached locally. As you can see, IntelliCache can substantially reduce the I/O load on shared storage.
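The read-through behavior described above is easy to model. The Python sketch below is a toy simulation of a single host, not Citrix's implementation: it assumes every VM reads the same set of master-image blocks at boot and counts how many reads reach shared storage versus the local cache. The VM and block counts are made-up illustration values.

```python
class HostReadCache:
    """Toy per-host read-through cache in front of shared storage."""

    def __init__(self) -> None:
        self.cached = set()     # block numbers already copied to local disk
        self.shared_reads = 0   # reads that had to go to shared storage
        self.local_reads = 0    # reads served from the local cache

    def read(self, block: int) -> None:
        if block in self.cached:
            self.local_reads += 1
        else:
            # First access: fetch from shared storage once, then keep it locally.
            self.shared_reads += 1
            self.cached.add(block)


def boot_vms(cache: HostReadCache, vm_count: int, image_blocks: int) -> None:
    """Simulate vm_count VMs on one host, each reading the same image blocks."""
    for _ in range(vm_count):
        for block in range(image_blocks):
            cache.read(block)


host = HostReadCache()
boot_vms(host, vm_count=50, image_blocks=1000)
print(host.shared_reads, host.local_reads)  # 1000 49000
```

Only the first VM's reads touch the array; every later VM on that host is served locally. The model deliberately ignores writes.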

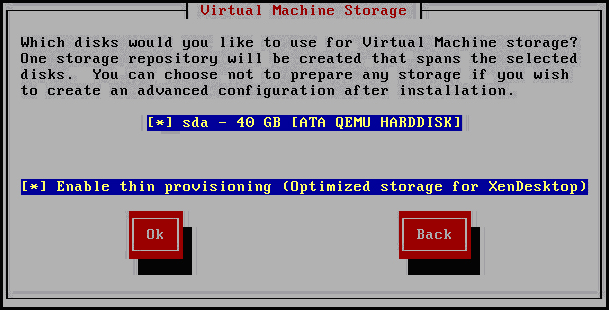

Enabling IntelliCache involves configuration on the XenServer side and on the XenDesktop side. Let's start with XenServer. First, you need to be on XenServer 5.6 service pack 2 or newer. During installation of XenServer, select "Enable thin provisioning (Optimized storage for XenDesktop)"; see Fig. 1. This option will change the local storage repository type from LVM to EXT3.

Figure 1. Enabling IntelliCache during XenServer installation.

Now, for those of you who want to configure an existing XenServer to use IntelliCache, beware that doing so will completely destroy the local storage repository and any virtual machines on it. Remember, you are converting from LVM to EXT3, so everything on it will be lost. I find it easier to reinstall XenServer, but if you can't do that, use the following commands:

localsr=$(xe sr-list type=lvm host=<hostname> params=uuid --minimal)

echo localsr=$localsr

pbd=$(xe pbd-list sr-uuid=$localsr params=uuid --minimal)

echo pbd=$pbd

xe pbd-unplug uuid=$pbd

xe pbd-destroy uuid=$pbd

xe sr-forget uuid=$localsr

sed -i "s/'lvm'/'ext'/" /etc/firstboot.d/data/default-storage.conf

rm -f /etc/firstboot.d/state/10-prepare-storage

rm -f /etc/firstboot.d/state/15-set-default-storage

service firstboot start

xe sr-list type=ext

To enable local caching, enter these commands:

xe host-disable host=<hostname>

localsr=$(xe sr-list type=ext host=<hostname> params=uuid --minimal)

xe host-enable-local-storage-caching host=<hostname> sr-uuid=$localsr

xe host-enable host=<hostname>

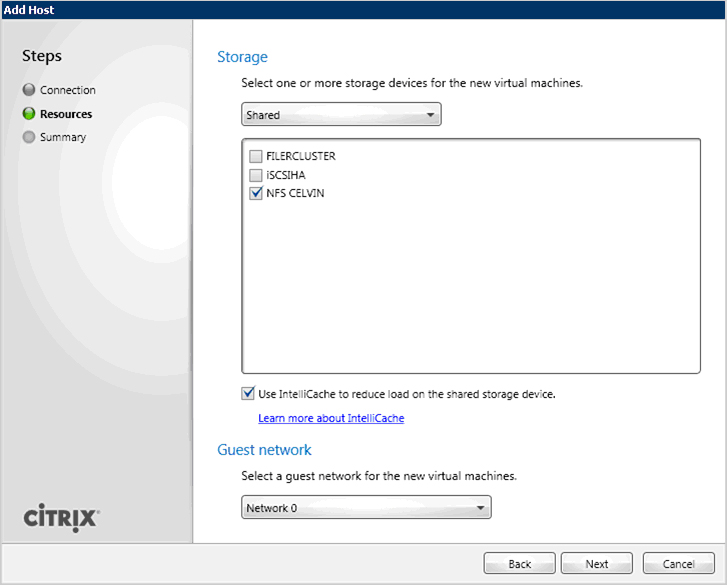

On the XenDesktop side, make sure you are using XenDesktop 5 Service Pack 1 or newer. You will then need to configure the XenDesktop controller to point to the XenServer host; during that configuration you will be prompted to select your storage devices. Make your selections and then check the "Use IntelliCache to reduce load on the shared storage device" box (see Fig. 2).

Figure 2. Configuring XenDesktop for IntelliCache.

Enabling IntelliCache is fairly straightforward. If you are using XenServer with MCS on the XenDesktop side, I strongly recommend that you test, validate and then use IntelliCache in your environment.

Posted by Elias Khnaser on 06/20/2012 at 12:49 PM

Microsoft has traditionally done very well in the hardware business. I can honestly say I like all Microsoft hardware platforms, from the traditional keyboards and mice all the way to the Xbox. Speaking of Xbox, who would have thought that Microsoft would turn the Xbox into such a success, given the stiff competition from Sony and Nintendo at the time? But the company did it.

Microsoft now faces a new challenge: its phone and tablet market share is off the charts (that is, literally off the charts). With Microsoft recently announcing that it will build its own Windows tablet, the question arises: will Microsoft buy a company to build tablets and phones, or will it follow its traditional, very successful hardware approach and build those devices from the ground up?

If Microsoft intends to buy, RIM and Nokia are certainly at the forefront of companies that fit that profile. But what would Microsoft gain from either company? In the larger scheme of things, not much. Microsoft does not care about BlackBerry OS or Nokia's Symbian, and while the hardware from each company is valuable, it may be cheaper for Microsoft to design its devices from scratch rather than port existing designs to Windows.

If market share is the logic behind an acquisition, both BlackBerry's and Nokia's market shares are slipping at rapid rates, so I don't see the point of Microsoft acquiring either company from that perspective. That being said, I did not see the point of Google acquiring Motorola Mobility either, and that happened. So if Microsoft wishes to streamline and jump-start its position in the tablet and smartphone market, I guess an acquisition might do that for it quickly.

One thing is for certain: Microsoft sees a glaring opportunity in the smartphone and tablet space and I wonder if we are on the eve of seeing an Xphone or Xpad? I strongly believe that in order for Microsoft to give Apple a run for its money, Microsoft will have to control the entire stack. That means hardware and software, and that is the only way to guarantee quality, branding and focus -- that is how Apple appealed to so many.

Google appears to have gotten it, and I think Microsoft realizes that while it has historically been very OEM-friendly, it will have to take the approach it did with Xbox if it plans on being relevant in the tablet and smartphone space. This is not to say that the company won't OEM Windows RT for ARM-based systems (in fact, it has already announced pricing for OEMs).

So do I think Microsoft will buy RIM? Do I think it will buy Nokia? No on both counts. I think Microsoft will take the Xbox approach and build its devices internally. What do you think?

Posted by Elias Khnaser on 06/18/2012 at 12:49 PM

Is it arrogant for a company the size of Nutanix to take on the giants of our industry by saying FU-SAN? It most definitely is, but I like it, because that arrogance is backed by a solid technical solution and an innovative perspective: a new way of thinking which says that "monolithic" infrastructures are great but are not the only game in town.

If we look at all the large cloud deployments, such as Google, Facebook and others, we quickly notice that they use commodity hardware in a grid computing approach. That is not to say they don't have shared storage or SANs, but they have many more of these commodity nodes, which together form their compute fabric. These nodes need a layer, a file system, that connects them to yield the desired results.

Nutanix brings that thought process and that type of technology to the enterprise by offering a converged compute and storage cluster glued together by the Nutanix Distributed File System. It is this distributed file system that allows the Nutanix cluster to offer enterprise features that traditionally required shared storage, like HA, DRS or vMotion (live migration, XenMotion). A Nutanix block is a 2U container that holds four nodes (hosts, servers). Each node can take up to 192GB of RAM, dual-socket Intel CPUs and three tiers of storage: 320GB of Fusion-io flash, 300GB of SATA SSD and 5TB of SATA spinning disk.
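To put the per-node figures in block terms, a quick multiplication helps. This Python sketch simply scales the per-node maximums quoted above by the number of nodes; it treats 5TB as 5,000GB (decimal units), which is an assumption, and it reports raw capacity only, before any replication or file system overhead.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class NodeSpec:
    """Per-node maximums quoted above, in GB (5TB taken as 5,000GB)."""
    ram_gb: int = 192
    fusion_io_gb: int = 320   # PCIe flash tier
    ssd_gb: int = 300         # SATA SSD tier
    hdd_gb: int = 5000        # SATA spinning-disk tier


def totals(node: NodeSpec, nodes: int) -> Dict[str, int]:
    """Raw RAM and per-tier storage for a given number of identical nodes."""
    return {
        "ram_gb": node.ram_gb * nodes,
        "fusion_io_gb": node.fusion_io_gb * nodes,
        "ssd_gb": node.ssd_gb * nodes,
        "hdd_gb": node.hdd_gb * nodes,
    }


print(totals(NodeSpec(), nodes=4))  # one fully populated 2U block
```

The same function works for any node count, so it also covers a cluster that grows one node at a time.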

While Nutanix can be used for different use cases and workloads, I am particularly interested in it for desktop virtualization. One of the main barriers to desktop virtualization adoption has been cost and then, to an equal extent, complexity. Nutanix breaks down both barriers, as the cost of entry is very acceptable and the complexity is reduced.

A while back I wrote an article on the cost of desktop virtualization versus physical desktops, and a reader asked me how that would work for smaller organizations. Honestly, at the time it was not going to be as effective as the value proposition I showed at scale. With Nutanix, however, we now have a story for the small organization as well as the large enterprise.

Now, I have maintained for a while that local disk is not the way to go for desktop virtualization, and I have religiously argued against it because all of the solutions that promoted local disk suggested that we don't need enterprise features. My take is that this is not acceptable. I don't want to go backwards, I don't want to lose features and I am not willing to compromise. I also had a lot of reservations about the configurations being suggested, which we will get to in a minute.

So why do I like Nutanix so much? There is nothing special about the hardware configuration: Supermicro computers, Intel inside, Fusion-io cards, some SSD drives and some SATA drives... Big deal, right? I can put that together easily. Well, sure you can, but that is where my reservations come into play. First, in that scenario we lose all enterprise features. Second, SSDs bring technical challenges, ranging from write coalescing to write endurance, that you cannot overcome just by putting hardware together. You need a software layer that addresses all these issues, and that's where the Nutanix Distributed File System comes into play. It enables the enterprise features and also addresses some of the challenges I mentioned. So, do I accept local disk in this configuration? Absolutely.

For many customers, another challenge is that they want to start small with VDI and grow into it. Monolithic infrastructures get cheaper at scale, which is why customers had to buy the infrastructure ahead of time to fit within certain discount ranges. With Nutanix, you have to buy the first fully populated block with four nodes, but after that you can buy a block with a single node in it and scale as you need to. Pretty elegant, if you ask me.

Couple Nutanix with Citrix VDI-in-a-Box or VMware View Enterprise for small or medium-sized organizations, and you have a killer solution. Couple it with XenDesktop or View Premier and, voila, you have a scale-out enterprise solution. Either way, the cost of desktop virtualization drops again. Next argument, please!

Now, I can't write this glowing column in support of Nutanix without finding something I don't like. Today, the only supported hypervisor is VMware ESXi, and while I realize market share is in VMware's favor, ignoring Microsoft Hyper-V is a huge mistake. Since one of the use cases for this solution is desktop virtualization, ignoring XenServer is also not a great idea given Citrix's position in the desktop virtualization market. Having said that, I do recognize that many enterprises deploy Citrix technologies on vSphere; nonetheless, support for vSphere, Hyper-V and XenServer is an absolute must, and I know that Nutanix is working on extending support to the other two hypervisors.

Another welcome feature would be Nutanix array- or block-based replication. Maybe an OEM partnership with Veeam?

I am extremely interested in your opinion of Nutanix. I have several customers deploying it, and I welcome your feedback.

Posted by Elias Khnaser on 06/13/2012 at 12:49 PM

I am finding that a lot of my customers are starting to realize that they can deliver a more cost-effective desktop to users by leveraging Terminal Services with Citrix XenApp, but in most cases they want to provide the same look and feel that Windows 7 delivers rather than that of a server operating system like Windows Server 2008 R2. To that end, Citrix XenApp 6.5 includes an Enhanced Desktop Experience feature that offers the Windows 7 look and feel.

The process of enabling Enhanced Desktop Experience is fairly straightforward, but I have found that many people find it confusing. So, here's a short how-to that I hope makes it much clearer.

First things first: if you don't have a general XenApp Group Policy Object (GPO) with loopback processing configured, this would be a good time to create one and link it to the OU that contains your XenApp servers in Active Directory. Next, open Windows PowerShell from the Administrative Tools menu (as an administrator) and type this command:

Set-ExecutionPolicy AllSigned

This cmdlet sets the PowerShell execution policy, which controls which scripts are allowed to run. In this case, we pass AllSigned, which means that only scripts signed by a trusted publisher are allowed to run on this server.
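If you want to see what the server is set to before and after the change, or the change doesn't seem to take effect, here is a minimal sketch. It assumes PowerShell 2.0 on Windows Server 2008 R2 (the platform XenApp 6.5 runs on) in an elevated session.

```powershell
# Sketch, assuming PowerShell 2.0 started with "Run as administrator".

# Show the policy at every scope before changing anything.
Get-ExecutionPolicy -List

# Set AllSigned explicitly for the whole machine (LocalMachine is also
# the default scope when -Scope is omitted).
Set-ExecutionPolicy -ExecutionPolicy AllSigned -Scope LocalMachine

# A policy pushed by Group Policy (MachinePolicy/UserPolicy scopes)
# overrides this setting, so confirm the effective result.
Get-ExecutionPolicy
```

If Get-ExecutionPolicy still reports something other than AllSigned, a domain GPO is most likely setting the policy for you.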

Next, you'll want to run the script. To do that, switch to the following directory:

C:\Program Files (x86)\Citrix\App Delivery Setup Tools\

and run the following script:

.\New-CtxManagedDesktopGPO.ps1
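For anyone who prefers to script this step, here is a minimal sketch that confirms the script exists and carries a valid signature before running it. The checks are my own additions, not part of the Citrix procedure; under AllSigned, the first run may also prompt you to confirm that you trust the publisher.

```powershell
# Sketch, assuming the default install path shown above and an elevated prompt.
$toolsDir = 'C:\Program Files (x86)\Citrix\App Delivery Setup Tools'
$script   = Join-Path $toolsDir 'New-CtxManagedDesktopGPO.ps1'

if (-not (Test-Path $script)) {
    throw "Script not found: $script"
}

# Under AllSigned the script runs only if its Authenticode signature is valid.
$sig = Get-AuthenticodeSignature -FilePath $script
if ($sig.Status -ne 'Valid') {
    throw "Signature status is $($sig.Status); the script will not run under AllSigned."
}
"Signed by: $($sig.SignerCertificate.Subject)"

# Run the Citrix script from its own directory, as in the manual steps.
Set-Location $toolsDir
.\New-CtxManagedDesktopGPO.ps1
```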

This will create four new GPOs in Active Directory:

- CtxStartMenuTaskbarUser: Enables the Windows 7 look and feel for published desktops.

- CtxPersonalizableUser: Limits Control Panel applets and restricts users from scheduling tasks, installing programs, etc.

- CtxRestrictedUser: Restricts users from changing wallpaper settings or customizing the Start menu or taskbar.

- CtxRestrictedComputer: Restricts access to Windows Update and removable server drives.
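To confirm the script did its job, a quick check like the following lists which of the four GPOs now exist in the domain. It assumes the GroupPolicy PowerShell module (installed with the Group Policy Management Console) is available on the machine you run it from.

```powershell
# Sketch, assuming the GroupPolicy module (GPMC) is installed.
Import-Module GroupPolicy

$expected = 'CtxStartMenuTaskbarUser', 'CtxPersonalizableUser',
            'CtxRestrictedUser', 'CtxRestrictedComputer'

# Display names of all GPOs currently in the domain.
$existing = Get-GPO -All | ForEach-Object { $_.DisplayName }

foreach ($name in $expected) {
    if ($existing -contains $name) { "Found:   $name" } else { "Missing: $name" }
}
```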

Typically, you would link the first two policies, in addition to the general XenApp GPO I mentioned earlier, to the XenApp OU. If you want more restrictive access, you can add the other GPOs or create your own. Of course, after adding these GPOs you'll want to force a Group Policy refresh or wait for the normal refresh interval to elapse so they are applied automatically.
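The linking can also be scripted. The reason the general GPO matters is that the Ctx*User policies contain user settings but are linked to an OU of computers, and those settings only apply there when loopback processing is enabled. Below is a minimal sketch using the GroupPolicy module; the GPO name "XenApp General" and the OU path are hypothetical placeholders, and it assumes the documented loopback registry value UserPolicyMode (1 = Merge, 2 = Replace), with Merge chosen here.

```powershell
# Sketch, assuming the GroupPolicy module (GPMC) is installed.
Import-Module GroupPolicy

# Hypothetical values: replace with your own GPO name and XenApp servers OU.
$generalGpo = 'XenApp General'
$xenAppOu   = 'OU=XenApp Servers,DC=example,DC=com'

# Create the general GPO only if it doesn't already exist.
if (-not (Get-GPO -Name $generalGpo -ErrorAction SilentlyContinue)) {
    New-GPO -Name $generalGpo | Out-Null
}

# Enable loopback processing (1 = Merge, 2 = Replace; Merge assumed here).
Set-GPRegistryValue -Name $generalGpo `
    -Key 'HKLM\Software\Policies\Microsoft\Windows\System' `
    -ValueName 'UserPolicyMode' -Type DWord -Value 1 | Out-Null

# Link the general GPO plus the two typical Citrix user GPOs to the XenApp OU.
foreach ($gpo in $generalGpo, 'CtxStartMenuTaskbarUser', 'CtxPersonalizableUser') {
    New-GPLink -Name $gpo -Target $xenAppOu -LinkEnabled Yes | Out-Null
}

# Then, on each XenApp server, apply the policies now rather than
# waiting for the background refresh interval.
gpupdate /force
```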

One thing I have noticed: if you have previously logged on to the XenApp server and have a cached local user profile, you will want to delete that profile before launching the published desktop once the Enhanced Desktop Experience is enabled.
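You can remove the profile through System Properties > User Profiles, or script it through WMI. Here is a minimal sketch using the Win32_UserProfile class (available on Windows Server 2008 and later); the account name is a hypothetical placeholder, and profiles that are currently loaded are skipped.

```powershell
# Sketch: run elevated on the XenApp server that holds the cached profile.
# Hypothetical account name; replace with the affected user.
$userName = 'jdoe'

# Win32_UserProfile lists local profiles; only delete ones not currently in use.
Get-WmiObject -Class Win32_UserProfile |
    Where-Object { -not $_.Loaded -and $_.LocalPath -like "*\$userName" } |
    ForEach-Object {
        "Deleting profile at $($_.LocalPath)"
        $_.Delete()
    }
```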

Posted by Elias Khnaser on 06/06/2012 at 12:49 PM