One of the things I love about the full vSphere Client is the ability to use shortcuts. I find them very handy and time-saving, especially when I am switching from one area to another and multitasking. Now that VMware is clearly pushing the Web Client, I thought it would be helpful to write about some of the useful ones.

Apparently VMware must have missed documenting these shortcuts, but thanks to William Lam of VirtuallyGhetto I was able to find them and decided to blog them here -- very little comes up otherwise when I do a Web search for these types of shortcuts.

Herewith, the more useful ones:

- Ctrl+Alt+Home or Ctrl+Alt+1: Back to the Home Screen

- Ctrl+Alt+s: Opens Quick Search

- Ctrl+Alt+2: Opens Virtual Infrastructure Inventory

- Ctrl+Alt+3: Takes you to Hosts and Clusters

- Ctrl+Alt+4: Takes you to VMs and Templates

- Ctrl+Alt+5: Takes you to Datastores and Datastore Clusters

- Ctrl+Alt+6: Takes you to Networking

These shortcuts work on both OS X and Windows clients. When I used to administer VMware environments, I loved using shortcuts in the middle of migrations or while fulfilling large VM requests. It's arguable how much time they really save you, but I felt like I was admin'ing faster when using them, and as a result my focus on more important tasks intensified.

How many of you use shortcuts and find this information useful? Share your experiences in the comments section.

Posted by Elias Khnaser on 08/05/2013 at 1:21 PM (1 comment)

Colleagues and customers have been asking me about the future of Citrix Provisioning Services and whether they should be deploying Machine Creation Services (MCS) instead of it. Ironically, in some limited cases some overzealous Citrix sales reps and sales engineers have been propagating the idea of MCS over PVS. So let's set the record straight once and for all: Citrix PVS is not being retired and there are no plans in the foreseeable future to retire it.

How do I know this? The question has come up on the private forums of the Citrix Technology Professional (CTP) program and Citrix has refuted the rumors. With good reason: PVS remains one of the most scalable and robust solutions out there for XenApp and XenDesktop environments.

Now that the rumor has been put to rest, let's take a look at some of the features in the new version of the "undead" Citrix Provisioning Services 7:

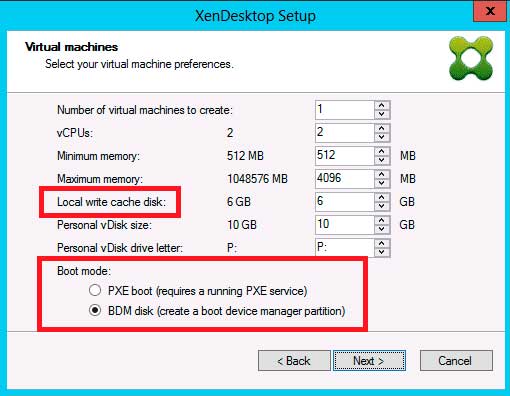

XenDesktop Setup Wizard -- The tool has seen some functionality enhancement and time-saving improvements. Notable is the ability to now add "local write cache disk" from within the wizard, a step that was previously missing but accomplished by adding the vDisk to the image and then having the XenDesktop Setup Wizard clone the disk with the creation of each new VM. So now, the wizard does not have to clone each write cache disk anymore.

Another addition is the ability to choose between PXE boot or a Boot Device Manager (BDM). In many deployments PXE is not permitted, which means BDM can be used as an alternative. The problem was that this had to be done outside of the XenDesktop Setup Wizard. Now Citrix includes the feature in the wizard, which creates a boot partition (see Fig. 1).

Figure 1. XenDesktop Setup Wizard now includes BDM natively.

SMB 3.0 support -- I am a huge supporter of the SMB 3.0 protocol and while this is a function of the underlying operating system, PVS 7 now supports SMB 3.0 shares. SMB 3.0 is a very efficient and performance enhancing protocol.

VHDX support -- When coupled with the latest Hyper-V, you can now use the new and improved virtual disk format of VHDX for your write cache disks and for your Personal vDisks. However, for vDisks created and managed by PVS itself, the VHD format lives on, and Citrix's reasoning is that it still needs to be compatible with Windows Server 2008 R2. What I wonder is, why can't the company give me the option of choosing one or the other? Some work needs to be done here. The VHDX format offers a performance increase, of course, but it also handles alignment automatically, and misalignment can be a cause of significant performance degradation.

Those are some of my favorite new enhancements for PVS 7. While there are more that I have not covered here, this release feels like it was geared to boost Hyper-V and System Center support, with many features and integration points introduced. While this is a very welcome step, as Hyper-V and System Center are most definitely capturing more market share every day, I was a bit disappointed by the VMware functionality enhancements and also by the fact that PVS remains a separate management console and infrastructure. I am looking forward to the day when the PVS console is folded into Studio.

All in all, for those of us who are fans of the PVS technology, rest assured that it lives on and you should not fear a PVS deployment. There is no truth to the rumor that Citrix will be retiring the technology soon, although it may seem that way from the XenDesktop 7 features that highlight MCS more.

Posted by Elias Khnaser on 07/29/2013 at 1:22 PM (0 comments)

It's hard to imagine Microsoft holding on to its current Windows desktop licensing model for much longer. As the company doubles down on cloud and as Azure builds momentum, Microsoft will look to build more services to improve Azure in the enterprise.

In a recent survey that my company conducted internally with customers (mind you, company sizes ranged from 1,000 employees to about 25K employees), about half said DRaaS was their top priority. A surprising 25 percent said they were going for DaaS and the rest were looking at IaaS and some other services. Now I am not claiming that our survey is an indicator of what is going on in the market as a whole, but considering the markets I service, I would consider it to be fairly accurate.

Now also consider that Windows has all but lost momentum as a desktop operating system with consumers and in the enterprise, as evidenced by significantly depressed PC sales reports and also by simply observing the nature of meetings: I can tell you that in 90 percent of the meetings I am in, the Apple MacBook is the dominant laptop.

So how does Microsoft turn this around? Well, I am a huge believer in Microsoft. They have always come from behind and they have always managed to get it right, and I don't think the current situation is doomed. On the contrary, with some creative thinking Microsoft could maintain its pole position in the enterprise. And the answer lies with Azure.

Enterprises like the consumption model and many services have moved to the cloud: e-mail, collaboration. Why not come out with a DaaS model that would work? Here is what I envision.

If Microsoft unlocked Windows desktop licensing with Windows 9 and made it so that when you purchase a Windows 9 license you automatically get a trial 60 VDI instance that runs on Azure, that would be cool right? But it is not a game changer, it's déjà vu -- we kind of have that today with Remote Desktop Services themed to look like Windows 7. But what if as part of that service Microsoft also couples it with a synchronization engine so that the VDI instance running in Azure is a replica of what is running on your desktop or laptop? And what if you could connect to that VDI instance at any time, and if you make changes on your VDI instances, those difference blocks are then synchronized with your laptop or desktop once that is online? I don't know about you, but I would absolutely love that feature.
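To make the "difference blocks" idea concrete, here is a minimal Python sketch of block-level synchronization. It is not a description of any Microsoft product: the tiny block size, the function names and the use of SHA-256 to compare blocks are all assumptions chosen for illustration. The point is only that a sync engine could ship the blocks that changed on the VDI instance rather than the whole image.

```python
import hashlib

BLOCK_SIZE = 4  # hypothetical, tiny on purpose so the example is easy to read


def split_blocks(data: bytes, size: int = BLOCK_SIZE) -> list[bytes]:
    """Cut an image into fixed-size blocks; the last block may be shorter."""
    return [data[i:i + size] for i in range(0, len(data), size)]


def changed_blocks(local: bytes, remote: bytes) -> dict[int, bytes]:
    """Return {block index: new content} for every block where remote differs from local.

    Blocks are compared by hash, as a real engine would do across a network
    so that only digests (not full blocks) need to be exchanged up front.
    """
    local_blocks = split_blocks(local)
    diff = {}
    for index, block in enumerate(split_blocks(remote)):
        old = local_blocks[index] if index < len(local_blocks) else None
        if old is None or hashlib.sha256(old).digest() != hashlib.sha256(block).digest():
            diff[index] = block
    return diff


def apply_blocks(local: bytes, diff: dict[int, bytes], new_length: int) -> bytes:
    """Patch the local image with the difference blocks and trim to the new length."""
    blocks = split_blocks(local)
    for index, block in sorted(diff.items()):
        if index < len(blocks):
            blocks[index] = block
        else:
            blocks.append(block)
    return b"".join(blocks)[:new_length]


laptop_image = b"AAAABBBBCCCC"
vdi_image = b"AAAAXXXXCCCCDD"  # one block changed, one block added while working in the VDI

delta = changed_blocks(laptop_image, vdi_image)
synced = apply_blocks(laptop_image, delta, len(vdi_image))
```

In this toy run only two of the four blocks travel back to the laptop once it is online, which is the whole appeal of syncing differences instead of full images.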

Offer the same engine and products to the enterprise, maybe the synchronization engine becomes part of System Center? That is just one example that I find interesting. I am sure there are so many other features that could be integrated to make it even more attractive, but the bottom line is that Microsoft cannot hold on to market share by simply denying Service Provider access to Windows desktop licensing. And evolving is inevitable, so why not build a service that your consumers want and that would help you sell more Windows licenses and maintain leadership?

We can debate to death whether VDI has a future, but it's a certainty that VDI will be present in some shape in every enterprise. As for physical desktops without cloud integration -- which I consider the new "dumb terminals" -- why not integrate with cloud and evolve the OS?

What are your thoughts? Would you buy a service like the one I just described? Please share in the comments.

Posted by Elias Khnaser on 07/22/2013 at 1:24 PM (8 comments)

I recently had a conversation with a friend who is a VMware architect for one of the largest snack companies in the world. And that company has a very large VMware infrastructure footprint. He called me up with a dilemma and wanted my opinion on how to address it. He is planning and designing an upgrade to VMware vSphere 5.1, specifically to take advantage of single sign-on high availability (SSO HA) capabilities. He has several vCenter servers in the environment, so he is using Linked Mode -- except he wants to use it in multisite mode. Now my friend is well aware of the limitations and requirements of this implementation, but I will list the caveats here in case you are not familiar with them.

Remember, SSO HA is a new feature introduced with vSphere 5.1 and still has a way to go as far as maturation. You should be aware of the following when designing a solution using it:

- To use the SSO in HA, you will need to front-end the SSO servers with a load balancer.

- The admin role is always held by the first SSO server and does not fail over, which means if that server goes offline all services registered to SSO will continue to function properly unless you restart the server or service, at which point they will not come back online until that first server is restored.

- While that server is down you cannot add any new services, of course, and your ability to manage SSO is hindered.
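The caveats above can be sketched as a small Python model. This is purely illustrative: the class and method names are hypothetical and nothing here talks to a real SSO server. It only encodes the behavior described in the list, namely that registered services keep running while the first node is down, that a restart during the outage leaves a service offline, and that new registrations are refused.

```python
from dataclasses import dataclass, field


@dataclass
class SsoCluster:
    """Toy model of SSO in HA mode where the admin role never fails over."""

    primary_online: bool = True
    services: dict[str, bool] = field(default_factory=dict)  # name -> currently running?

    def register(self, name: str) -> None:
        # New services can only be added while the first (admin) node is up.
        if not self.primary_online:
            raise RuntimeError("primary SSO node is offline: cannot register new services")
        self.services[name] = True

    def restart(self, name: str) -> bool:
        # A restarted service only comes back if the primary node is reachable.
        if name not in self.services:
            raise KeyError(name)
        self.services[name] = self.primary_online
        return self.services[name]


cluster = SsoCluster()
cluster.register("vcenter")

cluster.primary_online = False                    # first SSO server goes offline
still_running = cluster.services["vcenter"]       # untouched services keep working
came_back = cluster.restart("vcenter")            # but a restart leaves it down
```

Walking through the outage this way makes the design risk obvious: the load balancer keeps traffic flowing, but the first restart during the outage turns a degraded state into a real one.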

Those points being said, there is a way to promote your secondary SSO node to primary in the event that the primary is not recoverable. There is a file on the primary that you can copy (before it fails, of course) to the secondary, which contains all the necessary information to promote it should you need to do so. It's not a very elegant solution and prone to error.

It is crucial to fully understand these implications in order to properly design your environment.

As I said, my friend was aware of these limitations and was asking for alternatives. I then suggested that he use vSphere High Availability instead, as that will give him more than adequate protection against host failures and will restart the VMs in a very acceptable timeframe. But he said he wanted to protect against more than just a host failure. Given the size of his infrastructure, his concern is understandable.

I then suggested he use vCenter HeartBeat. I have always been a fan of this technology and it so happens that it supports and protects SSO. It's something that he hadn't thought of, and we ended up discussing and designing his environment to utilize vCenter HeartBeat to protect all vCenter components and plugins against operating system and application failures (among other things), and he was able to use Linked Mode vCenters and multisite SSO. Heck, he could have designed this out further if he wanted to and account for disaster recovery by designing the environment for failover and failback as well, where he would have HeartBeat protect the critical components at both his production and DR sites.

I wanted to share this story with you because I want to highlight the fact that while VMware continues to innovate and deliver excellent features in each release, sometimes it is a good idea to be cautious, to take a step back and fully understand that some of the features you're planning on implementing are at generation 1 and you may want to use alternative -- but just as effective -- solutions.

I'm very curious to know how many of you are considering SSO in HA mode and if you have taken these caveats into consideration and whether or not you find HeartBeat as a viable alternative. Or let me know if vSphere HA is enough in your situation. Please share in the comments section.

Posted by Elias Khnaser on 07/10/2013 at 1:24 PM (0 comments)

One of the more exciting features of Microsoft Windows Server 2012 R2 Hyper-V is Storage Quality of Service. QoS enables IT admins to control the minimum and maximum number of IOPS a virtual machine can consume. This is an important feature in the cloud era for many reasons.

For starters and to tackle the obvious, we want to be able to control the "noisy neighbor" VMs. This is a situation when a particular VM for a number of reasons is consuming most of the IOPS and limiting the available IOPS for the rest of the VMs, thereby degrading performance.

Controlling IOPS is important for multi-tenant purposes, but another equally important use case could be to set a price band for the availability of IOPS to VMs. For instance, you might be charged by your cloud service provider based on the amount of IOPS you request. Storage QoS provides a mechanism for them to limit the IOPS allowed and to charge back accordingly. Conversely, on your private cloud you can do similar tasks by guaranteeing a number of IOPS for a particular VM. This is especially useful as you are starting your cloud journey, since the different departments in your organization are used to paying for their own hardware and having complete and uninterrupted access to it. Storage QoS is a great tool that you can use to provide them with a similar guarantee and also report against it to prove that they are taking maximum advantage of resources.

Storage QoS allows you to set the maximum number of IOPS per virtual hard disk, as well as a minimum. The idea here is that you can reserve a minimum number of IOPS below which you are saying that the VM would not be running optimally. When a VM is not getting the minimum number of IOPS, it flags a warning message alerting you to take corrective measures. If you are able to accurately pinpoint the minimum number of IOPS that a VM needs and configure it accordingly then I guess this would be pretty useful.
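As a rough illustration of how the two settings interact, here is a minimal Python sketch. It is a model, not Hyper-V's implementation: the `DiskQos` class, the `evaluate` function and the rule that a warning fires only when the disk wanted more than it received are my assumptions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DiskQos:
    """Per-virtual-hard-disk settings (hypothetical names)."""

    name: str
    minimum_iops: int  # below this the VM is considered not to be running optimally
    maximum_iops: int  # hard cap enforced by throttling


def evaluate(disk: DiskQos, demanded_iops: int, available_iops: int) -> tuple[int, Optional[str]]:
    """Return (IOPS granted, warning text or None) for one sampling interval."""
    granted = min(demanded_iops, disk.maximum_iops, available_iops)
    warning = None
    # Assumption: only warn when the disk wanted more than it got and fell under its minimum.
    if granted < disk.minimum_iops and demanded_iops > granted:
        warning = f"{disk.name}: {granted} IOPS is below the configured minimum of {disk.minimum_iops}"
    return granted, warning


sql_disk = DiskQos(name="sql-data.vhdx", minimum_iops=100, maximum_iops=500)

capped = evaluate(sql_disk, demanded_iops=800, available_iops=1000)   # throttled to the maximum
starved = evaluate(sql_disk, demanded_iops=800, available_iops=60)    # falls under the minimum
idle = evaluate(sql_disk, demanded_iops=50, available_iops=1000)      # quiet disk, no warning
```

Note that the minimum does nothing on its own here except trigger the warning; actually honoring it requires something else to give up IOPS.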

The thing I don't like about this implementation at this stage is that IO throttling is limited to the Hyper-V host and the VMs that live on that host. Hyper-V contains a technology known as the QoS IO Balancer, which is responsible for throttling the IO and ensuring that each VM's reserves are met. The problem is, because this IO Balancer is implemented at the Hyper-V host and because your storage is most likely shared storage accessible by multiple Hyper-V hosts, it is very possible that a VM living on a different Hyper-V host, which isn't governed by your host's IO Balancer, would consume more IOPS and starve your VMs. To get around the limitation (sort of), alerts will kick in when a VM is not able to consume its minimum required IOPS. This really explains why the minimum field even exists in this implementation: it is there so that alerts can go off when a VM is starved for IOPS, given that the IO Balancer resides at the host level.
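A small Python simulation shows why host-level throttling is not enough on shared storage. Everything in it is an assumption made for illustration: the `Vm` class, the numbers, and especially the policy that an oversubscribed array splits its capacity in proportion to demand.

```python
from dataclasses import dataclass


@dataclass
class Vm:
    host: str
    name: str
    demand: int   # IOPS the VM would like to consume
    minimum: int  # Storage QoS minimum
    maximum: int  # Storage QoS maximum


def simulate(vms: list[Vm], array_capacity: int) -> tuple[dict[str, int], list[str]]:
    """Return (IOPS granted per VM, names of VMs that raise a minimum-IOPS alert)."""
    # Step 1: each host's balancer caps only the VMs that live on that host.
    wanted = {vm.name: min(vm.demand, vm.maximum) for vm in vms}
    total = sum(wanted.values())

    # Step 2: the shared array knows nothing about QoS. Assumed policy:
    # when oversubscribed, capacity is split in proportion to demand.
    if total <= array_capacity:
        granted = dict(wanted)
    else:
        granted = {name: iops * array_capacity // total for name, iops in wanted.items()}

    # Step 3: the only defense left is an alert when a VM misses its minimum.
    alerts = [vm.name for vm in vms if granted[vm.name] < vm.minimum]
    return granted, alerts


vms = [
    Vm(host="host-a", name="sql", demand=400, minimum=300, maximum=500),
    Vm(host="host-b", name="noisy", demand=1600, minimum=0, maximum=10_000),
]
granted, alerts = simulate(vms, array_capacity=1000)
```

In this run the VM on host-a never exceeds its own cap, yet it still ends up under its minimum because host-a's balancer has no say over what host-b sends to the same array.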

The final analysis is that Storage QoS is a very welcome step for Hyper-V, but I am looking forward to a bit more enhancement in this area in the near future.

Posted by Elias Khnaser on 07/01/2013 at 1:22 PM (1 comment)

It was the summer of 1997 and I was a computer enthusiast trying to find my place in the technology world. I had just landed my second consulting job as a Windows NT specialist deploying servers for a real estate investment trust in Chicago.

About a week into the job I started to notice these cool-looking minicomputers that I later understood were thin clients. I was intrigued by this little device and very curious as to how it worked, so I started to do some digging. Since I was in the same work area as the admins supporting the system, I started to listen in on challenges, help desk complaints and more, and was just fascinated by the whole concept of multiple, isolated users on the same server.

But the feature that captured me -- and this will read funny to most of you today, but it was so true back then -- was “shadowing.” The ability to remote control the user's session? That was so cool, James Bond-like, and I could connect to a disconnected session. I was hooked.

I knew at that moment that the way to differentiate myself from the army of technicians that Microsoft was building was to find my niche. And Citrix was that niche.

I started helping out by taking some help desk calls that no one else wanted to deal with, or helping out those annoying and difficult users. It was an experience that taught me so much. Later, I borrowed the bits and installed the software on my home lab and got started using Citrix WinFrame with Windows NT 3.51 (the version being used at the time). I stayed with this REIT for about a year and upgraded to MetaFrame 1.8, if my memory serves me correctly. From then on my consulting career and my professional career were built around Citrix.

A few years later as I was researching an issue online, I came across a user on a forum with a challenging issue that I had seen and I immediately replied. Apparently, an acquisition editor for Syngress was scouting these forums in search of authors, came across my response and sent me an e-mail asking if I would like to write a chapter in a Citrix book she was working on. What? Me, author? The thought had never occurred to me, but I was always up for a challenge. I thought the worst that could happen was she would not like my writing style and that would be the end of it. I ended up writing six chapters in that book, literally half of it. From then on my writing career took off and I started writing books, blogs and white papers, some for profit and some for recognition and exposure.

In the following years I needed a new challenge, a new hill to conquer, and I was very impressed with Dan Charbonneau and his CBTnuggets. Dan had developed a new method of delivering digital content in small nuggets, basically learn-at-your-own-pace training videos. I had seen his NT series and found his other stuff intriguing.

CBTnuggets was growing and attracting new instructors and covering new topics, so I had the idea of developing a Citrix MetaFrame XP Nugget. I pitched the idea to Dan, even though I'd never spoken into a microphone -- trust me, it's weird talking for hours to yourself with no audience interaction and having to anticipate what will maintain student interest. Dan graciously helped during this period and CBTnuggets published the very first Citrix computer-based training for MetaFrame XP. It was a smashing success; I would meet people and they would recognize me just by my voice. I have made a habit, it seems, of being the first to publish content in specific formats. I was also the first to publish a VMware ESX 3 training course. This period of my life got my training and public speaking career started.

In later years I would be introduced to vMotion, which had the same effect on me as Citrix's “shadowing” did back then and I immediately fell in love, head over heels with VMware.

I wanted to share my story with you today as a tribute to Ed Iacobucci, Citrix's co-founder, who passed away last week. I never met Ed, but I've introduced him to many of my students in my training courses, most recently in my TrainSignal Citrix XenApp 6 course. I'd say Ed is responsible for jump-starting my career on so many levels. Last year, I was so happy when I followed him on Twitter and he followed me back. To me, it was truly an honor. Ed Iacobucci, a true visionary who was ahead of his time, is following me on Twitter. It doesn't get any better than that.

With Ed Iacobucci's passing, a big star has fallen out of the technology sky. Thank you for everything you have done for me and my career and may God accept you in his heavens and may your soul rest in peace.

Posted by Elias Khnaser on 06/24/2013 at 3:24 PM (1 comment)

One of the new features of XenDesktop 7 and XenApp 6.5 Feature Pack is Local App Access, a technology you might be familiar with under the name "reverse seamless" that RES Software has had in its software for some time now. My understanding is that Citrix and RES have come to some kind of arrangement that satisfies the legal issues. That being said, it is worth mentioning that Citrix Local App Access is an application that was created from the ground up by Citrix developers -- no code was leveraged from the RES Software implementation.

For those of you that are not familiar with this technology, Local App Access is quite interesting in that it allows applications that are installed on the end point to be available within your VDI or XenApp session seamlessly. Since these apps are simply being channeled into the sessions, no server-side resources are consumed at all.

You might be wondering where this could be helpful or useful. For starters, my interest in local app access is that it eliminates double-hop scenarios with HDX. Today, when you deploy XenApp applications into a XenDesktop VDI session and launch those apps, you are initiating a new HDX session from your VDI desktop to that application. But remember, you already have an HDX session to your VDI, so you end up having an HDX session within an HDX session. It performs well, but imagine if we could eliminate that second hop. So, how do you do this?

Well, Citrix Receiver is installed on your end point, which is required to launch a XenDesktop VDI, right? So why can’t I launch XenApp applications directly from the end point and then use Local App Access to inject the app into the VDI session? You will, of course, have two HDX sessions to the end point, but you no longer have a session within a session. As a result your performance will be significantly enhanced.

Another good use case for Local App Access is where you might need to run apps that still do not work in a virtual environment or are not supported. You can then channel the app and inject it via Local App Access, thereby making it seamless and less confusing for the user; that way, users won't have to leave the VDI session to launch any other application.

Consider local devices on the end point as well, like DVD drives or other peripherals that can now also be accessed from within the VDI session. Or take it one step further with applications like WebEx or GoToMeeting, which can also be leveraged with some of their bandwidth-intensive requirements, especially if you are using video conferencing. While the new HDX does a great job with video, Local App Access might be an option. Finally, another option is for employees or users who bring their own devices and want to use their local applications within the session without compromising the policy or security that you have established. A good example here is iTunes, an application that you would typically not allow on your desktops. Now, users can use it if it is installed on their local machine.

I am interested in hearing about some scenarios where Local App Access could be useful in your environments.

Posted by Elias Khnaser on 06/19/2013 at 1:26 PM (2 comments)

Citrix continues to present enterprises options for dealing with new challenges and scenarios that they are having to deal with on a daily basis. And while we are reminded every day of the increasingly retracting PC sales, Apple's notebook seems to be disassociating itself from this trend as Mac sales seem to be going up. Hence, consumerization. Enterprises today are finding themselves having to deal with Mac BYO, and while there are many different ways of addressing Windows resources on Macs, they generally all require online access.

At Synergy 2013 in Los Angeles, Citrix unveiled a new product called DesktopPlayer for Mac, a type-2 hypervisor that enables Windows virtual machines to run on the Mac. The difference between type-1 hypervisors and type-2 hypervisors is that the first is installed directly on the hardware, beneath the operating system, while the latter is installed as an application on the operating system.

But what is so special about DesktopPlayer? We have had this type of technology for a while now with VMware Fusion, Parallels and others. The one differentiator is that DesktopPlayer is an enterprise-class tool which allows IT admins to centrally manage VMs that are running on DesktopPlayer. Citrix expanded the management of its type-1 client hypervisor XenClient to DesktopPlayer by leveraging the management server Synchronizer, which addresses the questions of provisioning, updating, management and patching of VMs.

I know many of you are probably asking if DesktopPlayer can be deployed without the management server. Technically, yes. Another question that I have been asked is, can DesktopPlayer be deployed on Windows host machines instead of Macs? At this point, the answer is no, but if I was a betting man I would say that it will evolve to cover that scenario as well, simply because it can. DesktopPlayer has some other attractive enterprise security features like encryption, lockout and remote wipe.

DesktopPlayer is in line with Citrix's strategy to deliver different solutions for the many ways users work while weaving them together under a single platform. What I hope to see in future versions is the ability for Synchronizer to sync with a VDI instance in the datacenter, so that if I want to connect to a VDI instance while away from my Mac device, I can. That should be part of weaving the platform and products together.

So how do you get DesktopPlayer? Well, if you have XenDesktop Enterprise or Platinum editions, then you automatically get access to DesktopPlayer. If you have XenClient Enterprise then you also get DesktopPlayer automatically.

Do you find DesktopPlayer a useful technology that you can leverage in your enterprise today? I am very interested in specific use cases and in your feedback in the comments section.

Posted by Elias Khnaser on 06/12/2013 at 1:25 PM (3 comments)

There are so many conferences and so many announcements happening that it is hard to prioritize what to cover. We just came out of the best Citrix Synergy ever and I have so much content to cover still, and we're already deep into Microsoft TechEd and I am just surprised at the amount of new technologies and features that I will have to cover there. Well done, Microsoft. All the while Dell has some interesting announcements that I want to cover and we will soon be heading to VMworld. What I need is to stretch the calendar year for more days and start writing more frequently.

Back to TechEd 2013. If you have been following the show, the announcements are really cool and what I am particularly interested in are Windows 8.1 and Windows Server 2012 R2 -- especially Hyper-V features, VMM 2012 R2, heck a lot of products from Microsoft.

This time, let's look at my four favorite new features of Hyper-V:

Shared VHDX

Probably the best feature of this release by my analysis, clustering has always been a complicated subject in VMs and for many customers of Hyper-V the question was always, why can't I share the VHDX? Well, now you can and to make it even better you don't need to have an iSCSI or FC SAN to do so. Now, you can accomplish this by placing the VHDX on a file server share if you want and share the VHDX that way, thereby significantly simplifying the process and the requirements. For those that are wondering if you can live migrate VMs, the answer is yes. For those that are wondering if you can storage live migrate, the answer is no.

Faster Live Migration

Live Migration is such an important part of any administrator's worklife, in some cases it can be the determining factor on whether or not you are going to make your evening commitments and a social life. Windows Server 2012 R2 has two new improvements for Live Migration. The first is enabled by default and is compression based. The way it works is by analyzing the host for CPU utilization and if CPU cycles are available, they are used to compress the VM. As a result, you're sending fewer bytes over the wire and live migrating the VM twice as fast as you would without compression.
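The compression idea can be sketched in a few lines of Python. This is not how Hyper-V implements it: the idle-CPU threshold, the function name and the use of `zlib` are assumptions picked only to show the trade of spare CPU cycles for fewer bytes on the wire.

```python
import zlib

CPU_IDLE_THRESHOLD = 0.30  # hypothetical: compress only if at least 30% of CPU is idle


def prepare_for_transfer(memory_pages: bytes, cpu_idle_fraction: float) -> tuple[bytes, bool]:
    """Return (payload to send, whether it was compressed)."""
    if cpu_idle_fraction >= CPU_IDLE_THRESHOLD:
        # Spare cycles are available: spend them to shrink the payload.
        return zlib.compress(memory_pages), True
    # Host is busy: send the pages as they are rather than steal CPU from the VMs.
    return memory_pages, False


# VM memory tends to contain zeroed and repetitive pages, which compress well.
pages = b"\x00" * 4096 + b"guest data " * 200

payload, compressed = prepare_for_transfer(pages, cpu_idle_fraction=0.80)
busy_payload, busy_compressed = prepare_for_transfer(pages, cpu_idle_fraction=0.05)

# The destination host restores the original pages before resuming the VM.
restored = zlib.decompress(payload)
```

How much faster the migration gets depends entirely on how compressible the memory is and how much CPU is free, which is why the decision is made per host at migration time.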

The second form of faster live migration -- "freaky fast live migration" -- requires newer hardware in order to make it work. For this type of migration, you will need network adapters that support RDMA. This option is called SMB Direct because it leverages SMB 3.0 -- specifically, the multi-channel capabilities of SMB 3.0 -- and you can expect 10x improvements. That is pretty darn fast. Microsoft recommends that if you have 10Gb or better use SMB Direct; for everything else, use the compression method.
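The guidance in the paragraph above boils down to a simple decision, sketched here in Python. The function name and return strings are hypothetical; the rule itself combines the RDMA requirement and the 10Gb recommendation from the text.

```python
def choose_live_migration_option(link_gbps: float, has_rdma_adapters: bool) -> str:
    """Pick a live migration performance option from the link speed and NIC capability."""
    # SMB Direct needs RDMA-capable adapters and is recommended at 10Gb or better.
    if has_rdma_adapters and link_gbps >= 10:
        return "SMB Direct"
    # For everything else, fall back to the default compression method.
    return "Compression"


fast_site = choose_live_migration_option(link_gbps=10, has_rdma_adapters=True)
branch_office = choose_live_migration_option(link_gbps=1, has_rdma_adapters=False)
```

A helper like this is only useful as a planning aid when auditing hosts; the actual option is set in the Hyper-V host settings.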

Replica to Third Site

One of the features that we all loved about Hyper-V is the replica feature, except it was limited to one replica. While that might be sufficient in some cases, I have noticed that most of my customers would always want the ability to replicate a copy locally and a copy to a third site. In some cases, customers wanted to replicate a copy to another datacenter and then one to the cloud. Windows Server 2012 R2 Hyper-V allows you to do just that, enabling enterprises and service providers to satisfy replica needs. Many of my large enterprise customers will be happy to see this feature.

VM Direct Connect

Anytime Remote Desktop Services are used to enhance an architecture, you will see thumbs up from me. What can I say? I am a Terminal Services old timer. One of the annoying things with Hyper-V has been the inability to do rich copy and paste between the VM console and the desktop that you are connecting from. That included just clipboard copy and paste and also file copy and paste. In addition, there was no USB support available, so the user experience was not the greatest.

In this new R2 release, Microsoft has reconfigured the architecture to use RDS, even without a network connection. You are probably wondering how that is even possible, considering RDS requires an IP address and the network for proper connectivity. Well, the new technology that is enabling RDS without network connectivity is leveraging the VM Bus. Now your VM console connection will enable rich copy and paste, file copy and paste, and USB support leveraging RDS technology, all with no network connectivity requirements. That is pretty sweet.

I'll follow up in the weeks ahead with more technical detail on each, but I'll stop there for now and see if we can start a conversation on your favorite features from this list or other features that I have not covered. There are plenty more to talk about, so I'd love to hear from you in the comments section.

Posted by Elias Khnaser on 06/05/2013 at 1:31 PM (2 comments)

Microsoft has been on a roll since the announcement of System Center 2012, and product after product it has kept proving it. At TechEd this week, I am very pleased with the innovation, the thought leadership and the announcements that Microsoft showed. With that, let's talk a bit about Windows 8.1 and Windows Azure, as they are the highlights of day 1. These are not the only cool announcements today, but for this column I am going to limit it. I'll cover more announcements in the weeks to come.

Let's start with Windows 8.1, which will be previewed in June and generally available later this year. The new features? I think you will like them. There's nothing mind-blowing or game-changing but small changes will hopefully make users a little less standoffish towards the new Windows 8 interface.

The button is back, except it isn't your traditional Start button that opens a start menu in the traditional way. It's merely a start button that can take you to the Metro-style start screen. It's not a huge change but some people might find it handy to have a start button.

Boot to desktop is a new feature that will allow you to boot up directly to the desktop as opposed to booting to the Metro Start screen. This is available today, of course, but through a bunch of tweaks.

Desktop applications open in desktop mode, which means when you click a file such as a Word or PowerPoint from the desktop and it will open the application in desktop mode as opposed to opening in Metro mode.

Admins will also be able to customize the start screen, limit the number and type of applications, and so on. This is a welcome features that empowers IT admins to control the user experience for locked down and managed workstations.

Those were the Windows 8.1 highlights. Let's move on to some of the Azure updates and, boy, are there a lot of announcements for Azure. Azure is front and center in the entire Microsoft vision, and that is a refreshing and welcome step.

Windows Azure Active Directory allows you to quickly extend your internal Active Directory to the Azure cloud, which lets you implement single sign-on very quickly and integrate with other services such as Windows Intune (to manage devices) and Office 365. This will also be a catalyst for third-party providers to leverage Azure-extended AD for their own services. If you have a different MDM solution such as AirWatch, XenMobile or MobileIron, maybe it will be able to integrate easily and quickly.

Another cool integration point with Azure is Intune integration with System Center Configuration Manager, which will allow you to manage your corporate PC devices and mobile devices with Intune, whether they're corporate-owned or BYO.

One of my favorite demos was the "Workplace join device." While I am not a believer that users with mobile devices, especially in a BYO model, will want to enroll their devices, the ability to self-join your device to the domain is really cool. The idea behind this technology is that if you are connecting from an unmanaged mobile device and you want to get access to corporate resources, it is not enough to have an Active Directory user account -- you must enroll your device. To do that, you configure the device to join a workplace by providing your e-mail address. But wait, there's more! In order to make this more secure and enable two-factor authentication, Microsoft is leveraging the power of Azure to actually call you and authenticate you over the phone. Basically, after you have entered your e-mail address, the system will prompt you for a phone number where it can call you and ask you a security question or have you take an action in order to completely authenticate the device.

Once the device is authenticated, you will have access to your corporate resources based on your AD credentials. Now, in the event that you want to unsubscribe your device, you can follow the same steps. Once unsubscribed, you lose access to all corporate resources while all personal resources are preserved. Pretty cool, but I think BYO will lean more toward Mobile Application Management than toward Mobile Device Management.

Another really cool announcement was Windows Azure Pack. It gives you the ability to layer Azure-like portals and self-service experiences atop your System Center infrastructure, thereby unifying the user experience between Azure service consumption and on-premises service consumption. It's a huge benefit, as I love the Azure interface and I dread the self-service interface from App Controller and Service Manager. Windows Azure Pack will basically bring Azure technology to System Center private cloud deployments, not only to unify the experience but also to enable admins to build Azure-like environments locally.

Staying with the Azure announcements, Microsoft announced some billing changes that include per-minute billing as opposed to per-hour billing. This is beneficial because previously, if you had used 40 minutes, it would be rounded up to an hour. Now, if you use 40 minutes, you get billed for only 40. This is huge, especially for test/dev.
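To make the rounding difference concrete, here is a minimal sketch of the two billing models. The function names and the hourly rate are hypothetical and chosen only for illustration; they are not Azure's actual prices or API.

```python
# Hypothetical hourly rate, used only for illustration (not an actual Azure price).
HOURLY_RATE_USD = 0.12


def billed_minutes_per_hour_model(minutes_used: int) -> int:
    """Old model: usage is rounded up to the next full hour."""
    return ((minutes_used + 59) // 60) * 60


def billed_minutes_per_minute_model(minutes_used: int) -> int:
    """New model: you are billed for exactly the minutes you used."""
    return minutes_used


def cost_usd(billed_minutes: int, hourly_rate: float = HOURLY_RATE_USD) -> float:
    """Convert billed minutes into a charge at the given hourly rate."""
    return billed_minutes / 60 * hourly_rate


if __name__ == "__main__":
    used = 40  # the 40-minute example from the text
    old = billed_minutes_per_hour_model(used)
    new = billed_minutes_per_minute_model(used)
    print(f"per-hour model bills {old} min, per-minute model bills {new} min")
    print(f"cost: ${cost_usd(old):.4f} vs ${cost_usd(new):.4f}")
```

The gap is largest for short-lived test/dev machines that run for a few minutes at a time, which is where the old rounding hurt the most.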

Another billing change is that you will no longer be billed for VMs that are paused or turned off. Prior to this the only way to stop the billing was to delete the VM. This feature is a very welcome step.

Developers will definitely appreciate the ability to use MSDN server licenses on Windows Azure at no charge. Prior to today's announcement, this was not allowed.

If Microsoft does anything right, it is rallying developers. I don't know about you guys, but if I knew that by developing an application on Azure I could potentially win an Aston Martin, I would be all over it. That is exactly what Microsoft is offering developers who build applications on Azure and submit them by September.

Finally, to boost Hyper-V's credibility and capability, Microsoft announced that Windows Azure runs completely and solely on Hyper-V.

With plenty of announcements and more to come, I do want to stop here and take your pulse, see what you liked, what caught your interest the most and have a conversation in the comments section.

Posted by Elias Khnaser on 06/03/2013 at 1:28 PM | 6 comments

This year's Synergy was the most impressive and exciting one I have attended to date. Citrix CEO Mark Templeton delivered an epic keynote assisted by technology's most impressive "illusionist," Citrix Demo Officer Brad Peterson. I really like Mark and I really respect all his efforts to transform Citrix from a single-product, extremely Microsoft-reliant company, to a company that can stand on its own with several impressive products in mobility, cloud and networking.

Mark made a ton of announcements and talked about some technologies during his keynote and I will cover all the new stuff in this blog in the weeks to come.

Today, I want to focus on the NVidia hardware-assisted GPU virtualization, which is the coolest technology to hit XenServer and XenDesktop 7.

For the longest time, one of the biggest barriers to full desktop virtualization has been that certain graphics-intensive workloads were not ideal candidates for virtualization. Many workarounds and options existed, but realistically none of them were truly viable solutions. That is, until NVidia came along with its new hardware-assisted GPU virtualization technology, which XenServer and XenDesktop 7 now support. While Citrix was first to adopt it, I am pretty sure that Microsoft and VMware will have support for it in the near future.

To understand the significance of NVidia's technology, it is important for us to understand the many different ways video is rendered for virtual machines, as follows:

- CPU-rendered graphics -- This is the traditional approach where the virtual machine leverages software to render the graphics on the physical CPU. It works something like this: Data flows from the physical GPU to the GPU memory, through the graphics driver and graphics API, into system memory, and then the CPU, where it is rendered. It's a slow, multi-step process that places a heavy load on the CPU and limits scalability and adoption of graphics-intensive applications.

- GPU pass-through -- To get around some of the inefficiencies of the traditional approach, hypervisors began supporting GPU pass-through directly to the VM. The problem here is that it is a 1:1 relationship between the VM and the physical GPU. This means scalability is limited to the number of supported physical GPUs on the host server and the ability of the hypervisor to support multiple GPU pass-throughs. Again, it's a good step in the right direction, but still very limited.

- API Intercept (software-based shared GPU) -- This is essentially an attempt at virtualizing the capabilities of the GPU and delivering it out to numerous sessions. In essence, this is the first attempt at GPU-sharing, except it is rendered entirely in software. The result is well suited for low- to medium-sized workloads such as Windows Aero, Google Earth, Office 2010 and even certain games and applications, albeit on a test basis. Nonetheless, this approach also is limited in scalability and most certainly does not address graphics-intensive and CAD applications.

- Hardware-assisted GPU -- Finally! NVidia delivers the first truly hardware-assisted GPU sharing technology that allows multiple VMs to share the physical GPU directly without putting any significant load on the CPU and without rendering in the CPU. The data flow goes from the physical GPU to the GPU memory and is rendered immediately and delivered to the end user, bypassing the driver, graphics API, system memory and the CPU. Hardware assistance is not a new concept. Compute went through almost exactly the same cycle as the GPU, beginning with fully virtualized CPU and going all the way to hardware-assisted CPU.

NVidia will offer its GRID server cards in two flavors. The K1 graphics adapter packs four lower-powered, Kepler-based GPUs and 16GB of DDR3 memory, supporting up to 32 VMs. The number of graphics cards you can install in your server determines the total number of VMs or sessions supported, but it is safe to assume that at least two graphics adapters can be installed, for a total of 64 users. The K2 is geared towards even more hardcore users and packs two higher-end Kepler GPUs and 8GB of GDDR5 memory, which can support up to eight VMs (and again, the number of cards you can install in your host determines the total number of VMs supported).
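The density math above is easy to sketch. The following is a rough sizing aid that uses only the per-card figures quoted in this post; the `GridCard` class, the helper names and the two-cards-per-host default are illustrative assumptions, not anything published by NVidia or Citrix.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GridCard:
    """Per-card figures as quoted in the post (illustrative container, not a vendor API)."""
    name: str
    gpus: int
    memory_gb: int
    max_vms: int


K1 = GridCard(name="K1", gpus=4, memory_gb=16, max_vms=32)
K2 = GridCard(name="K2", gpus=2, memory_gb=8, max_vms=8)


def max_vms_per_host(card: GridCard, cards_per_host: int = 2) -> int:
    """Upper bound on GPU-backed VMs for one host, assuming every card is fully used."""
    return card.max_vms * cards_per_host


def hosts_needed(users: int, card: GridCard, cards_per_host: int = 2) -> int:
    """Hosts required for a user count, rounding up to whole hosts."""
    per_host = max_vms_per_host(card, cards_per_host)
    return -(-users // per_host)  # ceiling division


if __name__ == "__main__":
    for card in (K1, K2):
        print(card.name, "VMs per host with two cards:", max_vms_per_host(card))
```

These are ceilings, not guarantees: real density also depends on CPU, memory and storage on the host, which this sketch ignores.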

This is not only good news for VDI and graphically challenging workloads, it is also great news for cloud-based desktops, games and much more. As this technology matures even more, and as competition accelerates from AMD and others, the price will drop, thereby making this technology a de facto standard in any deployment some time in the future.

Are you excited about this announcement? Do you have workloads that have traditionally been challenging to virtualize? I am very eager to hear from you in the comments section here.

Posted by Elias Khnaser on 05/29/2013 at 1:27 PM | 2 comments

Time has been our greatest teacher and has taught us some valuable lessons as far as what works in virtual desktop infrastructure (VDI).

A question I am repeatedly asked is, "Which vendor should we go with for VDI?" My answer is that they all work and they all have pros and cons. Citrix is most definitely a front-runner, but that doesn't mean you should immediately disqualify solutions from VMware, Microsoft or Dell. Time has taught us that VDI is not an all-or-nothing proposition: it's not for everyone, nor is it right for all of your workloads. Even so, here are some guidelines you can follow when implementing a VDI project.

1. Properly profile your users and workloads.

It's a crucial first step that allows you to identify which vendor's solution is the best for them. The decision is even more critical when looking at vendors with multiple solutions like Citrix, in which case you'd be asking, "Should I use XenDesktop or VDI-in-a-Box?" Properly qualifying workloads, users and future use cases would easily answer this question.

If you want to start small and be able to scale and keep costs in check (and your workloads are VDI-compatible), then go with VDI-in-a-Box. If you later need the scalability and the diversity that FlexCast offers, Citrix offers an upgrade path. If you're a VMware shop with VDI-only workloads, then choose VMware View. There are requirements that need to be qualified, of course, such as WAN scenarios and CAD application scenarios, so in this respect, make sure the applications you own are supported on the vendor's solution you select.

2. Separate VDI workloads from enterprise workloads when it comes to infrastructure, especially storage.

I can't begin to tell you how many times not doing so has resulted in major headaches for organizations that insist on combining the infrastructures. I would also venture to say that you should consider converged infrastructures that are targeting VDI workloads, such as Nutanix and others, which will reduce the TCO and would allow you to scale as needed. It's better than having to purchase large quantities of infrastructure up front when the initial VDI rollout might be for only 400 users.

3. Choose an endpoint that is cost effective, especially when you're choosing thin clients or zero clients.

If you settle on a thin client or even a zero client that costs $500, you will significantly increase your CapEx. The smart thing to do is to choose a device that has a system on chip (SoC); some can be had for less than $250 per unit. I would also urge you to repurpose existing PCs into thin clients. You might be saying, "Then I would need to manage another OS." While that might be true for the most part, it does keep costs down and you're probably already managing other similarly configured physical machines in the environment. These converted PCs would need less management, and as they break down you can then replace them with thins or zeros.

4. Don't ignore the network, especially if the WAN is involved.

Consider that a good user experience needs anywhere from 100 Kbps to 2 Mbps, with a good average of about 400 Kbps. While keeping an eye on storage IOPS is important, network bandwidth is equally important, and keeping network latency at or below 100 ms is also critical: the lower the latency, the better the user experience. You'll find that latency of 200 ms or more degrades the user experience quite a bit, especially for users requiring multimedia.
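As a back-of-the-envelope check, the figures above can be turned into a quick sizing sketch. It assumes the bandwidth numbers are per concurrent user and that all users are active at once; the function names and the "marginal" band between 100 ms and 200 ms are my own labels for illustration, not vendor guidance.

```python
# Per-user bandwidth figures from the text, in Kbps (assumed to be per concurrent user).
MIN_KBPS = 100
AVG_KBPS = 400
PEAK_KBPS = 2000


def required_mbps(concurrent_users: int, per_user_kbps: int = AVG_KBPS) -> float:
    """Aggregate bandwidth in Mbps if every user consumes per_user_kbps at the same time."""
    return concurrent_users * per_user_kbps / 1000


def latency_rating(latency_ms: float) -> str:
    """Classify round-trip latency using the 100 ms and 200 ms thresholds from the text."""
    if latency_ms <= 100:
        return "good"
    if latency_ms < 200:
        return "marginal"  # assumed label for the band the text does not describe
    return "degraded"


if __name__ == "__main__":
    users = 400  # the initial rollout size mentioned earlier in the post
    print(f"average load: {required_mbps(users):.0f} Mbps")
    print(f"worst case:   {required_mbps(users, PEAK_KBPS):.0f} Mbps")
    print("150 ms link is", latency_rating(150))
```

Real deployments rarely see every user peaking simultaneously, so treat the worst-case number as a ceiling and the average as a planning baseline to validate with a pilot.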

All these tips need to be considered before adopting a solution, but let me leave you with one more that is perhaps the most important of all: Choose the integrator that has done this before and can prove to you that it has had successful implementations. It could make all the difference in your VDI world.

Posted by Elias Khnaser on 05/20/2013 at 1:33 PM | 7 comments