How-To

Hyper-V Deep Dive, Part 3: Networking Enhancements

Let's take a look at Hyper-V's virtual machine network enhancements: Single Root I/O Virtualization (SR-IOV), Dynamic Virtual Machine Queue (dVMQ) and Receive Side Scaling (RSS).

In part one, we covered the extensible switch in Hyper-V in Windows Server 2012; in part two we looked at network virtualization. We also looked at System Center Virtual Machine Manager 2012 SP1 and how it helps centralize switch configuration and network virtualization setup. This time, let's take a close look at VM network enhancements, specifically SR-IOV, Receive Side Scaling and QoS.

Single Root I/O Virtualization

Perhaps one of the most interesting additions to the arsenal of network enhancements in Windows Server 2012, SR-IOV has specific uses and limitations that it pays to be aware of when planning new Hyper-V clusters.

In the early days of hypervisor virtualization, Intel and AMD realized that they could provide better performance by offloading certain functions from software onto the processors themselves. These extensions are now known as Intel VT and AMD-V, and they are a requirement for most modern hypervisors. SR-IOV is the same kind of shift, moving network functions from software to hardware for increased performance and flexibility. When you have a server that supports SR-IOV in BIOS, as well as an SR-IOV-capable NIC, the NIC presents Virtual Functions (essentially, virtual copies of itself) to VMs. If you're going to use SR-IOV extensively, be aware that NICs that support it today are limited in the number of virtual functions they can provide; some give only four, some 32, some up to 64 per NIC.
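
When planning around the virtual function limit, it may help to check what a host actually reports. The following is a minimal sketch, assuming the NetAdapter and Hyper-V PowerShell modules that ship with Windows Server 2012; it only reads state and changes nothing.

```powershell
# List the physical adapters that report SR-IOV capability, with all
# of the SR-IOV details the driver exposes.
Get-NetAdapterSriov | Format-List *

# For each virtual switch, show whether SR-IOV is enabled, why it might
# not be supported, and how many virtual functions exist versus are in use.
Get-VMSwitch |
    Format-List Name, IovEnabled, IovSupport, IovSupportReasons,
                IovVirtualFunctionCount, IovVirtualFunctionsInUse
```

If IovSupport is False, the IovSupportReasons text is usually the quickest pointer to whether the BIOS, the chipset or the NIC driver is the obstacle.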

SR-IOV is not needed for bandwidth, as the Hyper-V VM bus can saturate a 10 Gb Ethernet connection, but doing so uses up about one CPU core for the processing. So, if low CPU utilization is required, SR-IOV is your best bet. When latency is crucial, SR-IOV gives you network performance close to bare metal, so that's another scenario where SR-IOV shines.

You do pay some penalties for the benefits of SR-IOV, particularly in the area of flexibility. If you're using the Hyper-V extensible switch and have configured port ACLs and perhaps one or more extensions, these are all bypassed with SR-IOV, as the switch never sees SR-IOV traffic. You also can't do teaming of SR-IOV capable NICs on the host; you can, however, have two (or more) physical SR-IOV NICs in the host, present these to VMs and in the VM create a NIC team out of the virtual NICs for performance and failover.

SR-IOV does work with Live Migration, something VMware hasn't managed in vSphere 5.1. Behind the scenes, for each virtual function Hyper-V creates a "lightweight" team with a normal VM bus NIC. If you live migrate a VM to a host that doesn't have an SR-IOV NIC (or to one that has run out of virtual functions to offer), the VM simply switches over to the software NIC.
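
After a live migration you may want to confirm whether a VM is still using a virtual function or has fallen back to the software path. A hedged sketch follows; "Web01" is a placeholder VM name, and the wildcard is used so that no particular SR-IOV property name has to be assumed.

```powershell
# Show the adapter status plus every SR-IOV related property for the VM's
# network adapters. "Web01" is a hypothetical VM name.
Get-VMNetworkAdapter -VMName "Web01" |
    Format-List Name, SwitchName, Status, Iov*

# On the destination host, check whether the switch has any virtual
# functions left to hand out.
Get-VMSwitch |
    Format-List Name, IovEnabled, IovVirtualFunctionCount, IovVirtualFunctionsInUse
```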

For a more in-depth look at SR-IOV in Hyper-V, as well as troubleshooting tips, look for the blog series by John Howard from the Hyper-V team.

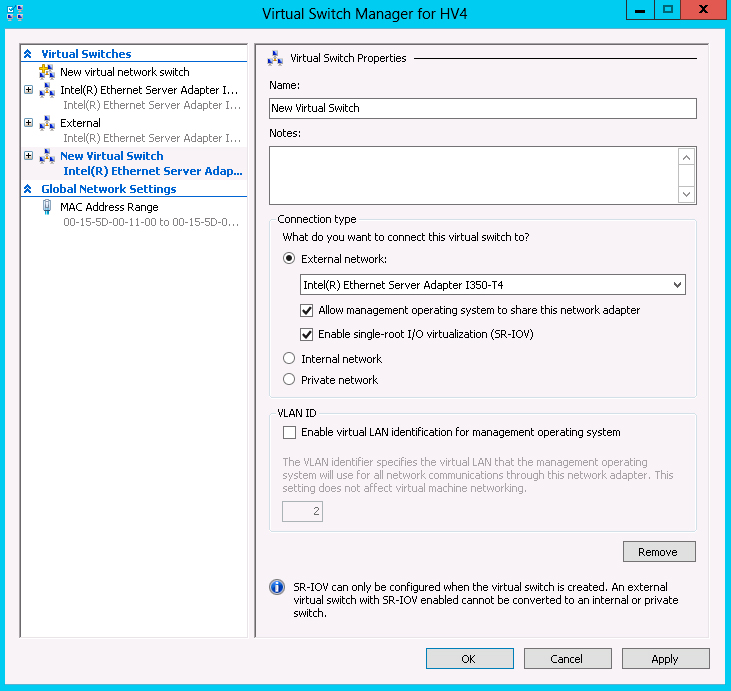

Note that when you create a virtual switch for an SR-IOV NIC, you have to enable SR-IOV at creation time; you can't convert a non-SR-IOV switch afterward.

Figure 1. If your system meets the requirements, enabling SR-IOV is a simple affair.
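
The same setup can be done from PowerShell. This is a sketch under stated assumptions rather than a definitive procedure: "Ethernet 2" stands in for an SR-IOV-capable physical NIC, and "SriovSwitch" and "Web01" are placeholder names for the switch and the VM.

```powershell
# SR-IOV has to be requested when the switch is created; it cannot be
# turned on for an existing switch. All names here are placeholders.
New-VMSwitch -Name "SriovSwitch" -NetAdapterName "Ethernet 2" -EnableIov $true

# Connect the VM's network adapter to the new switch.
Connect-VMNetworkAdapter -VMName "Web01" -SwitchName "SriovSwitch"

# Request a virtual function for the VM's adapter. An IovWeight of 0
# disables SR-IOV for the adapter; values from 1 to 100 request it.
Set-VMNetworkAdapter -VMName "Web01" -IovWeight 100
```

If no virtual function is available, the adapter keeps working over the VM bus, so the request is safe to make even on hosts where capacity is uncertain.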

Scaling Dynamically

On a physical server, Receive Side Scaling (RSS) processes incoming network traffic so that it isn't slowed down by a single CPU core. This is done by spreading the calculations across multiple CPU cores. For a Hyper-V host that has multiple VMs with significant incoming traffic, dVMQ (Dynamic Virtual Machine Queue) does a similar thing to what RSS does for a physical server. The destination MAC address is hashed and traffic for a particular VM is put into a specific queue and the interrupts to the CPU cores are distributed. This is handled by offloading these functions to the NICs.

VMQ is available in Hyper-V in Windows Server 2008 R2, but you have to manage Interrupt Coalescing, which can require a fair bit of manual tuning. In Windows Server 2012, dVMQ is enabled by default and takes care of the tuning and load balancing across cores for you. If it has been disabled for some reason, you can re-enable it in the GUI or via the PowerShell cmdlet Enable-NetAdapterVmq.
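
Checking and re-enabling VMQ from PowerShell might look like the following sketch. "Ethernet 2" is a placeholder adapter name; the cmdlets come from the NetAdapter module in Windows Server 2012.

```powershell
# Show VMQ capability and whether it is enabled on each physical adapter.
Get-NetAdapterVmq

# Re-enable VMQ on one adapter ("Ethernet 2" is a placeholder name).
Enable-NetAdapterVmq -Name "Ethernet 2"

# List the queues currently allocated; this is most informative once
# the VMs on the host are receiving traffic.
Get-NetAdapterVmqQueue
```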

Monitoring and Capturing

One issue with server virtualization and virtual networking is that many traditional troubleshooting processes don't work at all or have to be altered to handle virtualized environments. As mentioned in part one, the new extensible switch in Hyper-V allows you to define a port as a monitoring port (port mirroring), similar to what you can do on a physical switch, to let tools such as Wireshark and Network Monitor capture traffic traversing the switch. Hand in hand with this functionality comes Unified Tracing, exposed through a new parameter, capturetype, for the netsh trace command. It lets you define whether to capture traffic going through the virtual switch (capturetype=vmswitch), through the physical network (capturetype=physical) or both (capturetype=both). For more information on netsh tracing in Windows Server 2012, see Microsoft's netsh trace documentation.
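
As a rough illustration of both techniques, the sketch below mirrors one VM's traffic to a monitoring VM and then starts a unified trace. The VM names "Web01" and "Monitor01" and the folder C:\Traces are assumptions made for the example; the folder must already exist and the commands need an elevated prompt.

```powershell
# Mirror traffic from the VM under investigation ("Web01", a placeholder) ...
Set-VMNetworkAdapter -VMName "Web01" -PortMirroring Source

# ... to a VM on the same virtual switch that runs the capture tool.
Set-VMNetworkAdapter -VMName "Monitor01" -PortMirroring Destination

# Unified Tracing: capture on both the virtual switch and the physical network.
netsh trace start capture=yes capturetype=both tracefile=C:\Traces\vmswitch.etl

# Reproduce the problem, then stop the trace so the file is written out.
netsh trace stop

# Turn mirroring off again when the investigation is finished.
Set-VMNetworkAdapter -VMName "Web01" -PortMirroring None
Set-VMNetworkAdapter -VMName "Monitor01" -PortMirroring None
```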

Port ACLs also allow you to meter network traffic (either inbound or outbound) between a particular VM and a specified IP address range. While this is interesting to do using PowerShell (Add-VMNetworkAdapterAcl -VMName name -RemoteIPAddress x.y.z.v/w -Direction Outbound -Action Meter), I assume that this functionality will be incorporated into overall logging and monitoring solutions such as SCVMM 2012.
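
A slightly fuller version of the metering command is sketched here. The VM name "Web01" and the 10.0.0.0/8 range are placeholders chosen for illustration, not values from any particular environment.

```powershell
# Meter traffic in both directions between the VM and an address range.
# "Web01" and 10.0.0.0/8 are placeholder values.
Add-VMNetworkAdapterAcl -VMName "Web01" -RemoteIPAddress 10.0.0.0/8 -Direction Outbound -Action Meter
Add-VMNetworkAdapterAcl -VMName "Web01" -RemoteIPAddress 10.0.0.0/8 -Direction Inbound -Action Meter

# Read the ACLs back; metering entries should report the traffic counted so far.
Get-VMNetworkAdapterAcl -VMName "Web01"

# Remove a metering entry once it is no longer needed.
Remove-VMNetworkAdapterAcl -VMName "Web01" -RemoteIPAddress 10.0.0.0/8 -Direction Outbound -Action Meter
```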

Top Service Quality

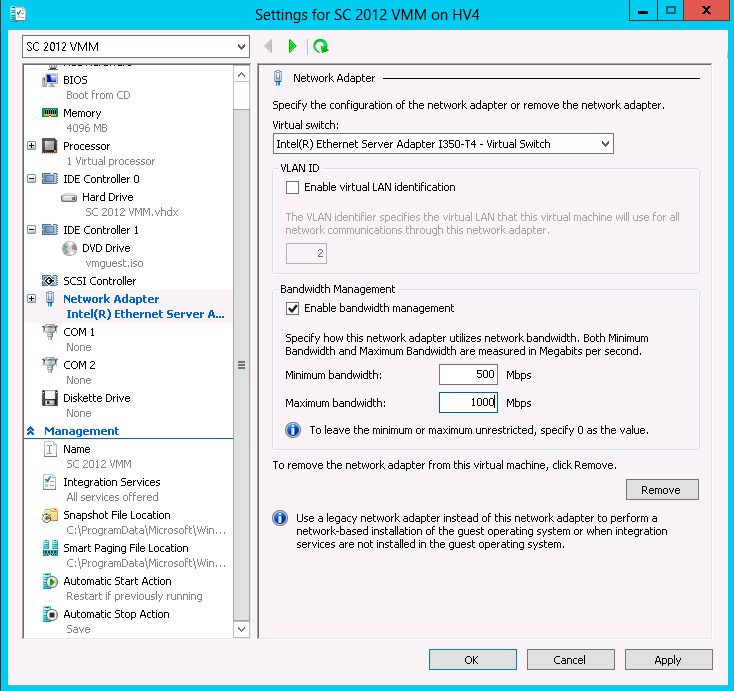

QoS has been enhanced in Windows Server 2012 and in Hyper-V. It offers bandwidth management, classification and tagging, flow control and policy-based QoS. While earlier versions of Windows Server had the concept of maximum bandwidth, version 2012 also offers minimum bandwidth. This means that when there's no congestion, a workload can use up to its maximum allotted bandwidth, but when there's congestion it has a minimum guaranteed pipe. You can use either value or both together, depending on the scenario a VM or a set of VMs is in.

Note that if you're using SMB Direct (Server Message Block Direct), a new feature of Windows Server 2012 that implements RDMA (Remote Direct Memory Access) on compatible NICs for lower latency network traffic with less CPU overhead, QoS is bypassed. In these scenarios you can use NICs that support DCB (Data Center Bridging) for traffic control, similar to QoS. DCB allows eight distinct traffic classes to be defined and given a minimum bandwidth during times of congestion.
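
A DCB configuration for SMB Direct could be sketched roughly as follows. The priority value 3, the 40 percent reservation and the adapter name "Ethernet 2" are illustrative assumptions; the NIC and the physical switches it connects to must support DCB as well.

```powershell
# Install the Data Center Bridging feature on the host (one time).
Install-WindowsFeature Data-Center-Bridging

# Tag SMB Direct traffic (port 445) with 802.1p priority 3.
New-NetQosPolicy "SMB Direct" -NetDirectPortMatchCondition 445 -PriorityValue8021Action 3

# Define a traffic class that reserves 40 percent of the bandwidth for
# priority 3 during congestion.
New-NetQosTrafficClass "SMB Direct" -Priority 3 -BandwidthPercentage 40 -Algorithm ETS

# Turn on priority-based flow control for that priority.
Enable-NetQosFlowControl -Priority 3

# Apply the DCB settings on the physical adapter (placeholder name).
Enable-NetAdapterQos -Name "Ethernet 2"
```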

In earlier versions, you had to build your own traffic classifications; Windows Server 2012 comes with built-in filters in PowerShell to build classifications for common traffic such as iSCSI, NFS, SMB and Live Migration. In addition to the existing IP header-based tagging that builds on Differentiated Services Code Point (DSCP), Windows Server 2012 adds 802.1p tagging, which operates on layer 2 Ethernet frames and can therefore apply to non-IP packets.
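
The built-in filters are exposed as switches on New-NetQosPolicy. The sketch below shows one policy per traffic type; the policy names, weights, priority and DSCP values are arbitrary examples, not recommendations.

```powershell
# Guarantee Live Migration a share of bandwidth under congestion.
New-NetQosPolicy "Live Migration" -LiveMigration -MinBandwidthWeightAction 30

# Tag iSCSI traffic at layer 2 with an 802.1p priority.
New-NetQosPolicy "iSCSI" -iSCSI -PriorityValue8021Action 4

# Mark SMB traffic in the IP header with a DSCP value.
New-NetQosPolicy "SMB" -SMB -DSCPAction 40

# Give NFS traffic a minimum bandwidth weight.
New-NetQosPolicy "NFS" -NFS -MinBandwidthWeightAction 20

# Review the policies that are now defined on the host.
Get-NetQosPolicy
```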

Policy-based QoS uses Group Policy to build and apply QoS policies for your physical networks and hosts, which simplifies deployment and management since you're already using Group Policy to apply other policies. For an in-depth look at using Group Policy for QoS, see Microsoft's policy-based QoS documentation.

For Hyper-V, however, Microsoft has married the QoS piece with the extensible virtual switch. Hosters can adhere to SLAs by controlling minimum and maximum bandwidth per switch port, using either PowerShell or WMI, to ensure predictable network performance. Of course, these features are valuable in a private cloud infrastructure as well, and can be managed using SCVMM 2012 SP1. This fine-grained QoS control also makes it possible to take one or more 10 Gb Ethernet NICs and "carve" them up for storage, Live Migration and VM traffic, similar to how many servers today have dedicated 1 Gb NICs for different types of traffic.
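
Carving up a 10 Gb NIC in this way might look like the sketch below. All names ("ConvergedSwitch", "Ethernet 3", "LiveMigration", "Storage", "Web01") and all weights are assumptions for illustration, and the physical adapter is assumed to be a spare one so that creating the switch doesn't interrupt management connectivity.

```powershell
# Create a switch that uses weight-based minimum bandwidth. The mode is
# chosen when the switch is created.
New-VMSwitch -Name "ConvergedSwitch" -NetAdapterName "Ethernet 3" `
    -MinimumBandwidthMode Weight -AllowManagementOS $false

# Add host virtual NICs for Live Migration and storage traffic.
Add-VMNetworkAdapter -ManagementOS -Name "LiveMigration" -SwitchName "ConvergedSwitch"
Add-VMNetworkAdapter -ManagementOS -Name "Storage" -SwitchName "ConvergedSwitch"

# Give each host virtual NIC a guaranteed share during congestion.
Set-VMNetworkAdapter -ManagementOS -Name "LiveMigration" -MinimumBandwidthWeight 20
Set-VMNetworkAdapter -ManagementOS -Name "Storage" -MinimumBandwidthWeight 40

# For a VM: guarantee a share and cap it at roughly 1 Gbps
# (MaximumBandwidth is expressed in bits per second).
Connect-VMNetworkAdapter -VMName "Web01" -SwitchName "ConvergedSwitch"
Set-VMNetworkAdapter -VMName "Web01" -MinimumBandwidthWeight 10 -MaximumBandwidth 1000000000
```

Weights are relative shares rather than absolute rates, so they only matter when the link is congested; the maximum is enforced at all times.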

Figure 2. For small environments you can configure minimum and maximum bandwidths on a per VM basis easily.

Next time, in part 4, we'll cover NIC teaming, which is built into Windows Server natively. We'll also round up all the functionality covered so far and look at how it can influence the way you design clusters and data centers in the future.

About the Author

Paul Schnackenburg has been working in IT for nearly 30 years and has been teaching for over 20 years. He runs Expert IT Solutions, an IT consultancy in Australia. Paul focuses on cloud technologies such as Azure and Microsoft 365 and how to secure IT, whether in the cloud or on-premises. He's a frequent speaker at conferences and writes for several sites, including virtualizationreview.com. Find him at @paulschnack on Twitter or on his blog at TellITasITis.com.au.