Swiss Army Storage

FalconStor's Network Storage Server does far more than virtualize and serve up storage.

Growing up I was the proud owner of a genuine Swiss Army knife. At the time I thought, "Who could ask for more: a pocket knife that includes a toothpick and scissors?" The Swiss Army knife had me covered. But before long, I realized that surviving the New Jersey wilderness didn't require those scissors, cool or not. Also, in time I came to understand that the plastic toothpick did little more than gross out my older sister.

I bring up this story because the Swiss Army knife has become shorthand for any product that can perform a variety of tasks (yes, I'm borrowing an old cliché here). After spending a couple of months with FalconStor Software's physical and virtual Network Storage Server (NSS) units, I've learned a new lesson: Unlike the Swiss Army knife I had as a kid, the FalconStor NSS includes tools that provide useful functions and are not mere throw-ins.

I evaluated the NSS HC620 unit along with the NSS Virtual Appliance (NSSVA) for VMware Infrastructure. The NSS HC620 comes in a 4U form factor with support for the following core features:

- Dual storage controllers

- RAID 0, 1, 5 and 10

- Up to 224TB of storage capacity

- Internet SCSI (iSCSI)

- Up to 256 snapshots per logical unit number

- Thin provisioning

- Synchronous data mirroring

- Asynchronous replication

- Continuous data protection (CDP)

The HC620 is FalconStor's entry-level storage-virtualization unit. Also in the line are the HC650, which supports eight 1Gb iSCSI and eight 4Gb Fibre Channel ports, and the top-tier HC670, which supports up to four 10Gb Ethernet ports. FalconStor's storage-virtualization appliances sit in-band (in the storage network data path) and serve up Fibre Channel and iSCSI storage to servers connected to the storage network. My review unit, the HC620, was equipped to serve up only iSCSI.

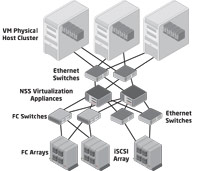

In a typical environment, the NSS architecture would likely include two NSS units (see Figure 1) to avoid a single point of failure in the data path. With only one NSS, a failure would effectively disconnect all servers from the storage they depend on.


Figure 1. A typical storage virtualization architecture would use two FalconStor NSS units to provide high-availability data access.

At first glance, you might say, "Why would I want to increase complexity by adding more devices to the data path?" But storage virtualization provides several benefits. For example, it allows you to serve up different forms of storage (Fibre Channel and iSCSI) using a single protocol (iSCSI).

Storage virtualization appliances also give you the freedom to perform back-end storage area network (SAN) maintenance transparently to the front-end physical hosts. For example, I could migrate data from one array to another while maintaining the same presentation to the physical servers. What's more, I/O caching on the virtualization appliances can improve overall performance while reducing latency. Again, it's easy to assume that adding a hop to the data path means increased latency, but that doesn't have to be the case if you have the right storage architecture.
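A simple back-of-the-envelope model shows why. This model is my own assumption, not a FalconStor figure, and it ignores queuing and write traffic. Let $h$ be the fraction of reads served from the appliance cache, $t_c$ the latency of a cache hit, $t_a$ the latency of reading straight from the array with no appliance in the path, and $t_{\text{hop}}$ the extra latency the appliance adds on a cache miss. The appliance lowers average read latency whenever:

```latex
\begin{aligned}
h\,t_c + (1-h)\,(t_a + t_{\text{hop}}) &< t_a \\
\iff\quad t_{\text{hop}} &< h\,(t_a + t_{\text{hop}} - t_c) \\
\iff\quad h &> \frac{t_{\text{hop}}}{t_a + t_{\text{hop}} - t_c}
\qquad \text{(assuming } t_c < t_a + t_{\text{hop}}\text{)}
\end{aligned}
```

In other words, the smaller the added hop is relative to the gap between array latency and cache latency, the lower the cache hit rate needed to break even.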

While I could go on all day about storage-virtualization architecture, I need to get back to the matter at hand: the FalconStor appliances.

Getting Started

Setting up the FalconStor NSS HC620 was a breeze. In fact, I spent the majority of the deployment time simply connecting cables and racking a serial-attached SCSI array and the HC620. Configuring the arrays using the FalconStor IPStor Console (see Figure 2) was much easier than I expected, given the steep learning curve I've faced when configuring other storage virtualization appliances. Including time spent reading the product documentation, I configured the HC620 and an NSS virtual appliance in less than 45 minutes. And I'm not just talking about getting them online as iSCSI targets. I also set up asynchronous replication between the two. In less than an hour, I was deploying new VMware ESX 3.5 virtual machines (VMs) to the array and virtual appliance.


Figure 2. Here the FalconStor IPStor Console is configured to manage two physical appliances and one virtual appliance.

Architecture and Assessment

I've long been a proponent of using virtual storage-virtualization appliances at branch office locations. Doing so lets you take advantage of virtualization with limited resources and reduced capital. A single physical server can host a small number of VMs (five or fewer, for example) in a branch office, provided you use storage replication to protect each VM's data (see Figure 3). This approach lets you deploy virtualization to the branch and use a co-location facility or other data center for failover in the event that the physical server at the branch office fails. For larger branch office environments, you can use two storage virtualization appliances distributed across two physical nodes. This allows you to take advantage of features such as VMware High Availability to automatically restart VMs after a host failure, and VMotion for non-disruptive scheduled hardware maintenance.


Figure 3. From the IPStor Console, an IT manager can monitor NSS replication traffic.

In many environments, advanced replication and seamless disaster recovery are nice-to-haves that are sometimes difficult to justify financially. To me, this is where the FalconStor NSS really stands out. Both the physical and virtual appliances support VMware's Site Recovery Manager (SRM) for disaster recovery failover automation and startup. Because the NSS virtual appliance supports direct-attached storage, you can get all the benefits of VMware's SRM with a single ESX host and a local SATA disk; no fancy array features are required. The NSS takes care of the storage magic that makes it all work. Forget thinking of disaster recovery as a nice-to-have: What if you had off-site replication, disaster recovery automation and object-level file recovery rolled into a single package?

This is where it really gets fun. The FalconStor storage platform can extend from the enterprise to the branch office. Physical NSS appliances (the HC620 and HC650 arrays) can reside in the larger data centers, while the FalconStor NSSVA is well suited for providing the storage backbone for the branch office. This architecture opens up a great deal of storage-management flexibility, while also providing disk-to-disk data protection, as well as archive-to-tape if necessary.

FalconStor offers application-specific agents that quiesce the most popular database applications, including Exchange, Oracle and SQL Server, before storage snapshots are taken. You also have a good deal of flexibility with Exchange recovery, as FalconStor's TimeMark CDP solution for Exchange lets you recover databases, mailboxes or individual messages as needed. Furthermore, you can replicate an Exchange database to a remote site and perform an application-consistent archive-to-tape operation from that site. For file system backups, IPStor uses the Windows Volume Shadow Copy Service to ensure file consistency. When I used the TimeMark CDP features to recover single files from a VM image, I found the process intuitive and simple to complete. Replication occurs at the sub-block level, which significantly reduces the amount of data that must be replicated with each differential change.
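To illustrate why sub-block replication saves bandwidth, here's a minimal Python sketch built on assumptions of my own. It is not FalconStor's implementation: the 64 KiB "block" and 4 KiB "sub-block" sizes are hypothetical, and the sketch finds changes by directly comparing old and new volume images, whereas a real product would more likely track dirty regions as writes arrive.

```python
# Minimal sketch (not FalconStor's implementation): compare how many bytes a
# replication pass would ship when changes are tracked per coarse block versus
# per finer sub-block. Both sizes below are hypothetical, chosen for illustration.

BLOCK = 64 * 1024      # hypothetical coarse tracking unit (64 KiB)
SUB_BLOCK = 4 * 1024   # hypothetical fine tracking unit (4 KiB)


def changed_chunks(old: bytes, new: bytes, chunk: int) -> list[int]:
    """Return the offsets of chunks whose contents differ between two images."""
    if len(old) != len(new):
        raise ValueError("sketch assumes both images are the same size")
    return [
        off
        for off in range(0, len(new), chunk)
        if old[off:off + chunk] != new[off:off + chunk]
    ]


def bytes_to_replicate(old: bytes, new: bytes, chunk: int) -> int:
    """Total bytes sent if every changed chunk is shipped whole."""
    return sum(len(new[off:off + chunk]) for off in changed_chunks(old, new, chunk))


if __name__ == "__main__":
    volume = bytearray(1024 * 1024)   # 1 MiB toy "volume" of zero bytes
    before = bytes(volume)
    for off in (10_000, 300_000, 900_000):
        volume[off:off + 512] = b"\xff" * 512   # three small, scattered writes
    after = bytes(volume)

    print("block-level bytes:    ", bytes_to_replicate(before, after, BLOCK))      # 196608
    print("sub-block-level bytes:", bytes_to_replicate(before, after, SUB_BLOCK))  # 12288
```

With three scattered 512-byte writes to a 1 MiB toy volume, the block-level pass ships 196,608 bytes while the sub-block pass ships 12,288, a 16-fold reduction. The gap narrows as writes become larger or cover more of each block.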

Bottom Line

If you can't tell, the FalconStor solution thoroughly impressed me. Deployment and configuration; provisioning and protecting volumes; creating and recovering snapshots; and the TimeMark CDP features all stand out. I also like that the product can integrate with third-party data-protection software.

Finding things I didn't like required that I dig a little bit. For starters, some enterprises would prefer to be able to leverage Active Directory user and group accounts for IPStor management, as opposed to a local user accounts database. FalconStor will need to integrate authentication with AD and other enterprise directory services if it hopes to win over some enterprises. I'd also like to see tighter integration with VMware vCenter management. For example, it would be nice for a VM provisioning task to trigger a storage snapshot that can bring a new VM online in seconds (as opposed to a block-by-block copy from an existing image). FalconStor tells me such integration, which NetApp already offers, is on the way.

FalconStor will use VMware's new vStorage APIs to add the integration, and I wouldn't be surprised to see this capability in the platform by the end of the year. FalconStor also needs broader Linux guest OS support, as more and more VM appliances are built on Linux. We're not too far off from VMware's vCenter Server running as a Linux-based VM appliance, for example.

The FalconStor NSS is a solid platform that makes it easy to present several back-end storage arrays, even from different vendors, to servers as a single cohesive set of storage resources. The physical and virtual NSS appliances perform well, and are quick to deploy and easy to manage. The virtual and physical NSS appliances each have their places, and I see the NSSVA as a key enabler for extending server or desktop virtualization to the small or branch office. The TimeMark CDP features make it easy to stage a disaster recovery environment, while the granular file and object-level recovery features serve typical day-to-day user recovery needs. In an all-Windows environment, I really don't see a need to maintain a third-party backup solution if you're using the FalconStor product line. The close integration with VMware's SRM (via the FalconStor Storage Replication Adapter for VMware SRM) and ESX VM snapshots (via the Snapshot Director for VMware) provides a robust and highly efficient storage-management framework for ESX infrastructures. Physical FalconStor NSS appliances are already certified on the Microsoft Hyper-V and Citrix XenServer hypervisors, and I'm hopeful that the folks at FalconStor will extend similar functionality to the NSSVA in the coming months.