Windows Insider

Hyper-V's Missing Feature

- By Greg Shields

- 04/01/2010

Windows Insider has returned to Redmond -- and it feels good to be home! I'm looking forward to providing the inside scoop on the bits of Microsoft technologies that you may not be aware of. If there's a useful feature or an unexpectedly smart way to manage your Windows systems, you'll find it here. And what better way to resume this column than with a major warning -- one that could greatly impact the operation of your Hyper-V-based virtual machines (VMs).

First, some background. In the last few years, the velocity of virtualization adoption has increased dramatically. Businesses both large and small see the cost savings and management optimizations that virtual servers bring. Changing the ballgame in many ways has been the updated Hyper-V platform, which arrived with the release of Windows Server 2008 R2. This second edition of Hyper-V adds Live Migration and improvements to VM disk storage, as well as a set of performance enhancements that solidifies its place as an enterprise-worthy hypervisor.

Yet with all these new improvements, Hyper-V version 2 still lacks one key capability, and its absence could cause major problems for the unprepared environment. I'll generically refer to that feature as memory oversubscription.

Memory oversubscription -- sometimes also called memory overcommit -- is a hypervisor feature that enables concurrently running VMs to use more RAM than is actually available on the host. It's easiest to explain this situation through a simple example. Consider a Hyper-V host that's configured with 16GB of RAM. Ignoring for a minute the memory requirements of the host itself, this server could successfully power on 16 VMs, each of which is configured to use 1GB of RAM.

The problem occurs when you need to add a 17th VM to this host. Because Hyper-V today can't oversubscribe its available RAM, that 17th VM won't be permitted to power on. In short, the RAM you've got is, well, the RAM you've got.
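The arithmetic behind that 17th VM can be sketched in a few lines of Python. This is only an illustration of the rule described above, not anything Hyper-V exposes: the function name `can_power_on` and its parameters are hypothetical, and, as in the example, the host's own memory needs are ignored.

```python
def can_power_on(host_ram_gb, running_vm_ram_gb, new_vm_ram_gb):
    """Return True if the new VM fits in the host's remaining physical RAM.

    Models a hypervisor with no memory oversubscription: the RAM assigned
    to all running VMs can never exceed what the host physically has.
    """
    used_gb = sum(running_vm_ram_gb)
    return used_gb + new_vm_ram_gb <= host_ram_gb


host_ram_gb = 16
running = [1] * 15  # fifteen 1GB VMs already powered on

print(can_power_on(host_ram_gb, running, 1))  # 16th VM: True
running.append(1)
print(can_power_on(host_ram_gb, running, 1))  # 17th VM: False
```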

Failed Failovers

While this situation is obviously irritating for a single-server Hyper-V environment, it becomes quite a bit more insidious when Hyper-V hosts are clustered together. We all know that Hyper-V leverages Windows Failover Clustering as its solution for high availability. These two components work together to Live Migrate VMs between cluster nodes. Together they enable IT professionals to relocate VMs off of a Hyper-V host prior to performing maintenance. Because Windows servers often need patching that requires a reboot, this Live Migration capability ensures that process can be completed without impacting VMs.

The second scenario where clustering comes in handy is during an unexpected loss of a Hyper-V host. In this situation, Windows Failover Clustering can automatically restart VMs atop any of the surviving cluster members.

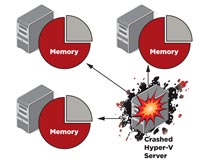

Figure 1. A Hyper-V cluster must reserve enough unused RAM to support the memory needs of at least one failed server.

Yet herein lies the problem: Because today's Hyper-V hosts can never power on more VMs than their available RAM allows, the surviving cluster hosts may not have enough free RAM to take on a failed host's VMs. When this happens, some of those failed VMs might not get restarted elsewhere, negating the value of the cluster. In fact, because of this limitation, any Hyper-V cluster set up to protect against the loss of one member must always keep unused an amount of RAM equal to the RAM assigned to that member's concurrently running VMs.
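To check whether a given cluster meets that requirement, you could model it roughly as follows. This is a sketch under stated assumptions: the function `survives_single_failure` and the data layout are hypothetical, and the greedy placement (largest VM first, onto the survivor with the most free RAM) is a simple heuristic chosen for illustration, not the way Windows Failover Clustering actually chooses targets. Because it's a heuristic, it may occasionally report a failure where a cleverer placement would have fit.

```python
def survives_single_failure(hosts):
    """Check whether every host's VMs could restart elsewhere if it failed.

    hosts maps a host name to (ram_gb, [vm_ram_gb, ...]).
    Assumes no memory oversubscription and ignores the hosts' own RAM needs.
    """
    for failed, (_, failed_vms) in hosts.items():
        # Free RAM on each surviving host before any failover happens.
        free = {
            name: ram_gb - sum(vms)
            for name, (ram_gb, vms) in hosts.items()
            if name != failed
        }
        # Place the largest VMs first, each on the survivor with most free RAM.
        for vm_gb in sorted(failed_vms, reverse=True):
            target = max(free, key=free.get, default=None)
            if target is None or free[target] < vm_gb:
                return False  # this VM cannot be restarted anywhere
            free[target] -= vm_gb
    return True


# Two 16GB hosts, each running eight 1GB VMs: half the RAM is held in reserve.
balanced = {"node1": (16, [1] * 8), "node2": (16, [1] * 8)}
# The same hosts running twelve 1GB VMs each: not enough room after a failure.
overloaded = {"node1": (16, [1] * 12), "node2": (16, [1] * 12)}

print(survives_single_failure(balanced))    # True
print(survives_single_failure(overloaded))  # False
```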

What does this mean to you? In short, it means a lot of unused RAM. It also means that bigger Hyper-V clusters -- those with more members -- are a better idea than smaller Hyper-V clusters.

To explain this, imagine that you've added a second server to the one referenced earlier, and as a result created a two-node cluster. In this environment, you now have 32GB of RAM that's been equally divided between those two cluster nodes. You still have 16 VMs that need to run concurrently, each of which requires 1GB of RAM.

Creating a two-node cluster for this environment gives you failover capability but offers no additional capacity for more VMs. The loss of either host means that every VM it was running must fail over to the other host, so 16GB of the cluster's 32GB has to stay free at all times. As a result, any similarly sized two-node cluster that needs full failover capabilities must set aside 50 percent of its total RAM as unused.

Scaling this cluster upward to four nodes cuts the waste percentage in half. As shown in Figure 1, a similarly sized four-node cluster must reserve 25 percent of its total RAM. An eight-node cluster cuts that number in half again, to 12.5 percent, and so on. This quantity of RAM doesn't necessarily need to be equally distributed among the cluster members, but it must be available somewhere if VMs are to successfully fail over.
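The pattern in those numbers is simple: with equally sized nodes, protecting against the loss of one node means reserving one node's worth of RAM, or 1/N of the total. A minimal sketch, assuming identical nodes of 16GB each and 1GB VMs as in the running example (the function names are hypothetical):

```python
def reserve_fraction(nodes):
    """Fraction of total cluster RAM held back to survive one node failure.

    Assumes every node has the same amount of RAM.
    """
    if nodes < 2:
        raise ValueError("a failover cluster needs at least two nodes")
    return 1 / nodes


def usable_ram_gb(nodes, ram_per_node_gb):
    """RAM left for running VMs after reserving one node's worth."""
    return (nodes - 1) * ram_per_node_gb


for n in (2, 4, 8):
    print(
        f"{n} nodes: reserve {reserve_fraction(n):.1%} of total RAM, "
        f"room for {usable_ram_gb(n, 16)} 1GB VMs"
    )
# 2 nodes: reserve 50.0% of total RAM, room for 16 1GB VMs
# 4 nodes: reserve 25.0% of total RAM, room for 48 1GB VMs
# 8 nodes: reserve 12.5% of total RAM, room for 112 1GB VMs
```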

Adding more hosts to your Hyper-V cluster is as important as adding more powerful hosts. The presence of more cluster members gives the cluster more targets for failing over VMs, while reducing the impact of wasted RAM.

Of course, another solution for this problem is for Microsoft to fix Hyper-V and add this critically necessary capability that its competitors already have. Rumors abound that it might be coming. But, as of this writing, Microsoft has released no official word on when -- or if -- such a fix may arrive. Until that time, be conscientious with the RAM in your Hyper-V clusters.

About the Author

Greg Shields is Author Evangelist with PluralSight, and is a globally recognized expert on systems management, virtualization, and cloud technologies. A multiple-year recipient of the Microsoft MVP, VMware vExpert, and Citrix CTP awards, Greg is a contributing editor for Redmond Magazine and Virtualization Review Magazine, and is a frequent speaker at IT conferences worldwide. Reach him on Twitter at @concentratedgreg.