
New Azure OpenAI Service Offers GPT-3 Natural Language Models

The foundational technology powering new AI coding assistants and other next-gen offerings based on natural language models is going to become an Azure cloud service.

Microsoft announced the new Azure OpenAI Service during this week's Ignite 2021 tech event.

It's based on GPT-3, an autoregressive language model that produces human-like text by leveraging deep learning, a machine learning technique that imitates the way people gain certain types of knowledge. GPT-3 comes from Microsoft partner OpenAI, an AI research and development company.
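"Autoregressive" means the model produces text one token at a time, feeding each token it generates back in as input for predicting the next one. The Python snippet below is a toy sketch of that loop, not GPT-3 itself. As an assumption for illustration only, a hypothetical hand-written bigram table (`TOY_BIGRAMS`) stands in for the neural network, and the function name `generate_greedy` is likewise made up. GPT-3 scores possible next tokens using the entire preceding context rather than just the last word, but the generate, append and repeat cycle is the same idea.

```python
# Toy illustration of autoregressive text generation (NOT GPT-3).
# Assumption: a hypothetical hand-written bigram table replaces the neural
# network; a real model would score every token in its vocabulary given
# the entire preceding context, not just the last token.
TOY_BIGRAMS = {
    "<start>": {"the": 0.6, "a": 0.4},
    "the": {"model": 0.7, "code": 0.3},
    "a": {"model": 1.0},
    "model": {"writes": 1.0},
    "writes": {"code": 1.0},
    "code": {"<end>": 1.0},
}


def generate_greedy(max_tokens: int = 10) -> list[str]:
    """Append the most likely next token until <end> or the token limit."""
    tokens = ["<start>"]
    for _ in range(max_tokens):
        next_probs = TOY_BIGRAMS[tokens[-1]]
        # Greedy decoding: take the highest-probability continuation.
        next_token = max(next_probs, key=next_probs.get)
        if next_token == "<end>":
            break
        # The token just produced becomes part of the input for the next step.
        tokens.append(next_token)
    return tokens[1:]  # drop the <start> marker


if __name__ == "__main__":
    print(" ".join(generate_greedy()))
```

Greedy decoding is used here only because it keeps the output deterministic; production models typically sample from the predicted probabilities instead, which is one reason the same prompt can yield different text.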

The GPT-3 language model has been put to many uses, including no-code natural language software development in Power Apps, Microsoft's low-code development offering. Microsoft has a license to infuse GPT-3 technology into its products.

"We are bringing the world's most powerful language model, GPT-3, to Power Platform," Microsoft CEO Satya Nadella announced during his keynote at this year's Build developer conference. "If you can describe what you want to do in natural language, GPT-3 will generate a list of the most relevant formulas for you to choose from. The code writes itself."

Also, Codex, a version of GPT-3 specifically modified for writing programming code, powers GitHub Copilot, which is described as an "AI pair programmer."

GitHub Copilot (source: GitHub).

As an AI pair programmer, GitHub Copilot provides code-completion functionality and suggestions similar to IntelliSense/IntelliCode, though Codex lets it go beyond those Microsoft offerings. IntelliCode is powered by a large-scale transformer model specialized for code usage (GPT-C). OpenAI Codex, on the other hand, has been described as an improved descendant of GPT-3 (Generative Pre-trained Transformer) that can translate natural language into code.

After the debut of GitHub Copilot in a limited technical preview in June, OpenAI improved its foundational tech and offered it up as an API, describing it as "a taste of the future." However, Microsoft said some potential customers have needed more security, access management, private networking, data handling protections or scaling capacity, which will all be provided by the Azure OpenAI Service.

Which brings us to this week's Ignite conference where the service was announced.

"We are just in the beginning stages of figuring out what the power and potential of GPT-3 is, which is what makes it so interesting," said Eric Boyd, Microsoft corporate vice president for Azure AI. "Now we are taking what OpenAI has released and making it available with all the enterprise promises that businesses need to move into production."

While game-changing AI advances promise to revolutionize software development on many levels, they also come with ethical, legal, security and code-quality considerations. For example, the Free Software Foundation (FSF) has called GitHub Copilot "unacceptable and unjust." On the code-quality front, a GitHub Copilot security study says "developers should remain awake" when working with their AI pair programmer, after finding that about 40 percent of the code Copilot generated in the study's test scenarios was vulnerable. GitHub Copilot has also revived existential angst among developers who fear losing their jobs to AI-driven, code-writing robots. Other reports detail concerns about AI becoming a tool for hackers and other bad guys.

In response, an OpenAI FAQ asks and answers the questions: "How will OpenAI mitigate harmful bias and other negative effects of models served by the API?" and "What specifically will OpenAI do about misuse of the API?"

Perhaps seeking to ward off such concerns about Azure OpenAI Service, Microsoft's Eric Boyd said, "Microsoft will also offer Azure OpenAI Service customers new tools to help ensure outputs that the model returns are appropriate for their businesses, and it will monitor how people are employing the technology to help ensure it's being used for its intended purposes."

To help exert such control in the early stages, Microsoft is initially inviting only select people and organizations to use Azure OpenAI Service, specifically those planning to implement well-defined use cases that incorporate responsible principles and strategies for using the AI technology. Access to the preview can be requested here.

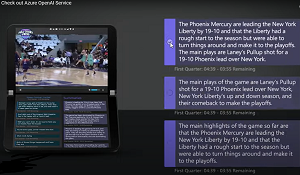

As an example of a responsible use case, the company described "a sports franchise that's developing a new app to engage with fans during games could use the models' ability to quickly and abstractly summarize information to convert transcripts of live television commentary into game highlights that someone could choose to include within the app."

Example App (source: Microsoft).

In addition to programming, other potential enterprise uses for GPT-3 range from summarizing common complaints in customer service logs to generating new content as starting points for blog posts, Microsoft said.

Customers can also get guidance from Microsoft on using the technology successfully and fairly, with the company emphasizing keeping people in the loop to judge whether model-generated content or code is of high quality.

"We expect to learn with our customers, and we expect the responsible AI areas to be places where we learn what things need more polish," Boyd said. "This is a really critical area for AI generally and with GPT-3 pushing the boundaries of what's possible with AI, we need to make sure we're right there on the forefront to make sure we are using it responsibly."

About the Author

David Ramel is an editor and writer at Converge 360.