
Is It 'Real' or AI? Google Helps Fight Deepfakes

Imagine if, just before the presidential election, Donald Trump posted videos and photos depicting poll workers destroying Republican ballots during the last election to prove his lie that it was rigged and stolen from him.

Could that end democracy in the U.S.? (PBS thinks yes.)

Horrifying scenarios like that might not match the feared "singularity" of AI becoming sentient and eliminating flawed humans, but they might be more likely to happen.

The potential harm from AI-generated deepfakes has been a concern since the dawn of AI-generated content, but it is becoming increasingly worrisome as the technology advances by leaps and bounds. Recently the public was presented with fake photos of the Pope in a big puffy white coat and of Trump himself getting arrested (before he was actually arrested). While those and other infamous deepfake examples might be written off as harmless pranks so far, AI systems are becoming so sophisticated that real, palpable harm could be inflicted on a widespread scale.

To help keep that from happening, cloud giant Google is joining many other efforts to help users tell synthetically generated content from human-generated content, most recently announcing an "About this image" tool to do just that in Google image search results.

The tool is set to debut within months, giving web searchers context about an image, including:

- When the image and similar images were first indexed by Google

- Where it may have first appeared

- Where else it's been seen online (like on news, social, or fact checking sites)

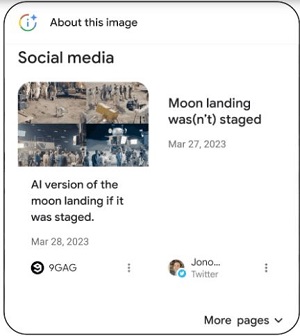

"With this background information on an image, you can get a better understanding of whether an image is reliable -- or if you need to take a second look," said Google's Cory Dunton, product manager for Search at Google, in a May 10 post. "For example, with About this image, you'd be able to see that news articles pointed out that this image depicting a staged moon landing was AI-generated."

About This Image in Animated Action (source: Google).

Once available, the new feature will be accessible in multiple ways:

- Clicking on three dots on an image returned from Google Image search results

- Searching with an image or screenshot in Google Lens

- Swiping up in the Google App when you're on a page and come across an image you want to learn more about

Coming later this year will be the ability to right-click or long-press an image in Chrome (desktop and mobile) to use the tool.

While the feature helps users spot deepfakes after the fact, Google is also addressing the problem at the source, announcing that every image generated by the company's own tools -- such as the Imagen text-to-image AI system -- will immediately include markup that provides context.

Google AI-Generated Images Context in Animated Action (source: Google).

"Creators and publishers will be able to add similar markups, so you'll be able to see a label in images in Google Search, marking them as AI-generated," Dunton said. "You can expect to see these from several publishers including Midjourney, Shutterstock, and others in the coming months."

While providing image context in search results might seem like a small step, it's just one of several steps Google is taking to combat misinformation. For example, in March it published "Five new ways to verify info with Google Search" along with guidance titled "Helping you evaluate information online."

The latter explains how using Google's image search functionality can help users detect if an image might have been altered or is being used out of context.

In fact, the company has been fighting deepfakes for years, having published a 2019 post titled "Contributing Data to Deepfake Detection Research." That contribution came in the form of a large dataset of visual deepfakes, which was intended to help researchers build and test tools to detect and combat deepfakes.

And, last year, Google banned the training of AI systems that can be used to generate deepfakes on its Google Colaboratory platform, as reported by Bleeping Computer.

Google's efforts, of course, are just one part of a broader industry push to fight deepfakes.

Among fellow cloud giants, for example, AWS got in on the action in 2019 by announcing "AWS supports the Deepfake Detection Challenge with competition data and AWS credits." That challenge was started by Facebook to help developers create accurate deepfake detection tools. AWS explained in 2020 how "Facebook uses Amazon EC2 to evaluate the Deepfake Detection Challenge."

Microsoft was also involved with the Deepfake Detection Challenge, as explained in its 2020 article, "New Steps to Combat Disinformation." One of those steps was the introduction of Microsoft Video Authenticator, which analyzes a photo or video and provides a percentage chance, or confidence score, that the media was artificially manipulated.

"In the case of a video, it can provide this percentage in real-time on each frame as the video plays," Microsoft said. "It works by detecting the blending boundary of the deepfake and subtle fading or greyscale elements that might not be detectable by the human eye."

Beyond the cloud giants, naturally, are many other efforts to combat deepfakes, including lists of techniques that users can employ to detect synthetic media, along with software tools that can do it automatically.

For an example of the former, the MIT Media Lab article "Detect DeepFakes: How to counteract misinformation created by AI" lists techniques for spotting fake humans, including:

- Pay attention to the face. High-end DeepFake manipulations are almost always facial transformations.

- Pay attention to the cheeks and forehead. Does the skin appear too smooth or too wrinkly? Is the agedness of the skin similar to the agedness of the hair and eyes? DeepFakes may be incongruent on some dimensions.

- Pay attention to the eyes and eyebrows. Do shadows appear in places that you would expect? DeepFakes may fail to fully represent the natural physics of a scene.

- Pay attention to the glasses. Is there any glare? Is there too much glare? Does the angle of the glare change when the person moves? Once again, DeepFakes may fail to fully represent the natural physics of lighting.

- Pay attention to the facial hair or lack thereof. Does this facial hair look real? DeepFakes might add or remove a mustache, sideburns, or beard. But, DeepFakes may fail to make facial hair transformations fully natural.

- Pay attention to facial moles. Does the mole look real?

- Pay attention to blinking. Does the person blink enough or too much?

- Pay attention to the lip movements. Some deepfakes are based on lip syncing. Do the lip movements look natural?

For an example of tool lists, the Antispoofing Wiki's "Deepfake Detection Software: Types and Practical Application" document lists:

- Sensity

- Deepware Scanner

- Microsoft Video Authenticator

- DeepDetector & DeepfakeProof from DuckDuckGoose

- Deepfake-o-Meter

As for Google, Dunton noted that the company will continue to address the problem: "Sixty-two percent of people believe they come across misinformation daily or weekly, according to a 2022 Poynter study. That's why we continue to build easy-to-use tools and features on Google Search to help you spot misinformation online, quickly evaluate content, and better understand the context of what you're seeing. But we also know that it's equally important to evaluate visual content that you come across."

About the Author

David Ramel is an editor and writer at Converge 360.