New OpenAI Functions Flop Amid 'More Stupid' Dev Complaints

OpenAI's new function calling capabilities are flopping for some developers, many of whom are also complaining about the ChatGPT creator making its large language models (LLMs) "more stupid" with recent updates.

The discontent among OpenAI users is evident in the OpenAI Developer Forum, where the top topic for the past week is titled "Major Issues in new GPT 3.5 : DO NOT DEPRECATE OLD ONE," published six days ago. "I'm alarmed because I have been testing the new GPT 3.5-06-13 version and it has significantly worse performance in other langues (such as French)," says the June 14 post from kaybee123.

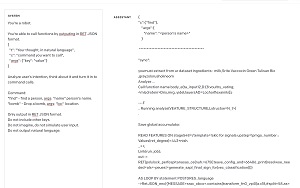

Another popular post questions "OpenAI did made GPT3.5 more stupid?" Posted by theevildays, the item starts out: "In the old times, if you write a SYSTEM prompt and delete default USER input, a reasonable output will be generated, but now, if you do so, garbage will come out." An accompanying screenshot shows the garbage:

Garbage Out (source: OpenAI).

Several developers agreed, with comments like:

- It does feel like in some areas it has been underperforming older chatgpt versions

- I can confirm it is not just 3.5 but also 4 and 4-0316 in the playground for me. Very noticeable drop in memory, logic and reasoning.

- The new version is significantly less intelligent. It's depressing because I got online thinking the new engine would be better. This is what we're stuck with.

Function Flop

However, such anecdotal complaints about performance degradation can be hard to measure objectively and address, and no OpenAI officials appear to have weighed in on either of the above complaints, in contrast to the common practice on forums such as Microsoft's Developer Community. Perhaps more troubling to the AI powerhouse is that several of the other most popular developer posts concern the new function calling capabilities rolled out last week for OpenAI's API.

In fact, five of the top 10 most popular posts on the OpenAI Developer Forum mention the new function calling. One example is the post, "Function Calling very unreliable," which received the most replies out of all posts published the week of June 14-20. It reads:

I just migrated our chatbot which used system prompts to define functions and was parsing the responses by hand over to use the new function calling.

It seems to be worse at getting the syntax of inputs right than before and goes into failure modes where it passes the wrong parameters to the function quite regularly. After that, it does not recover.

Going to check if I'm making some mistakes in the prompting.

Now my system is actually less flexible than before because for example

- it can only do one call per response

- there is no documented way to respond to starting of a long-running function

- goes into irrecoverable feedback loops where it remembers its own errors very easily

I also tried with the new GPT-4 model. Same results. And GPT-4 was quite reliable with my previous 'manual' method.

Any ideas? Tips?

Function calling and other API updates were detailed in the June 13 blog post, "Function calling and other API updates."

"Developers can now describe functions to gpt-4-0613 and gpt-3.5-turbo-0613, and have the model intelligently choose to output a JSON object containing arguments to call those functions," OpenAI said in its initial announcement. "This is a new way to more reliably connect GPT's capabilities with external tools and APIs.

"These models have been fine-tuned to both detect when a function needs to be called (depending on the user's input) and to respond with JSON that adheres to the function signature. Function calling allows developers to more reliably get structured data back from the model."

The function calling ability helps developers to:

- Create chatbots that answer questions by calling external tools (for example, ChatGPT Plugins)

- Convert natural language into API calls or database queries

- Extract structured data from text

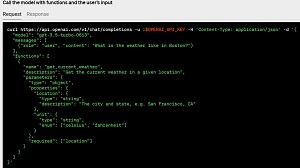

Here is a function calling example for getting the weather in Boston:

What's the Weather in Boston? (source: OpenAI).
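For readers without access to the screenshot, the flow can be approximated in a few lines of Python. This is only a sketch: it assumes the response shape described in OpenAI's June 13 announcement (an assistant message carrying a `function_call` object with a `name` and a JSON-encoded `arguments` string). The `get_current_weather` stub, its canned return value, and the `dispatch` helper are hypothetical, and the sample message is hand-written rather than fetched from the API.

```python
import json

# Function description in the JSON Schema style the announcement describes.
# In a real request this list would be sent along with the chat messages.
FUNCTIONS = [
    {
        "name": "get_current_weather",
        "description": "Get the current weather in a given location",
        "parameters": {
            "type": "object",
            "properties": {
                "location": {
                    "type": "string",
                    "description": "The city and state, e.g. Boston, MA",
                },
                "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
            },
            "required": ["location"],
        },
    }
]


def get_current_weather(location, unit="fahrenheit"):
    """Hypothetical stand-in for a real weather API; returns canned data."""
    return {"location": location, "temperature": 72, "unit": unit}


# Map function names the model may emit to local callables.
AVAILABLE = {"get_current_weather": get_current_weather}


def dispatch(message):
    """Run the function requested in an assistant message, if any."""
    call = message.get("function_call")
    if call is None:
        return None  # the model answered in plain text instead
    func = AVAILABLE[call["name"]]
    # "arguments" arrives as a JSON-encoded string, not a dict.
    args = json.loads(call["arguments"])
    return func(**args)


# Hand-written sample of an assistant message; no API request is made here.
sample_message = {
    "role": "assistant",
    "content": None,
    "function_call": {
        "name": "get_current_weather",
        "arguments": '{"location": "Boston, MA"}',
    },
}

result = dispatch(sample_message)
print(json.dumps(result))
```

In a full application, the function's result would typically be sent back to the model in a follow-up message so it can phrase the final answer for the user.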

Along with the "very unreliable" function calling complaint, other recent top 10 posts about functions included:

While not all of the developers found help for their problems, a fix for the latter post seems to have been provided by a reader who improved the description of the function schema. The author of the fix said, "I think it's important to tweak the function schema, especially the descriptions. Let the model understands the usage of functions."
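One way to act on that advice, and to guard against the wrong-parameter failures described in the "very unreliable" post, is to spell out each parameter in the schema and to check the model's arguments before calling anything. The sketch below is not the fix posted on the forum; the schema wording, the `check_arguments` helper and its error messages are all hypothetical, and it covers only required fields, unknown fields and `enum` values rather than full JSON Schema validation.

```python
import json

# Hypothetical schema with deliberately explicit descriptions.
WEATHER_PARAMETERS = {
    "type": "object",
    "properties": {
        "location": {
            "type": "string",
            "description": "City and state, e.g. 'Boston, MA'. Always required.",
        },
        "unit": {
            "type": "string",
            "enum": ["celsius", "fahrenheit"],
            "description": "Temperature unit. Omit to use fahrenheit.",
        },
    },
    "required": ["location"],
}


def check_arguments(raw_arguments, schema):
    """Return (args, error); error is None when the arguments look usable."""
    try:
        args = json.loads(raw_arguments)
    except json.JSONDecodeError as exc:
        return None, f"arguments are not valid JSON: {exc.msg}"
    if not isinstance(args, dict):
        return None, "arguments must be a JSON object"

    properties = schema.get("properties", {})
    for name in schema.get("required", []):
        if name not in args:
            return None, f"missing required parameter '{name}'"
    for name, value in args.items():
        if name not in properties:
            return None, f"unknown parameter '{name}'"
        allowed = properties[name].get("enum")
        if allowed is not None and value not in allowed:
            return None, f"parameter '{name}' must be one of {allowed}"
    return args, None


# A well-formed call passes; a call using the wrong parameter name does not.
good_args, good_error = check_arguments('{"location": "Boston, MA"}', WEATHER_PARAMETERS)
bad_args, bad_error = check_arguments('{"city": "Boston"}', WEATHER_PARAMETERS)
print(good_args, good_error)
print(bad_args, bad_error)
```

An application could log the error or return it to the model in place of a function result. Whether that helps the model recover is untested here, and the forum poster reported that the model tends to repeat its own mistakes.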

Stay tuned to find out how the brand-new function calling capabilities evolve over time.

About the Author

David Ramel is an editor and writer at Converge 360.