News

Researchers: Tools to Detect AI-Generated Content Just Don't Work

What happens to a society that loses the concept of "truth" in the age of AI-generated text, images, audio and video that can depict whatever a bad actor wants to depict?

No one knows yet, but new research says that tools created to identify AI-generated content -- obviously key to helping humans hang on to "truth" -- just don't work well.

"Available detection tools are neither accurate nor reliable," says a new research paper titled "Testing of Detection Tools for AI-Generated Text."

While the paper deals only with text, some of its findings might pertain to audio/image/video deepfakes also. Just submitted on June 21, the paper arrives amid a deluge of tools that can purportedly identify AI-generated content.

The new research backs up existing industry sources that reached similar conclusions.

The new paper, by eight academic authors, asked whether existing detection tools can reliably differentiate between human-written text and ChatGPT-generated text, and whether machine translation and content obfuscation techniques affect the detection of AI-generated text.

"The researchers conclude that the available detection tools are neither accurate nor reliable and have a main bias towards classifying the output as human-written rather than detecting AI-generated text," the paper says. "Furthermore, content obfuscation techniques significantly worsen the performance of tools."

To arrive at these findings, researchers examined this list of tools:

- Check For AI (https://checkforai.com)

- Compilatio (https://ai-detector.compilatio.net/)

- Content at Scale (https://contentatscale.ai/ai-content-detector/)

- Crossplag (https://crossplag.com/ai-content-detector/)

- DetectGPT (https://detectgpt.ericmitchell.ai/)

- Go Winston (https://gowinston.ai)

- GPT Zero (https://gptzero.me/)

- GPT-2 Output Detector Demo (https://openai-openai-detector.hf.space/)

- OpenAI Text Classifier (https://platform.openai.com/ai-text-classifier)

- PlagiarismCheck (https://plagiarismcheck.org/)

- Turnitin (https://demo-ai-writing-10.turnitin.com/home/)

- Writefull GPT Detector (https://x.writefull.com/gpt-detector)

- Writer (https://writer.com/ai-content-detector/)

- Zero GPT (https://www.zerogpt.com/)

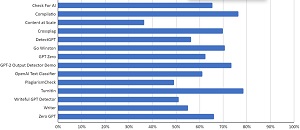

Here's a table that shows accuracy levels for the above:

Overall Accuracy for Each Tool Calculated as an Average of All Approaches Discussed (source: Testing of Detection Tools for AI-Generated Text).

"Detection tools for AI-generated text do fail, they are neither accurate nor reliable (all scored below 80 percent of accuracy and only 5 over 70 percent)," the paper says under a "Discussion" heading. "In general, they have been found to diagnose human-written documents as AI-generated (false positives) and often diagnose AI-generated texts as human-written (false negatives). Our findings are consistent with previously published studies (Gao et al, 2022; Anderson et al, 2023; Demers, 2023; Gewirtz, 2023; Krishna et al, 2023; Pegoraro et al, 2023; van Oijen, 2023; & Wang et al, 2023) and substantially differ from what some detection tools for AI-generated text claim (Compilatio, 2023; Crossplag.com, 2023; GoWinston.ai, 2023; Zero GPT, 2023). The detection tools present a main bias towards classifying the output as human-written rather than detecting AI-generated content. Overall, approximately 20 percent of AI-generated texts would likely be misattributed to humans."
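For readers who want to see how those terms relate, here is a minimal Python sketch of the textbook binary definitions of accuracy, false-positive rate and false-negative rate. The `DetectionResult` type, the `summarize` function and the sample counts are all made up for illustration. The paper averages several scoring approaches of its own, so this should not be read as its method or its data.

```python
from dataclasses import dataclass


@dataclass
class DetectionResult:
    is_ai: bool       # ground truth: the text really was AI-generated
    flagged_ai: bool  # verdict returned by a (hypothetical) detector


def summarize(results):
    """Return accuracy, false-positive rate and false-negative rate."""
    tp = sum(r.is_ai and r.flagged_ai for r in results)          # AI, caught
    tn = sum(not r.is_ai and not r.flagged_ai for r in results)  # human, cleared
    fp = sum(not r.is_ai and r.flagged_ai for r in results)      # human, accused
    fn = sum(r.is_ai and not r.flagged_ai for r in results)      # AI, missed
    return {
        "accuracy": (tp + tn) / len(results),
        # Share of human-written texts wrongly flagged as AI.
        "false_positive_rate": fp / (fp + tn) if fp + tn else 0.0,
        # Share of AI-generated texts wrongly passed as human.
        "false_negative_rate": fn / (fn + tp) if fn + tp else 0.0,
    }


# Invented sample: 10 AI texts (6 caught, 4 missed) and
# 10 human texts (9 cleared, 1 wrongly flagged).
sample = (
    [DetectionResult(True, True)] * 6
    + [DetectionResult(True, False)] * 4
    + [DetectionResult(False, False)] * 9
    + [DetectionResult(False, True)] * 1
)

metrics = summarize(sample)
for name, value in metrics.items():
    print(f"{name}: {value:.2f}")
```

In this invented sample the detector looks respectable on overall accuracy while still missing a large share of AI-generated texts, which is the kind of lean toward "human-written" verdicts the paper describes.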

Industry sources suggest it is hard for humans to create tools to identify AI-generated content because:

- AI-generated content is becoming more sophisticated: As AI models become more powerful, they are able to generate text that is more human-like. This makes it more difficult for tools to distinguish between AI-generated content and human-generated content.

- AI-generated content can be tailored to avoid detection: There are a number of ways to tailor AI-generated content to avoid detection. For example, AI models can be trained on a dataset of human-generated text that is similar to the content that they are trying to generate. This can make it difficult for tools to detect the AI-generated content because it will look similar to human-generated content.

- There is no single feature that can reliably identify AI-generated content: Most tools that are used to detect AI-generated content rely on a combination of features. However, there is no single feature that can reliably identify AI-generated content. This is because AI models are able to generate text that exhibits a wide range of features.

- The tools are not always up-to-date: As AI models become more sophisticated, the tools that are used to detect AI-generated content need to be updated. However, this can be a challenge because it requires the tools to be trained on a new dataset of AI-generated content.

Considering those factors, it might well be impossible for humans to create tools to identify AI-generated text with 100 percent accuracy and reliability, something the paper alluded to: "Our findings strongly suggest that the 'easy solution' for detection of AI-generated text does not (and maybe even could not) exist. Therefore, rather than focusing on detection strategies, educators continue to need to focus on preventive measures and continue to rethink academic assessment strategies (see, for example, Bjelobaba 2020). Written assessment should focus on the process of development of student skills rather than the final product."

With that in mind, some possible solutions to mitigate the associated risks of AI-generated content include:

- Watermarking: This is a method of embedding a secret signal in AI-generated text that allows computer programs to detect it as such. This would require AI developers to include watermarking in their models from the start and make it transparent and accessible to users and regulators. Watermarking could help verify the source and authenticity of online content and prevent unauthorized or malicious use of AI-generated text.

- Education: This involves raising awareness and literacy among users and consumers about the existence and implications of AI-generated content. This would require media outlets, educators, researchers and policymakers to inform and educate the public about how AI models work, what their strengths and limitations are, how they can be used for good or evil purposes, and how they can be detected or verified. Education could help develop critical thinking and judgment skills when consuming online content and avoid being deceived or manipulated by AI-generated information.

- Regulation: Establishing and enforcing rules and standards for the development and use of AI-generated text would require governments, organizations and communities to define and agree on the ethical and legal principles and guidelines for AI models, such as transparency, accountability, fairness, safety and so on. Regulation could help to monitor and control the quality and impact of online content and prevent or punish the misuse or abuse of AI-generated content.
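Of those three ideas, watermarking is the most mechanical, so a toy Python sketch may help show what "embedding a secret signal" could look like. It is loosely inspired by "green list" schemes proposed in research and is not any vendor's actual method. The key, the vocabulary and every function name here are hypothetical. The assumption is that a generator holding a secret key prefers "green" words, and a detector holding the same key counts how many words are green.

```python
import hashlib
import math

SECRET_KEY = "demo-key"  # hypothetical shared secret, for illustration only


def is_green(prev_word, word, key=SECRET_KEY):
    """Keyed hash splits word pairs roughly 50/50 into green and red."""
    digest = hashlib.sha256(f"{key}|{prev_word}|{word}".encode("utf-8")).digest()
    return digest[0] % 2 == 0


def pick_watermarked(prev_word, candidates, key=SECRET_KEY):
    """Toy generator step: take the first green candidate, if there is one."""
    for candidate in candidates:
        if is_green(prev_word, candidate, key):
            return candidate
    return candidates[0]  # no green option available; fall back


def watermark_z_score(text, key=SECRET_KEY):
    """How far the green-word count sits above the 50 percent chance level."""
    words = text.lower().split()
    n = len(words) - 1  # number of adjacent word pairs
    if n < 1:
        return 0.0
    green = sum(is_green(a, b, key) for a, b in zip(words, words[1:]))
    # Without a watermark, green is roughly Binomial(n, 0.5).
    return (green - 0.5 * n) / math.sqrt(0.25 * n)


# Stand-in for a language model: a tiny invented vocabulary.
VOCAB = ["truth", "text", "tool", "model", "paper", "human", "signal", "image",
         "audio", "video", "detect", "source", "claim", "study", "score", "bias"]

words = ["the"]
for _ in range(50):
    words.append(pick_watermarked(words[-1], VOCAB))
watermarked_text = " ".join(words)

print(f"z-score: {watermark_z_score(watermarked_text):.2f}")
```

A large positive z-score suggests the text carries the signal, while ordinary text should hover near zero. Note that paraphrasing or translating the text would change the word pairs and weaken the signal, in line with the paper's finding that obfuscation worsens detection.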

As noted above, the authors said their new study builds upon existing research.

"Future research in this area should test the performance of AI-generated text detection tools on texts produced with different (and multiple) levels of obfuscation e.g., the use of machine paraphrasers, translators, patch writers, etc." the new paper says in conclusion. "Another line of research might explore the detection of AI-generated text at a cohort level through its impact on student learning (e.g. through assessment scores) and education systems (e.g. the impact of generative AI on similarity scores). Research should also build on the known issues with cloud-based text-matching software to explore the legal implications and data privacy issues involved in uploading content to cloud-based (or institutional) AI detection tools."

The new paper was authored by: Debora Weber-Wulff (University of Applied Sciences HTW Berlin, Germany), Alla Anohina-Naumeca (Riga Technical University, Latvia), Sonja Bjelobaba (Uppsala University, Sweden), Tomáš Foltýnek (Masaryk University, Czechia), Jean Guerrero-Dib (Universidad de Monterrey, Mexico), Olumide Popoola (Queen Mary's University, UK), Petr Šigut (Masaryk University, Czechia) and Lorna Waddington (University of Leeds, UK).

About the Author

David Ramel is an editor and writer at Converge 360.