AI Report: Closed Models Outperform Open Models, at Staggering Cost

A new AI report reveals closed-source foundation models outperform their open-source counterparts by a significant margin.

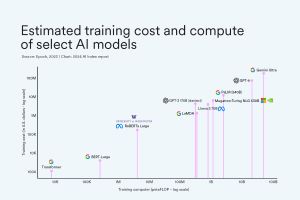

The AI Index published by the Stanford Institute for Human-Centered Artificial Intelligence (HAI) also found that the cost of training foundation models has skyrocketed, with Google's top-level Gemini Ultra costing an estimated $191 million worth of compute to train.

Costs like that have made it difficult for academia and government to keep up with industry in AI research and development, HAI indicated in an article yesterday (April 15).

Despite industry's resource edge over academia and government, the report still noted a move toward open-source foundation models.

"This past year, organizations released 149 foundation models, more than double the number released in 2022," UAI said. "Of these newly released models, 65.7 percent were open-source (meaning they can be freely used and modified by anyone), compared with only 44.4 percent in 2022 and 33.3 percent in 2021."

That resource edge, though, pays off when foundation models are measured for performance.

Across 10 selected benchmarks, closed models achieved a median performance advantage of 24.2 percent, HAI said.

"One of the reasons academia and government have been edged out of the AI race: the exponential increase in cost of training these giant models. Google's Gemini Ultra cost an estimated $191 million worth of compute to train, while OpenAI's GPT-4 cost an estimated $78 million. In comparison, in 2017, the original Transformer model, which introduced the architecture that underpins virtually every modern LLM, cost around $900."

Training Costs (source: HAI).

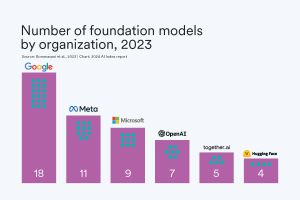
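To put the quoted training-cost estimates in proportion, the ratios can be worked out directly. The following is a minimal sketch that uses only the three dollar figures HAI cites above; the variable names are illustrative and not taken from the report.

```python
# Estimated training compute costs in US dollars, as quoted by HAI above.
costs = {
    "Transformer (2017)": 900,
    "GPT-4": 78_000_000,
    "Gemini Ultra": 191_000_000,
}

baseline = costs["Transformer (2017)"]

# How many times more each model cost than the original Transformer.
ratios = {name: cost / baseline for name, cost in costs.items()}

for name, ratio in ratios.items():
    print(f"{name}: {ratio:,.0f}x the Transformer's estimated cost")
```

On these estimates, Gemini Ultra's training compute cost roughly 212,000 times that of the original Transformer, and about 2.4 times that of GPT-4.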

As for who is creating the most foundation models, cloud giants Google and Microsoft rank No. 1 and No. 3, respectively, sandwiching Meta.

Leading Players (source: HAI).

"Industry dominates AI, especially in building and releasing foundation models," HAI said. "This past year Google edged out other industry players in releasing the most models, including Gemini and RT-2. In fact, since 2019, Google has led in releasing the most foundation models, with a total of 40, followed by OpenAI with 20. Academia trails industry: This past year, UC Berkeley released three models and Stanford two."

This is the seventh edition of the AI Index, which dates back to 2017.

"The 2024 Index is our most comprehensive to date and arrives at an important moment when AI's influence on society has never been more pronounced," the report said. "This year, we have broadened our scope to more extensively cover essential trends such as technical advancements in AI, public perceptions of the technology, and the geopolitical dynamics surrounding its development. Featuring more original data than ever before, this edition introduces new estimates on AI training costs, detailed analyses of the responsible AI landscape, and an entirely new chapter dedicated to AI's impact on science and medicine."

The report summarizes its voluminous findings in 10 top takeaways:

- AI beats humans on some tasks, but not on all: AI has surpassed human performance on several benchmarks, including some in image classification, visual reasoning, and English understanding. Yet it trails behind on more complex tasks like competition-level mathematics, visual commonsense reasoning and planning.

- Industry continues to dominate frontier AI research: In 2023, industry produced 51 notable machine learning models, while academia contributed only 15. There were also 21 notable models resulting from industry-academia collaborations in 2023, a new high.

- Frontier models get way more expensive: According to AI Index estimates, the training costs of state-of-the-art AI models have reached unprecedented levels. For example, OpenAI's GPT-4 used an estimated $78 million worth of compute to train, while Google's Gemini Ultra cost $191 million for compute.

- The United States leads China, the EU, and the U.K. as the leading source of top AI models: In 2023, 61 notable AI models originated from U.S.-based institutions, far outpacing the European Union's 21 and China's 15.

- Robust and standardized evaluations for LLM responsibility are seriously lacking: New research from the AI Index reveals a significant lack of standardization in responsible AI reporting. Leading developers, including OpenAI, Google, and Anthropic, primarily test their models against different responsible AI benchmarks. This practice complicates efforts to systematically compare the risks and limitations of top AI models.

- Generative AI investment skyrockets: Despite a decline in overall AI private investment last year, funding for generative AI surged, nearly octupling from 2022 to reach $25.2 billion. Major players in the generative AI space, including OpenAI, Anthropic, Hugging Face, and Inflection, reported substantial fundraising rounds.

- The data is in -- AI makes workers more productive and leads to higher quality work: In 2023, several studies assessed AI's impact on labor, suggesting that AI enables workers to complete tasks more quickly and to improve the quality of their output. These studies also demonstrated AI's potential to bridge the skill gap between low- and high-skilled workers. Still other studies caution that using AI without proper oversight can lead to diminished performance.

- Scientific progress accelerates even further, thanks to AI: In 2022, AI began to advance scientific discovery. 2023, however, saw the launch of even more significant science-related AI applications -- from AlphaDev, which makes algorithmic sorting more efficient, to GNoME, which facilitates the process of materials discovery.

- The number of AI regulations in the United States sharply increases: The number of AI-related regulations in the U.S. has risen significantly in the past year and over the last five years. In 2023, there were 25 AI-related regulations, up from just one in 2016. Last year alone, the total number of AI-related regulations grew by 56.3 percent.

- People across the globe are more cognizant of AI's potential impact -- and more nervous: A survey from Ipsos shows that, over the last year, the proportion of those who think AI will dramatically affect their lives in the next three to five years has increased from 60 percent to 66 percent. Moreover, 52 percent express nervousness toward AI products and services, marking a 13 percentage point rise from 2022. In America, Pew data suggests that 52 percent of Americans report feeling more concerned than excited about AI, rising from 38 percent in 2022.

About the Author

David Ramel is an editor and writer at Converge 360.