
Cloud Security Alliance Offers AI Implementation Guidance

During this week's RSA Conference, the Cloud Security Alliance (CSA) announced new guidance for organizations to successfully implement AI, with recommendations focusing on security and compliance.

The new guidance is part of the organization's mission to define and raise awareness of best practices to help ensure a secure cloud computing environment.

The guidance comes in three detailed whitepapers:

AI Organizational Responsibilities - Core Security Responsibilities

This paper is described as a blueprint for enterprises to fulfill their core information security responsibilities regarding AI and machine learning (ML) development and deployment.

It discusses:

- The components of the AI Shared Responsibility Model

- How to ensure the security and privacy of AI training data

- The significance of AI model security, including access controls, secure runtime environments, vulnerability and patch management, and MLOps pipeline security

- The significance of AI vulnerability management, including AI/ML asset inventory, continuous vulnerability scanning, risk-based prioritization, and remediation tracking

More specifically, key points discussed in the paper include:

- Data Security and Privacy Protection: The importance of data authenticity, anonymization, pseudonymization, data minimization, access control, and secure storage and transmission in AI training.

- Model Security: Covers various aspects of model security, including access controls, secure runtime environments, vulnerability and patch management, MLOps pipeline security, AI model governance, and secure model deployment. Here, the paper offers a table that provides a synthesized view of the potential security risks and threats at each stage of an AI/ML system, along with examples and recommended mitigations to address these concerns.

AI/ML Security Risk Overview (source: CSA).

- Vulnerability Management: Discusses the significance of AI/ML asset inventory, continuous vulnerability scanning, risk-based prioritization, remediation tracking, exception handling, and reporting metrics in managing vulnerabilities effectively.
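To make the vulnerability management items above more concrete, here is a minimal sketch of risk-based prioritization over an AI/ML asset inventory. It is not taken from the CSA paper: the `Finding` fields, the `exposure` weights, and the scoring formula are all hypothetical assumptions chosen for illustration, and a real program would use its own scanner output and risk model.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    """One open vulnerability tied to an inventoried AI/ML asset (hypothetical schema)."""
    asset: str               # name of the asset in the AI/ML inventory
    severity: float          # scanner-reported severity on a 0.0-10.0 scale
    exposure: float          # assumed weight: 1.0 = internet-facing, 0.5 = internal only
    remediated: bool = False  # remediation-tracking flag


def risk_score(finding: Finding) -> float:
    # Assumed scoring rule: severity scaled by how exposed the asset is.
    return finding.severity * finding.exposure


def prioritize(findings: list[Finding]) -> list[Finding]:
    # Drop findings already remediated, then sort highest risk first.
    open_findings = [f for f in findings if not f.remediated]
    return sorted(open_findings, key=risk_score, reverse=True)


findings = [
    Finding("training-data-bucket", severity=6.0, exposure=1.0),
    Finding("model-serving-api", severity=9.0, exposure=1.0),
    Finding("notebook-server", severity=9.5, exposure=0.5),
    Finding("feature-store", severity=7.0, exposure=0.5, remediated=True),
]

queue = prioritize(findings)
for f in queue:
    print(f"{f.asset}: {risk_score(f):.2f}")
```

In this sketch the internal notebook server ranks last despite having the highest raw severity, which is the point of weighting by exposure rather than sorting on severity alone.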

AI Resilience: A Revolutionary Benchmarking Model for AI Safety

This paper addresses the need for a more holistic perspective on AI governance and compliance.

It discusses:

- The difference between governance and compliance

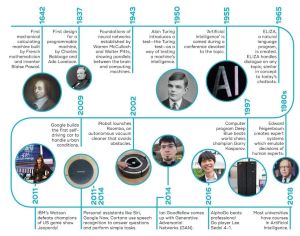

- The history and current landscape of AI technologies

History of Artificial Intelligence (source: CSA).

- The landscape of AI training methods

- Major challenges with real-life AI applications

- AI regulations and challenges in different industries

- How to rate AI quality by using a benchmarking model inspired by evolution

Principles to Practice: Responsible AI in a Dynamic Regulatory Environment

This paper provides an overview of existing regulations and their impact on the development, deployment and usage of AI, along with the challenges and opportunities surrounding the development of new AI legislation.

It discusses:

- How existing laws and regulations relate to AI, including GDPR, CCPA, CPRA, and HIPAA

- The impact of AI hallucinations on data privacy, security, and ethics

- The impact of anti-discrimination laws and regulations on AI

- An overview of emerging AI regulations

- Considerations relating to AI ethics, liability, and intellectual property

- A summary of technical best practices for implementing responsible AI

- How to approach the continuous monitoring of AI

The paper explains how understanding ethical and legal frameworks for AI empowers organizations to achieve three key objectives:

- Building trust and brand reputation: Organizations can build trust with stakeholders and bolster their brand reputation by demonstrating transparent and responsible AI practices.

- Mitigating risks: Proactively engaging with frameworks and using a risk-based approach helps mitigate the potential legal, reputational, and financial risks associated with irresponsible AI use, protecting both the organization and individuals.

- Fostering responsible innovation: By adhering to best practices, maintaining transparency and accountability, and establishing strong governance structures, organizations can foster a culture of responsible and safe AI innovation, ensuring that AI has a positive impact on society as it develops. Responsible AI, practiced through diverse teams, comprehensive documentation, and human oversight, can also enhance model performance by mitigating bias, catching issues early, and aligning with real-world use.

CSA at RSAC

As noted, these papers were announced during RSA Conference 2024, which includes the CSA AI Summit, focusing "on the intersection of AI and cloud to deliver critical tools and best practices necessary to meet the rapidly evolving demands of the most consequential technology ever introduced: artificial intelligence."

The conference continues through Thursday, so stay tuned for more news.

About the Author

David Ramel is an editor and writer at Converge 360.