
OpenAI Studies Emotional Effect of AI Usage Amid Reports of Suicide, Murder Accusation

Amid reports of suicides following AI interactions and a false accusation by an AI system that a man committed murder, OpenAI has published a research report on human emotional engagement with its ChatGPT chatbot.

The first key finding listed in the blog post accompanying the report: "Emotional engagement with ChatGPT is rare in real-world usage."

That statement comes from a post titled "Early methods for studying affective use and emotional well-being on ChatGPT," which announced the study "Investigating Affective Use and Emotional Well-being on ChatGPT." The study was produced by a bevy of researchers, working with the MIT Media Lab, to investigate "the influence of interacting with such systems on users' well-being and behavioral patterns over time."

Along with co-writing the blog post, the MIT Media Lab published its own research, "How AI and Human Behaviors Shape Psychosocial Effects of Chatbot Use: A Longitudinal Randomized Controlled Study" (see below).

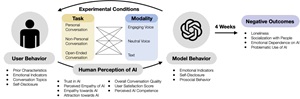

Conceptual Framework of the MIT Study (source: MIT Media Lab).

"Affective cues (aspects of interactions that indicate empathy, affection, or support) were not present in the vast majority of on-platform conversations we assessed, indicating that engaging emotionally is a rare use case for ChatGPT," OpenAI found.

Those findings arrive amid a spate of real-world reports of users falling in love with AI constructs, of multiple suicides following AI interactions, and of one system even accusing a man of murdering his children.

In the latter case, reported last Friday by The Guardian, a Norwegian man named Arve Hjalmar Holmen filed a complaint against OpenAI after the company's ChatGPT chatbot falsely claimed he had murdered two of his children. The complaint, which alleges defamation, asked a Norwegian watchdog to order OpenAI to adjust its model to eliminate inaccurate results relating to Holmen and to impose a fine on the company.

There have also been multiple reports of suicides related to AI usage. People magazine alone has published at least two articles on the subject: "Man Dies by Suicide After Conversations with AI Chatbot That Became His 'Confidante,' Widow Says" and "Teen's Suicide After Falling in 'Love' with AI Chatbot Is Proof of the Popular Tech's Risks, Expert Warns (Exclusive)."

As for the love aspect, Live Science examined the trend in an article titled "People are falling in love with AI. Should we worry?"

The OpenAI study even borrows from another study that examined the love angle, titled "Finding Love in Algorithms: Deciphering the Emotional Contexts of Close Encounters with AI Chatbots."

However, as noted, the blog post found little cause for alarm. Besides the finding that engaging emotionally is a "rare use case for ChatGPT," its key findings include:

- Even among heavy users, high degrees of affective use are limited to a small group. Emotionally expressive interactions were present in a large percentage of usage for only a small group of the heavy Advanced Voice Mode users we studied. This subset of heavy users were also significantly more likely to agree with statements such as, "I consider ChatGPT to be a friend." Because this affective use is concentrated in a small sub-population of users, studying its impact is particularly challenging as it may not be noticeable when averaging overall platform trends.

- Voice mode has mixed effects on well-being. In the controlled study, users engaging with ChatGPT via text showed more affective cues in conversations compared to voice users when averaging across messages, and controlled testing showed mixed impacts on emotional well-being. Voice modes were associated with better well-being when used briefly, but worse outcomes with prolonged daily use. Importantly, using a more engaging voice did not lead to more negative outcomes for users over the course of the study compared to neutral voice or text conditions.

- Conversation types impact well-being differently. Personal conversations -- which included more emotional expression from both the user and model compared to non-personal conversations -- were associated with higher levels of loneliness but lower emotional dependence and problematic use at moderate usage levels. In contrast, non-personal conversations tended to increase emotional dependence, especially with heavy usage.

- User outcomes are influenced by personal factors, such as individuals' emotional needs, perceptions of AI, and duration of usage. The controlled study allowed us to identify other factors that may influence users' emotional well-being, although we cannot establish causation for these factors given the design of the study. People who had a stronger tendency for attachment in relationships and those who viewed the AI as a friend that could fit in their personal life were more likely to experience negative effects from chatbot use. Extended daily use was also associated with worse outcomes. These correlations, while not causal, provide important directions for future research on user well-being.

- Combining research methods gives us a fuller picture. Analyzing real-world usage alongside controlled experiments allowed us to test different aspects of usage. Platform data capture organic user behavior, while controlled studies isolate specific variables to determine causal effects. These approaches yielded nuanced findings about how users use ChatGPT, and how ChatGPT in turn affects them, helping to refine our understanding and identify areas where further study is needed.

"We are focused on building AI that maximizes user benefit while minimizing potential harms, especially around well-being and overreliance," OpenAI said in conclusion. "We conducted this work to stay ahead of emerging challenges -- both for OpenAI and the wider industry."

The MIT Media Lab's own paper, "How AI and Human Behaviors Shape Psychosocial Effects of Chatbot Use: A Longitudinal Randomized Controlled Study," reaches a similarly nuanced conclusion. In part, it said: "Our findings reveal that while longer daily chatbot usage is associated with heightened loneliness and reduced socialization, the modality and conversational content significantly modulate these effects."

As always, more research was advised.

About the Author

David Ramel is an editor and writer at Converge 360.