News

Execs Fear Ousting Due to Failed AI Projects: Survey

"CIOs and CDOs carry the weight of responsibility: 46% are most likely to be credited for AI gains, but over half, 56%, are most likely to be blamed for business losses due to failed AI," says Dataiku about its new survey-based report on AI. "With 60% fearing their own jobs are at risk if AI doesn't deliver measurable results within two years, the stakes for data leaders have never been higher."

Titled "Global AI Confessions Report: Data Leaders Edition," the report was announced today by the specialist in enterprise AI and machine learning platforms.

Along with that pressure on execs, highlights of the report as presented by Dataiku include:

- 80% say an accurate but unexplainable AI decision is riskier than a wrong but explainable one.

- 69% report that AI business suggestions are taken more seriously than human ones.

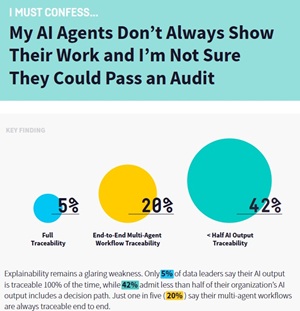

- Only 19% of data leaders always require AI agents to "show their work" before approval.

- 52% have delayed or completely blocked agent deployments due to explainability concerns.

- 73% say the C-suite underestimates the difficulty of achieving AI reliability prior to production.

- 58% of German data leaders worry AI-generated code vulnerabilities are a "disaster waiting to happen."

The study was conducted by The Harris Poll for Dataiku, surveying more than 800 senior data executives across the U.S., U.K., France, Germany, UAE, Japan, Singapore, and South Korea.

Here's a look at the survey, with more discussion around those six highlights listed by Dataiku.

Accurate but Unexplainable AI Decisions Seen as Riskier

Four in five data leaders say that an accurate but unexplainable AI decision is more dangerous than a wrong but traceable one. This perspective underscores the report's central finding that explainability has become an essential trust factor in enterprise AI.

Critical Decisions (source: Dataiku).

As the report puts it, "Four in five (80%) leaders deem an accurate but unexplained AI decision more dangerous than a wrong but traceable one." Dataiku said this reflects how trust and explainability have become critical risk factors for enterprise AI adoption.

AI Business Suggestions Taken More Seriously Than Human Ones

Nearly seven in ten (69%) data leaders report that AI-generated business suggestions are taken more seriously than human ones.

This finding reflects a deepening dependence on algorithmic insights in executive decision-making. The report attributes the imbalance to what it describes as a growing reliance on machine judgment over human judgment, with 62% of data leaders believing that AI can already provide more accurate analysis than their own teams. That reliance carries risk, however: 59% admit AI hallucinations or inaccuracies have already caused business issues within the past year.

Few Require AI Agents to 'Show Their Work'

Despite widespread use of AI, only 19% of data leaders always require AI agents to "show their work" before approval, and 52% have delayed or completely blocked agent deployments due to explainability concerns.

Show Your Work (source: Dataiku).

The report warns that this lack of transparency leaves organizations exposed to compliance, audit, and reputational risks.

Explainability Concerns Delay Deployments

Explainability gaps are also slowing down enterprise AI adoption. More than half (52%) of data leaders say they have delayed or completely blocked AI agent deployments due to transparency issues.

Dataiku indicated that such concerns are central to the growing hesitation about AI reliability. The report highlights that this slowdown often arises when data leaders demand clarity on how AI reaches conclusions before approving production use, underscoring that opacity remains a major operational bottleneck.

C-Suite Underestimates Difficulty of Reliable AI

Nearly three in four (73%) data leaders believe the C-suite underestimates how difficult it is to achieve reliable AI performance before production. The report describes this as a "dangerous gap between CEO ambition and enterprise reality," echoing earlier findings from Dataiku's "Global AI Confessions Report: CEO Edition."

Data leaders are far less confident than CEOs about their organizations' readiness, with 68% saying executives overestimate AI accuracy and only 39% believing their leaders truly understand the technology. This disconnect helps explain why many AI initiatives remain stuck in proof-of-concept phases.

AI-Generated Code Risks Seen as a 'Disaster Waiting to Happen'

Finally, 58% of German respondents say AI-generated code vulnerabilities are a "disaster waiting to happen." This concern is reflected throughout the report's discussion of "trust on trial," where 75% of data leaders express worries about reliability and oversight in AI deployments.

"An alarming revelation of the report is that enterprises worldwide are betting on AI they don't fully trust," said Florian Douetteau, co-founder and CEO of Dataiku. "The good news is that most failed AI initiatives suffer from common blockers that can be overcome with more explainability, traceability, and governance. That's how AI moves from hype to real business impact."

About the Author

David Ramel is an editor and writer at Converge 360.