How-To

Using Graphics Cards with Virtual Machines

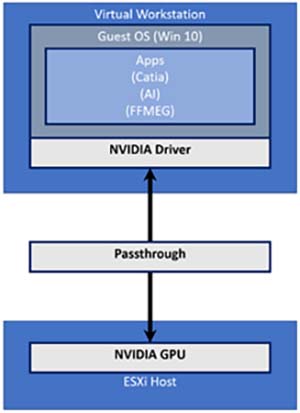

VMware has supported the use of physical GPUs in virtual machines (VMs) since View 5.3 by allowing a GPU to either be dedicated to a single VM with Virtual Dedicated Graphics Acceleration (vDGA) or shared among many VMs with Virtual Shared Graphics Acceleration (vSGA). Offloading graphics work to a GPU lets users process it more efficiently, which is why industries such as aerospace, energy, medical, and media and entertainment have come to embrace the use of VMs with GPUs over the years.

I recently had a powerful workstation with a GPU in it, and I needed to use it both as an ESXi server and as a means to complete some heavy-duty graphic design work. By passing the GPU through to a vDGA VM, I effectively created a very powerful virtual workstation that could be delivered using VMware Horizon. In this article, I will show you how I did this.

The system I used was an Intel NUC 9 Pro (NUC9VXQNX) with an eight-core Xeon processor. It originally came with 32GB of RAM, a 1TB Intel Optane Memory H10 with a solid-state storage device, and a discrete NVIDIA Quadro P2200 graphics card. However, I upgraded the system to 64GB of RAM and added two Kioxia (formerly known as Toshiba Memory) 1TB XG6 (KXG60ZNV1T02) devices into the system's empty NVMe slots.

Intel lists ESXi as a supported operating system for the NUC 9, and I didn't have any issues installing ESXi 6.7 U3 on the NUC 9 and connecting it to my vSphere environment. All the system's storage and networking devices were also detected without any issues. Note that the NUC 9 was not on the VMware HCL for servers and the NVIDIA P2200 was not on the VMware vDGA HCL; the NVIDIA P2000 was, however, and ESXi found the P2200 without any issues.

To verify that the GPU had been seen by the hypervisor, I SSHed into the ESXi host and entered lspci | grep -i nvidia. This reported that I had NVIDIA graphics and audio devices.
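
The check above can be wrapped in a pair of small shell helpers. This is only a sketch: the function names are my own, and it assumes the host's lspci output mentions "NVIDIA" on each relevant line, as it did in my case.

```shell
# Hypothetical helpers (names are illustrative, not part of ESXi).
# Both read lspci-style output from stdin.

# Print only the lines that mention NVIDIA (case-insensitive).
# Usage on the ESXi host: lspci | find_nvidia_devices
find_nvidia_devices() {
    grep -i 'nvidia'
}

# Print how many lines mention NVIDIA. On my system I expected two:
# the graphics device and its audio device.
# Usage on the ESXi host: lspci | count_nvidia_devices
count_nvidia_devices() {
    grep -ci 'nvidia'
}
```

If the count comes back as 0, the hypervisor has not seen the card, and there is no point in continuing to the passthrough steps.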

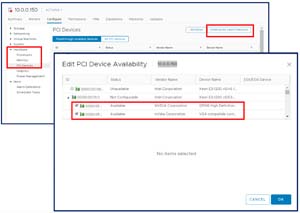

To use the GPU as a passthrough device on the ESXi host, I logged on to the vSphere Client, selected the NUC 9 Pro host, and clicked the Configure tab. Then, from the Hardware drop-down menu, I selected PCI Devices. In the upper-right corner, I clicked Configure Passthrough. After opening the Edit PCI Device Availability pane, I located the NVIDIA devices and selected the checkboxes for the GP106 audio controller and the VGA controller, and then clicked OK.

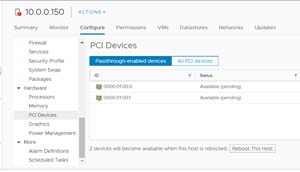

After selecting the Passthrough-enabled devices tab and verifying that the PCI devices were shown, I rebooted the ESXi host.

Once the ESXi host had finished rebooting, I verified that the GPU was configured for passthrough from the SSH connection to the ESXi host by entering esxcli hardware pci list | less and searching for the GPU. The output indicated that the device was being used as a passthru device.
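
Rather than paging through the full listing, the output can be filtered down to the NVIDIA entries. The sketch below is an assumption-laden convenience, not an official tool: the function name is made up, and it assumes each device record contains an "Address:" line, then a "Vendor Name:" line, followed by lines containing "Owner:" or "Passthru". Check these field names against what your own host prints.

```shell
# Hypothetical helper: reads `esxcli hardware pci list` style output on stdin
# and prints, for each NVIDIA device, its address and any owner/passthru fields.
# ASSUMPTION: "Address:" and "Vendor Name:" precede the owner lines in a record.
# Usage on the ESXi host: esxcli hardware pci list | show_nvidia_passthru
show_nvidia_passthru() {
    awk '
        # A new record starts: remember its address and reset the flag.
        /^[[:space:]]*Address:/     { addr = $2; keep = 0 }
        # Only keep records whose vendor name mentions NVIDIA.
        /^[[:space:]]*Vendor Name:/ { keep = (tolower($0) ~ /nvidia/)
                                      if (keep) print "Device " addr }
        # For kept records, show the ownership and passthru lines.
        keep && /Owner:|Passthru/   { sub(/^[[:space:]]+/, ""); print "  " $0 }
    '
}
```

After the reboot, the owner lines for both the GPU and its audio function should indicate passthru rather than the VMkernel.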

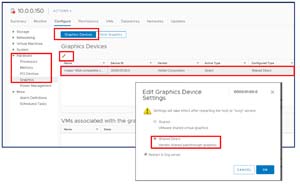

Next, I went back to the vSphere Client and selected Graphics from the Configure screen. Then, I selected the GPU, clicked the pencil (edit) icon, selected the Shared Direct checkbox, and then clicked OK.

I created a VM that had 4 vCPUs, 32GB of RAM, and a 128GB hard disk. I installed Windows 10 Pro on it and then the NVIDIA Quadro P2200 driver. Before rebooting the system, I entered sysdm.cpl at the Windows 10 Run prompt and configured the system for remote access, then powered off the VM. Configuring the system for remote access is very important because once the VM has a GPU attached to it, you will no longer be able to use VMware Remote Console to access the VM; instead, you will need to use RDP or Horizon to access it.

I associated the GPU with the VM by editing the settings for the VM. From the Add new device drop-down menu, I selected PCI device; this automatically added the NVIDIA card to the VM.

I expanded New PCI device, and verified that the GPU and audio controller were both shown.
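
The same check can be made from the ESXi shell by looking at the VM's configuration file once the settings have been saved. This is a sketch under assumptions: the function names are hypothetical, the .vmx path is a placeholder you would need to supply, and it assumes passthrough devices are recorded under keys beginning with pciPassthru.

```shell
# Hypothetical helpers for inspecting a VM's .vmx file.
# ASSUMPTION: passthrough devices appear as pciPassthruN.* keys.

# Print every pciPassthru entry in the given .vmx file.
# Usage: list_passthru_entries /vmfs/volumes/<datastore>/<vm>/<vm>.vmx
list_passthru_entries() {
    grep -i '^pciPassthru' "$1"
}

# Print the number of passthrough devices marked as present.
# For the GPU plus its audio controller, I would expect 2.
count_passthru_devices() {
    grep -ci '^pciPassthru[0-9]*\.present *= *"TRUE"' "$1"
}
```

A count lower than expected suggests that one of the two NVIDIA functions was not added to the VM.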

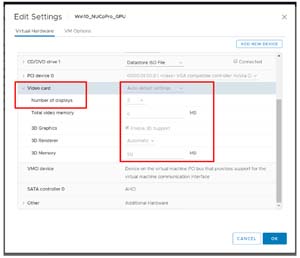

From the Video card drop-down menu, I selected Auto-detect settings, checked the Enable 3D Support box, and indicated Automatic for 3D Rendering.

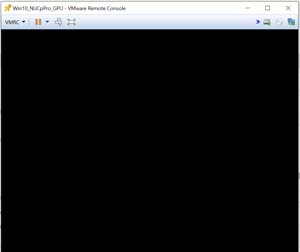

When I powered on the VM and launched a web or remote console to the VM, I only saw a black screen; this was a good sign and to be expected as it indicated that the VM was using the GPU rather than VMware graphics.

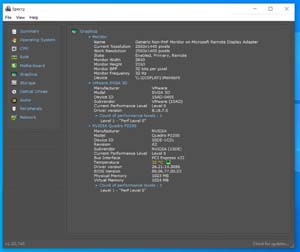

I closed the remote console window and then used RDP to connect to the VM. I launched Speccy, and under Graphics it showed that the NVIDIA Quadro P2200 card was being used.

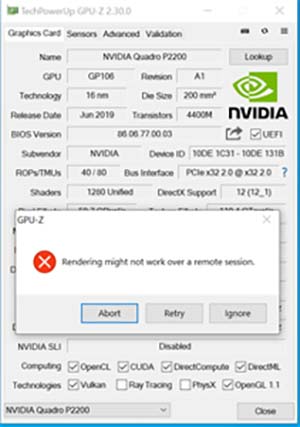

I then entered dxdiag at the command prompt and clicked the Display tab, which showed that Microsoft Display Adapter was being used with the WDDM 1.3 driver. The WDDM 1.3 driver is very old, and not all applications can be used with it. For example, when I tried to run the GPU-Z render test, I got a message that the test might not work.

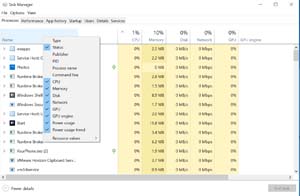

I opened Task Manager, clicked More Details, right-clicked the CPU column header, and selected GPU to see the GPU activity.

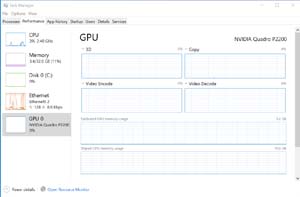

When I selected the Performance tab, the GPU was then shown.

Applications such as ControlUp Console can monitor the GPU activity remotely.

Conclusion

In this article, I discussed a few of the different options available to make a VM GPU-enabled, and then showed you how to create a vDGA VM. In the next article, I will show you how to add a vDGA VM to Horizon and run a few tests on it to see how well it performs.

About the Author

Tom Fenton has a wealth of hands-on IT experience gained over the past 30 years in a variety of technologies, with the past 20 years focusing on virtualization and storage. He previously worked as a Technical Marketing Manager for ControlUp. He also previously worked at VMware in Staff and Senior level positions. He has also worked as a Senior Validation Engineer with The Taneja Group, where he headed the Validation Service Lab and was instrumental in starting up its vSphere Virtual Volumes practice. He's on X @vDoppler.