How-To

Pass Through an iGPU to a Virtual Machine

Tom walks through his experience trying to get a virtual machine to be able to use an integrated GPU, and then do the same thing with a Horizon desktop.

The other day, I noticed that William Lam (@lamw) had tweeted about how he was able to use the integrated Intel UHD Graphics 620 GPU in a virtual machine (VM) hosted on a NUC 10 running ESXi. This is an interesting proposition: I have a couple of systems that also have integrated GPUs (iGPUs), and it would be beneficial if a VM could use this otherwise idle resource. The secret appears to be enabling passthrough in the ESXi host client rather than in the vSphere Client. In this article, I will walk you through my experience trying to get an iGPU to work with a VM, and then with VMware Horizon.

William was using an Intel NUC10i7FNH, whose CPU includes UHD Graphics 620 with 24 execution units (EUs). For this article, I will be using an Intel NUC8i7BEH, whose CPU has a far more powerful iGPU: an Iris Plus Graphics 655 with 48 EUs.

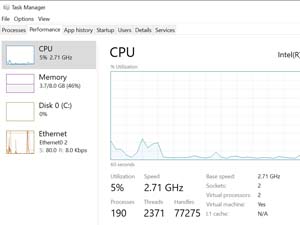

The Intel NUC8i7BEH that I will be using has an Intel i7-8559U CPU, which is a 64-bit quad-core x86 mobile microprocessor. It supports Hyper-Threading Technology, which allows a total of eight threads to run at any given moment. The processor has a base frequency of 2.7GHz, a Turbo Boost frequency of up to 4.5GHz, and a Thermal Design Power (TDP) of 28W. It supports up to 32GB of dual-channel DDR4-2400 memory. The integrated Intel Iris Plus Graphics 655 GPU has a base frequency of 300MHz and a burst frequency of 1.2GHz. It has 128MB of eDRAM L4 cache and 48 EUs, and can drive up to three displays (one through the HDMI port [4K @ 24Hz] and two through the Thunderbolt 3 connector [4K @ 60Hz]). The CPU and GPU share the TDP and RAM.
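
To put those numbers in perspective, here is a small back-of-the-envelope calculation of the thread count and a theoretical FP32 peak for the Iris Plus Graphics 655. The spec values come from the paragraph above; the figure of 16 FP32 operations per EU per clock is an assumption (two SIMD-4 FMA units per EU, with each FMA counted as two operations), and real-world throughput will be lower because the GPU shares its power budget and memory with the CPU.

```python
# Values from the spec paragraph above, except FLOPS_PER_EU_PER_CLOCK,
# which is an assumption (two SIMD-4 FMA units per EU, FMA = 2 operations).
CORES = 4
THREADS_PER_CORE = 2            # Hyper-Threading
IRIS_PLUS_655_EUS = 48
UHD_620_EUS = 24
IRIS_PLUS_655_BURST_GHZ = 1.2
FLOPS_PER_EU_PER_CLOCK = 16     # assumed: 2 units x 4 lanes x 2 ops per FMA


def peak_gflops(eus: int, clock_ghz: float) -> float:
    """Theoretical FP32 peak; ignores the shared TDP and memory bandwidth."""
    return eus * FLOPS_PER_EU_PER_CLOCK * clock_ghz


threads = CORES * THREADS_PER_CORE
eu_ratio = IRIS_PLUS_655_EUS / UHD_620_EUS
iris_peak = peak_gflops(IRIS_PLUS_655_EUS, IRIS_PLUS_655_BURST_GHZ)

print(f"Hardware threads: {threads}")                               # 8
print(f"EUs, Iris Plus 655 vs UHD 620: {eu_ratio:.0f}x")            # 2x
print(f"Iris Plus 655 FP32 peak at burst: {iris_peak:.1f} GFLOPS")  # 921.6
```

The 2x comparison is per clock only, since the burst frequency of the UHD 620 in William's NUC is not given here.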

I looked at my NUC system using the vSphere client and saw the iGPU listed, but it was marked as Unavailable.

I logged in to the ESXi host client for the NUC as root, clicked Manage, selected the Hardware tab, and then selected PCI Devices. The iGPU was listed, but passthrough was disabled for it. I selected its checkbox and clicked Toggle Passthrough, and was informed that I would need to reboot the system for the change to take effect.

The status in the Passthrough column had changed to Enabled / Needs reboot.

I rebooted the NUC and then logged back in to the ESXi host client. The Passthrough column now showed the iGPU as Active.

I went back to the vSphere Client and the device was shown as Available.

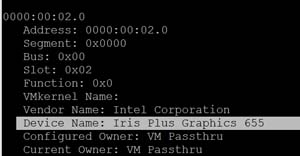

I was interested to see what the GPU looked like from the hypervisor's command line, so I SSHed into the ESXi host and entered `lspci | grep -i Iris`, and then `esxcli hardware pci list | less`. The output showed that the host could see the iGPU and that the device was being used for Passthru.
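
Paging through `esxcli hardware pci list` by hand works, but the output is regular enough to filter programmatically. The sketch below splits that kind of output into one dictionary per device and does a case-insensitive match on the device name, much like `grep -i Iris`. The sample text is made up to imitate the layout, and the field names (`Address`, `Vendor Name`, `Device Name`, `Passthru Capable`) are assumptions to check against your own host's output.

```python
import re
from typing import Dict, List

# Made-up excerpt that imitates the layout of `esxcli hardware pci list`;
# real output lists many more fields per device.
SAMPLE = """\
0000:00:00.0
   Address: 0000:00:00.0
   Vendor Name: Intel Corporation
   Device Name: Host bridge
   Passthru Capable: false

0000:00:02.0
   Address: 0000:00:02.0
   Vendor Name: Intel Corporation
   Device Name: Iris Plus Graphics 655
   Passthru Capable: true
"""


def parse_pci_list(text: str) -> List[Dict[str, str]]:
    """Return one dict of "Key: Value" fields per blank-line-separated device block."""
    devices = []
    for block in re.split(r"\n\s*\n", text.strip()):
        fields = {}
        for line in block.splitlines():
            key, sep, value = line.strip().partition(": ")
            if sep:  # the bare address header line has no ": " and is skipped
                fields[key] = value
        if fields:
            devices.append(fields)
    return devices


def find_devices(devices: List[Dict[str, str]], needle: str) -> List[Dict[str, str]]:
    """Case-insensitive substring match on Device Name, similar to grep -i."""
    needle = needle.lower()
    return [d for d in devices if needle in d.get("Device Name", "").lower()]


for dev in find_devices(parse_pci_list(SAMPLE), "iris"):
    print(dev["Address"], dev["Device Name"], "| Passthru Capable:", dev["Passthru Capable"])
```

To try it against real data, you could redirect the command's output to a file on the host, copy it to a machine with Python, and pass the file's contents to `parse_pci_list`.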

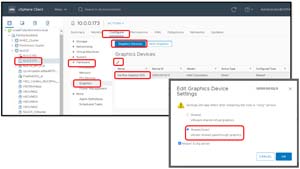

I went back to the vSphere Client, selected the ESXi host, and navigated to Hardware > Graphics Devices on the Configure tab. I selected the iGPU, clicked the pencil (edit) icon, selected Shared Direct, and clicked OK.

I created a VM with two vCPUs, 8GB of RAM, and a 128GB hard disk, and installed Windows 10 Pro on it. When I powered it on, Speccy showed that it was using VMware SVGA 3D graphics.

I associated the iGPU with the VM by editing the VM's settings. From the Add new device drop-down menu, I selected PCI device; this automatically added the iGPU to the VM.

From the Video card drop-down menu, I selected Auto-detect settings, checked the Enable 3D Support box, and selected Automatic under the 3D Renderer drop-down menu.

When I tried to power on the VM, I got the following message: "Invalid memory setting: memory reservation (sched.mem.min) should be equal to memsize (8192)." I also received a message saying that the CPU needed to be reserved.

I went into the VM’s settings, expanded Memory, and selected Reserve all guest memory. I also reserved the CPU cycles.
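
The memory error is essentially a comparison of two .vmx settings: the memory reservation `sched.mem.min` and the configured memory `memsize`, both in MB, so an 8GB VM needs a reservation of 8192. The sketch below reproduces that check offline to show why Reserve all guest memory clears the error. The sample file contents, the `pciPassthru0.present` key, and the helper names are assumptions for illustration; the supported fix is the vSphere Client setting described above, not hand-editing the .vmx file.

```python
import re
from typing import Dict, Optional

# Hypothetical .vmx excerpt for the 8GB, two-vCPU VM with one passthrough
# device and no memory reservation yet. Key names are assumptions to check
# against your own .vmx file.
SAMPLE_VMX = """\
numvcpus = "2"
memsize = "8192"
pciPassthru0.present = "TRUE"
sched.mem.min = "0"
"""

VMX_LINE = re.compile(r'^\s*([\w.:]+)\s*=\s*"(.*)"\s*$')


def parse_vmx(text: str) -> Dict[str, str]:
    """Collect key = "value" lines; anything else (comments, blanks) is ignored."""
    settings = {}
    for line in text.splitlines():
        match = VMX_LINE.match(line)
        if match:
            settings[match.group(1)] = match.group(2)
    return settings


def reservation_problem(settings: Dict[str, str]) -> Optional[str]:
    """Mimic the power-on check: with passthrough, sched.mem.min must equal memsize (MB)."""
    has_passthru = any(
        key.lower().startswith("pcipassthru")
        and key.lower().endswith(".present")
        and value.upper() == "TRUE"
        for key, value in settings.items()
    )
    memsize = int(settings.get("memsize", "0"))
    reserved = int(settings.get("sched.mem.min", "0"))
    if has_passthru and reserved != memsize:
        return (f"memory reservation (sched.mem.min) should be equal to "
                f"memsize ({memsize}); found {reserved}")
    return None


before = parse_vmx(SAMPLE_VMX)
after = {**before, "sched.mem.min": before["memsize"]}  # like Reserve all guest memory
print(reservation_problem(before))
print(reservation_problem(after))  # None
```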

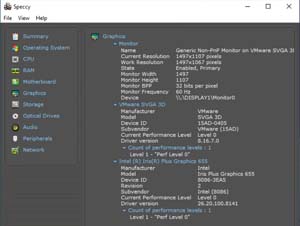

I was then able to power up and log in to the VM. Speccy showed that the iGPU was attached to the VM.

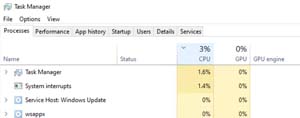

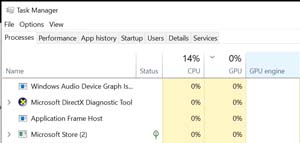

I was able to add the GPU and GPU engine columns to Windows Task Manager, but they did not report any usage.

GPU-Z showed two GPUs: VMware SVGA and the iGPU.

Adding the iGPU VM to Horizon

Once I was able to use the iGPU in a VM, I wanted to see if I could also use it in a Horizon desktop.

I logged in to my Horizon Console (version 7.12), created a manual floating desktop pool that supported two 4K monitors, enabled automatic 3D rendering, set the VRAM size to 512MB, and then added the iGPU VM to the pool.
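
As a rough sanity check on the 512MB setting, the sketch below computes how much memory the raw framebuffers for two 4K monitors need. It assumes 32-bit color and a single buffer per display; 3D rendering also uses video memory for other surfaces, so this is only a lower bound and not a description of how Horizon actually allocates VRAM.

```python
# Back-of-the-envelope framebuffer sizing for the pool settings above.
# Assumptions: 32-bit color (4 bytes per pixel) and one buffer per display.
WIDTH, HEIGHT = 3840, 2160   # 4K UHD
BYTES_PER_PIXEL = 4
MONITORS = 2
POOL_VRAM_MB = 512


def framebuffer_mb(width: int, height: int, bytes_per_pixel: int = BYTES_PER_PIXEL) -> float:
    """Size of one uncompressed frame in MB (1MB = 2**20 bytes here)."""
    return width * height * bytes_per_pixel / 2**20


per_monitor = framebuffer_mb(WIDTH, HEIGHT)
total = per_monitor * MONITORS
print(f"One 4K frame: {per_monitor:.1f} MB")                       # 31.6
print(f"{MONITORS} monitors: {total:.1f} MB of {POOL_VRAM_MB} MB")  # 63.3
```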

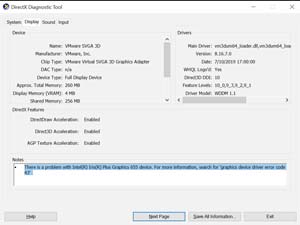

I used the Horizon Client (version 5.4) to connect to the iGPU desktop over the Blast protocol, and was able to log in to the desktop without any issues. I ran dxdiag.exe from the command line; its output showed that the desktop was using the VMware SVGA driver, and the Notes field contained a message about a problem with the iGPU.
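
Instead of reading the dxdiag window by hand, you can save a text report with `dxdiag /t <file>` and scan it. The sketch below pulls out the `Card name:` lines and any Display Tab note other than "No problems found." The sample report is made up to imitate the layout of a dxdiag text report; the exact field names and the placeholder note text are assumptions to verify against your own report.

```python
import re
from typing import Dict, List, Tuple

# Made-up excerpt imitating a report saved with `dxdiag /t dxdiag.txt`;
# the note text on Display Tab 2 is a placeholder, not real dxdiag output.
SAMPLE_REPORT = """\
---------------
Display Devices
---------------
           Card name: VMware SVGA 3D
        Manufacturer: VMware, Inc.

           Card name: Intel(R) Iris(R) Plus Graphics 655
        Manufacturer: Intel Corporation

------------
DxDiag Notes
------------
      Display Tab 1: No problems found.
      Display Tab 2: (placeholder problem description)
"""

CARD_NAME = re.compile(r"^\s*Card name:\s*(.+?)\s*$", re.MULTILINE)
DISPLAY_NOTE = re.compile(r"^\s*(Display Tab \d+):\s*(.+?)\s*$", re.MULTILINE)


def summarize(report: str) -> Dict[str, List]:
    """Return the display adapters and any display notes that report a problem."""
    cards = CARD_NAME.findall(report)
    problems: List[Tuple[str, str]] = [
        (tab, note) for tab, note in DISPLAY_NOTE.findall(report)
        if note != "No problems found."
    ]
    return {"cards": cards, "problems": problems}


summary = summarize(SAMPLE_REPORT)
print("Adapters:", summary["cards"])
print("Problems:", summary["problems"])
```

For a real report, read the saved file (for example with `open(path, errors="replace").read()`) and pass the text to `summarize`.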

I was able to add the GPU and GPU engine columns to Task Manager, but they were not reporting any usage.
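
Windows 10 also exposes per-engine GPU utilization through the `GPU Engine` performance counters, which can serve as a command-line cross-check on Task Manager, for example `typeperf "\GPU Engine(*)\Utilization Percentage" -sc 1`. The sketch below sums the last sample per engine type from that kind of CSV output. The sample CSV is made up, the `engtype_<name>` suffix in instance names is an assumption, and whether the guest's display drivers publish these counters at all was not tested in this article.

```python
import csv
import io
import re
from collections import defaultdict
from typing import Dict, List

# Made-up CSV in the shape typeperf writes for a command such as:
#   typeperf "\GPU Engine(*)\Utilization Percentage" -sc 1
# Instance names are shortened; the "engtype_<name>" suffix is an assumption.
SAMPLE_CSV = r'''"(PDH-CSV 4.0)","\\VM\GPU Engine(pid_100_eng_0_engtype_3D)\Utilization Percentage","\\VM\GPU Engine(pid_200_eng_0_engtype_3D)\Utilization Percentage","\\VM\GPU Engine(pid_100_eng_1_engtype_VideoDecode)\Utilization Percentage"
"05/01/2020 10:00:00.000","0.000000","1.500000","0.000000"
'''

ENGTYPE = re.compile(r"engtype_([^)]+)\)")


def _is_sample(row: List[str], width: int) -> bool:
    """A data row has the header's width and numeric (or empty) counter values."""
    if len(row) != width:
        return False
    try:
        [float(v) for v in row[1:] if v.strip()]
    except ValueError:
        return False
    return True


def utilization_by_engine(csv_text: str) -> Dict[str, float]:
    """Sum the last sample's utilization per engine type across all processes."""
    rows = list(csv.reader(io.StringIO(csv_text)))
    header = next((r for r in rows if r and r[0].startswith("(PDH-CSV")), None)
    if header is None:
        return {}
    samples = [r for r in rows if _is_sample(r, len(header))]
    if not samples:
        return {}
    totals: Dict[str, float] = defaultdict(float)
    for column, value in zip(header[1:], samples[-1][1:]):
        match = ENGTYPE.search(column)
        if match and value.strip():
            totals[match.group(1)] += float(value)
    return dict(totals)


print(utilization_by_engine(SAMPLE_CSV))
```

The `_is_sample` filter skips typeperf's status lines, such as the message it prints when it exits, so the raw command output can be passed in unmodified.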

The Performance tab did not show a GPU graph.

Conclusion

Although I was able to pass through my iGPU to a VM and a Horizon desktop, neither actually used it. This does not mean, however, that other software will be unable to use the iGPU; for instance, other OSes (such as Linux) that use X11 for remote display may be able to use an iGPU passed through to a VM.