
There's a New Job in Town: Prompt Engineer (to the Tune of $335K/Year)

Jobs for the new specialty role of prompt engineering are being advertised for up to $335,000 per year.

The role has emerged largely thanks to the advent of cutting-edge generative AI systems from OpenAI based on its GPT-3 series of large language models (LLMs). They include:

- ChatGPT, an advanced chatbot using natural language processing, or NLP

- Codex, a GPT-3 descendant optimized for coding by being trained on both natural language and billions of lines of code

- DALL-E 2, an AI system that can create realistic images and art from a natural language description

They were created in part thanks to heavy funding from OpenAI partner Microsoft.

In fact, OpenAI tech powers Microsoft's "new Bing" search experience: the search engine now runs on a new, next-generation OpenAI LLM that Microsoft said is more powerful than ChatGPT and customized specifically for search.

Instead of just supplying a list of resources that mention the search terms, the new Bing invites users to chat with it, providing answers in a conversational tone thanks to its NLP capabilities.

So we chatted with Bing, asking about the job description for a prompt engineer. It replied:

A prompt engineer is a professional who works on developing and refining artificial intelligence (AI) models using prompt engineering techniques(1). Prompt engineering involves designing and crafting natural language prompts that are used to generate responses from AI models such as language models or chatbots(2). A prompt engineer's job is to identify the AI's errors and hidden capabilities so developers can address them(3)(4).

Some of the tasks that a prompt engineer may perform are:

- Converting one or more tasks to a prompt-based dataset and training a language model with what has been called "prompt-based learning" or just "prompt learning"(2).

- Developing models to improve the prompts that are input into generative models, and models that incorporate and learn from human feedback(5).

- Testing AI chatbots with different types of prose to find out their strengths and weaknesses(3)(4).

- Responding in a courteous and prompt manner to all guest questions and/or concerns (if working in a hospitality domain)(6).

Bing also said: "Prompt engineering is one of the hottest jobs in AI right now(1). It involves designing and fine-tuning prompts for large language models like ChatGPT that can generate text for various purposes."

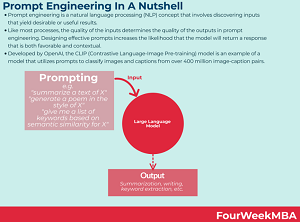

Prompt Engineering (source: FourWeekMBA).

A regular, non-AI-assisted Google search for prompt engineer job postings turned up much the same results, topped salary-wise by a Prompt Engineer and Librarian position advertised by Anthropic, whose mission is to create reliable, interpretable, and steerable AI systems. With an expected salary range of $175,000 to $335,000, the position lists these representative projects:

- Discover, test, and document best practices for a wide range of tasks relevant to our customers.

- Build up a library of high quality prompts or prompt chains to accomplish a variety of tasks, with an easy guide to help users search for the one that meets their needs.

- Build a set of tutorials and interactive tools that teach the art of prompt engineering to our customers.

That job is also listed on the Indeed careers site, along with other job postings that mention "prompt engineering."

The LinkedIn site, meanwhile, already returns more than 250,000 postings for a "prompt engineer" search in the United States, which seems like an absurdly large number for such a nascent job role.

And the SimplyHired site lists "prompting LLMs" as a duty for a "Head of AI" position for $235,800 per year.

The Upwork site, for contract positions, lists an openai.com GPT-3 Prompt Engineer for up to $149 per hour.

The list goes on, but the space is clearly exploding.

Maybe not for long, though. Generative AI must surely be near the top of the hype cycle, and it will likely eventually fall into Gartner's "trough of disillusionment," wherein "interest wanes as experiments and implementations fail to deliver. Producers of the technology shake out or fail. Investments continue only if the surviving providers improve their products to the satisfaction of early adopters."

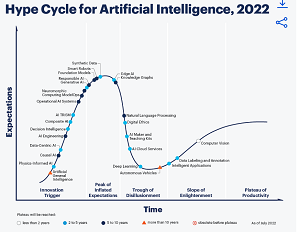

2022 AI Gartner Hype Cycle (source: Gartner).

As can be seen in the graphic above, "generative AI" was just nearing the "peak of inflated expectations" as of July 2022, while NLP was plummeting into the trough. Prompt engineering will likely follow the same path.

When asked about the new specialty role, Dr. James McCaffrey of Microsoft Research, a pre-eminent data scientist who writes the Data Science Lab articles for sister publication Visual Studio Magazine, told Virtualization & Cloud Review, "The field of prompt engineering is new and it's not clear to me or any of the colleagues I've spoken to if a distinct new job of Prompt Engineer will emerge, or if prompt engineering techniques will become part of the personal toolkit of existing job roles such as Data Scientist. I'm inclined to think the latter -- prompt engineering seems to be a set of guidelines and heuristics rather than a set of concrete, discrete skills like those associated with writing program code."

What's more, McCaffrey said, "One of the issues with prompt engineering at this point in time is that we really don't understand exactly how large language models work. It's possible that prompt engineering is needed only because current LLMs aren't quite powerful enough to understand arbitrary input in arbitrary order. As LLMs increase in size, it's possible that the need for prompt engineering will go away. I think that's unlikely, but it's quite possible that the need for explicit prompt engineering may decrease over time."

So if you have the requisite skills, you'd better jump on the bandwagon fast.

Who knows, OpenAI may at this very moment be designing an LLM to help engineer effective prompts for LLMs.

About the Author

David Ramel is an editor and writer at Converge 360.