News

Commercial AI Pushes Receiving More Blowback

Is the rush to capitalize on AI advancements with commercial products pushing things too far, too fast?

While large language models (LLMs) that back products like the revolutionary ChatGPT are making tremendous technological strides, there are increasing complaints about big-money industry players co-opting formerly research-based efforts, along with calls to slow down on creating more advanced models.

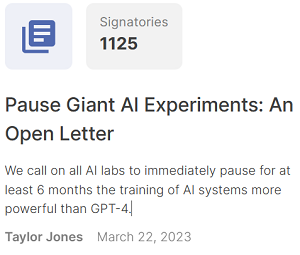

Regarding the latter, an open letter signed by major industry players including Elon Musk and Steve Wozniak is calling to Pause Giant AI Experiments.

Published on the Future of Life Institute site, the open letter originally penned by Taylor Jones on March 22 has 1,125 signatories as of this writing.

Future of Life Open Letter (source: Future of Life Institute).

"We call on all AI labs to immediately pause for at least 6 months the training of AI systems more powerful than GPT-4," the letter states.

GPT-4 is the latest and most advanced LLM from Microsoft partner OpenAI. It backs Microsoft's "new Bing" search bot and other advanced AI systems, including Microsoft's Azure OpenAI Service and GitHub Copilot, an "AI pair programmer" from Microsoft-owned GitHub. GPT-4 will likely soon replace GPT-3.5 as the backing tech of ChatGPT, if it hasn't already.

Released just a couple of weeks ago, GPT-4 "Surpasses ChatGPT in Its Advanced Reasoning Capabilities," according to OpenAI.

The open letter signatories say AI labs and independent experts should pause AI advancement to jointly develop and implement a set of shared safety protocols for advanced AI design and development that are rigorously audited and overseen by independent outside experts.

With contemporary AI systems now becoming human-competitive at general tasks, the letter says we must ask ourselves:

- Should we let machines flood our information channels with propaganda and untruth?

- Should we automate away all the jobs, including the fulfilling ones?

- Should we develop nonhuman minds that might eventually outnumber, outsmart, obsolete and replace us?

- Should we risk loss of control of our civilization?

That last concern, of course, has been explored in entertainment for quite some time, famously popularized by the human astronaut's repeated commands to an AI supercomputer named HAL in the movie "2001: A Space Odyssey" to "Open the pod bay doors, HAL."

Even OpenAI itself is quoted in the letter to back its central premise, noting that the company has said, "At some point, it may be important to get independent review before starting to train future systems, and for the most advanced efforts to agree to limit the rate of growth of compute used for creating new models."

"We agree," the open letter said. "That point is now."

OpenAI has itself received some blowback for its switch from a research-based company to a profit-driven company that's providing advanced pre-release tech to Microsoft thanks to the latter's $10 billion-plus investments into the company, which would give Microsoft a 49 percent share of OpenAI.

In its 2015 debut, OpenAI famously said: "Our goal is to advance digital intelligence in the way that is most likely to benefit humanity as a whole, unconstrained by a need to generate financial return. Since our research is free from financial obligations, we can better focus on a positive human impact."

After the debut of GPT-4 earlier this month, some data scientists noted that OpenAI didn't disclose the technical details behind the latest iteration of its advanced AI system as it has in the past, and speculated that the company is switching from a research focus to a revenue-generating focus, a shift detailed in an article from The Verge. That article states that after OpenAI announced its latest language model, GPT-4, many in the AI community were disappointed by the lack of public information, saying "Their complaints track increasing tensions in the AI world over safety."

The article further quotes Ilya Sutskever, OpenAI's chief scientist and co-founder, as saying "we were wrong" about the company's past commitment to openly sharing research.

Mozilla.ai (source: Mozilla.ai).

The new wave of blowback against commercial-focused, for-pay AI has spawned several alternatives to constructs used by main actors like Microsoft and Google, including the brand-new Mozilla.ai startup from open source champion Mozilla, which created and maintains the Firefox web browser and much other open source software. Mozilla is putting up $30 million to fund a trustworthy, independent and open source AI ecosystem.

"We've learned that this coming wave of AI (and also the last one) has tremendous potential to enrich people's lives," said Mark Surman, president and executive director of the Mozilla Foundation, in a March 22 blog post. "But it will only do so if we design the technology very differently -- if we put human agency and the interests of users at the core, and if we prioritize transparency and accountability. The AI inflection point that we're in right now offers a real opportunity to build technology with different values, new incentives and a better ownership model."

The initial focus of Mozilla.ai is to foster the creation of tools to make generative AI safer and more transparent, along with people-centric recommendation systems as opposed to those that misinform or undermine people's well-being. See more about that in the Virtualization & Cloud Review article, "Mozilla.ai Startup Debuts for 'Trustworthy AI.'"

AI pushback is also occurring on several other fronts, from artists complaining about generative AI infringing on their markets, to image and video "deep fakes" already contributing to the fractionalization of U.S. society with fake but realistic-looking depictions of former President Trump being arrested as just one example.

There are also rising concerns about the new tech benefiting cyber criminals, with one report noting that hacking ChatGPT was "The Dark Web's Hottest Topic."

It remains to be seen how these different pushbacks will slow things down, if at all. Billions of dollars are at stake, which prompted Microsoft competitor Google to declare a "code red" amid last year's ChatGPT hype in order to quickly offer competing tech, like its new Bard search bot that was seemingly rushed out the door as an initial-stage experiment to at least put the search giant back in the conversation with Microsoft regarding AI-assisted search.

Both Microsoft and Google are also infusing AI tech into many of their other products beyond search, including email, writing and other components of office suites, further increasing the already gargantuan, perhaps existential concerns among many parties. Just yesterday, Microsoft announced a new predictive language chat tool for security experts called Microsoft Security Copilot.

Microsoft has also warned that it may cut off other search engines that use its internet-spanning search index data. The company doesn't want data procured by minor-league search engines to power AI functionality that rivals its "new Bing" search experience.

Nevertheless, Musk (ironically an original founder of OpenAI), Wozniak and other industry dignitaries are seemingly hopeful their call for an AI hiatus will be taken seriously.

"Humanity can enjoy a flourishing future with AI," the letter concludes. "Having succeeded in creating powerful AI systems, we can now enjoy an 'AI summer' in which we reap the rewards, engineer these systems for the clear benefit of all, and give society a chance to adapt. Society has hit pause on other technologies with potentially catastrophic effects on society [Examples include human cloning, human germline modification, gain-of-function research, and eugenics]. We can do so here. Let's enjoy a long AI summer, not rush unprepared into a fall."

About the Author

David Ramel is an editor and writer at Converge 360.