AI at Red Hat Summit: Automation, not Writing Papers, Emails or Poems

Open source champion Red Hat just concluded its annual summit, held in conjunction with the AnsibleFest event, which showcased a stark contrast between how the company is using advanced AI tech and how most others are.

That contrast was explained by Matthew Jones, Ansible Automation chief architect and distinguished engineer, in his AnsibleFest keynote address this week, during which Ansible Lightspeed was introduced. It is said to help enterprises infuse domain-specific AI from IBM Watson Code Assistant to make Ansible more accessible organization-wide and help close automation skills gaps.

"Generative AI is everywhere," Jones said. "But I cannot overstate how different our approach to all this has been. There are some really fun and interesting AI tools, with new ones popping up every day. There is no doubt about that. But Ansible Lightspeed with IBM Watson Code Assistant isn't about helping write term papers, or emails, or poems. This is AI purpose-built and applied for IT automation -- because our vision is to deliver a focused generative AI experience that saves organizations time and money."

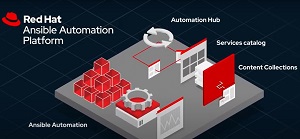

The Red Hat Ansible Automation Platform helps developers set up automation to provision, deploy and manage compute infrastructure across cloud, virtual and physical environments. Ansible Lightspeed, which, as noted, was announced at this week's summit, is a new AI-driven tool from Red Hat that aims to revolutionize IT automation. It simplifies automation by distilling complex processes into a sequence of actions based on user descriptions, which reduces the need for manual intervention.

Red Hat Ansible Automation Platform (source: Red Hat).

"Designed with developers and operators in mind, Ansible Lightspeed enables a significant productivity boost for Ansible users to input a straightforward English prompt while making it easier for users to translate their domain expertise into YAML code for creating or editing Ansible Playbooks," Red Hat said. "To help train the model, users can also provide feedback."
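To make the prompt-to-YAML idea concrete, here is a minimal hand-written sketch of the kind of playbook such a tool might help produce. It is not actual Ansible Lightspeed output. The `webservers` host group and the choice of nginx are assumptions for illustration, as is the idea that each task's plain-English `name` plays the role of the prompt.

```yaml
---
# Illustrative only: written by hand, not generated by Ansible Lightspeed.
- name: Configure web servers
  hosts: webservers          # hypothetical inventory group
  become: true
  tasks:
    # Assumed prompt: a plain-English description of the desired action
    - name: Install the nginx package
      ansible.builtin.package:
        name: nginx
        state: present

    # Assumed prompt for the follow-up step
    - name: Start and enable the nginx service
      ansible.builtin.service:
        name: nginx
        state: started
        enabled: true
```

The point of the sketch is that each task pairs a readable description with the module call that carries it out, which is the translation step Red Hat describes.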

Jones, in his keynote, said Ansible Lightspeed shapes the future of Red Hat's automation.

"As the extended Ansible community continues to train the model, the Red Hat engineering team will be working to evolve the Ansible Lightspeed offering to be available to meet the specific needs of individual customers, like custom models, custom rules with Ansible Risk Insights and custom policies," Jones said. "It's all about leveraging AI to make it easier to write automation code, and to give teams more clarity and context in the process."

Using natural language processing, Ansible Lightspeed will integrate with Watson Code Assistant to access IBM foundation models and quickly build automation code. The technology preview of Ansible Lightspeed with IBM Watson Code Assistant will reach general availability later this year.

Other AI tech was sprinkled throughout the event, as is customary at most tech events these days.

For example, the company announced new capabilities for Red Hat OpenShift AI, an AI-focused portfolio providing tools across the full lifecycle of AI/ML experiments and models, including Red Hat OpenShift Data Science. The company says it provides a consistent, scalable open source-based foundation for IT operations leaders while also offering a specialized partner ecosystem to data scientists and developers to innovate in AI.

"As Large Language Models (LLMs) like GPT-4 and LLaMA become mainstream, researchers and application developers across all domains and industries are exploring ways to benefit from these, and other foundation models," Red Hat said. "Customers can fine tune commercial or open source models with domain-specific data to make them more accurate to their specific use cases. The initial training of AI models is incredibly infrastructure intensive, requiring specialized platforms and tools even before serving, tuning and model management are taken into consideration. Without a platform that can meet these demands, organizations are often limited in how they can actually use AI/ML."

Recent enhancements to Red Hat OpenShift AI highlighted at this week's summit include:

- Deployment pipelines for AI/ML experiment tracking and automated ML workflows, which help data scientists and intelligent application developers iterate more quickly on machine learning projects and build automation into application deployment and updates.

- Model serving now includes GPU support for inference, along with custom model serving runtimes that improve inference performance and the deployment of foundation models.

- Model monitoring enables organizations to manage performance and operational metrics from a centralized dashboard.

Red Hat president and CEO Matt Hicks provided an overview of AI in general and specifically at the company in a post published yesterday titled "The moment for AI."

"At Red Hat Summit, that's what we are encouraging -- change," Hicks said after sharing his thoughts on AI in general. "Not just for the sake of it, but to help drive an innovation moment for your business. Maybe it will be built around Event-Driven Ansible, where you're able to free an IT team to open up new revenue streams. Or maybe you'll adopt Red Hat Trusted Software Supply Chain, and be able to push innovation even faster while retaining resiliency to supply chain vulnerabilities.

"What we present with Ansible Lightspeed and Red Hat OpenShift AI may be what you need to build your moment. The promise of domain-specific AI, in this case for IT automation, or a standardized foundation for consistent AI/ML model training could let you break into the benefits of AI that works for your organization."

About the Author

David Ramel is an editor and writer at Converge 360.