
Growing Chorus for AI Regulation: OpenAI, Microsoft, Google Join In

The biggest players in advanced AI are renewing previous calls for regulation, joining a worldwide chorus of concerns about the dangers of runaway tech.

Just within the past week, prominent industry figures from OpenAI, Microsoft and Google have made headlines by calling for oversight of the tech they are primarily responsible for unleashing on the world. In that same period, the Biden administration announced new efforts around responsible AI. All of that comes amid a worldwide groundswell of calls for the safe, responsible use of AI, driven by concerns ranging from deepfake confusion to the extermination of humanity.

While this movement gained traction with the debut of advanced large language models (LLMs) such as GPT-4, which back generative AI systems like ChatGPT, AI leader OpenAI is already looking further into the future, voicing concerns about the "Governance of superintelligence": future AI systems dramatically more capable than even Artificial General Intelligence (AGI). The latter refers to a hypothetical type of intelligent agent that can learn to do any intellectual task that human beings can do.

"In terms of both potential upsides and downsides, superintelligence will be more powerful than other technologies humanity has had to contend with in the past," OpenAI execs Sam Altman, Greg Brockman and Ilya Sutskever said. "We can have a dramatically more prosperous future; but we have to manage risk to get there. Given the possibility of existential risk, we can't just be reactive. Nuclear energy is a commonly used historical example of a technology with this property; synthetic biology is another example. We must mitigate the risks of today's AI technology too, but superintelligence will require special treatment and coordination."

Google and Alphabet CEO Sundar Pichai on May 22 penned a Financial Times article headlined "Google CEO: Building AI responsibly is the only race that really matters," in which he doubled down on his previous calls for AI regulation. "I still believe AI is too important not to regulate, and too important not to regulate well," he said.

Meanwhile, Microsoft president Brad Smith generated a host of headlines with a speech delivered yesterday (May 25) in Washington to an audience that included multiple members of Congress and civil society groups, as CNN reported in the article "Microsoft leaps into the AI regulation debate, calling for a new US agency and executive order."

"In his remarks, Smith joined calls made last week by OpenAI -- the company behind ChatGPT and that Microsoft has invested billions in -- for the creation of a new government regulator that can oversee a licensing system for cutting-edge AI development, combined with testing and safety standards as well as government-mandated disclosure rules," CNN reported.

On the same day, Microsoft published a related post titled "How do we best govern AI?," authored by Smith.

"As technological change accelerates, the work to govern AI responsibly must keep pace with it," Smith said. "With the right commitments and investments, we believe it can."

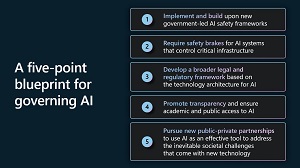

Smith pointed to the company's five-point blueprint for the public governance of AI.

Five-Point Blueprint for Governing AI (source: Microsoft).

"In this approach, the government would define the class of high-risk AI systems that control critical infrastructure and warrant such safety measures as part of a comprehensive approach to system management," Smith said. "New laws would require operators of these systems to build safety brakes into high-risk AI systems by design. The government would then ensure that operators test high-risk systems regularly to ensure that the system safety measures are effective. And AI systems that control the operation of designated critical infrastructure would be deployed only in licensed AI datacenters that would ensure a second layer of protection through the ability to apply these safety brakes, thereby ensuring effective human control."

And while all of the above comes from what are arguably three of the top leaders in the AI space, the U.S. government also piled on. On May 23 the White House published a fact sheet outlining new steps the administration has taken to advance responsible AI research, development and deployment. That fact sheet pointed to another blueprint, the Blueprint for an AI Bill of Rights, and listed three new announcements.

And the U.S. isn't the only nation-state addressing AI dangers, as Reuters reported on May 23 in "Governments race to regulate AI tools." "Rapid advances in artificial intelligence (AI) such as Microsoft-backed OpenAI's ChatGPT are complicating governments' efforts to agree laws governing the use of the technology," said the article, which lists efforts in countries ranging from Australia to Spain.

The U.S. is included too, and for it, Reuters listed:

- Senator Michael Bennet introduced a bill in April that would create a task force to look at U.S. policies on AI, and identify how best to reduce threats to privacy, civil liberties and due process.

- The Biden administration had said earlier in April it was seeking public comments on potential accountability measures for AI systems.

- President Joe Biden has also told science and technology advisers that AI could help to address disease and climate change, but it was also important to address potential risks to society, national security and the economy.

All of this shows that the industry continues to call for AI regulation to ensure responsible and safe usage. In fact, Microsoft, Google and OpenAI execs have all expressed similar concerns in the recent past, as detailed in the May 3 article, "Microsoft, Google, OpenAI Execs All Warn About AI Dangers."

Stay tuned to see what concrete initiatives arise from all the brouhaha.

About the Author

David Ramel is an editor and writer at Converge 360.