
Smarter Security with AI: Spotting Insider Threats

As AI powers both cyberattacks and defenses, the ability to spot subtle threats in vast digital noise is paramount. Leading the charge is AI's ability to monitor behavior intelligently, establishing baselines of normal activity to expose the aberrant patterns that signal an attack.

That approach was a key component of a presentation today by well-known IT expert and writer Brien Posey, who shared his knowledge in the session "AI-Powered Threat Detection: Crash Course on Modern DevSecOps," part of the summit titled "Next-Gen DevSecOps: Leveraging AI for Smarter Security," now available for on-demand viewing.

"AI models can analyze vast quantities of data, and they can pick up subtle things within that data," Posey said in describing one of the highlights of his presentation. He also covered other aspects of AI in cybersecurity and DevSecOps, including AI-powered phishing detection, its application in adaptive identity and access management for risk scoring, and the concerning rise of offensive AI used by attackers. He also stressed the crucial need for human oversight in AI-driven security systems.

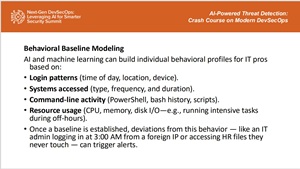

All those topics are too much to cover here, so we'll focus on the monitoring/detection aspects as applied to finding suspicious user and machine behavior; readers can watch the replay for the full range of advice. Featured among Posey's AI monitoring techniques is behavioral baseline modeling, an approach in which AI learns what constitutes normal user and machine activity in order to pinpoint deviations that signal potential threats.

Behavioral Baseline Modeling (source: Posey).

Let's take each of those bullet points and associate what Posey had to say about them:

- Login patterns (time of day, location, device): Posey noted, "Different users work in different ways, but it's important to determine what's normal for a given user, so that anything abnormal begins to stand out" in their login habits, whether it's 9 to 5 from a desk or frequently from a phone over the weekend.

- Systems accessed (type, frequency, and duration): Beyond just which systems are accessed (from email to sensitive databases), AI looks at "how often are those systems accessed, and when the user does access that system, what are they doing within there, and how, how much time do they spend doing that."

- Command-line activity (PowerShell, bash history, scripts): Posey highlighted monitoring whether a user "is normally someone who would work from PowerShell, or did they just all of a sudden start using PowerShell or bash or running scripts or things like that?"

- Resource usage (CPU, memory, disk I/O; for example, running intensive tasks during off-hours): AI tracks typical resource use because if a user suddenly shows spikes for a prolonged period, it "might reflect an abnormality that would warrant taking a closer look at what that user is doing," potentially indicating activities like downloading large datasets or trying to break encryption.

As Posey summarized, "once you've established a baseline of what's normal for a particular user, you can begin using AI to look for deviations from that baseline," citing the example of an IT pro unexpectedly logging in at 3:00 AM from a foreign IP to access HR files as something that "should trigger an alert and possibly a more aggressive action."
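To make the baseline-then-deviation idea concrete, here is a minimal Python sketch. It is not from Posey's presentation: the `LoginEvent` fields, the z-score threshold of 3.0, and the reason labels are all illustrative assumptions, and production UEBA tools use far richer models. It also treats the login hour as a plain number, which is a simplification because hours wrap around midnight.

```python
from dataclasses import dataclass
from statistics import mean, pstdev


@dataclass
class LoginEvent:
    """Hypothetical record of one session; field names are assumptions."""
    user: str
    hour: int            # local hour of day, 0-23
    country: str         # result of some geo-IP lookup
    cpu_percent: float   # average CPU use during the session


def build_baseline(history):
    """Summarize one user's past events into a simple statistical baseline."""
    hours = [e.hour for e in history]
    cpu = [e.cpu_percent for e in history]
    return {
        "hour_mean": mean(hours),
        "hour_std": pstdev(hours) or 1.0,   # avoid dividing by zero
        "cpu_mean": mean(cpu),
        "cpu_std": pstdev(cpu) or 1.0,
        "countries": {e.country for e in history},
    }


def deviations(event, baseline, z_threshold=3.0):
    """Return the reasons (if any) this event departs from the baseline."""
    reasons = []
    hour_z = abs(event.hour - baseline["hour_mean"]) / baseline["hour_std"]
    if hour_z > z_threshold:
        reasons.append("unusual login hour")
    if event.country not in baseline["countries"]:
        reasons.append("new location")
    cpu_z = (event.cpu_percent - baseline["cpu_mean"]) / baseline["cpu_std"]
    if cpu_z > z_threshold:   # only spikes above normal are flagged
        reasons.append("resource spike")
    return reasons


# Made-up history for a user who normally works 8 to 10 AM from one country.
history = [
    LoginEvent("alice", h, "US", c)
    for h, c in zip([9, 10, 9, 8, 10, 9], [10, 12, 11, 9, 10, 12])
]
baseline = build_baseline(history)

print(deviations(LoginEvent("alice", 9, "US", 11.0), baseline))  # → []
print(deviations(LoginEvent("alice", 3, "RO", 11.0), baseline))
# → ['unusual login hour', 'new location']
```

The second call mirrors the 3:00 AM foreign-IP example: no single rule names that exact scenario, but the event stands out because it falls far outside what this particular user has done before.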

Behavioral baseline modeling is just one technique for leveraging AI-powered monitoring/detection capabilities. For example, Posey also discussed:

- AI in Email and Phishing Protection: Leveraging AI to analyze message content and context for detecting phishing and BEC attempts.

- AI in Endpoint Detection and Response (EDR): Applying AI in EDR solutions for proactive threat blocking and quarantine.

- AI in Identity and Access Management (IAM): Utilizing AI for adaptive authentication and login risk scoring based on various signals.

- AI in Privileged Access Monitoring (PAM): Employing AI to assess the risk of access requests and detect privilege creep.

- Correlating Anomalies Across Data Sources: Using AI to link subtle signals across different logs and data sources to spot complex attacks.

- User and Entity Behavior Analytics (UEBA): Platforms that use AI for user/entity risk scoring and peer group comparison.

- AI-assisted SIEM/SOAR Automation: AI's role in log correlation, alert triaging, and recommending response actions.

- AI for Vulnerability Prioritization: AI-based scoring to prioritize patching based on risk factors.
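As a rough illustration of the login risk scoring mentioned in the IAM item above, the following Python sketch combines a few signals into a score and maps it to an action. The signal names, weights, and thresholds are hypothetical choices made for this example, not values from the session; a real adaptive authentication system would typically learn or tune them from data.

```python
# Hypothetical signal weights; chosen only for illustration.
SIGNAL_WEIGHTS = {
    "new_device": 25,
    "new_country": 35,
    "off_hours": 15,
    "sensitive_resource": 25,
}


def risk_score(signals):
    """Sum the weights of the signals that are present, capped at 100."""
    total = sum(
        SIGNAL_WEIGHTS.get(name, 0)
        for name, present in signals.items()
        if present
    )
    return min(100, total)


def decide(score):
    """Map a score to an action; the thresholds are assumptions."""
    if score >= 70:
        return "block"
    if score >= 30:
        return "require_mfa"
    return "allow"


# An off-hours login from a new country that touches sensitive files.
attempt = {"off_hours": True, "new_country": True, "sensitive_resource": True}
score = risk_score(attempt)
print(score, decide(score))  # → 75 block
```

The point of the graded response is that a single weak signal, such as an off-hours login on its own, results in no friction, while several signals together escalate to step-up authentication or a block.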

He also discussed broader aspects of the topic, including:

- The AI vs. AI Landscape: Both defenders and attackers are leveraging AI, creating an AI arms race in cybersecurity.

- Offensive AI Use Cases: Examples of how cybercriminals are using AI, such as generating deepfakes, creating AI-powered malware, and enhancing reconnaissance.

- Challenges and Risks of AI in Cybersecurity: Potential issues such as false positives, model poisoning, data privacy and ethical concerns, regulatory implications, and over-reliance on AI reducing human oversight.

- Miscellaneous Considerations and Future Possibilities: Using AI to train security teams, AI's potential role in Zero Trust frameworks, and the idea that AI could democratize security by making enterprise-grade capabilities more affordable.

Of course, one of the best things about attending such online education summits and events is the ability to ask questions of the presenters, a rare opportunity for expert, real-world, one-on-one advice (not to mention the chance to win a great prize, in this case a Nintendo Switch provided by sponsor Snyk, the developer security company).


About the Author

David Ramel is an editor and writer at Converge 360.