
Google's Vertex AI 'Memory Bank' and the Industry Shift to Persistent Context

Users of certain advanced AI systems may have noticed that their favorite model remembers their preferences: tone, formatting, prior topics of interest, how they like responses structured and so on. These capabilities vary from one model family to another, however, and even within a single model, which sometimes seems to remember preferences and sometimes seems to forget.

Because memory saves users from having to re-enter context or other information in every session, AI companies are actively working to improve it.

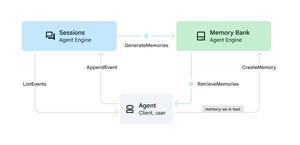

On July 8, 2025, Google announced the public preview of Memory Bank, a new capability within the cloud-based Vertex AI Agent Engine designed to solve one of the core limitations of conversational agents: lack of persistent memory. As Google explains, "without memory, agents treat each interaction as the first, asking repetitive questions and failing to recall user preferences."

Memory Bank (source: Google).

Memory Bank allows agents to remember and apply contextual information across sessions, offering:

- Personalized interactions: Agents retain preferences and past choices to customize responses.

- Session continuity: Conversations resume smoothly even after days or weeks.

- Contextual awareness: Memory retrieval ensures responses are grounded in prior interactions.

- Improved user experience: Eliminates the need for users to repeat themselves.

Developers can integrate Memory Bank using Google's Agent Development Kit (ADK) or through external frameworks such as LangGraph and CrewAI. Memory Bank processes conversations asynchronously, using Gemini models to extract key facts like "I prefer aisle seats" or "my preferred temperature is 71 degrees." These facts are stored, updated, and retrieved as needed, and contradictions between them are resolved over time.
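To make the extract, store, and update cycle more concrete, here is a minimal Python sketch of the general pattern. It is not the Memory Bank API: the `MemoryStore` class, the topic keys, and the rule that a newer fact on the same topic replaces an older one are assumptions for illustration. The Gemini-based extraction step is stubbed out with simple keyword matching, and the asynchronous processing is not modeled.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class Memory:
    """One remembered fact about a user (hypothetical schema)."""
    topic: str
    fact: str
    updated_at: datetime


@dataclass
class MemoryStore:
    """Toy per-user memory store; NOT the Vertex AI Memory Bank API."""
    # user_id -> topic -> Memory
    _data: dict[str, dict[str, Memory]] = field(default_factory=dict)

    def upsert(self, user_id: str, topic: str, fact: str) -> None:
        # Assumed contradiction rule: a newer fact on the same topic
        # overwrites the older one instead of being stored alongside it.
        self._data.setdefault(user_id, {})[topic] = Memory(
            topic, fact, datetime.now(timezone.utc)
        )

    def retrieve(self, user_id: str) -> list[str]:
        # Return every current fact for the user, e.g. to prepend to a prompt.
        return [m.fact for m in self._data.get(user_id, {}).values()]


def extract_facts(turn: str) -> list[tuple[str, str]]:
    """Hypothetical stand-in for LLM-based fact extraction.

    Matches a few hard-coded keywords and returns (topic, fact) pairs.
    """
    facts = []
    lowered = turn.lower()
    if "aisle seat" in lowered or "window seat" in lowered:
        facts.append(("seat_preference", turn))
    if "temperature" in lowered:
        facts.append(("temperature_preference", turn))
    return facts


if __name__ == "__main__":
    store = MemoryStore()
    turns = [
        "I prefer aisle seats",
        "My preferred temperature is 71 degrees",
        "Actually, I prefer window seats now",  # contradicts the first fact
    ]
    for turn in turns:
        for topic, fact in extract_facts(turn):
            store.upsert("user-123", topic, fact)
    print(store.retrieve("user-123"))
```

Keying memories by topic is what lets the later "window seats" statement replace the earlier "aisle seats" one rather than leaving the agent with two conflicting preferences.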

As detailed in the announcement, Memory Bank can power agents like beauty advisors that adapt to a user's evolving skincare routine -- illustrating its ability to maintain relevance in long-term engagements.

AI Memory in the Broader Industry Landscape

Google isn't alone in tackling the problem of long-term memory in AI systems. Other leading AI companies are introducing their own memory architectures to enhance agent continuity and personalization. Here's a look.

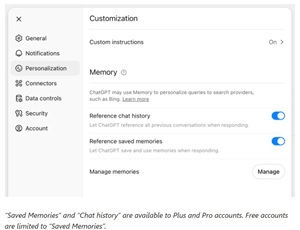

OpenAI: ChatGPT's Built-In Memory: OpenAI has introduced memory to ChatGPT. "ChatGPT can now remember helpful information between conversations, making its responses more relevant and personalized," the AI pioneer announced when it introduced the feature last year. Users can manage what the system remembers via a dedicated memory UI and toggle memory on or off as needed. ChatGPT might remember facts such as a user's name, preferred writing style, or recurring topics of interest.

ChatGPT Memory (source: OpenAI).

The company rolled out memory across ChatGPT's GPT-4 tier and just last month announced several improvements across plans.

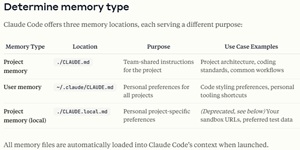

Anthropic: Claude's Memory & Workspace Context: Anthropic's Claude models support memory-like behavior through their extended context windows and the ability for developers to implement persistent "memory files." As Anthropic stated recently in its announcement of Claude 4 (Opus and Sonnet): "Both models... when given access to local files by developers -- demonstrate significantly improved memory capabilities, extracting and saving key facts to maintain continuity and build tacit knowledge over time."

Claude Memory Types (source: Anthropic).

Anthropic highlights this capability particularly for Claude Opus 4, which it describes as "skilled at creating and maintaining 'memory files' to store key information. This unlocks better long-term task awareness, coherence, and performance on agent tasks -- like Opus 4 creating a 'Navigation Guide' while playing Pokémon."

Anthropic's approach blends ephemeral context windows with durable memory for long-term interactions, and the company also offers "memory files" (e.g., CLAUDE.md) within Claude Code to help developers manage persistent project context. Details on managing Claude's memory, particularly for agentic workflows, are available in Anthropic's Claude Code documentation.
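The memory-file pattern itself is simple enough to sketch without any Anthropic API: an agent appends key facts to a Markdown file on disk, then reads that file back into its context at the start of the next session. In the minimal Python sketch below, the file name `agent_memory.md`, the one-bullet-per-fact format, and the helper names `remember`, `load_memory`, and `build_context` are all hypothetical; this is not how Claude Code reads or writes CLAUDE.md.

```python
import tempfile
from pathlib import Path


def load_memory(memory_file: Path) -> list[str]:
    """Read back remembered facts (empty list if the file doesn't exist yet)."""
    if not memory_file.exists():
        return []
    lines = memory_file.read_text(encoding="utf-8").splitlines()
    # Each fact is stored as a Markdown bullet: "- <fact>".
    return [line[2:] for line in lines if line.startswith("- ")]


def remember(memory_file: Path, fact: str) -> None:
    """Append one fact as a Markdown bullet, skipping exact duplicates."""
    if fact not in load_memory(memory_file):
        with memory_file.open("a", encoding="utf-8") as f:
            f.write(f"- {fact}\n")


def build_context(memory_file: Path, user_prompt: str) -> str:
    """Prepend remembered facts to a new session's prompt, if there are any."""
    facts = load_memory(memory_file)
    if not facts:
        return user_prompt
    header = "Known facts from earlier sessions:\n" + "\n".join(f"- {x}" for x in facts)
    return f"{header}\n\n{user_prompt}"


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        mem = Path(tmp) / "agent_memory.md"  # hypothetical file name
        # Session 1: the agent saves what it learned about the project.
        remember(mem, "Tests run with `pytest -q`")
        remember(mem, "Use 4-space indentation")
        remember(mem, "Use 4-space indentation")  # duplicate is ignored
        # Session 2: a fresh process reloads the file into its context.
        print(build_context(mem, "Add a unit test for the parser."))
```

Because the memory lives in a plain text file rather than in the model, it survives across sessions and can be read, edited, or version-controlled by the developer.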

Microsoft: Copilot's Personalized Memory: Microsoft's Copilot is also joining the movement toward AI memory. In a recent announcement, Microsoft introduced a new feature simply called Memory, designed to help Copilot remember user-specific information across sessions to deliver more tailored and efficient responses. According to the official announcement, "Copilot, with its Memory feature, is here to remember the things that matter to you and act as a helpful companion."

With memory turned on, Copilot can recall interactions and offer personalized suggestions -- like book recommendations, travel tips, or fitness encouragement -- based on your past conversations. Microsoft emphasizes user control: memory can be toggled on or off, and users can delete individual facts or reset memory completely via privacy settings.

The feature is enabled when users activate Personalization in their Microsoft account. According to Microsoft, "Copilot will remember your past conversations and preferences, so you don't need to worry about repeating yourself." A visual representation of this memory, called "Narrative," is also available in the Copilot mobile app, with web support coming soon.

This approach reflects Microsoft's larger vision for an AI that doesn't just respond -- it evolves with the user over time, serving as what the company calls "your trusted confidant."

CrewAI: Structured Memory for Multi-Agent Systems: CrewAI, an open-source framework for collaborative agents, is a smaller AI player than the others, but it includes a memory system designed to enhance agent capabilities, with three distinct approaches that serve different use cases:

- Basic Memory System - Built-in short-term, long-term, and entity memory

- User Memory - User-specific memory with Mem0 integration (legacy approach)

- External Memory - Standalone external memory providers (new approach)

This includes memory for things like past tasks, referenced URLs, project results, or even external file lookups.
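To show how the three built-in scopes (short-term, long-term, and entity) differ, here is a framework-agnostic Python sketch. It does not use CrewAI's API: the `AgentMemory` class, the window size, and the naive keyword-matching `recall` are hypothetical simplifications, and production systems typically rely on embedding search and persistent storage instead.

```python
from collections import deque


class AgentMemory:
    """Toy illustration of short-term, long-term, and entity memory scopes.

    Hypothetical class; NOT CrewAI's API.
    """

    def __init__(self, short_term_window: int = 3) -> None:
        # Short-term: only the most recent interactions in the current run.
        self.short_term: deque[str] = deque(maxlen=short_term_window)
        # Long-term: task outcomes meant to outlive a run
        # (kept in a list here; a real system would persist them).
        self.long_term: list[str] = []
        # Entity: facts grouped by the person, place, or thing they describe.
        self.entities: dict[str, list[str]] = {}

    def observe(self, message: str) -> None:
        self.short_term.append(message)  # oldest entry drops out when full

    def record_result(self, task: str, result: str) -> None:
        self.long_term.append(f"{task}: {result}")

    def note_entity(self, name: str, fact: str) -> None:
        self.entities.setdefault(name, []).append(fact)

    def recall(self, query: str) -> list[str]:
        """Gather context from all three scopes via case-insensitive substring match."""
        q = query.lower()
        hits = [m for m in self.short_term if q in m.lower()]
        hits += [r for r in self.long_term if q in r.lower()]
        for name, facts in self.entities.items():
            if q in name.lower():
                hits += [f"{name}: {f}" for f in facts]
        return hits


if __name__ == "__main__":
    mem = AgentMemory(short_term_window=2)
    for msg in ["Research the Q3 report", "Summarize Acme's revenue", "Draft the email"]:
        mem.observe(msg)  # with a 2-item window, the first message is evicted
    mem.record_result("Q3 research", "summary of Acme filings saved")
    mem.note_entity("Acme", "Prefers concise summaries")
    print(mem.recall("acme"))
```

Separating the scopes lets an agent forget routine conversational detail quickly while keeping task results and facts about recurring entities available for later runs.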

Conclusion: The Rise of Stateful AI

Google's Memory Bank announcement puts a spotlight on a fundamental shift in the architecture of AI systems: from stateless query-answering machines to persistent, personalized assistants. As the examples from OpenAI, Anthropic, Microsoft, and CrewAI show, memory is no longer an experimental feature -- it's a core design principle for next-generation agents.

Whether integrated into enterprise copilots, open-source frameworks, or large-scale public assistants, memory is becoming a necessary capability for AI systems to deliver on the promise of continuity, efficiency, and human-level personalization.

About the Author

David Ramel is an editor and writer at Converge 360.