Defusing the Telemetry Timebomb in Your Hybrid Environment

Enterprises are facing what one expert called a telemetry "timebomb," with explosive data growth threatening to overwhelm budgets, tools, and staff. That was the message from Nick Cavalancia, CEO of Conversational Geek, in his presentation at the recent Virtualization & Cloud Review online summit, "Defusing the Telemetry Timebomb in Your Hybrid Environment," sponsored by Cribl and now available for on-demand replay.

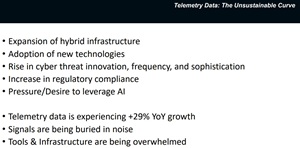

The Unsustainable Curve

Cavalancia, a four-time Microsoft Cloud & Datacenter MVP, framed telemetry growth as an "unsustainable curve," pointing to year-over-year increases nearing 30% and noting that the rate may climb higher. "With 29% growth, the 'bomb' is inevitable," he said. He added that even if the precise figure varies, the direction is clear: "It's growing. It's growing. 20, 25, 29, 30, 34, something -- a material percentage -- every single year."

"It's not just finding a needle in a haystack. It's trying to find [a suspicious signal] buried in millions more pieces of hay."

Nick Cavalancia, CEO, Conversational Geek
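
To put the roughly 29% annual growth figure in perspective, the compounding arithmetic can be sketched as follows. This is only an illustration, assuming the rate stays constant (the talk itself notes the real figure varies from year to year); the function names are hypothetical.

```python
import math


def doubling_time_years(annual_growth: float) -> float:
    """Years for volume to double at a constant compound annual growth rate."""
    return math.log(2) / math.log(1 + annual_growth)


def growth_multiple(annual_growth: float, years: int) -> float:
    """Volume multiple after `years` of compounding at `annual_growth`."""
    return (1 + annual_growth) ** years


if __name__ == "__main__":
    rate = 0.29  # assumption: the 29% figure quoted in the talk, held constant
    print(f"Doubling time: {doubling_time_years(rate):.2f} years")   # 2.72 years
    print(f"Volume after 5 years: {growth_multiple(rate, 5):.2f}x")  # 3.57x
```

Under that assumption, telemetry volume doubles in under three years and more than triples in five, which is why a flat ingest budget cannot keep pace.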

He explained that the drivers are piling up: hybrid infrastructure expansion, adoption of newer technologies like serverless and containerization, increasing cyber threat sophistication, regulatory requirements such as GDPR and CCPA, and the push to feed AI models with richer context. "In order to leverage AI, AI is gonna need more and more context," he said, noting that organizations are forced to gather far more telemetry data than before.

Figure: Telemetry Growth

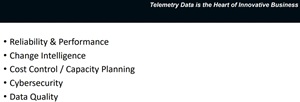

Business Demands Driving Telemetry

Telemetry, he argued, has become central to enterprise outcomes across reliability, performance, change intelligence, cost control, security, and data quality. "The telemetry data really sits at the core of any business," he said. On the security side, he cited the need for fast incident triage and reduced mean time to remediation. On the business side, telemetry supports service-level verification, regression detection, and customer experience improvements. "There's a laundry list of things in there," he noted, underscoring the breadth of telemetry's reach.

Figure: Telemetry Outcomes

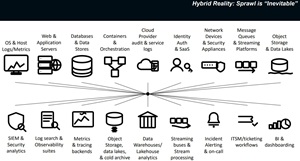

Hybrid Sprawl Is Inevitable

As hybrid IT spreads, Cavalancia warned that telemetry sprawl is unavoidable without deliberate management. He described it using an analogy: "Sprawl is going to exist naturally, just because of the entropy that exists with having just too much data and no management. It is like the idea of entropy just taking over, because no one's doing anything except for taking a deck of cards, throwing them in the air and letting them fall as they land." Without intervention, he said, the cards will only spread farther with each toss -- just like unmanaged data sources across modern IT estates.

He highlighted categories of telemetry inputs that every enterprise is collecting: operating system logs, SaaS authentication, databases, cloud provider audit trails, container and orchestration platforms, network appliances, and more. Each destination system -- from SIEM to observability suites -- adds to the complexity. "This is not an exhaustive list," he said; it was simply meant to illustrate the inevitability of telemetry sprawl.

Figure: Telemetry Sprawl

The Telemetry Timebomb in Practice

Many organizations are already seeing the impact. Cavalancia cited "ingest bills spiking," overloaded indexers that lead to query timeouts, and data that is duplicated across multiple platforms. "If you're over-ingesting and under-curating, the spend is just -- it goes on and on," he warned. Inconsistent normalization across datasets further compounds the problem, raising risk and slowing analysis. "You don't even know if the data is normalized across those two datasets, let alone everything you have," he said.

The costs aren't just financial. Cavalancia noted that response times suffer as well. "Mean time to remediation is going to keep creeping up. It takes a lot longer to remediate or respond to an issue here," he explained.

Figure: Telemetry Timebombs

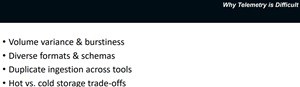

Why Telemetry Is Difficult

Cavalancia identified specific challenges making telemetry harder to manage: volume variance and burstiness that drive overprovisioning, diverse formats and schemas that complicate normalization, duplicate ingestion across tools like SIEM and SOC analytics, and tough trade-offs between hot and cold storage. "The applications that are doing any of the ingestion of that data or the usage of that data, they have to work even harder, which means more time spent, more cost spent just to try and get intelligence out of this information," he said.
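
The link between burstiness and overprovisioning can be illustrated with a peak-to-mean ratio. The sketch below is a minimal example with made-up hourly ingest volumes; the numbers and the function name are hypothetical and not taken from the presentation.

```python
from statistics import mean


def peak_to_mean_ratio(hourly_gb: list[float]) -> float:
    """Ratio of the busiest hour's volume to the average hourly volume."""
    return max(hourly_gb) / mean(hourly_gb)


# Hypothetical hourly ingest volumes in GB, with one burst (for example, an incident).
hourly_gb = [10, 10, 10, 10, 10, 10, 10, 50]

ratio = peak_to_mean_ratio(hourly_gb)
print(f"Peak-to-mean ratio: {ratio:.2f}")
```

A pipeline sized for the busiest hour in this example carries more than three times the capacity it needs on average, which is the overprovisioning cost the talk attributes to bursty sources.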

Figure: Telemetry Is Difficult

Steps Toward Defusing the Bomb

Despite the inevitability of telemetry growth, Cavalancia outlined concrete steps to mitigate the risks. He urged attendees to think of telemetry like a supply chain: "You're now responsible for a complex supply chain that's going to get your organization what you need at the end, which is the query," he said. Governance and controls must apply at each stage -- collection, processing, storing, and querying. Among his key prescriptions:

- Normalize and enrich once before fan-out, so downstream tools are smarter.

- Route data by use case rather than habit, aligning destinations with outcomes.

- Avoid indexing everything, which drives up costs and slows performance.

- Minimize data by filtering, deduplication, and suppression of low-value fields.

- Redact personally identifiable information upstream to reduce compliance risk.

- Adopt tiered retention policies using object storage lifecycle management.

- Apply partitioning and compression to improve performance and control costs.

- Implement "filter early, govern always," with audit trails to operationalize oversight.
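
Several of the prescriptions above (normalize once, minimize, redact upstream, deduplicate, route by use case) can be sketched as a small processing stage. This is a simplified illustration rather than any vendor's implementation: the field names, the routing table, the destination names, and the e-mail-only redaction pattern are all assumptions made for the example.

```python
import hashlib
import json
import re

# Assumption: a simple e-mail pattern stands in for real PII detection.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")

# Hypothetical low-value fields to suppress before fan-out.
LOW_VALUE_FIELDS = {"debug_blob", "user_agent_raw"}

# Hypothetical routing table: use case -> destination name.
ROUTES = {"security": "siem", "performance": "observability"}
DEFAULT_ROUTE = "object_storage"  # cheaper archive tier for everything else

SOURCE_KEYS = {"ts", "time", "src", "category", "msg"}


def normalize(event: dict) -> dict:
    """Map source-specific keys onto one shared schema (done once, upstream)."""
    extras = {k: v for k, v in event.items() if k not in SOURCE_KEYS}
    return {
        "timestamp": event.get("ts") or event.get("time"),
        "source": event.get("src", "unknown"),
        "category": event.get("category", "other"),
        "message": event.get("msg", ""),
        **extras,
    }


def minimize(event: dict) -> dict:
    """Drop fields that nobody acts on."""
    return {k: v for k, v in event.items() if k not in LOW_VALUE_FIELDS}


def redact(event: dict) -> dict:
    """Mask e-mail addresses in string values before data is fanned out."""
    return {
        k: EMAIL_RE.sub("[REDACTED]", v) if isinstance(v, str) else v
        for k, v in event.items()
    }


def fingerprint(event: dict) -> str:
    """Stable hash of the event, used to detect exact duplicates."""
    payload = json.dumps(event, sort_keys=True, default=str)
    return hashlib.sha256(payload.encode()).hexdigest()


def process(events: list[dict]) -> dict[str, list[dict]]:
    """Normalize, minimize, redact, deduplicate, then route by use case."""
    seen: set[str] = set()
    routed: dict[str, list[dict]] = {}
    for raw in events:
        event = redact(minimize(normalize(raw)))
        key = fingerprint(event)
        if key in seen:  # exact duplicate: skip rather than ingest it twice
            continue
        seen.add(key)
        destination = ROUTES.get(event["category"], DEFAULT_ROUTE)
        routed.setdefault(destination, []).append(event)
    return routed


# Hypothetical sample events: two identical VPN records and one web record.
events = [
    {"ts": "2025-01-01T00:00:00Z", "src": "vpn", "category": "security",
     "msg": "login failed for alice@example.com", "debug_blob": "..."},
    {"ts": "2025-01-01T00:00:00Z", "src": "vpn", "category": "security",
     "msg": "login failed for alice@example.com", "debug_blob": "..."},
    {"time": "2025-01-01T00:00:05Z", "src": "web", "category": "performance",
     "msg": "p95 latency 420ms"},
]

routed = process(events)
for destination, batch in routed.items():
    print(destination, len(batch))
```

In this sample the duplicate VPN record is dropped, the e-mail address is masked, the `debug_blob` field is suppressed, and each remaining event reaches exactly one destination. Because the work happens once before fan-out, every downstream tool receives the same cleaned schema.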

"A report is only as good as the action you can take because of it," Cavalancia said. "If there's no value in a field, you can't take an action because of the value in a field or the data in a field. Skip the field. You don't need it"

Bottom Line

Cavalancia closed by reminding the audience that while the growth of telemetry is unavoidable, it doesn't have to become an uncontrolled explosion.

Figure: Defusing Telemetry Timebombs

"Recognize the complexity of the problem," he said. "Proactively managing both how the data is ingested and how it's utilized is the only way to defuse the telemetry timebomb."

And Much More

Those are all concise summaries, of course, and you need to watch the on-demand replay to get the individual items fleshed out in detail -- along with many other actionable tips -- but this gives you the overall idea of Cavalancia's presentation.

For more detail, watch the on-demand replay of the summit here.

And, although replays are fine -- this was just today, after all, so timeliness isn't an issue -- there are benefits to attending such summits and webcasts from Virtualization & Cloud Review and sister pubs live. Paramount among these is the ability to ask questions of the presenters, a rare chance to get one-on-one advice from bona fide subject matter experts (not to mention the chance to win free prizes, in this case noise-canceling Bose headphones from sponsor Cribl, which also presented at the summit).
