In-Depth

Microsoft's Scale-Out File Server Overcomes SAN Cloud Barriers

There are no SANs in the cloud because the venerable storage technology just doesn't scale to that level. But there are ways around it, and Microsoft shops should start with the company's Scale-Out File Server.

There's a revolution going on in storage. Once the domain of boring but dependable storage-area network (SAN) arrays, the field now offers a plethora of choices, including all-flash storage (built on a variety of underlying technologies) and Server Message Block (SMB)-based storage. Three main underlying technology shifts are altering the status quo:

Flash storage, either as the only storage technology for very high performance workloads, or in tandem with hard drives for tiered storage solutions.

Cloud storage, in many cases implemented as a third tier for long-term archiving of cold data.

Software-defined storage (SDS), closely related to storage virtualization. This technology has all the buzz right now. Microsoft entered the arena with the release of Windows Server 2012, and furthered its position with the release of Windows Server 2012 R2, offering a complete SDS stack.

The problem with SAN technology is that it wasn't really designed to solve today's problems: It's complex and requires specialized skills, it's expensive, and it doesn't scale in today's cloud world. Looking at Amazon Web Services Inc. (AWS), the Google Inc. cloud platform and Microsoft Azure, none of them rely on SAN storage. There's simply no way that the companies could scale their public cloud offerings if they had to wire a SAN switch to two Host Bus Adapters (HBAs) in each server. That's not to say SANs are going away; they'll change and adapt like any technology, but they're no longer the default answer to every enterprise storage question.

Microsoft doesn't just have one iron in this fire; it offers StorSimple, a SAN array with hard-disk drives (HDDs) and solid-state drives (SSDs), as well as Azure storage for long-term storage. Then there's the recent acquisition of InMage, for replication of Linux and Windows virtual machines (VMs) from VMware to Azure.

A recent Microsoft solution for the SAN problem is Scale-Out File Server (SOFS) in Windows Server 2012 R2, which provides clustered, continuously available storage.

SOFS to the Rescue

When Microsoft built its SAN replacement technology in the form of SOFS, it replaced the controllers with Windows Server 2012 R2 file servers, and the connectivity with either ordinary 1 Gbps or 10 Gbps Ethernet NICs or faster SMB Direct NICs. The drives are housed in simpler enclosures with no RAID built in; just power supplies and external SAS connectivity. The SOFS role manages the permissions, the data protection and, if you're using SSDs, the movement of data between tiers (the tiering).

So Microsoft is matching SAN capabilities, but doing so on commodity hardware, running on Windows Server. Table 1 shows a feature comparison.

Table 1. Windows Scale-Out File Server and Fibre Channel/iSCSI SAN Array Feature Comparisons

| FC/iSCSI SAN array | Windows SOFS Cluster with Storage Spaces |
| --- | --- |
| Storage tiering | Storage tiering (new in 2012 R2) |
| Data deduplication | Data deduplication (improved in 2012 R2) |
| RAID resiliency | Two/three-way mirroring, single/dual parity |
| Disk pooling | Disk pooling (Storage Spaces) |
| High availability | Continuous availability |
| Persistent write-back cache | Persistent write-back cache (new in 2012 R2) |
| Copy offload | SMB copy offload |
| Snapshots | Snapshots |
| FC, FCoE, iSCSI for fast connectivity | 10 Gbps, SMB Multichannel, SMB Direct (56 Gbps +) |

Software Building Blocks

There are numerous abilities that make SOFS such a killer technology. The first is the humble SMB protocol. This has been in Windows Server for many, many years and is how most of us access file shares on servers to work on documents.

In Windows Server 2012, Microsoft set out to produce a new version of the protocol (SMB 3.0), capable of storing not just Word, Excel and CAD documents, but also the huge files associated with databases and VMs.

There also needed to be continuous availability built in -- not just high availability (which has some amount of downtime). If Word, for instance, temporarily loses connection to a file on a share, it just keeps working and recovers, as long as the outage isn't too extensive. But for a database or virtual hard drive (VHD), such a disconnection would almost certainly lead to data corruption. SMB 3.0 (and 3.02 in Windows Server 2012 R2) comes with transparent failover, which provides extremely fast shifting of data access from one node to another, in case of a node failure (provided you have at least two nodes in your cluster, of course).

There's also SMB Multichannel, in which SMB automatically takes into account all paths between an application server and the SOFS node, and uses them simultaneously. If one NIC fails, the traffic simply continues over the remaining links. There's no need to configure Multipath I/O (MPIO), because multichannel is automatic. In fact, it's so automatic that you might need to exclude network interfaces where you don't want high loads of storage traffic saturating the link. SMB Scale-Out provides simultaneous access to data files through all nodes in the SOFS cluster, resulting in better load balancing and network bandwidth utilization.

SMB Direct builds on newer Remote Direct Memory Access (RDMA) network cards; these were originally developed for the network needs of high-performance computing clusters used in simulations and manipulation of large datasets. These aren't required for SOFS; ordinary 10 Gbps or 1 Gbps NICs will work just fine. But for exceptional performance, low latency and zero host CPU impact, they're the answer.

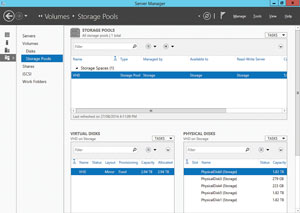

The other fundamental technology in this stack is Storage Spaces. This is a built-in storage virtualization technology that allows you to pool disks (HDD and SSD) together and then carve out virtual disks from the pools with specific capabilities. An example is shown in Figure 1.

Figure 1. A tiered Storage Space in Windows Server 2012 R2.

One important characteristic of a virtual disk on top of a Storage Space is the data protection scheme: Two- and three-way mirroring, as well as single- and dual-parity, are supported. Dual parity tolerates the failure of two disks, whereas three-way mirroring (and dual parity) can tolerate the failure of a whole enclosure, because the data is mirrored to another enclosure.
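To make the capacity trade-off between these schemes concrete, here is a rough Python sketch. It is an idealized model rather than how Storage Spaces actually allocates space: it ignores metadata and reserve capacity, the function name `usable_capacity_tb` is invented for illustration, and the single-parity formula assumes one parity strip per stripe across `columns` disks. Dual parity is left out because its layout is more involved.

```python
def usable_capacity_tb(raw_tb: float, scheme: str, columns: int = 3) -> float:
    """Estimate usable capacity for a protection scheme (idealized model).

    Assumptions (not from the article): metadata and reserve space are
    ignored, and single parity spends one strip per stripe on parity.
    """
    if raw_tb < 0:
        raise ValueError("raw_tb must be non-negative")
    if scheme == "two-way-mirror":
        # Every block is stored twice.
        return raw_tb / 2
    if scheme == "three-way-mirror":
        # Every block is stored three times.
        return raw_tb / 3
    if scheme == "single-parity":
        if columns < 3:
            raise ValueError("single parity needs at least three columns")
        # One strip out of every `columns` strips holds parity.
        return raw_tb * (columns - 1) / columns
    raise ValueError(f"unknown scheme: {scheme}")


if __name__ == "__main__":
    for name in ("two-way-mirror", "three-way-mirror", "single-parity"):
        print(name, usable_capacity_tb(24, name))
```

The point of the comparison is that mirroring buys resiliency and write performance at a steep capacity cost, while parity is more space-efficient.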

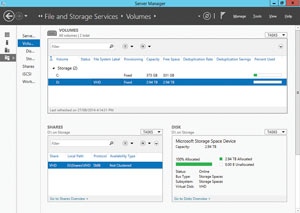

This isn't the software RAID of yesteryear, which mirrored one drive to another; Storage Spaces divides data into strips, which are spread across several disks so that a single disk failure doesn't lead to data loss. This parallelization of the load is used all through Storage Spaces, using the combined throughput of many drives to provide exceptional performance. See Figure 2 for an example.

Figure 2. A mirrored virtual disk on top of two solid-state drives and three hard-disk drives.

Also new in Windows Server 2012 R2 is the automatic rebalancing of nodes and volumes. If a cluster has three nodes and six cluster shared volumes (CSVs), each node will "own" two. If one node is restarted or crashes, its load will be divided among the remaining nodes; when another node is added, the load is transparently rebalanced again.
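The ownership arithmetic in that example can be sketched in a few lines of Python. This is a toy model, not the cluster's actual algorithm: the function name `assign_csv_owners` and the round-robin policy are assumptions made for illustration, and a real cluster would move only the volumes it has to rather than recompute every assignment.

```python
def assign_csv_owners(volumes, nodes):
    """Spread CSV ownership evenly across the available nodes (round-robin).

    Hypothetical helper: illustrates the even split described in the text,
    not the placement logic Windows Server really uses.
    """
    if not nodes:
        raise ValueError("at least one node is required")
    owners = {node: [] for node in nodes}
    for index, volume in enumerate(volumes):
        owners[nodes[index % len(nodes)]].append(volume)
    return owners


if __name__ == "__main__":
    csvs = [f"CSV{n}" for n in range(1, 7)]
    # Three healthy nodes: each owns two volumes.
    print(assign_csv_owners(csvs, ["node1", "node2", "node3"]))
    # One node down: the six volumes are split between the two survivors.
    print(assign_csv_owners(csvs, ["node1", "node2"]))
```

Running the same function with a single volume shows why one CSV is a poor choice: only one node ever owns it.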

Windows Server 2012 introduced data deduplication, which analyzes data at rest, identifies blocks that hold the same information and replaces all but one with a pointer to that copy of the data. Depending on the data type, you can reclaim 40 percent to 50 percent of information worker (IW) documents or up to 90 percent of VHD library disk space.

More important, Windows Server 2012 R2 added the ability to do the deduplication on running Hyper-V VM VHD and VHDX files, but only for virtual desktop infrastructure (VDI) deployments. This curious limitation partly stems from the fact that Microsoft would like to see lots of field testing before allowing ordinary server virtualization to use it. And, partly, it's because deduplication works best in high-read, low-write scenarios. If a lot of data is changing frequently, deduplication won't be very efficient. That said, deduplication works just fine for lab and test environments for any VM workload, not just VDI.
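The core idea of deduplication, keeping one copy of each distinct block and pointing every duplicate at it, can be illustrated with a minimal Python sketch. It is deliberately simplified and is not Microsoft's implementation: it assumes fixed-size chunks and SHA-256 digests as the "pointers," and the names `deduplicate` and `rebuild` are invented for this example.

```python
import hashlib


def deduplicate(data: bytes, chunk_size: int = 4096):
    """Split data into fixed-size chunks and store each distinct chunk once.

    Returns (store, recipe): `store` maps a digest to the chunk bytes, and
    `recipe` is the ordered list of digests needed to rebuild the data.
    Fixed-size chunking is an assumption made to keep the sketch short.
    """
    store = {}
    recipe = []
    for offset in range(0, len(data), chunk_size):
        chunk = data[offset:offset + chunk_size]
        digest = hashlib.sha256(chunk).hexdigest()
        store.setdefault(digest, chunk)  # keep only the first copy
        recipe.append(digest)            # duplicates become pointers
    return store, recipe


def rebuild(store, recipe) -> bytes:
    """Reassemble the original data by following the pointers in order."""
    return b"".join(store[digest] for digest in recipe)


if __name__ == "__main__":
    payload = b"A" * 4096 * 3 + b"B" * 4096
    store, recipe = deduplicate(payload)
    stored = sum(len(chunk) for chunk in store.values())
    print(f"{len(payload)} bytes reduced to {stored} bytes of unique chunks")
    assert rebuild(store, recipe) == payload
```

The sketch also hints at why write-heavy data deduplicates poorly: every change produces new chunks that must be hashed and stored again.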

Tiering Up

Windows Server 2012 R2 added a missing feature in the quest to match SAN technology feature-for-feature: tiering. SSD technology provides fantastic read performance and great write performance, but is expensive per gigabyte compared to HDDs. The solution is to fill your bays with mostly large, slow HDDs and add a few SSDs.

Tiering will automatically "heat map" storage blocks, identifying which blocks are hit the most and moving those to the SSD tier, while moving colder data to the HDD tier. In this way you can combine the best of both technologies for overall performance. You can also pin particular files (think the master disk in a VDI implementation) to a tier manually. There's a minimum number of SSDs recommended, depending on your data protection strategy and number of enclosures.
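As an illustration of the heat-map idea, the Python sketch below counts accesses per block and places the hottest blocks on the SSD tier, with pinned blocks placed first. It is a conceptual model only: the function `plan_tiers`, the access-log format and the slot-based capacity limit are assumptions, not details of how Storage Spaces tracks or moves data.

```python
from collections import Counter


def plan_tiers(access_log, ssd_slots, pinned=()):
    """Decide which blocks belong on the SSD tier and which on the HDD tier.

    access_log: iterable of block IDs, one entry per access (hypothetical).
    ssd_slots:  how many blocks fit on the SSD tier.
    pinned:     blocks forced onto the SSD tier regardless of heat.
    """
    heat = Counter(access_log)
    # Pinned blocks take SSD slots first, in the order given.
    ssd = list(dict.fromkeys(pinned))[:ssd_slots]
    # Fill the remaining slots with the most frequently accessed blocks.
    for block, _count in heat.most_common():
        if len(ssd) >= ssd_slots:
            break
        if block not in ssd:
            ssd.append(block)
    ssd_tier = set(ssd)
    hdd_tier = {block for block in heat if block not in ssd_tier}
    return ssd_tier, hdd_tier


if __name__ == "__main__":
    log = ["a", "b", "a", "c", "a", "b", "d"]
    print(plan_tiers(log, ssd_slots=2))
    print(plan_tiers(log, ssd_slots=2, pinned=("d",)))
```

Pinning trades some automatic optimization for predictability, which is why it suits something like a VDI master disk that must always be fast.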

A related technology is write-back cache, which uses some of the SSD storage to absorb intermittent incoming writes to the virtual disk, so as not to interrupt the main workload's data flow.

Another useful feature in Windows Server 2012 is the improved CHKDSK command. Instead of volumes having to be offline for long periods of time (many hours in the case of large volumes), disk checking is now done while the volume is online, followed by a short (offline) phase where problems are repaired. SOFS, together with CSVs, improves on this even further: the repair phase is performed from one cluster node while the others continue accessing the data, resulting in zero-downtime CHKDSK.

Finally, CSV, the layer on top of NTFS that allows simultaneous read and write access from multiple nodes to data files, makes it possible to store the disks of many VMs on the same volume, while still allowing individual VMs to live migrate between different Hyper-V hosts.

Hardware Building Blocks

The other part of the overall story is the Serial-Attached SCSI (SAS) enclosures that house the drives. Unlike a SAN or other storage enclosures, these don't have RAID controllers or other complicated smarts; simply a set of SAS (6 Gbps or 12 Gbps) connections and 2.5-inch or 3.5-inch slots. Enclosures are sized from 24 to 84 bays; the full list of enclosures certified to work with Windows Server is available in the Windows Server Catalog.

SAS has experienced slow adoption due to a lack of options, as almost none of the major storage vendors have enclosures to offer (exceptions are Dell Inc. -- its PowerVaults were recently certified -- and Fujitsu). This is most likely because they don't want simpler, more cost-effective solutions encroaching on their high-cost, proprietary solutions. DataON Storage is one vendor with several different sizes and types available.

The connection between Windows Server and the box is enclosure awareness, which allows the OS to understand the state of fans and be able to blink drives for identification and similar tasks. Make sure the enclosure you're looking at supports SCSI Enclosure Services (SES), and that you install the latest hotfix (support.microsoft.com/kb/2913766) to improve Windows Server enclosure support.

If you want to use SMB Direct, you'll need RDMA-capable network cards. Options include iWARP (10 Gbps NICs) from Chelsio, and InfiniBand (56 Gbps and up) and RoCE from Mellanox.

Converged networking, which uses several high-speed NICs for several different types of traffic, is becoming more popular. New in Windows Server 2012 R2 is the ability to prioritize between different types of SMB 3 traffic on the same interface, with settings for Live Migration, Hyper-V and default (any other SMB traffic).

There are two big Microsoft workloads where SOFS shines: storing Hyper-V VHD and VHDX files for running VMs, and SQL Server.

The latter provides a lot of flexibility because you can simply set up a new database server, connect it to a share on an SOFS cluster, disconnect the old server and connect the database files to the new server. Imagine how much easier disaster recovery and upgrades can be.

If your company already has a SAN investment that you want to continue using, SOFS also has its place. If you need to add more compute capacity -- which could be a major investment, because you'd have to purchase new HBAs for each host and maybe even a new FC switch -- there's a better way. Put an SOFS cluster with two or three hosts in front of your SAN (so the only wiring needed is between the SOFS nodes and the SAN), then present file shares to your compute nodes. Note that the CSV rebalancing mentioned earlier is turned on by default for Storage Spaces, but turned off by default when the SOFS is backed by a SAN.

SOFS Non-Use Cases

While Storage Spaces and SOFS are definitely a good choice if you're looking to build out storage for SQL Server or Hyper-V, there are some situations where the solution doesn't fit (yet). One is Exchange storage. Exchange is built around the concept of several copies of your databases on different servers, each housed on large-capacity, 7200 RPM local disks in each server.

Another is storage for IW documents. SOFS is designed for very large files (VHD and SQL databases) that are open continuously and that can't tolerate interruption of the I/O flow. Word, Excel and other types of IW apps, on the other hand, have small files that are opened and closed regularly. If you do use SOFS for IW document storage, performance will be around 10 percent worse than if you stored them on traditional file clusters. A better option is to run virtual file servers, store their VHDX files on the SOFS and present file shares to the users; the VMs can be clustered using guest clustering for high availability.

SOFS is an enterprise technology, disaggregating the storage fabric from the compute fabric, allowing you to scale each independently for maximum flexibility. If you have a smaller environment, cluster-in-a-box (CiB) solutions should be more suitable. Alternatively, you could connect several Hyper-V hosts to shared SAS storage with CSV, but without the overhead of a separate SOFS cluster.

As with data deduplication, it's very likely that when Storage Spaces, CSV and SOFS have been in widespread use for a few years, additional workloads will be added to the list of recommended and supported ones.

Configuration Tips and Tricks

When planning your SOFS implementation, remember that the maximum number of nodes is eight, with two, three and four nodes being popular choices. You'll also want to create several CSVs; in a three-node cluster, three or six volumes are good choices. If you only have one CSV, all the SMB clients will be connected through one node, resulting in non-optimized performance.

The other issue that often gets lost in the planning phase is the concept of columns. The number of disks in your pool, along with the data protection scheme selected, affects the number of columns per virtual disk. If at a later stage you attempt to add more disks to your pool to expand capacity, you have to add enough disks to match the number of columns for that particular virtual disk.
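The column constraint comes down to simple rounding, sketched below in Python. The helper `disks_needed_to_extend` is hypothetical, and the `data_copies` multiplier (for mirrored virtual disks) is an assumption that goes beyond what the text above states; check your own virtual disk's column count and resiliency settings before buying drives.

```python
import math


def disks_needed_to_extend(columns: int, requested: int = 1,
                           data_copies: int = 1) -> int:
    """Round a planned disk purchase up to a whole multiple of the stripe width.

    columns:     number of columns of the virtual disk being extended.
    requested:   how many disks you were planning to add.
    data_copies: copies per stripe (assumed 2 or 3 for mirrors, 1 otherwise);
                 this multiplier is an illustrative assumption.
    """
    if columns < 1 or data_copies < 1 or requested < 1:
        raise ValueError("columns, data_copies and requested must be >= 1")
    stripe_width = columns * data_copies
    return math.ceil(requested / stripe_width) * stripe_width


if __name__ == "__main__":
    # A four-column virtual disk: adding "just one disk" really means four.
    print(disks_needed_to_extend(columns=4, requested=1))
    # A two-column, two-copy mirror: five disks rounds up to eight.
    print(disks_needed_to_extend(columns=2, requested=5, data_copies=2))
```

Working this out during planning avoids discovering later that a small capacity bump requires a larger batch of disks than expected.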

If you're looking to build a test lab or proof-of-concept with SOFS and Storage Spaces, make sure you select an enclosure from the hardware compatibility list (HCL), along with certified SAS hard drives and SAS SSDs (if you're going to test tiering). Make sure to update your NIC drivers, as well as the firmware on all networking components, as this will fix many issues. Also, make sure Receive Side Scaling is available and turned on in your NICs.

If two physical servers and a storage enclosure are outside your budget, consider a setup with virtualized nodes and disks on Azure. If you have a Hyper-V host, you can instead virtualize the whole setup and test everything on a single machine. Neither option is supported in production.

Managing Storage

SOFS and Storage Spaces are designed to lower the capex through low-cost enclosures, industry-standard SAS connectivity and cluster nodes running on commodity servers. But the other side of the equation is opex, and here Microsoft's answer is to have one interface to manage all storage. System Center Virtual Machine Manager 2012 R2 can provision bare metal machines with an OS, then configure Storage Spaces and SOFS automatically.

The same interface can be used to manage the storage and the SMB file shares that will house VMs. Virtual Machine Manager can also manage SAN arrays (with the right plug-in), and automatically provision storage and unmask LUNs to virtualization hosts, through Storage Management API.

There's no doubt that if you're a Microsoft shop using SQL Server and/or Hyper-V, you should take a look at SOFS and Storage Spaces. The combination is easier to manage and definitely cheaper than traditional SANs for capacity, while at the same time providing equal or better performance.