In-Depth

Hyper-V in the Real World

Microsoft's virtualization platform has matured into a solid, dependable workhorse. Here's a primer for getting the most out of it.

There's a gradual shift in the business world away from VMware Inc., the virtualization market leader, partly because Microsoft Hyper-V is more cost effective in most scenarios, and partly because of the Microsoft Azure cloud platform. Enterprises looking for hybrid cloud functionality find Azure a more logical place to extend their IT infrastructure into than VMware, with its limited public cloud footprint and functionality.

In this article I'll look at how to plan a Hyper-V implementation for your private cloud, including hosts, networking, storage, virtual machines (VMs) and management. I'll also look at how to configure it, along with some tips for ongoing maintenance and troubleshooting.

Planning for Success

As with any IT infrastructure implementation, it pays to plan up front; a well-planned fabric will serve you better than whatever servers your vendor thought would work for your scenario.

The first step is to gather as much information as you can about the expected workloads: What processor, memory, storage and networking resources will they require? How many VMs do you expect, and how is that number going to change over time? Armed with this information, work through the following areas to assemble a plan of which technologies you'll need, and how much of each, to build a high-performing fabric.

Hosts

While a single host running Hyper-V and a few VMs might work for a small branch office, in most cases you'll want to cluster several hosts together. The industry trend is to scale out: rather than a small number of very powerful (and expensive) hosts, use a larger number of cost-effective hosts built on commodity hardware.

This is partly due to the "cluster overhead" effect. If you only have two hosts in your cluster and one host is down, the remaining host has to have enough capacity to host all the VMs. This means you can really only use 50 percent of the cluster's overall capacity.

With four hosts, on the other hand, when one is down you lose only 25 percent of the overall capacity. As you scale out toward the maximum of 64 hosts in a Hyper-V cluster, efficiency keeps improving, although clusters of eight or more nodes often need to survive two hosts being down at the same time. Just make sure your hosts are big enough in terms of processor cores, storage IO and memory to accommodate your biggest expected workloads (SQL, anyone?).
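
To make the cluster overhead effect concrete, here's a small Python sketch that computes how much of a cluster's total capacity you can actually load with VMs. It assumes identical hosts and that the surviving hosts must absorb every VM when the given number of hosts is down; the host counts in the loop are just examples.

```python
def usable_fraction(hosts: int, failures_tolerated: int = 1) -> float:
    """Fraction of total cluster capacity you can safely load with VMs.

    Assumes identical hosts, and that the hosts left running must be able
    to absorb every VM while `failures_tolerated` hosts are down.
    """
    if hosts <= failures_tolerated:
        raise ValueError("cluster must have more hosts than tolerated failures")
    return (hosts - failures_tolerated) / hosts


for hosts, failures in [(2, 1), (4, 1), (8, 2), (16, 2), (64, 2)]:
    pct = usable_fraction(hosts, failures) * 100
    print(f"{hosts:>2} hosts, survive {failures} down: {pct:.1f}% usable")
```

With two hosts and one failure tolerated this gives 50 percent, and with four hosts 75 percent, matching the figures above; 16 hosts that must survive two failures can still be loaded to 87.5 percent.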

There are three versions of Hyper-V to select from: the free Hyper-V Server, Windows Server Standard (which comes with two Windows Server VM licenses for that host) and Windows Server Datacenter (which comes with unlimited Windows Server VM licenses for that host).

If you're going to run only Linux VMs or Windows client VMs (for VDI), the free Hyper-V Server is appropriate, because you don't need the Windows Server VM licenses that come with the other two. With the two Windows Server editions you can install hosts either with a GUI or in Server Core (command-line only) mode. The latter has less overhead and a smaller attack surface from a security point of view, but make sure your team is comfortable with command-line troubleshooting and Windows PowerShell.

The hosts should have processors from the same vendor (AMD or Intel), but they don't need to be the same model. Select hosts from the Windows Server Catalog to ensure support if you have to call Microsoft.

The management operating system that runs on top of the hypervisor is called the parent partition. You should run only management and backup agents there: no other software, and in particular nothing resource-heavy, as all resources should be available to the VMs.

Storage

Shared storage is a requirement for clustered Hyper-V hosts. This can be in the form of "local shared storage," which is common in Cluster in a Box (CiB) preconfigured servers where each host is connected to a Serial Attached SCSI (SAS) enclosure. This is appropriate for a small number of hosts (typically two to four).

Larger clusters will need SAN connectivity, either Fibre Channel or iSCSI, or alternatively Server Message Block (SMB) shares on a Scale-Out File Server (SOFS) cluster.

SOFS is a very attractive alternative to traditional SANs for Hyper-V and SQL Server workloads, both because it's easier to manage and because it's more cost effective. A SOFS cluster consists of commodity servers running Windows Server 2012 R2, backed by either a SAN or SAS enclosures with HDDs and SSDs. The HDDs provide the capacity, while the SSDs provide IO performance through storage tiering (a new feature in Windows Server 2012 R2).

Data is protected through two- or three-way mirroring (don't use parity, which is suited only to archive workloads), and the mirrors can span enclosures to protect against a whole enclosure failing.
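
Mirroring trades raw capacity for resilience. As a rough sketch, assuming each mirror copy consumes a full share of raw capacity and ignoring metadata and the spare capacity you should keep for rebuilds, usable space works out as follows (the 48TB raw figure is hypothetical):

```python
# Copies of each byte kept by each mirror layout, and how many simultaneous
# disk failures that layout can survive.
MIRROR_LAYOUTS = {
    "two-way": {"copies": 2, "failures_tolerated": 1},
    "three-way": {"copies": 3, "failures_tolerated": 2},
}


def usable_capacity_tb(raw_tb: float, layout: str) -> float:
    """Approximate usable capacity of a mirrored space.

    Ignores metadata overhead, rebuild reserve and tiering details,
    so real-world figures will be somewhat lower.
    """
    return raw_tb / MIRROR_LAYOUTS[layout]["copies"]


raw = 48.0  # hypothetical raw capacity across all enclosures, in TB
for name, info in MIRROR_LAYOUTS.items():
    print(f"{name}: {usable_capacity_tb(raw, name):.1f} TB usable, "
          f"survives {info['failures_tolerated']} disk failure(s)")
```

Three-way mirroring costs a third more raw capacity than two-way for the same usable space, in exchange for surviving a second failure during a rebuild.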

The point here is not to dismiss SOFS: feature for feature, it matches traditional SANs on performance, and it's generally easier to manage.

These options aren't mutually exclusive, though. Say, for instance, you have an existing SAN and need to add a 16-node Hyper-V cluster. If you have spare ports in your Fibre Channel or iSCSI switch and don't mind purchasing 32 new Host Bus Adapters (HBAs), you could simply wire up the new hosts to LUNs on the SAN. But you could also set up two SOFS servers (with only four HBAs and four connections on the switch needed) in front of the SAN, then connect your Hyper-V nodes to the SMB shares on the SOFS.
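
The HBA arithmetic in this example is simple but worth spelling out. This sketch assumes two HBAs (and therefore two switch ports) per server attached to the SAN fabric, which is what the figures of 32 and four above imply; in the SOFS design, the Hyper-V nodes reach the SOFS over Ethernet instead.

```python
def san_attachments(servers: int, hbas_per_server: int = 2) -> int:
    """HBAs needed, and switch ports consumed, when `servers` machines
    each connect to the SAN fabric with `hbas_per_server` adapters."""
    return servers * hbas_per_server


hyperv_nodes = 16
sofs_nodes = 2

direct = san_attachments(hyperv_nodes)  # every Hyper-V node wired to the SAN
via_sofs = san_attachments(sofs_nodes)  # only the SOFS nodes wired to the SAN

print(f"Direct attach: {direct} HBAs and switch ports")
print(f"Through SOFS:  {via_sofs} HBAs and switch ports")
```

The trade-off is that the two SOFS nodes now carry all of the cluster's storage traffic, so they need enough network bandwidth (ideally RDMA) toward the Hyper-V nodes.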

Virtual Hard Drives

Use VHDX files rather than the older VHD format for virtual hard disks, because VHDX supports larger disks (up to 64TB) and is more resilient to corruption. The old recommendation to use fixed-size disks for performance (a 300GB fixed disk takes up 300GB on the underlying storage, no matter how much data is actually stored on it) no longer applies.

Dynamically expanding disks, which consume only as much underlying storage as the data actually stored in them, now perform very close to fixed disks. Just be careful not to oversubscribe the underlying storage: as the virtual disks keep growing, you could run out of disk space.
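
A simple way to keep an eye on oversubscription is to compare the maximum size every dynamically expanding VHDX could grow to against the capacity of the volume that holds them. The sketch below does that; the disk names, sizes and the 80 percent warning threshold are hypothetical, and in practice you'd feed it numbers gathered from your hosts.

```python
from dataclasses import dataclass


@dataclass
class VirtualDisk:
    name: str
    max_size_gb: float  # size the dynamically expanding VHDX may grow to
    current_gb: float   # space it occupies on the volume today


def oversubscription_report(disks: list[VirtualDisk], volume_gb: float,
                            warn_percent: float = 80.0) -> dict:
    provisioned = sum(d.max_size_gb for d in disks)
    used = sum(d.current_gb for d in disks)
    percent_used = 100 * used / volume_gb
    return {
        "oversubscription_ratio": provisioned / volume_gb,  # > 1 means oversubscribed
        "percent_used": percent_used,
        "headroom_gb": volume_gb - used,
        "warn": percent_used >= warn_percent,  # hypothetical alert threshold
    }


disks = [
    VirtualDisk("sql-data", max_size_gb=500, current_gb=320),
    VirtualDisk("web01", max_size_gb=127, current_gb=40),
    VirtualDisk("web02", max_size_gb=127, current_gb=38),
]
report = oversubscription_report(disks, volume_gb=600)
print(report)
```

Here the disks could in theory grow to about 1.26 times the volume's capacity even though only about two-thirds of it is used today; that's workable as long as you watch the trend and add capacity before the headroom runs out.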

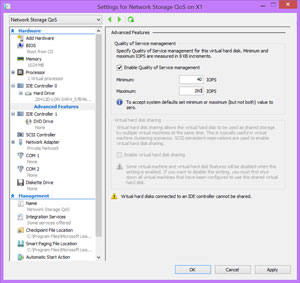

To control IO performance, you can use Storage Quality of Service (QoS) to set a maximum IOPS limit (in normalized 8KB chunks) on a per-virtual-disk basis (see Figure 1). You can also set a minimum IOPS value, but be aware that this is set on the Hyper-V host, so if the back-end storage can't deliver enough IOPS to satisfy all the VMs across all the hosts, the minimum IOPS aren't going to be delivered.

Figure 1. Storage Quality of Service management.
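
The normalization means a VM issuing large IOs uses up its limit faster than one issuing small IOs. The sketch below assumes an IO of 8KB or less counts as one normalized IO and a larger IO counts as one per 8KB, rounded up (so a 32KB IO counts as four); the rounding for sizes that aren't a multiple of 8KB is an assumption here. It also illustrates the minimum-IOPS caveat: the minimums configured across all hosts only mean something if the back end can serve their sum. The workload and capacity figures are hypothetical.

```python
import math

NORMALIZATION_UNIT_BYTES = 8 * 1024  # Storage QoS counts IO in 8KB units


def normalized_ios(io_size_bytes: int) -> int:
    """Normalized IOs charged for a single IO (assumes rounding up)."""
    return max(1, math.ceil(io_size_bytes / NORMALIZATION_UNIT_BYTES))


def normalized_iops(ios_per_second: int, io_size_bytes: int) -> int:
    """Normalized IOPS for a workload issuing IOs of one size."""
    return ios_per_second * normalized_ios(io_size_bytes)


def minimums_achievable(min_iops_per_disk: list[int], backend_iops: int) -> bool:
    """True if the back end can serve every configured minimum at once.

    Each host enforces its own settings, so sum the minimums for all
    virtual disks on all hosts that share the storage.
    """
    return sum(min_iops_per_disk) <= backend_iops


# A workload doing 500 IOs/s of 64KB each counts as 4,000 normalized IOPS,
# so a 1,000 IOPS maximum would throttle it hard.
print(normalized_iops(500, 64 * 1024))

# 40 virtual disks with a 500 IOPS minimum need 20,000 IOPS from the back end.
print(minimums_achievable([500] * 40, backend_iops=15_000))
```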

Networking

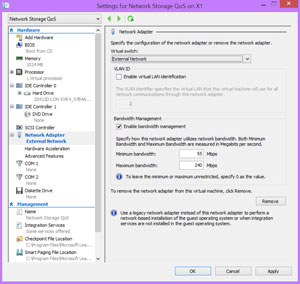

Networking on Hyper-V hosts is used for many different types of traffic: there's the intra-cluster communication (heartbeat), Live Migration (VMs moving from one host to the other while still running), backup traffic, client access to the servers, and if you're using iSCSI or SOFS, there's storage traffic. In previous times these were segregated on separate 1 Gbps interfaces, and you needed a lot of these. Today, most servers come with a couple of 10 Gbps interfaces. To achieve the same separation of different types of traffic, you should use Network QoS (shown in Figure 2) to limit the bandwidth for each type of traffic (see "Resources" for more on traffic and other resources).

Figure 2. Network Quality of Service management.
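
One common way to apply Network QoS is to give each traffic type a relative minimum-bandwidth weight, so each type is guaranteed its share when the link is congested but can use idle bandwidth otherwise. This sketch simply converts such weights into guaranteed Gbps; the traffic names, the weights and the assumption of two teamed 10 Gbps NICs are all hypothetical, and note that a single traffic flow may not be able to use the full bandwidth of a team.

```python
def guaranteed_gbps(weights: dict[str, int], link_gbps: float) -> dict[str, float]:
    """Bandwidth each traffic type is guaranteed under full contention,
    given relative minimum-bandwidth weights."""
    total = sum(weights.values())
    return {traffic: link_gbps * w / total for traffic, w in weights.items()}


# Hypothetical weights for a team of two 10 Gbps interfaces.
weights = {
    "client_access": 40,
    "storage": 30,
    "live_migration": 15,
    "backup": 10,
    "cluster_heartbeat": 5,
}

shares = guaranteed_gbps(weights, link_gbps=20)
for traffic, gbps in shares.items():
    print(f"{traffic:<18} {gbps:4.1f} Gbps minimum")
```

Weights rather than hard caps keep the links busy: when backup traffic is idle, for example, its share is available to the other traffic types.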

Windows Server has included NIC teaming since Windows Server 2012, allowing up to 32 interfaces to be teamed for bandwidth and redundancy, either in switch-independent mode or with switch awareness through Link Aggregation Control Protocol (LACP). For storage traffic (and perhaps Live Migration), you can use Remote Direct Memory Access (RDMA) network cards, which enable SMB Direct. There are three different RDMA technologies: iWARP, InfiniBand and RoCE. In essence they all do the same thing: they bypass the software networking stack, delivering extremely fast traffic (10 Gbps, 40 Gbps and 56 Gbps) with very little CPU overhead. This is how SOFS can match even the fastest Fibre Channel SAN.

In Windows Server 2012 R2, Live Migration can be set to use one of three performance options: TCP/IP, Compression or SMB. The latter two are the ones to consider:

- Compression. The default. Hyper-V watches the processor load on both the sending and receiving hosts; if there's spare capacity, the data stream is compressed and decompressed on the fly, resulting in a 2x (or better, according to Microsoft) improvement in most scenarios.

- SMB. If you have RDMA network cards, use the SMB setting, which lets Live Migration run over SMB Direct for very fast migrations. Many hosts today accommodate 20 to 30 VMs; when the time comes to patch the cluster or do other maintenance, moving all VMs off one host and doing the maintenance/reboot before moving on to the next host will be much faster with RDMA.

Separately, if you have specific VMs that need low-latency network access (to other VMs or clients, not to storage), consider Single Root IO Virtualization (SR-IOV) network cards; your server motherboards need to support SR-IOV, not just the NICs. SR-IOV bypasses the Hyper-V virtualized networking stack and projects a virtual function of the physical NIC directly into the VM. You can still Live Migrate a VM that uses one or more SR-IOV interfaces: if the destination host also has SR-IOV NICs with spare virtual functions (the maximum number depends on the NIC model), they will be used; otherwise, the network traffic falls back to ordinary Hyper-V networking.
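
To get a feel for why faster Live Migration matters when you patch a cluster, here's a back-of-the-envelope estimate of how long it takes to drain one host. It assumes VM memory dominates the transfer, that each page is copied only once (real migrations re-send pages that change during the copy), and that you achieve a given fraction of the link's raw speed; the VM sizes, link speeds, efficiency figures and the 2x compression ratio are all hypothetical inputs, not measurements.

```python
def drain_time_minutes(vm_memory_gb: list[float], link_gbps: float,
                       efficiency: float = 0.7,
                       compression_ratio: float = 1.0) -> float:
    """Rough minutes to live-migrate every VM off a host.

    `efficiency` is a hypothetical fraction of the raw link speed actually
    achieved. Migrations share the same link, so the total is estimated
    from the combined memory regardless of how many run concurrently.
    """
    total_gigabits = sum(vm_memory_gb) * 8 / compression_ratio
    return total_gigabits / (link_gbps * efficiency) / 60


host = [8.0] * 25  # 25 VMs with 8GB of memory each (200GB in total)

print(f"10 Gbps TCP/IP:      {drain_time_minutes(host, 10):.1f} min")
print(f"10 Gbps compression: {drain_time_minutes(host, 10, compression_ratio=2):.1f} min")
print(f"40 Gbps RDMA (SMB):  {drain_time_minutes(host, 40, efficiency=0.9):.1f} min")
```

Multiply the per-host figure by the number of hosts to see how much of a maintenance window a rolling patch cycle could take.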

There are other network-enhancing technologies to consider in your planning, such as Receive Side Scaling (RSS) and Virtual RSS (vRSS), which spread the CPU load of multiple data streams across multiple cores; IPsec Task Offload (IPsecTO), which offloads the encryption and decryption of IPsec traffic to the NIC; and Data Center Bridging (DCB), a hardware-based alternative to software network QoS.

Virtual Machine Considerations

Use the latest OS for your VMs if you can; in both the Linux and Windows worlds, the latest OSes are better at being virtualized. If the applications in your VMs can take advantage of multiple processors, you can safely assign as many (up to 64) vCPUs to them as you think they'll need. If the VM doesn't use them, the host will assign CPU resources to other VMs as needed.

If you have large hosts, make sure you're aware of the size of the Non-Uniform Memory Access (NUMA) nodes across your Hyper-V hosts. A NUMA node is a set of Logical Processors (CPU cores) together with a fixed amount of memory. If you have really big VMs that are assigned more memory or more vCPUs than fit in one NUMA node, be aware that Hyper-V projects a virtual NUMA topology into the VM, so that NUMA-aware applications can spread their load intelligently across the nodes.
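
A quick way to check whether a VM will span NUMA nodes is to compare its vCPU count and memory against the size of one host NUMA node. The sketch below is a simplified model, not Hyper-V's exact algorithm for sizing virtual NUMA nodes; the host figures (16 logical processors and 128GB per node) and the VM names are hypothetical, so substitute your own hardware's values.

```python
import math


def numa_nodes_spanned(vm_vcpus: int, vm_memory_gb: float,
                       lps_per_node: int, memory_gb_per_node: float) -> int:
    """Minimum number of host NUMA nodes needed to hold the VM's vCPUs and memory."""
    by_cpu = math.ceil(vm_vcpus / lps_per_node)
    by_memory = math.ceil(vm_memory_gb / memory_gb_per_node)
    return max(1, by_cpu, by_memory)


# Hypothetical host: 16 logical processors and 128GB of memory per NUMA node.
LPS, MEM = 16, 128

for name, vcpus, mem in [("app01", 8, 64), ("sql01", 24, 96), ("cache01", 8, 200)]:
    nodes = numa_nodes_spanned(vcpus, mem, LPS, MEM)
    note = "fits in one node" if nodes == 1 else f"spans {nodes} nodes"
    print(f"{name}: {note}")
```

Either dimension can push a VM across a node boundary: sql01 spans two nodes because of its vCPU count, cache01 because of its memory. Spanning isn't a problem in itself, but the application inside needs to be NUMA-aware to benefit from the projected topology.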

Management

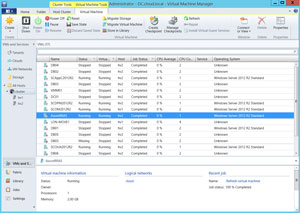

If you have just a few hosts, you can certainly use the built-in Hyper-V Manager, along with Failover Cluster Manager, to operate a small cluster. For a fabric larger than, say, four or five hosts, System Center 2012 R2 Virtual Machine Manager is the best management option.

Virtual Machine Manager (see Figure 3) brings so much more than just managing VMs to the table:

- It can deploy the OS and configuration to a set of new, bare-metal servers you've just installed, either as SOFS or Hyper-V hosts.

- It can manage your Top of Rack switches, and automatically provision storage for VMs on any SAN or SOFS using SMI-S.

- It has a library for storing all VM components, and it can deploy single or combinations of multiple VMs as services/distributed applications.

- It manages Hyper-V and VMware vSphere environments.

- It can deploy clouds across both platforms, to abstract the details of the underlying resources away from users of your private clouds.

Figure 3. Microsoft System Center Virtual Machine Manager at work.

Hyper-V Configuration

Once your planning is complete, you've worked out which technology choices are needed for your environment, and you've purchased the boxes, it's time to configure it all.

As mentioned earlier, you can use Virtual Machine Manager to deploy the OS to both Hyper-V and SOFS hosts. Whether it's worth the testing and fine-tuning needed to make this work reliably depends on how many new hosts you have. If it's fewer than about 20, it'll probably be faster to install Windows Server using your standard method (Configuration Manager, Windows Deployment Services or a third-party tool).

The next step is to update all drivers and all firmware; even new servers are often delivered with out-of-date software. After this, you want to apply all Hyper-V and Cluster updates to all hosts.

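If you're going to run anti-malware software on the Hyper-V hosts, make sure to exclude the relevant files, folders and processes, such as the folders holding VM configuration files and virtual hard disks, and the Vmms.exe and Vmwp.exe processes.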

Use Dynamic Memory for all guests, unless the workload inside a VM doesn't support it (Exchange doesn't, for example). If your security policy allows it, consider enabling Enhanced Session Mode, which gives you an RDP-based connection to the VM through the host, with easy copy and paste between host and guest, even if the VM has no network connection.
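
Dynamic Memory sizes each VM from its current memory demand plus a configurable buffer, kept within the VM's minimum and maximum settings. The sketch below is a simplified model of that target calculation, not Hyper-V's actual balancing logic (which also has to share memory among VMs when the host runs short); the 20 percent buffer matches Hyper-V's default, while the demand and limit values are hypothetical.

```python
def dynamic_memory_target_mb(demand_mb: int, minimum_mb: int, maximum_mb: int,
                             buffer_percent: int = 20) -> float:
    """Memory Hyper-V would aim to assign: demand plus buffer, clamped to limits.

    Simplified model: ignores memory weight/priority and host memory pressure.
    """
    target = demand_mb * (100 + buffer_percent) / 100
    return float(min(max(target, minimum_mb), maximum_mb))


print(dynamic_memory_target_mb(2000, minimum_mb=512, maximum_mb=8192))  # buffer applies
print(dynamic_memory_target_mb(7000, minimum_mb=512, maximum_mb=8192))  # capped at maximum
print(dynamic_memory_target_mb(300, minimum_mb=512, maximum_mb=8192))   # raised to minimum
```

Setting a sensible maximum matters: without one, a VM with a memory leak can keep claiming memory that other VMs on the host need.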

Define which network interfaces to use for Live Migration traffic in Failover Cluster Manager: right-click Networks and select Live Migration Settings, then uncheck the networks you don't want to use and arrange the ones you do want in order of preference.

If you have VMs running as domain controllers, disable the Hyper-V time synchronization integration service for them, and configure the PDC emulator of the forest root domain to keep accurate time from an NTP server on the Internet.

If you're using SANs as cluster shared storage and your SAN supports Offloaded Data Transfer (ODX), make sure you enable this support on each host, as well as in any VM connecting directly to your SAN.

Ensure your VMs are running the latest Integration Components. These used to be installed through the host, but are now distributed through Windows Update (and, thus, through Windows Server Update Services or Configuration Manager), which should make this task easier. If you're using the Datacenter edition for your hosts, enable Automatic Virtual Machine Activation (AVMA) and configure each VM with the matching AVMA key; the days of tracking license keys across your fleet of VMs will be over.

Backing up your VMs is, of course, paramount. You can use Microsoft Data Protection Manager, Veeam Backup Free Edition or the recently released Veeam Availability Suite v8. The built-in Hyper-V Replica can also be used for backup and disaster recovery. It asynchronously replicates VM disk writes (every 30 seconds, 5 minutes or 15 minutes) to another Hyper-V host, first in the same datacenter and then (if you use extended replication) to another datacenter or Azure. You can fail over to these replica VMs in case of a disaster; if that happens, they can be injected with a different IP configuration to match the subnets in the failover datacenter or Azure virtual network.
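
When choosing a replication frequency, it helps to estimate how much changed data each cycle has to ship and whether your link can keep up. This sketch assumes a steady write rate, ignores Hyper-V Replica's optional compression and any write bursts, and treats the worst-case data loss as one interval plus the time to ship that interval's changes; the 2MB/s churn and 100 Mbps link are hypothetical.

```python
REPLICATION_INTERVALS_S = (30, 300, 900)  # 30 seconds, 5 minutes, 15 minutes


def replica_estimate(churn_mb_per_s: float, interval_s: int,
                     link_mbit_per_s: float) -> dict:
    """Per-cycle log size, transfer time and approximate worst-case data loss."""
    log_mb = churn_mb_per_s * interval_s
    transfer_s = log_mb * 8 / link_mbit_per_s
    return {
        "log_per_cycle_mb": log_mb,
        "transfer_s": transfer_s,
        "keeps_up": transfer_s <= interval_s,  # otherwise cycles fall behind
        "worst_case_loss_s": interval_s + transfer_s,
    }


for interval in REPLICATION_INTERVALS_S:
    est = replica_estimate(churn_mb_per_s=2.0, interval_s=interval, link_mbit_per_s=100)
    print(f"every {interval:>3}s: {est['log_per_cycle_mb']:.0f} MB per cycle, "
          f"keeps up: {est['keeps_up']}, worst-case loss ~{est['worst_case_loss_s']:.0f}s")
```

Whether the link keeps up depends only on the ratio of churn to bandwidth, not on the interval; longer intervals just mean bigger batches and a larger window of potential data loss.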

Maintaining Hyper-V

Virtual Machine Manager works hand-in-hand with Operations Manager to monitor for issues in your infrastructure, as well as provide forecasting for your capacity utilization. Veeam offers comprehensive Management Packs for Operations Manager for both Hyper-V and VMware environments.

If you have specific performance issues or need to baseline your Hyper-V or storage nodes, use Performance Monitor; don't use Task Manager, as it's not Hyper-V-aware. Download and learn to use Performance Analysis of Logs (PAL), which analyzes Performance Monitor logs against known thresholds and will help you make sense of logging across several hosts.

Hyper-V is a very capable platform on which to build your business's private cloud, and, along with System Center, it can be managed and monitored effectively. Technical insight and planning are required to navigate all the options available. Hopefully, this article has provided some guidance toward a successful implementation.

(Editor's Note: This article is part of a special edition of Virtualization Review Magazine devoted to the changing datacenter. Get the entire issue here.)