
What Is dHCI, Part 1: Topology, Benefits and a Look at HPE's Solution

Tom Fenton discusses disaggregated hyperconverged infrastructure (dHCI), an interesting emerging datacenter technology that can address the limitations of HCI while still embracing its attractive features.

In this two-article series, I will discuss disaggregated hyperconverged infrastructure (dHCI). I think it is one of the more interesting emerging technologies in the datacenter because it can overcome many of the limitations of hyperconverged infrastructure (HCI) while still embracing the factors that make HCI so attractive. Here I will give you an overview of dHCI and explore one vendor's implementation of it. In my second article I will look at how a dHCI implementation works with vVols and how to monitor it.

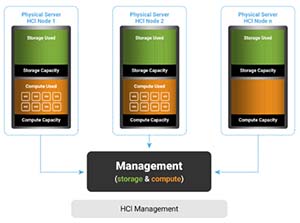

Before we do that, though, we need to look at hyperconverged infrastructure in general. HCI came onto the scene about seven years ago and quickly became a force in the datacenter because of its ease of deployment and management. It clusters commodity servers and uses software-defined storage to pool the servers' local drives into a shared storage array. It also allows the entire cluster's compute and storage to be managed from a single management console.

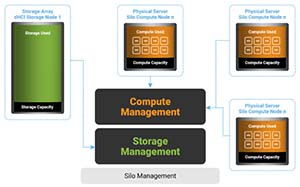

HCI eliminated the need to purchase, maintain, and manage a separate storage array, as was traditionally required in a siloed datacenter architecture.

HCI's first large-scale use case was virtual desktop infrastructure (VDI). What made HCI such a good fit for VDI was the predictable way in which VDI was rolled out: if you needed x desktops, you knew exactly how many HCI servers you needed. Part of what made VDI desktops easy to architect was that VDI users were, for the most part, task workers who didn't need GPUs or other non-standard hardware, so vendors could build their VDI offerings around this predictable workload. Once organizations discovered how easy an HCI was to manage, they started to use it for general-purpose datacenter workloads.
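
To make the sizing point concrete, here is a minimal Python sketch of linear VDI sizing. It assumes a hypothetical density of 100 task-worker desktops per HCI node and one spare node for failover; neither figure comes from a vendor, and real densities depend on the desktop image and the hardware.

```python
import math


def hci_nodes_for_vdi(desktops: int, desktops_per_node: int, spare_nodes: int = 1) -> int:
    """Nodes for a uniform VDI pool: ceil(desktops / density) plus spare nodes.

    desktops_per_node and spare_nodes are planning assumptions, not vendor figures.
    """
    if desktops < 0 or desktops_per_node <= 0 or spare_nodes < 0:
        raise ValueError("invalid sizing input")
    return math.ceil(desktops / desktops_per_node) + spare_nodes


# Doubling the desktop count roughly doubles the node count.
for count in (500, 1000, 2000):
    print(count, "desktops ->", hci_nodes_for_vdi(count, desktops_per_node=100), "nodes")
```

Because each node adds a fixed slice of both compute and storage, the node count simply tracks the desktop count, which is the predictability that suited early HCI deployments.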

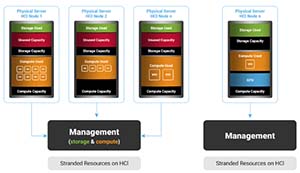

There are drawbacks, however. Chief among them is that not all applications, and increasingly not all VDI environments, scale out linearly, which creates an imbalance between storage and compute. Further exacerbating the problem is that we are seeing more and more power users in VDI environments, and these users require GPUs, storage class memory (SCM), or other specialized hardware not offered within the relatively small list of HCI-compatible nodes.

This imbalance between storage and compute in an HCI cluster can lead to stranded resources, that is, resources that have been purchased but not used. Furthermore, resources like GPUs and SCM can't be used in the cluster because they're not offered with the HCI servers, so they have to be managed separately.
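
A small worked example shows how stranding happens. The minimal Python sketch below assumes a hypothetical HCI node with 32 cores and 10 TiB of usable storage. Because every node adds both, the node count is driven by whichever resource runs out first, and the surplus of the other resource is stranded.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class HciNode:
    cores: int          # hypothetical cores per node
    storage_tib: float  # hypothetical usable storage per node


def hci_stranded(need_cores: int, need_storage_tib: float, node: HciNode) -> tuple[int, int, float]:
    """Return (nodes, stranded_cores, stranded_tib) for an HCI cluster.

    Compute and storage are bought together, so the larger requirement
    (measured in node units) sets the node count.
    """
    nodes = max(math.ceil(need_cores / node.cores),
                math.ceil(need_storage_tib / node.storage_tib))
    stranded_cores = nodes * node.cores - need_cores
    stranded_tib = nodes * node.storage_tib - need_storage_tib
    return nodes, stranded_cores, stranded_tib


node = HciNode(cores=32, storage_tib=10.0)
# Storage-heavy workload: 8 nodes are needed for capacity, but only 2 nodes' worth of compute is used.
print(hci_stranded(need_cores=64, need_storage_tib=80, node=node))
# Compute-heavy workload: 10 nodes are needed for cores, and most of the storage sits idle.
print(hci_stranded(need_cores=320, need_storage_tib=20, node=node))
```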

To alleviate these issues, vendors have designed architectures that keep the best attributes of HCI, namely the unified management of storage and compute, while adding the flexibility of a traditional datacenter architecture (i.e., the ability to scale compute and storage independently of each other). Think of storage and compute as separate abstraction layers that share a common management plane. This topology has been dubbed disaggregated HCI (dHCI).
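
With dHCI, each tier is sized on its own. This minimal Python sketch assumes hypothetical building blocks (32-core servers and 20 TiB storage increments, such as an array or an expansion shelf). Leftover capacity comes only from rounding within a tier, and growing storage does not force you to buy compute.

```python
import math


def dhci_tiers(need_cores: int, need_storage_tib: float,
               cores_per_server: int, tib_per_storage_unit: float) -> dict:
    """Size compute and storage as separate tiers that share one management plane.

    Each tier is rounded up independently, so spare capacity in one tier is
    never caused by demand in the other.
    """
    servers = math.ceil(need_cores / cores_per_server)
    storage_units = math.ceil(need_storage_tib / tib_per_storage_unit)
    return {
        "servers": servers,
        "storage_units": storage_units,
        "spare_cores": servers * cores_per_server - need_cores,
        "spare_tib": storage_units * tib_per_storage_unit - need_storage_tib,
    }


# A storage-heavy workload: lots of capacity, modest compute.
print(dhci_tiers(need_cores=64, need_storage_tib=80, cores_per_server=32, tib_per_storage_unit=20.0))
# Storage grows by 20 TiB: one more storage unit, no new servers.
print(dhci_tiers(need_cores=64, need_storage_tib=100, cores_per_server=32, tib_per_storage_unit=20.0))
```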

The first time I was exposed to dHCI was at NetApp Insight 2017, when NetApp announced an offering that coupled its SolidFire all-flash arrays with vSphere.

Since then, other vendors have come up with their own dHCI offerings, and I recently had a chance to work with HPE's solution to get a better understanding of dHCI and how third-party products like ControlUp complement it.

HPE dHCI Environment

The HPE dHCI environment that I worked with was made up of three HPE DL360 Gen10 servers and an HPE Nimble AF20Q array. Each server had dual Intel Xeon 6130 processors and 128GB of RAM, and ran VMware ESXi 6.7. The array had twelve 960GB SSDs, providing 5.8 TiB of usable storage. The servers were connected to the array over a 10Gb network through an HPE FF570 32XGT switch.
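
Drive capacities are quoted in decimal gigabytes while the array reports usable space in binary tebibytes, so the raw and usable figures above aren't directly comparable. This short Python sketch converts the raw capacity to TiB. The gap between raw and usable capacity presumably reflects overhead such as RAID parity, sparing, and system reserves; the figures here don't break it down.

```python
def decimal_gb_to_tib(gb: float) -> float:
    """Convert vendor (decimal) gigabytes, 10**9 bytes, to tebibytes, 2**40 bytes."""
    return gb * 10**9 / 2**40


DRIVES = 12
DRIVE_GB = 960
USABLE_TIB = 5.8  # usable capacity reported for the array

raw_tib = decimal_gb_to_tib(DRIVES * DRIVE_GB)
print(f"raw: {raw_tib:.2f} TiB, usable: {USABLE_TIB} TiB, "
      f"usable/raw: {USABLE_TIB / raw_tib:.0%}")
```

This prints a raw capacity of about 10.48 TiB, so roughly 55% of raw capacity is usable in this configuration.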

I watched HPE do a complete greenfield buildout of a dHCI environment, and then a brownfield deployment of dHCI using an existing vCenter Server.

In both deployments, the first step was logging in to the Nimble Storage array via a web browser and then using a wizard to configure dHCI. In step two, the wizard asks whether you want to use an existing vCenter Server or create a new one. Other than that, the steps are identical and involve specifying networking information and passwords. That's really all that creating a dHCI environment entails. If you would like to see more about what is involved in setting up an environment, you can read about it on StorageReview.com. I found it pretty impressive that the wizard can instantiate its own vCenter Server and then install the dHCI plugin on it.
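
The wizard itself is graphical, but it can help to think of it as collecting one set of inputs in which only the vCenter choice differs between a greenfield and a brownfield deployment. The Python sketch below is a hypothetical model of that input set with a few sanity checks; the class, field names, and checks are illustrative assumptions, not HPE's actual wizard fields.

```python
import ipaddress
from dataclasses import dataclass, field
from enum import Enum


class VCenterMode(Enum):
    NEW = "create a new vCenter Server"          # greenfield
    EXISTING = "use an existing vCenter Server"  # brownfield


@dataclass
class DhciInputs:
    """Hypothetical model of the wizard's inputs; field names are illustrative."""
    vcenter_mode: VCenterMode
    mgmt_subnet: str                                  # e.g. "10.0.10.0/24"
    mgmt_ips: list[str] = field(default_factory=list)
    password: str = ""

    def problems(self) -> list[str]:
        """Return human-readable input problems (an empty list if none are found)."""
        issues = []
        net = ipaddress.ip_network(self.mgmt_subnet)
        for ip in self.mgmt_ips:
            if ipaddress.ip_address(ip) not in net:
                issues.append(f"{ip} is outside {net}")
        if len(set(self.mgmt_ips)) != len(self.mgmt_ips):
            issues.append("duplicate management IPs")
        if not self.password:
            issues.append("password is empty")
        return issues


# Greenfield and brownfield differ only in vcenter_mode; the checks are the same.
green = DhciInputs(VCenterMode.NEW, "10.0.10.0/24", ["10.0.10.11", "10.0.10.12"], "s3cret")
brown = DhciInputs(VCenterMode.EXISTING, "10.0.10.0/24", ["10.0.11.5"], "")
print(green.problems())
print(brown.problems())
```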

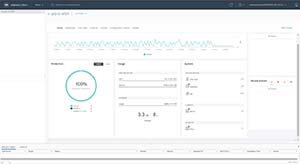

The dHCI plugin on the vCenter client shows the overall health of the dHCI environment, including both compute and storage.
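
How the plugin combines component status into one overall health value isn't described here. A common convention is worst-status-wins, sketched below in Python; the status levels and component names are hypothetical.

```python
from enum import IntEnum


class Health(IntEnum):
    OK = 0
    WARNING = 1
    CRITICAL = 2


def overall_health(components: dict[str, Health]) -> Health:
    """Worst status wins: any CRITICAL component makes the whole environment CRITICAL."""
    return max(components.values(), default=Health.OK)


status = {
    "esxi-01": Health.OK,        # compute
    "esxi-02": Health.OK,
    "esxi-03": Health.WARNING,
    "nimble-array": Health.OK,   # storage
}
print(overall_health(status).name)  # prints "WARNING"
```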

The dHCI plugin makes it trivial to add ESXi hosts to a dHCI cluster. To add a new server, you use a wizard that scans the network for ESXi hosts. You select the host that you want to add and enter some networking information and passwords, and the host is then added to your dHCI cluster. Once added, it is configured with the required vSwitches, VMkernel ports, iSCSI initiators and firewall settings, and HA and DRS are enabled for it.
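
The mechanism the wizard uses to discover ESXi hosts isn't described. As a generic illustration only, the Python sketch below shortlists candidates by checking which addresses accept a TCP connection on port 443, where ESXi serves its HTTPS management interface. This is an assumption about one possible approach, not HPE's method, and a real discovery step would still have to confirm that each responder is actually an ESXi host.

```python
import socket
from collections.abc import Iterable


def probe_hosts(addresses: Iterable[str], port: int = 443, timeout: float = 0.5) -> list[str]:
    """Return the addresses that accept a TCP connection on `port` within `timeout` seconds."""
    found = []
    for addr in addresses:
        try:
            # The connection is closed immediately; we only care that it succeeded.
            with socket.create_connection((addr, port), timeout=timeout):
                found.append(addr)
        except OSError:
            # Refused, unreachable, or timed out: not a candidate.
            pass
    return found
```

For example, `probe_hosts(f"10.0.10.{i}" for i in range(20, 30))` would check a hypothetical range of ten addresses one after another; a production scanner would probe in parallel.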

In this article I gave you a look at the topology and benefits of dHCI and at HPE's implementation of it. In Part 2, I will take a look at how HPE implements vVols on Nimble Storage and at using ControlUp to monitor it.

About the Author

Tom Fenton has a wealth of hands-on IT experience gained over the past 30 years in a variety of technologies, with the past 20 years focusing on virtualization and storage. He previously worked as a Technical Marketing Manager for ControlUp. He also previously worked at VMware in Staff and Senior level positions. He has also worked as a Senior Validation Engineer with The Taneja Group, where he headed the Validation Service Lab and was instrumental in starting up its vSphere Virtual Volumes practice. He's on X @vDoppler.