Tips for Managing VDI, Part 5: Hyperconverged Infrastructure

In this series of articles, I have discussed tips for managing VDI. For the most part, these articles have not looked at the backend infrastructure that VDI runs on; in this article I will. I will cover both the infrastructure that was first used for VDI and the infrastructure that is now most commonly used for it.

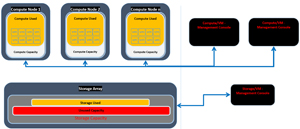

Converged Infrastructure

When VDI first started, converged infrastructure (CI) was the state-of-the-art datacenter topology. It was essentially composed of a discrete storage array and the servers that used the common pool of data stored on it.

In the early 2000s, servers and storage were usually connected via Fibre Channel for block storage for high-performance applications, while NFS was used for file-based storage. Fibre Channel was preferred for high-performance applications because it offered gigabit speeds at a time when Ethernet was commonly 100Mb. We have come a long way since then, and some converged infrastructure topologies now use 400Gb networking with iSCSI and NVMe-oF as their storage protocols.
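
To put those link speeds in perspective, the short Python sketch below estimates how long it would take to copy a virtual desktop's disk over each link. The 40 GB disk size and the list of links are illustrative assumptions, the speeds are nominal line rates, and the math ignores protocol overhead, latency, and storage-side bottlenecks, so real transfers will be slower.

```python
# Idealized transfer-time estimate for illustration only: assumes the link is
# fully saturated and ignores protocol overhead, latency, and storage limits.

# Nominal line rates in gigabits per second (illustrative list).
LINK_SPEEDS_GBPS = {
    "100Mb Ethernet": 0.1,
    "1Gb Fibre Channel": 1.0,
    "400Gb Ethernet": 400.0,
}


def transfer_seconds(size_gb: float, link_gbps: float) -> float:
    """Seconds to move size_gb decimal gigabytes over a link of link_gbps."""
    size_gigabits = size_gb * 8  # 8 bits per byte
    return size_gigabits / link_gbps


if __name__ == "__main__":
    disk_gb = 40.0  # hypothetical virtual desktop disk size
    for name, gbps in LINK_SPEEDS_GBPS.items():
        print(f"{name}: {transfer_seconds(disk_gb, gbps):,.1f} s")
```

Under these assumptions, a 40 GB disk takes about 3,200 seconds (nearly an hour) at 100Mb, 320 seconds at 1Gb, and under a second at 400Gb.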

In theory, CI allows companies to pick "best-of-breed" components and assemble them like Lego. You can pick servers from IBM, Dell, HP, and so on, pick storage from another vendor such as NetApp, EMC, or Hitachi, and just plug them together. In reality, in the early days the connectivity standards were loose enough to cause compatibility issues, and these issues were painful to track down.

Hypervisors use storage arrays because storage that is shared among many servers allows virtual machines to start on any server that has the CPU and memory capacity to host them. vMotion allows a running VM to be moved from one server to another when maintenance or performance requires it.
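
The value of shared storage can be illustrated with a toy placement check. The `Host` and `VM` classes and the `eligible_hosts()` function below are hypothetical and only model free vCPUs and memory; this is not how vSphere, DRS, or vMotion actually choose hosts. It simply shows that once every server can see a VM's disk, any server with spare CPU and memory is a valid home for it, whereas without shared storage the VM is tied to the server holding its disk.

```python
from dataclasses import dataclass


@dataclass
class Host:
    name: str
    free_vcpus: int
    free_memory_gb: int


@dataclass
class VM:
    name: str
    vcpus: int
    memory_gb: int
    disk_host: str  # host holding the VM's disk if storage is NOT shared


def eligible_hosts(vm: VM, hosts: list[Host], shared_storage: bool = True) -> list[str]:
    """Return the names of hosts that could run the VM (hypothetical model)."""
    # Every candidate must have enough spare CPU and memory.
    fits = [
        h.name
        for h in hosts
        if h.free_vcpus >= vm.vcpus and h.free_memory_gb >= vm.memory_gb
    ]
    if shared_storage:
        # Every host can reach the VM's disk, so capacity is the only constraint.
        return fits
    # Without shared storage the VM can only run where its disk lives.
    return [name for name in fits if name == vm.disk_host]


if __name__ == "__main__":
    hosts = [Host("host01", 4, 16), Host("host02", 16, 64), Host("host03", 8, 4)]
    vm = VM("desktop-042", vcpus=2, memory_gb=8, disk_host="host02")
    print(eligible_hosts(vm, hosts))                        # ['host01', 'host02']
    print(eligible_hosts(vm, hosts, shared_storage=False))  # ['host02']
```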

One of the downsides of using storage arrays was that they were usually administered by a separate group, so bringing new applications online took a lot of coordination and the process was relatively inflexible. Even something as minor as increasing the storage for a running application was a painful process, as silos formed around the "Storage" and "Compute" groups.

To further complicate matters, storage arrays are usually based on ASIC technology and were slow to adopt advances such as NVMe drives. Because arrays tend to be big monolithic devices, they were usually oversized to allow for growth, and gradually adding small amounts of capacity was difficult and expensive.

Hyperconverged Infrastructure

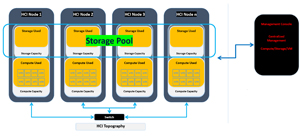

Back in 2012, when I worked for the Taneja Group, we saw a new technology starting to appear on the scene that used the local storage on servers to create a pool that could be used by any server in that pool, or cluster. This avoids a lot of the issues with CI and allows a data center to grow in a very gradual and holistic manner.

Taneja defined Hyperconverged Infrastructure (HCI) as "the integration of multiple previously separate IT domains into one system in order to serve up an entire IT infrastructure from a single device or system." This means that HCI systems contain all IT infrastructure: networking, compute and storage. Such capability implies an architecture built for seamless and easy scaling over time, in a "grow as needed" fashion.

The primary difference between CI and HCI is that in HCI the underlying storage abstractions are implemented virtually in software (at or via the hypervisor) rather than physically in hardware. Because all the elements are software-defined, all resources can be shared across all HCI nodes.
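
As a rough illustration of how software can turn each node's local drives into one shared pool, the sketch below computes raw and usable capacity for a cluster. It assumes simple replication of every block across nodes (two copies by default), which is a common protection scheme but not necessarily what any particular HCI product uses; the `pool_capacity_tb()` function and the node sizes are hypothetical.

```python
def pool_capacity_tb(node_raw_tb: list[float], replicas: int = 2) -> dict[str, float]:
    """Raw and usable capacity of a pool built from each node's local drives.

    Assumes every block is stored `replicas` times on different nodes, so
    usable space is raw space divided by the replica count. Erasure coding,
    deduplication, compression, and rebuild headroom are ignored.
    """
    if replicas < 1:
        raise ValueError("replicas must be at least 1")
    if replicas > len(node_raw_tb):
        # Each copy must live on a different node to survive a node failure.
        raise ValueError("need at least as many nodes as replicas")
    raw = sum(node_raw_tb)
    return {"raw_tb": raw, "usable_tb": raw / replicas}


if __name__ == "__main__":
    cluster = [8.0, 8.0, 8.0, 8.0]  # hypothetical: four nodes, 8 TB of local flash each
    print(pool_capacity_tb(cluster))          # {'raw_tb': 32.0, 'usable_tb': 16.0}
    # Growing the cluster adds capacity one node at a time.
    print(pool_capacity_tb(cluster + [8.0]))  # {'raw_tb': 40.0, 'usable_tb': 20.0}
```

The point of the second call is the "grow as needed" property: capacity increases in node-sized steps rather than by replacing or oversizing a monolithic array.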

Some HCI products grew out of storage companies, others from server-based technology, others from hypervisors, and some were purpose-built for HCI from the outset.

HCI eliminates these silos and enables centralized management of compute, storage, and networking.

The first killer use case for HCI was VDI, which needs compute and storage to grow together in a gradual, predictable manner.
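
To show why VDI and HCI fit together, here is a simplified sizing sketch. All of the per-desktop and per-node figures are hypothetical placeholders, the `nodes_needed()` helper is illustrative, and real sizing should also account for boot storms, IOPS, and the protection overhead of the storage pool.

```python
import math


def nodes_needed(
    desktops: int,
    per_desktop: dict[str, float],
    per_node: dict[str, float],
    spare_nodes: int = 1,
) -> int:
    """Number of identical HCI nodes needed to host `desktops` virtual desktops.

    Because each node adds compute and storage together, the cluster size is
    set by whichever resource runs out first, plus spare nodes for failover.
    """
    counts = [
        math.ceil(desktops * need / per_node[resource])
        for resource, need in per_desktop.items()
    ]
    return max(counts) + spare_nodes


if __name__ == "__main__":
    # Hypothetical sizing figures; replace with measurements from your own pool.
    per_desktop = {"vcpus": 2, "memory_gb": 8, "storage_gb": 60}
    # 256 vCPUs per node assumes some CPU overcommit.
    per_node = {"vcpus": 256, "memory_gb": 1024, "storage_gb": 16000}
    for count in (500, 1000):
        print(count, "desktops ->", nodes_needed(count, per_desktop, per_node), "nodes")
```

With these made-up numbers, 500 desktops need five nodes and 1,000 need nine, so doubling the desktop count is absorbed by adding nodes rather than buying another array.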

Several new technologies made HCI practical.

CPUs became more powerful and gained more cores; 32- and even 64-core CPUs are now common, and both AMD and Intel offer 128-core CPUs. CPUs also include built-in features for storage and networking functions. Servers support huge amounts of RAM and have multiple levels of cache.

SATA SSDs started to become popular just as HCI became widely available, which allowed servers to deliver IOPS comparable to storage arrays. Now NVMe drives are cost-competitive with SATA SSDs: a recent study I did found only a 16 percent cost difference between NVMe and SATA SSDs, and for that difference you get a drive that is many times more performant.
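
The 16 percent figure is easier to appreciate as cost per IOPS. The sketch below takes that premium and a performance multiple; since the text only says "many times more performant," the multiples used here are hypothetical and should be replaced with measured numbers. It also ignores the possibility that CPU or network limits keep a server from using all of an NVMe drive's IOPS. The `relative_cost_per_iops()` name is illustrative.

```python
def relative_cost_per_iops(price_premium: float, performance_multiple: float) -> float:
    """Cost per IOPS of NVMe relative to SATA SSD (1.0 means the same).

    price_premium: extra cost of NVMe as a fraction (0.16 for 16 percent).
    performance_multiple: how many times more IOPS the NVMe drive delivers.
    """
    if performance_multiple <= 0:
        raise ValueError("performance_multiple must be positive")
    return (1 + price_premium) / performance_multiple


if __name__ == "__main__":
    premium = 0.16  # the 16 percent price difference from the study
    for multiple in (2, 4, 6):  # hypothetical IOPS multiples, not measured data
        ratio = relative_cost_per_iops(premium, multiple)
        print(f"{multiple}x IOPS -> {ratio:.2f}x the cost per IOPS")
```

With a fourfold IOPS advantage, for example, NVMe would cost about 0.29 times as much per IOPS as SATA.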

For VDI, HCI offers ease of management, ease of deployment, flexibility, and cost savings.

In my next article I will look at some more VDI infrastructure options.

About the Author

Tom Fenton has a wealth of hands-on IT experience gained over the past 30 years in a variety of technologies, with the past 20 years focusing on virtualization and storage. He previously worked as a Technical Marketing Manager for ControlUp. He also previously worked at VMware in Staff and Senior level positions. He has also worked as a Senior Validation Engineer with The Taneja Group, where he headed the Validation Service Lab and was instrumental in starting up its vSphere Virtual Volumes practice. He's on X @vDoppler.