From Deepfakes to Facial Recognition, Stanford Report Tracks Big Hike in AI Misuse

A sprawling new report from Stanford University charts a steep rise in the misuse of AI technology, which is increasingly being applied to image and video deepfakes and to questionable facial recognition and surveillance efforts.

The 2023 AI Index Report was published this week by the Stanford Institute for Human-Centered Artificial Intelligence (HAI). The report series, dating back to 2017, tracks, collates, distills and visualizes data related to AI in order to provide unbiased, vetted and globally sourced data for policymakers, researchers, executives, journalists and the general public.

Designed to enable decision-makers to take meaningful action to advance AI responsibly and ethically with humans in mind, the report examines R&D, technical performance, ethics and many other aspects of AI technology.

One of the key takeaways of the report, as presented by HAI, is that the number of incidents concerning the misuse of AI is rapidly rising. The data backing up that claim comes from the AI, Algorithmic, and Automation Incidents and Controversies (AIAAIC) repository, described as a public interest resource detailing incidents and controversies driven by and relating to AI, algorithms and automation.

Specifically, the AIAAIC data shows that the number of AI incidents and controversies was 26 times greater in 2021 than in 2012.

Number of AI Incidents and Controversies, 2012-21 (source: Stanford HAI, AIAAIC).

"Chalk that up to both an increase in AI use and a growing awareness of its misuse," the 2023 AI Index Report said. Reported issues included a deepfake of Ukrainian President Volodymyr Zelenskyy surrendering to Russia, face recognition technology intended to track gang members and rate their risk, surveillance technology to scan and determine the emotional states of students in a classroom, and inmate call-monitoring technology used by prisons.

What's more, because the AIAAIC data behind that figure is at least two years old, the hike in misuse doesn't include many recent examples that have sprung up since the advent of advanced AI systems like ChatGPT. Those include infamous deepfakes falsely depicting Donald Trump being forcibly arrested by cops and Pope Francis wearing a puffy white designer coat.

The data on AI misuse is presented in a main section of the report dealing with technical AI ethics, a topic coming under increasing scrutiny.

For example, AI ethics was a key concern of thousands of people, including notable industry figures Elon Musk and Steve Wozniak, who signed an open letter titled "Pause Giant AI Experiments." As detailed in the Virtualization & Cloud Review article, "Commercial AI Pushes Receiving More Blowback," the letter specifically says: "We call on all AI labs to immediately pause for at least 6 months the training of AI systems more powerful than GPT-4."

The open letter signatories say AI labs and independent experts should pause AI advancement to jointly develop and implement a set of shared safety protocols for advanced AI design and development that are rigorously audited and overseen by independent outside experts.

One concern of AI watchers is that AI initiatives are increasingly backed by big-money corporations for paid products, steering the industry away from an initial research focus to profit-driven advancements that might be taking the industry too far, too fast.

That concern was echoed in this week's Stanford report.

"Fairness, bias, and ethics in machine learning continue to be topics of interest among both researchers and practitioners," the report said. "As the technical barrier to entry for creating and deploying generative AI systems has lowered dramatically, the ethical issues around AI have become more apparent to the general public. Startups and large companies find themselves in a race to deploy and release generative models, and the technology is no longer controlled by a small group of actors."

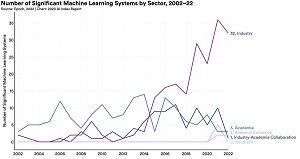

Number of Significant Machine Learning Systems by Sector, 2002-22 (source: Stanford HAI).

That relates to another key report highlight: The tech industry has raced ahead of academia. "Until 2014, most significant machine learning models were released by academia," the report said. "Since then, industry has taken over. In 2022, there were 32 significant industry-produced machine learning models compared to just three produced by academia. Building state-of-the-art AI systems increasingly requires large amounts of data, computer power, and money -- resources that industry actors inherently possess in greater amounts compared to nonprofits and academia."

Other key takeaways of the report as presented by Stanford include:

- Performance saturation on traditional benchmarks. AI continued to post state-of-the-art results, but year-over-year improvement on many benchmarks continues to be marginal. Moreover, the speed at which benchmark saturation is being reached is increasing. However, new, more comprehensive benchmarking suites such as BIG-bench and HELM are being released.

- AI is both helping and harming the environment. New research suggests that AI systems can have serious environmental impacts. According to Luccioni et al., 2022, BLOOM's training run emitted 25 times more carbon than a single air traveler on a one-way trip from New York to San Francisco. Still, new reinforcement learning models like BCOOLER show that AI systems can be used to optimize energy usage.

- The world's best new scientist ... AI? AI models are starting to rapidly accelerate scientific progress and in 2022 were used to aid hydrogen fusion, improve the efficiency of matrix manipulation, and generate new antibodies.

- The demand for AI-related professional skills is increasing across virtually every American industrial sector. Across every sector in the United States for which there is data (with the exception of agriculture, forestry, fishing, and hunting), the number of AI-related job postings has increased on average from 1.7 percent in 2021 to 1.9 percent in 2022. Employers in the United States are increasingly looking for workers with AI-related skills.

- For the first time in the last decade, year-over-year private investment in AI decreased. Global AI private investment was $91.9 billion in 2022, which represented a 26.7 percent decrease since 2021. The total number of AI-related funding events as well as the number of newly funded AI companies likewise decreased. Still, during the last decade as a whole, AI investment has significantly increased. In 2022 the amount of private investment in AI was 18 times greater than it was in 2013.

- While the proportion of companies adopting AI has plateaued, the companies that have adopted AI continue to pull ahead. The proportion of companies adopting AI in 2022 has more than doubled since 2017, though it has plateaued in recent years between 50 percent and 60 percent, according to the results of McKinsey's annual research survey. Organizations that have adopted AI report realizing meaningful cost decreases and revenue increases.

- Policymaker interest in AI is on the rise. An AI Index analysis of the legislative records of 127 countries shows that the number of bills containing "artificial intelligence" that were passed into law grew from just 1 in 2016 to 37 in 2022. An analysis of the parliamentary records on AI in 81 countries likewise shows that mentions of AI in global legislative proceedings have increased nearly 6.5 times since 2016.

- Chinese citizens are among those who feel the most positively about AI products and services. Americans ... not so much. In a 2022 IPSOS survey, 78 percent of Chinese respondents (the highest proportion of surveyed countries) agreed with the statement that products and services using AI have more benefits than drawbacks. After Chinese respondents, those from Saudi Arabia (76 percent) and India (71 percent) felt the most positive about AI products. Only 35 percent of sampled Americans (among the lowest of surveyed countries) agreed that products and services using AI had more benefits than drawbacks.
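
For readers who want to sanity-check the private-investment figures in the list above, here is a minimal Python sketch that backs out the totals those ratios imply. It rests on two assumptions that are not stated in the article: that "18 times greater" means the 2022 total is 18 times the 2013 total, and that the 26.7 percent decrease is measured against the 2021 total. The function and variable names are hypothetical, and the derived values are rough implications of the quoted numbers, not figures taken from the report.

```python
# Figures quoted in the private-investment takeaway above.
investment_2022_bn = 91.9   # global private AI investment in 2022, USD billions
decline_from_2021 = 0.267   # 26.7 percent decrease since 2021
multiple_of_2013 = 18       # 2022 total described as 18 times the 2013 total


def implied_baselines(current, decline, multiple):
    """Back out the earlier totals implied by the quoted ratios.

    Assumes the decline is relative to the prior-year total and that
    'N times greater' means a simple multiple of the older total.
    """
    prior_year = current / (1 - decline)
    decade_ago = current / multiple
    return prior_year, decade_ago


implied_2021_bn, implied_2013_bn = implied_baselines(
    investment_2022_bn, decline_from_2021, multiple_of_2013
)
print(f"Implied 2021 investment: ${implied_2021_bn:.1f} billion")
print(f"Implied 2013 investment: ${implied_2013_bn:.1f} billion")
```

Under those assumptions, the quoted figures imply roughly $125 billion in private AI investment in 2021 and roughly $5 billion in 2013.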

About the Author

David Ramel is an editor and writer at Converge 360.