
AWS Makes Its Own Generative AI Moves with 'Bedrock' Dev Service, New LLMs

Amazon Web Services (AWS), seeking not to be left behind in the AI race among the cloud giants, is making moves to catch up to Microsoft and Google.

Microsoft, of course, gained a huge head start in the AI race via its partnership with cutting-edge generative AI specialist OpenAI, which prompted Google to declare a "code red" and play catch-up by introducing its own wares and services. Following Microsoft's lead, Google is leveraging advanced large language models (LLMs) for machine learning functionality, both to boost its search experience with Bard, a rival to Microsoft's "new Bing," and in other products such as Docs and Gmail.

Now, the third of the "big three" cloud giants, AWS, is getting in on the action.

The company last week introduced new tools for building generative AI projects on its cloud computing platform that leverage what it calls foundation models (FMs), akin to LLMs like OpenAI's GPT-4. Specifically, the company announced Amazon Bedrock, a new service that makes FMs from Amazon and its partners (AI21 Labs, Anthropic and Stability AI) accessible to developers via an API.

At the same time, to work with Bedrock, AWS introduced Amazon Titan, which provides two new LLMs. One is a generative LLM for tasks such as summarization, text generation, classification, open-ended Q&A and information extraction. The other is an embeddings LLM that translates text inputs into numerical representations (embeddings) that contain the semantic meaning of the text.
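To make the embeddings idea concrete: once text is converted to vectors, sentences with similar meaning end up pointing in similar directions, and that closeness is commonly measured with cosine similarity. The Python sketch below is illustrative only. The 4-dimensional vectors are made up (they are not output from Titan or any real model, which produce much longer vectors), the function names are hypothetical, and no AWS API is called.

```python
import math

# Hypothetical toy embeddings, invented for illustration only.
# A real embeddings model would return much longer vectors for each text.
EMBEDDINGS = {
    "How do I reset my password?": [0.82, 0.10, 0.05, 0.31],
    "I forgot my login credentials": [0.78, 0.15, 0.02, 0.35],
    "What's the weather in Seattle?": [0.05, 0.91, 0.40, 0.02],
}


def cosine_similarity(a, b):
    """Return the cosine of the angle between vectors a and b (1.0 = same direction)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cannot compare a zero vector")
    return dot / (norm_a * norm_b)


def most_similar(query, corpus):
    """Return the corpus text (other than the query itself) whose vector is closest to the query's."""
    q = corpus[query]
    candidates = (
        (text, cosine_similarity(q, vec))
        for text, vec in corpus.items()
        if text != query
    )
    return max(candidates, key=lambda pair: pair[1])[0]


if __name__ == "__main__":
    # The two password-related sentences share almost no words,
    # but their (toy) vectors point in nearly the same direction.
    print(most_similar("How do I reset my password?", EMBEDDINGS))
```

With these toy values, the password question scores about 0.996 against the login-credentials sentence and about 0.18 against the weather question, so the script prints "I forgot my login credentials". Semantic search and retrieval built on embeddings models generally follow this same pattern of comparing vectors rather than matching keywords.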

"Bedrock is the easiest way for customers to build and scale generative AI-based applications using FMs, democratizing access for all builders," the Titan site says. "Bedrock offers the ability to access a range of powerful FMs for text and images -- including Amazon Titan FMs -- through a scalable, reliable, and secure AWS managed service. Amazon Titan FMs are pretrained on large datasets, making them powerful, general-purpose models. Use them as is or privately customize them with your own data for a particular task without annotating large volumes of data."

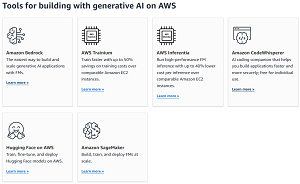

The new service joins the AWS stable of generative AI offerings, which includes Amazon CodeWhisperer -- a coding assistant similar to GitHub Copilot -- and Hugging Face on AWS, for training, fine-tuning and deploying Hugging Face models on the AWS cloud.

[Click on image for larger view.] AWS AI Tools (source: AWS).

[Click on image for larger view.] AWS AI Tools (source: AWS).

"With Bedrock's serverless experience, customers can easily find the right model for what they're trying to get done, get started quickly, privately customize FMs with their own data, and easily integrate and deploy them into their applications using the AWS tools and capabilities they are familiar with (including integrations with Amazon SageMaker ML features like Experiments to test different models and Pipelines to manage their FMs at scale) without having to manage any infrastructure," AWS explained in an April 13 blog post announcing Bedrock.

Bedrock was announced in limited preview, as the company is still working with partners to flesh out the service.

In the same post, AWS announced the general availability of Amazon EC2 Trn1n instances powered by AWS Trainium and Amazon EC2 Inf2 instances powered by AWS Inferentia2, which the company described as the most cost-effective cloud infrastructure for generative AI. The homegrown AWS Trainium and AWS Inferentia chips are designed for training models and running inference in the cloud, respectively.

In addition, the company announced the general availability of Amazon CodeWhisperer, free for individual developers.

"Today, we're excited to announce the general availability of Amazon CodeWhisperer for Python, Java, JavaScript, TypeScript, and C# -- plus 10 new languages, including Go, Kotlin, Rust, PHP and SQL," AWS said. "CodeWhisperer can be accessed from IDEs such as VS Code, IntelliJ IDEA, AWS Cloud9, and many more via the AWS Toolkit IDE extensions. CodeWhisperer is also available in the AWS Lambda console. In addition to learning from the billions of lines of publicly available code, CodeWhisperer has been trained on Amazon code. We believe CodeWhisperer is now the most accurate, fastest, and most secure way to generate code for AWS services, including Amazon EC2, AWS Lambda, and Amazon S3."

All of the above was announced shortly after the cloud giant launched its AWS Generative AI Accelerator, a 10-week program designed to take the most promising generative AI startups around the globe to the next level. The new developments come amid calls to slow down generative AI development, as some industry figures worry that the rush to ship commercial, for-pay products and services is pushing the technology too far, too fast.

About the Author

David Ramel is an editor and writer at Converge 360.