News

Scientists Seek Government Database to Track Harm from Rising 'AI Incidents'

With harmful "AI incidents" on the rise, American scientists are recommending that the U.S. government track them to mitigate future risks.

The establishment of such a database by the Department of Homeland Security (DHS) is just one recommendation made by the Federation of American Scientists (FAS) in response to a White House call for public input on the Biden administration's National AI Strategy.

"This database would serve as a central repository for reported AI-related incidents across industries and sectors in the United States, ensuring that crucial information on breaches, system failures, misuse, and unexpected behavior of AI systems is systematically collected, categorized, and made accessible," the FAS said in a letter to the Office of Science and Technology Policy (OSTP), as explained in last week's post, "Six Policy Ideas For The National AI Strategy."

Harmful AI incidents are already being tracked, of course, on a variety of fronts, including the AI Incidents Database from Partnership on AI and the AI Incident Database, which defines an incident as "an alleged harm or near harm event to people, property, or the environment where an AI system is implicated."

The idea behind such databases is to learn from experience in order to prevent or mitigate bad outcomes, an approach that has been likened to the aviation industry's practice of tracking accidents.

The various AI incident databases and trackers have sprung up to tackle a growing problem, as Newsweek reported earlier this year in "AI Accidents Are Set to Skyrocket This Year."

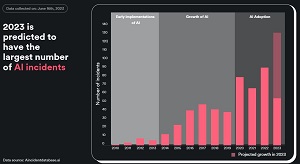

To illustrate that, Surfshark used the AI Incident Database data to produce this chart:

AI Incidents Rising (source: Surfshark/AI Incidents Database).

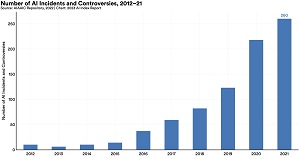

Earlier this year, Virtualization & Cloud Review reported that the AI, Algorithmic, and Automation Incidents and Controversies (AIAAIC) repository also noted a big increase in incidents, as detailed in the article, "From Deepfakes to Facial Recognition, Stanford Report Tracks Big Hike in AI Misuse." The repository is described as a public interest resource detailing incidents and controversies driven by and relating to AI, algorithms and automation.

Number of AI Incidents and Controversies, 2012-21 (source: Stanford HAI, AIAAIC).

Further illustrating the rise in AI incidents, Forbes last month noted "AI 'Incidents' Up 690%: Tesla, Facebook, OpenAI Account For 24.5%."

Faced with mounting evidence of such harmful AI incidents, the FAS said the government database could build on existing trackers and related efforts.

"The database should be designed to encourage voluntary reporting from AI developers, operators, and users while ensuring the confidentiality of sensitive information," the FAS said. "Furthermore, the database should include a mechanism for sharing anonymized or aggregated data with AI developers, researchers, and policymakers to help them better understand and mitigate AI-related risks. The DHS could build on the efforts of other privately collected databases of AI incidents, including the AI Incident Database created by the Partnership on AI and the Center for Security and Emerging Technologies. This database could also take inspiration from other incident databases maintained by federal agencies, including the National Transportation Safety Board's database on aviation accidents."

The group further recommended that the DHS collaborate with the National Institute of Standards and Technology (NIST) to design and maintain the database, including setting up protocols for data validation, categorization, anonymization, and dissemination. "Additionally, it should work closely with AI industry stakeholders, academia, and civil society to ensure that the database is comprehensive, useful, and trusted by stakeholders," the FAS said.
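For readers who think in code, the protocols the FAS describes (validation, categorization, anonymization, and dissemination of aggregated data) might look something like the minimal Python sketch below. It is purely illustrative and does not reflect any actual DHS or NIST design: the `IncidentReport` fields, the category labels (borrowed from the incident types named in the FAS letter), and all function names are assumptions made for this example.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass

# Hypothetical category labels, loosely based on the incident types
# the FAS letter lists; a real taxonomy would be set by the agencies.
CATEGORIES = {"breach", "system_failure", "misuse", "unexpected_behavior"}


@dataclass(frozen=True)
class IncidentReport:
    """A hypothetical voluntary report; field names are assumptions."""
    reporter: str      # sensitive: who filed the report
    sector: str        # e.g. "healthcare", "transportation"
    category: str      # must be one of CATEGORIES
    year: int
    description: str   # sensitive: free-text details


def validate(report: IncidentReport) -> None:
    """Reject reports with an unknown category or blank required fields."""
    if report.category not in CATEGORIES:
        raise ValueError(f"unknown category: {report.category!r}")
    if not report.sector.strip() or not report.description.strip():
        raise ValueError("sector and description are required")


def anonymize(report: IncidentReport) -> dict:
    """Keep only non-identifying fields; drop the reporter and free text."""
    return {"sector": report.sector, "category": report.category, "year": report.year}


def aggregate(reports: list[IncidentReport]) -> Counter:
    """Count validated incidents per (year, category) for sharing."""
    counts: Counter = Counter()
    for report in reports:
        validate(report)
        counts[(report.year, report.category)] += 1
    return counts
```

Under these assumptions, researchers and policymakers would see only the output of `anonymize` or `aggregate`, while the full reports stay confidential, which is one simple way to reconcile voluntary reporting with the data sharing the letter calls for.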

As mentioned, the database recommendation was just one of six provided by the organization, with the others being:

- OSTP should work with a suitable agency to develop and implement a pre-deployment risk assessment protocol that applies to any frontier AI model.

- Adherence to the appropriate risk management framework should be compulsory for any AI-related project that receives federal funding.

- The National Science Foundation (NSF) should increase its funding for "trustworthy AI" R&D.

- The Federal Risk and Authorization Management Program (FedRAMP) should be broadened to cover AI applications contracted for by the federal government.

- OSTP should work with agencies to streamline the process of granting Interested Agency Waivers to AI researchers on J-1 visas.

"The actions we have recommended would all help build American capacity to encourage development and use of AI systems for the public good while mitigating risks," the FAS said. "These actions would be timely; in most cases, they could be implemented by the relevant agencies under existing authorities. They would help align the incentives of AI developers with the public good by tying funding and contracts to stringent security protocols and proper risk management. And they would help inform policymakers and the public of AI-related incidents."

About the Author

David Ramel is an editor and writer at Converge 360.