VMware, Intel Team Up to 'Enable Private AI Everywhere'

VMware announced a collaboration with Intel to make AI more accessible and private across datacenters, public clouds and the edge.

The two companies will deliver a validated AI stack that will enable customers to use their existing VMware and Intel infrastructure and open source software to build and deploy AI models.

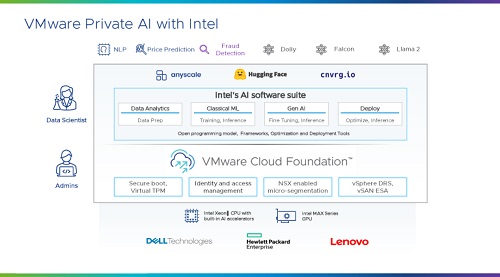

The AI stack will combine the VMware Cloud Foundation infrastructure platform with Intel's AI software suite, Intel Xeon processors with built-in AI accelerators, and Intel Max Series GPUs, a new line of datacenter GPUs for demanding AI workloads. The stack will support end-to-end AI workflows, from data preparation to model training to inferencing, and will leverage the oneAPI framework, an open standard for cross-architecture software development.

The collaboration aims to make AI more accessible and scalable for enterprises, as well as more private and secure. VMware calls this approach Private AI, which allows customers to fine-tune AI models and run inference on them using their own corporate data, without compromising on choice, performance or sustainability. Private AI can enable various use cases, such as AI-assisted code generation, customer service, and recommendation systems.

Core tenets of Private AI include:

- Highly distributed: Compute capacity and trained AI models reside adjacent to where data is created, processed, and/or consumed, whether the data resides in a public cloud, virtual private cloud, enterprise datacenter, or at the edge. This will require an AI infrastructure that is capable of seamlessly connecting disparate data locales in order to ensure centralized governance and operations for all AI services.

- Data privacy and control: An organization's data remains private to the organization and is not used to train, tune, or augment any commercial or OSS models without the organization's consent. The organization maintains full control of its data and can leverage other AI models for a shared data set as its business needs require.

- Access Control and auditability: Access controls are in place to govern access and changes to AI models, associated training data, and applications. Audit logs and associated controls are also essential to ensure that compliance mandates -- regulatory or otherwise -- are satisfied.

"VMware Private AI brings compute capacity and AI models to where enterprise data is created, processed, and consumed, whether in a public cloud, enterprise datacenter, or at the edge, in support of traditional AI/ML workloads and generative AI," VMware said last week in a news release. "VMware and Intel are enabling the fine-tuning of task specific models in minutes to hours and the inferencing of large language models at faster than human communication using the customer's private corporate data."

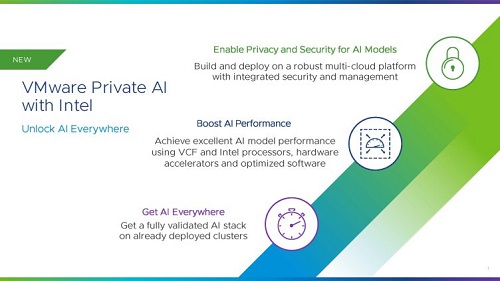

VMware Private AI with Intel (source: VMware).

The value that enterprises can expect from the deal includes:

- Enable privacy and security for AI models: VMware Private AI's architectural approach for AI services enables privacy and control of corporate data and integrated security and management. This partnership will help enterprises build and deploy private and secure AI models with integrated security capabilities in VCF and its components.

- Boost AI Performance: Achieve excellent AI and LLM performance using the integrated capabilities built into VCF and Intel processors, hardware accelerators, and optimized software. For example, vSphere, one of the core components of VCF, includes Distributed Resource Scheduler (DRS), which improves AI workload management by grouping hosts into resource clusters for different applications. DRS ensures that VMs have access to the right amount of computing resources, which prevents resource bottlenecks and optimizes resource utilization.

- Get AI Everywhere: VMware and Intel are providing enterprises with a fully validated AI stack on already deployed clusters. This stack enables enterprises to do data prep, machine learning, fine-tuning, and inference optimization using Intel processors, hardware accelerators, Intel's AI software suite, and VCF across their on-premises environments.

VMware Private AI with Intel Architecture (source: VMware).

Use cases, according to a blog post, include:

- Code Generation: Enterprises can use their models without the risk of losing their IP or data and can accelerate developer velocity by enabling code generation.

- Contact center resolution experience: Enterprises can fine-tune models against their internal documentation and knowledge base articles, including private support data, and, in turn, realize more efficient customer service and support with meaningful reductions in human interactions in support/service incidents.

- Classical Machine Learning: Classical ML models are used for a variety of real-world applications across the financial services, health and life sciences, retail, research, and manufacturing industries. Popular ML use cases include customized marketing, visual quality control in manufacturing, personalized medicine, and retail demand forecasting.

- Recommendation Engines: Enterprises can enhance experiences by suggesting or recommending additional products to consumers. These can be based on various criteria, including past purchases, search history, demographic information, and other factors.

IBM is also getting in on the action, as VMware said: "IBM and VMware are building on VMware Private AI to enable enterprises to access IBM watsonx in private, on-premises environments and hybrid cloud for the secure training and fine-tuning their models with the watsonx platform."

About the Author

David Ramel is an editor and writer at Converge 360.