In-Depth

Microsoft Data Governance: Preparing for the Copilots

You might have heard of Microsoft's newest service for businesses, Copilot. At the time of writing, it's popping up in many different Microsoft applications. This isn't going to be an article about how useful Microsoft 365 Copilot is (that'll be next month), but about what you need to do to prepare your business for these different Copilots. If you don't have good data governance, you'll likely have some "interesting times" ahead if you roll out Copilot broadly.

Remember Delve, Microsoft's service that used machine learning to suggest documents, created by people you work with, that you might want to look at? Many businesses freaked out when they realized that their permissions were far too lax, and users were offered documents they really shouldn't have had access to. Well, if you implement Microsoft 365 Copilot, not only will those documents be part of the corpus it draws on when answering your questions or drafting new content, but, unlike with Delve, it won't be obvious to the user where the data came from.

Microsoft offers advice on how to prepare in this blog post, this Ignite session, this Microsoft Mechanics video and this training module, but with the hype around generative AI, and the rush not to be left out of the "productivity boost," I suspect this advice might be lost in the noise.

In this article I'll cover Hornetsecurity's 365 Permission Manager, a third-party service that helps considerably with permissions issues, and also discuss Microsoft Purview and what's new in the Purview space.

So, let's assume your CIO has tasked you with rolling out Microsoft 365 Copilot to some early adopters in your business. Start by getting your internal (and external) sharing of documents in Teams, SharePoint and OneDrive sites under control. All the different Copilots operate with the permissions of the user, accessing data through Microsoft Graph, so they can only reach the documents and data that the user already has access to.

365 Permission Manager

I'm always amazed when I read "best practices" documentation for data governance, whether from Microsoft or others. It sounds like large businesses have teams of IT people whose only job is to know and record where all data in the organization is stored, right-size the permissions and regularly review access, to make sure that someone who needed access last week but has since moved on to another role no longer has it. Among my SMB clients, this is definitely NOT the case, and I suspect even large organizations don't have this down pat either, unless they're in a heavily regulated industry or are a military contractor.

One unique offering for gaining control, specifically for SharePoint Online and OneDrive for Business data storage, is Hornetsecurity's 365 Permission Manager. To understand why it's such a good solution, let's first recap how the built-in tools do it.

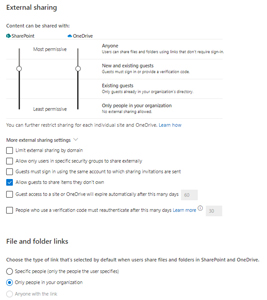

For SharePoint and OneDrive you can set tenant-wide external sharing settings, starting with Anyone (just anonymous links to documents, please don't use this one), New and existing guests (the act of sharing a document with an external user invites them to your directory if they don't already have a guest account) and Existing guests (you must have some other process for inviting external collaborators) and Only people in your organization.

[Click on image for larger view.] SharePoint Sharing Settings

[Click on image for larger view.] SharePoint Sharing Settings

You can then further restrict external sharing for individual sites, but there's no centralized console to track which sharing settings apply to which sites. Nor is there an easy way to see the internal sharing permissions of all sites in one place.
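To make the relationship between the tenant-wide setting and per-site settings concrete, here's a minimal Python sketch. It's a mental model only, not a SharePoint API: the enum and function names are hypothetical, and it assumes (as SharePoint documents) that a site's setting can be the same as or more restrictive than the tenant's, never more permissive.

```python
from enum import IntEnum


class SharingLevel(IntEnum):
    """Hypothetical model of the external sharing levels, least to most permissive."""
    ONLY_ORGANIZATION = 0        # Only people in your organization
    EXISTING_GUESTS = 1          # Existing guests
    NEW_AND_EXISTING_GUESTS = 2  # New and existing guests
    ANYONE = 3                   # Anyone (anonymous links)


def effective_site_sharing(tenant: SharingLevel, site: SharingLevel) -> SharingLevel:
    """A site can only tighten the tenant setting, so the more restrictive level wins."""
    return min(tenant, site)


def sites_more_open_than(target: SharingLevel,
                         sites: dict[str, SharingLevel],
                         tenant: SharingLevel) -> list[str]:
    """List sites whose effective sharing is more permissive than the target level."""
    return sorted(name for name, level in sites.items()
                  if effective_site_sharing(tenant, level) > target)


# Example: the tenant allows new and existing guests, but we want
# no site more open than "existing guests".
sites = {
    "Sales": SharingLevel.ANYONE,  # e.g. configured before the tenant was tightened
    "HR": SharingLevel.ONLY_ORGANIZATION,
    "Projects": SharingLevel.NEW_AND_EXISTING_GUESTS,
}
print(sites_more_open_than(SharingLevel.EXISTING_GUESTS, sites,
                           SharingLevel.NEW_AND_EXISTING_GUESTS))
```

Running it prints `['Projects', 'Sales']`: both sites end up at "new and existing guests" once the tenant cap is applied. Without a central console, building even this simple per-site view is the manual work the built-in tools leave to you.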

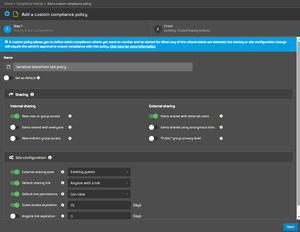

365 Permissions Manager uses the same underlying engine but lets you assign in-built or custom policies to each individual site. These policies define both internal and external sharing settings, making it much easier to govern a sprawling SharePoint estate (and each user's OneDrive storage, as that's also just a SharePoint site under the hood).

[Click on image for larger view.] Creating a Custom Compliance Policy for Sharing

[Click on image for larger view.] Creating a Custom Compliance Policy for Sharing

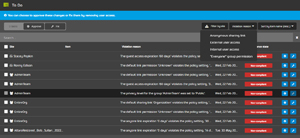

You'll then get reports on how compliant existing sites are with your policies.

[Click on image for larger view.] 365 Permission Manager Dashboard

[Click on image for larger view.] 365 Permission Manager Dashboard
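Conceptually, a per-site policy plus a compliance report boils down to comparing each site's observed sharing state with the policy assigned to it. The sketch below illustrates that idea only; it is not how 365 Permission Manager is implemented, and the `SharingPolicy` and `SiteSharing` types, their fields and the violation messages are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SharingPolicy:
    """Hypothetical policy: how open a site may be, internally and externally."""
    name: str
    max_external_level: int      # 0 = org only, 1 = existing guests, 2 = new guests, 3 = anyone
    allow_org_wide_links: bool   # may content be shared with everyone in the organization?


@dataclass(frozen=True)
class SiteSharing:
    """Observed sharing state of one site (hypothetical fields)."""
    site: str
    external_level: int
    has_org_wide_links: bool


def violations(policy: SharingPolicy, observed: SiteSharing) -> list[str]:
    """Return human-readable reasons why a site breaks its assigned policy."""
    issues = []
    if observed.external_level > policy.max_external_level:
        issues.append("external sharing exceeds policy")
    if observed.has_org_wide_links and not policy.allow_org_wide_links:
        issues.append("organization-wide links not allowed")
    return issues


def compliance_report(assigned: dict[str, SharingPolicy],
                      observed: list[SiteSharing]) -> dict[str, list[str]]:
    """Map each non-compliant site to its violations; compliant sites are omitted."""
    report = {}
    for state in observed:
        issues = violations(assigned[state.site], state)
        if issues:
            report[state.site] = issues
    return report


confidential = SharingPolicy("Confidential", max_external_level=0, allow_org_wide_links=False)
collaboration = SharingPolicy("Collaboration", max_external_level=2, allow_org_wide_links=True)
print(compliance_report(
    {"HR": confidential, "Sales": collaboration},
    [SiteSharing("HR", 1, True), SiteSharing("Sales", 2, True)],
))
```

Here only the HR site is reported, with two violations; Sales is within its more permissive collaboration policy, which is the point of assigning policies per site rather than one rule for the whole tenant.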

Another very useful feature is the ability to pick a specific user and see exactly which SharePoint, OneDrive and Teams sites they have access to. Imagine a scenario where a user is suspected of stealing intellectual property (IP) before leaving the organization, or their account has been compromised and someone has been using their access to steal data. Seeing exactly what they have access to within a few seconds will save many hours compared with a manual inventory. When you create a policy, you also define how often the site will be audited, and whether users will be notified of policy violations. You can also use Explore Sites to view the sharing settings for each site, then click Fix to remediate them according to policy, or Approve if there's a business reason for the deviation.
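The "what can this user reach?" view is essentially an inversion of site membership: instead of listing who has access to each site, you list which sites each user can reach, expanding group memberships along the way. A minimal sketch, assuming a hypothetical in-memory snapshot of site principals and group members (it expands groups only one level and ignores nested groups, sharing links and per-item permissions, which a real tool would have to handle):

```python
from collections import defaultdict


def access_by_user(site_members: dict[str, set[str]],
                   groups: dict[str, set[str]]) -> dict[str, set[str]]:
    """Invert site -> principals into user -> sites, expanding groups one level."""
    result: dict[str, set[str]] = defaultdict(set)
    for site, principals in site_members.items():
        for principal in principals:
            # A principal is either a known group (expand it) or an individual user.
            for user in groups.get(principal, {principal}):
                result[user].add(site)
    return dict(result)


# Hypothetical snapshot: sites and the users or groups granted access to them.
site_members = {
    "Finance": {"finance-team", "ceo@contoso.com"},
    "Marketing": {"alice@contoso.com"},
    "Alice OneDrive": {"alice@contoso.com"},
}
groups = {"finance-team": {"alice@contoso.com", "bob@contoso.com"}}

access = access_by_user(site_members, groups)
print(sorted(access["alice@contoso.com"]))
```

This prints `['Alice OneDrive', 'Finance', 'Marketing']`, showing that Alice reaches Finance only through her group membership, which is exactly the kind of indirect access that's easy to miss in a manual inventory.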

When you first introduce 365 Permission Manager, you're likely to find a lot of ungoverned sharing, both internal and external, and the handy To Do module lets you prioritize the list of policy violations and fix them in bulk. Of course, data governance isn't something an IT department can fix on its own; the built-in reports can be exported and used when working through the issues with representatives from different departments.

To Do List with Approve or Fix Options

I've found 365 Permission Manager to be a very comprehensive tool, not only for applying data governance to this difficult area of Microsoft 365 but also for maintaining that governance over time.

Microsoft offers a few new governance tools in the recently released SharePoint Premium, such as Data Access Governance (DAG), which gives you reports on site sharing settings, and site access reviews, which let site owners remediate over-permissioned sites. However, they don't go to the same depth as 365 Permission Manager.

There might be scenarios where you want users to have access to particular documents, but don't want their Copilot instances to use them. For a SharePoint site, you can disable search indexing, which stops Copilot from reaching that content (and also stops users from easily searching for documents in the site).
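Put together with the earlier point that Copilot works with the user's own permissions, the rule described here can be modeled as two conditions that must both hold. This is a deliberately simplified sketch, not Copilot's actual retrieval logic: the `Document` type and `copilot_candidates` function are hypothetical, and it assumes access is granted per site and that a non-indexed site is invisible to Copilot.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    path: str
    site: str


def copilot_candidates(user_sites: set[str],
                       docs: list[Document],
                       indexed_sites: set[str]) -> list[str]:
    """Documents Copilot could draw on for this user in this simplified model:
    the user must have access to the site AND the site must be search-indexed."""
    return [d.path for d in docs
            if d.site in user_sites and d.site in indexed_sites]


docs = [
    Document("/Sales/pricing.docx", "Sales"),
    Document("/Board/minutes.docx", "Board"),  # user has access, but indexing is disabled
    Document("/Legal/nda.docx", "Legal"),      # indexed, but the user has no access
]
print(copilot_candidates({"Sales", "Board"}, docs, indexed_sites={"Sales", "Legal"}))
```

Only `/Sales/pricing.docx` is returned. The Board minutes stay available to the user when opened directly but are kept out of this model's Copilot corpus, which mirrors the trade-off described above: the same setting also hides the site from regular search.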

Another preparation step is to inventory your Teams. If you have many similar Teams with overlapping content, apply governance before rolling out Copilot. The same goes for documents. If you have six copies of the same document in different folders, you might know which one to use, but Copilot won't know the difference. For users or businesses that are hoarders, having 15 years' worth of sales presentations might feel good, but remember, Copilot will base its information on all of them, so a good rule of thumb might be to keep only three years' worth and archive the rest (subject to whatever regulations you must comply with). If you have the appropriate licensing, consider using retention policies to help with this.
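Both clean-up tasks (spotting identical copies and finding content older than your chosen cut-off) are simple to reason about once you have an export of file paths, contents and modification dates. The sketch below assumes such a hypothetical export held in memory; it only flags byte-for-byte duplicates (via SHA-256 hashes, so near-identical versions won't match) and approximates a year as 365 days. It's a way to size the problem, not a substitute for retention policies.

```python
import hashlib
from collections import defaultdict
from datetime import datetime, timedelta


def find_duplicates(files: dict[str, bytes]) -> list[list[str]]:
    """Group paths whose contents are byte-for-byte identical (same SHA-256 hash)."""
    by_hash: dict[str, list[str]] = defaultdict(list)
    for path, content in files.items():
        by_hash[hashlib.sha256(content).hexdigest()].append(path)
    return [sorted(paths) for paths in by_hash.values() if len(paths) > 1]


def archive_candidates(modified: dict[str, datetime], now: datetime,
                       keep_years: int = 3) -> list[str]:
    """Paths last modified before the cut-off (years approximated as 365 days)."""
    cutoff = now - timedelta(days=365 * keep_years)
    return sorted(path for path, ts in modified.items() if ts < cutoff)


files = {"a/deck.pptx": b"v1", "b/deck.pptx": b"v1", "c/other.pptx": b"v2"}
print(find_duplicates(files))

modified = {"old.pptx": datetime(2019, 6, 1), "new.pptx": datetime(2023, 6, 1)}
print(archive_candidates(modified, now=datetime(2024, 1, 15)))
```

The first call reports the two identical decks as one duplicate group; the second flags only the 2019 presentation as falling outside a three-year window.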

Managing both privileged account roles and user access is also crucial; this Microsoft Mechanics video covers both, including the use of Privileged Identity Management (PIM) and access packages.

Microsoft Purview

I last looked at information governance using Purview back in March 2023 here, and a lot has happened since then. Beyond the basic sharing permissions covered above, the next step in preparing for Microsoft 365 Copilot is adopting various parts of the Purview suite of tools. I'm aware that, for an IT admin in an organization, building a comprehensive data governance program (in which Purview is but a small technology building block next to gigantic heaps of business, people, process and culture change blocks) is beyond the scope of this article. Nevertheless, I find that knowing what the technology is capable of provides a good starting point for the conversation.

Microsoft Purview is the umbrella term for the services that identify and protect sensitive data across Microsoft 365, Azure, on-premises environments, third-party clouds and Software-as-a-Service (SaaS) applications.

One cool new feature is the ability to classify files using their context, not just their content. This lets you use file extensions, words in the file name or the file's creator as predicates when you build your labeling rules. There's also site labeling, so that you can quickly label all files in a document library, plus deeper support for PDF files, enabling auto-labeling of PDF files at rest and the option to open protected PDFs in SharePoint, OneDrive and Teams.
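To illustrate what "context, not content" means, here's a hypothetical rule that looks only at a file's metadata. It isn't Purview's rule syntax or API: the `ContextRule` and `FileContext` types are invented for this sketch, and it assumes every configured predicate must match (AND semantics), whereas real labeling policies may let you combine conditions differently.

```python
from dataclasses import dataclass, field
from pathlib import PurePosixPath
from typing import Optional


@dataclass(frozen=True)
class FileContext:
    """Metadata about a file; note that no file content is inspected."""
    name: str
    created_by: str


@dataclass
class ContextRule:
    """Hypothetical labeling rule built from context predicates only."""
    label: str
    extensions: set[str] = field(default_factory=set)     # e.g. {".pdf"}
    name_keywords: set[str] = field(default_factory=set)  # words in the file name
    creators: set[str] = field(default_factory=set)       # who created the file

    def matches(self, f: FileContext) -> bool:
        # Every configured predicate must match; empty predicates are ignored.
        name = f.name.lower()
        if self.extensions and PurePosixPath(name).suffix not in self.extensions:
            return False
        if self.name_keywords and not any(k.lower() in name for k in self.name_keywords):
            return False
        if self.creators and f.created_by not in self.creators:
            return False
        return True


def first_matching_label(rules: list[ContextRule], f: FileContext) -> Optional[str]:
    """Return the label of the first rule that matches, or None."""
    for rule in rules:
        if rule.matches(f):
            return rule.label
    return None


contracts = ContextRule("Confidential", extensions={".pdf"},
                        name_keywords={"contract"}, creators={"legal@contoso.com"})
print(first_matching_label([contracts], FileContext("Supplier-Contract.PDF", "legal@contoso.com")))
```

This prints `Confidential`, because the extension, a keyword in the name and the creator all match, without opening the file at all.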

Another new blade (in private preview at the time of writing) is AI Hub in Purview, which tracks user prompts across more than 100 different generative AI tools and assigns associated risk levels (low, medium and high) across your organization.

Note that, specifically for Microsoft 365 Copilot, when documents have sensitivity labels that apply encryption, the user needs not just the VIEW usage right but also EXTRACT (more commonly known as Copy) for the document to be surfaced to Copilot; more information here. If you ask Copilot to create a new Word document or PowerPoint presentation based on a document with a sensitivity label, the new content inherits the label, and if there are multiple documents as sources, the most restrictive label is used.
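The two rules in this paragraph translate directly into a couple of small checks. In this sketch the function names and label names are hypothetical, and it assumes you can express "most restrictive" as a numeric priority per label (mirroring how sensitivity labels are ordered by priority in Purview); it models the behavior described above rather than calling any Microsoft API.

```python
from typing import Optional


def copilot_can_use(usage_rights: set[str]) -> bool:
    """VIEW alone isn't enough: EXTRACT (Copy) is also required for Copilot to surface it."""
    return {"VIEW", "EXTRACT"} <= usage_rights


def inherited_label(source_labels: list[str], priority: dict[str, int]) -> Optional[str]:
    """New content inherits the most restrictive label among its sources.
    Assumes a higher priority number means a more restrictive label."""
    if not source_labels:
        return None
    return max(source_labels, key=priority.__getitem__)


priority = {"Public": 0, "General": 1, "Confidential": 2, "Highly Confidential": 3}
print(copilot_can_use({"VIEW"}))                                      # False
print(inherited_label(["General", "Confidential", "Public"], priority))  # Confidential
```

A draft built from General, Confidential and Public sources therefore comes out labeled Confidential, and a user holding only VIEW on an encrypted file won't see Copilot use that file at all.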

Auto-labeling policies for sensitive data will also automatically label files and emails generated by Copilot. Users can also apply labels that let them define their own permissions, restricting access to a few people for documents related to a sensitive project, for example.

If you have deployed communication compliance policies, these will monitor Copilot interactions and flag any inappropriate language. And if you're using retention policies, they will apply to your users' interactions with Copilot, allowing you to either keep them or delete them after a period that you define.

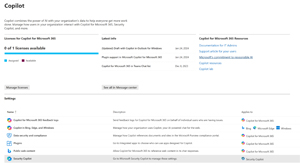

[Click on image for larger view.] The New Copilot Blade in the M365 Admin Center

[Click on image for larger view.] The New Copilot Blade in the M365 Admin Center

I covered Microsoft Fabric, the new SaaS offering that provides a single data warehouse and data lake for a business, here. Purview Information Protection labels are now available in Fabric, and Fabric items show up in the Purview data map. You can apply sensitivity labels to Azure Data Lake Storage, Azure Blob Storage, Azure SQL and Amazon S3 storage, and you can scan content in Amazon Redshift and in Tableau.

Data Loss Prevention incidents are now best investigated in the Microsoft Defender XDR portal, right alongside any other security alerts. Of course, Security Copilot is integrated with Microsoft Purview, but I'll withhold judgement until I get some hands-on time with it. If your organization uses the Power Platform tools, their underlying data store, Dataverse, is now integrated with Purview.

Interactions with Copilot are logged in the unified audit log, and each record includes the service in which the interaction took place and any files referenced during the interaction. You can also use Content Search and eDiscovery in M365 to retrieve the text of users' prompts.
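Once you've exported audit records, summarizing who used Copilot, in which app and against which files, is a small filtering job. The sketch below assumes a simplified, hypothetical record shape: dictionaries with `Operation`, `UserId` and an `AuditData` JSON string, Copilot records marked with the operation `CopilotInteraction`, and the app and files nested under `CopilotEventData` as `AppHost` and `AccessedResources`. Check these field names against your own export before relying on them.

```python
import json


def copilot_interactions(records: list[dict]) -> list[dict]:
    """Summarize Copilot interactions from exported audit records (assumed shape)."""
    summary = []
    for record in records:
        if record.get("Operation") != "CopilotInteraction":
            continue  # skip every other audited activity
        data = json.loads(record.get("AuditData", "{}"))
        event = data.get("CopilotEventData", {})
        summary.append({
            "user": record.get("UserId"),
            "app": event.get("AppHost"),  # the service where the interaction happened
            "files": [res.get("Name") for res in event.get("AccessedResources", [])],
        })
    return summary


records = [
    {"Operation": "CopilotInteraction", "UserId": "alice@contoso.com",
     "AuditData": json.dumps({"CopilotEventData": {
         "AppHost": "Word", "AccessedResources": [{"Name": "Q4 plan.docx"}]}})},
    {"Operation": "FileAccessed", "UserId": "bob@contoso.com", "AuditData": "{}"},
]
print(copilot_interactions(records))
```

Only Alice's interaction is returned, together with the Word host and the file it referenced. Note that the prompt text itself isn't part of this summary; as mentioned above, you retrieve that through Content Search and eDiscovery.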

Conclusion

Now that Microsoft 365 Copilot is available to businesses of every size (provided they're willing to pay for at least a year, per user), we're in for an interesting 2024. (Over-)hyped technology tends to get a rude awakening when it reaches ordinary people in ordinary business roles. This is especially true when there's no training or adoption plan; check out Microsoft's excellent adoption resources here.

It's interesting to note how comprehensive Microsoft's approach is, both in its advice about permissions and sharing governance and in all the "Copilot enabled" tools in Purview for monitoring and governing AI interactions. This is unusual for version 1 of a Microsoft product, where functionality often takes precedence over manageability and admin oversight. However, M365 Copilot has been available to larger businesses since November, and I suspect Microsoft has received a lot of feedback from them in the last few months. More importantly, though, this is something Microsoft needs to get right. Large language models (LLMs) represent a whole new technological approach, and if organizations are burned by a lack of control, auditing and oversight, they'll go elsewhere, something Microsoft can't afford.

In the broader context of the different Copilots, make sure you do your due diligence before ordering licenses, and work out who needs which Copilot license for which service. My recommendation is to deploy it initially only to users in roles that generate a lot of content, such as marketing and sales, and to train those users. Getting the best results out of any LLM requires good prompts, and that's a skill that takes time to develop.

I added my own license yesterday, as I write this, and will report back on my experience in February.

But I think it's important to heed Microsoft's recommendation: get your data estate in order before a wide deployment of Microsoft 365 Copilot in your business.