
Meta's Llama 3 Cracks Top 5 of AI Leaderboard, Only Non-Proprietary Model

Meta's brand-new Llama 3 large language model (LLM) debuted in the top 5 of an AI leaderboard, the only non-proprietary model to make the list.

Meta announced the new open-source model family yesterday (April 18), describing it as "the most capable openly available LLM to date."

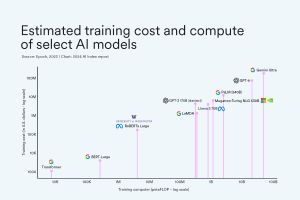

That openness matters because the generative AI space has been dominated by proprietary models, which outperform open-source models, as detailed in a recent report ("AI Report: Closed Models Outperform Open Models, at Staggering Cost").

Training Costs (source: HAI).

In a nutshell, proprietary models usually outperform open-source models, which are typically produced by researchers or government organizations, because reaching state-of-the-art performance requires huge, expensive foundation model training. It is rarer for industry players to release open models.

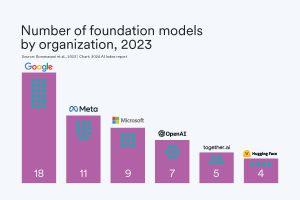

Cloud giants such as Google and Microsoft have dominated the AI space in terms of the number of foundation models produced, but Meta has inserted itself among those major players, ranking between the two companies.

Leading Players (source: HAI).

While Google led in producing foundation models in 2023, training its top-tier Gemini Ultra model was estimated to cost nearly $200 million.

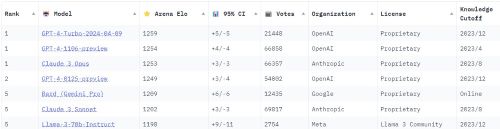

Against that backdrop, it's remarkable that an open model like Llama 3 debuted tied at No. 5 on the LMSYS Chatbot Arena Leaderboard.

LMSYS Chatbot Arena Leaderboard (source: Chatbot Arena).

Chatbot Arena is an open-source research project developed by members of the Large Model Systems Organization (LMSYS Org) and UC Berkeley SkyLab, according to the site, which describes its mission as "to build an open platform to evaluate LLMs by human preference in the real-world." The crowdsourced platform has collected more than 500,000 human pairwise comparisons, which it uses to rank LLMs and display their ratings.
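To give a rough idea of how pairwise votes can become a ranking, here is a minimal Python sketch of an online Elo update. It is an illustration under stated assumptions, not Chatbot Arena's actual code: LMSYS has described using Elo-style and Bradley-Terry ratings, and the K-factor of 4, the starting rating of 1000 and the model names below are hypothetical choices made for this example.

```python
from collections import defaultdict


def expected_score(r_a: float, r_b: float) -> float:
    """Probability that A beats B under the Elo logistic model (400-point scale)."""
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400))


def elo_update(r_a: float, r_b: float, score_a: float, k: float = 4.0) -> tuple[float, float]:
    """Return updated (r_a, r_b) after one head-to-head vote.

    score_a is 1.0 if A won, 0.0 if B won, 0.5 for a tie.
    k (assumed value) controls how far a single vote moves the ratings.
    """
    e_a = expected_score(r_a, r_b)
    delta = k * (score_a - e_a)
    # Zero-sum update: whatever A gains, B loses.
    return r_a + delta, r_b - delta


def rate(votes, k: float = 4.0, initial: float = 1000.0) -> dict[str, float]:
    """Replay (model_a, model_b, score_a) votes in order and return final ratings."""
    # Every model starts at the same (assumed) initial rating.
    ratings: dict[str, float] = defaultdict(lambda: initial)
    for model_a, model_b, score_a in votes:
        ratings[model_a], ratings[model_b] = elo_update(
            ratings[model_a], ratings[model_b], score_a, k
        )
    return dict(ratings)


if __name__ == "__main__":
    # Hypothetical model names and votes, for illustration only.
    votes = [
        ("model-x", "model-y", 1.0),
        ("model-y", "model-z", 0.5),
        ("model-z", "model-x", 0.0),
    ]
    for name, rating in sorted(rate(votes).items(), key=lambda kv: -kv[1]):
        print(f"{name}: {rating:.1f}")
```

Because an online Elo update depends on the order in which votes arrive, a batch fit such as the Bradley-Terry model, which estimates all ratings at once from the full set of comparisons, can give more stable rankings.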

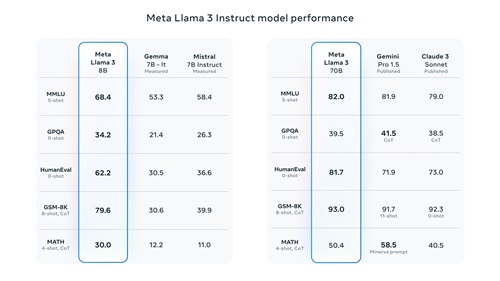

As for that "most capable" claim, Meta provided this benchmark comparison:

Meta Llama 3 Instruct Model Performance (source: Meta).

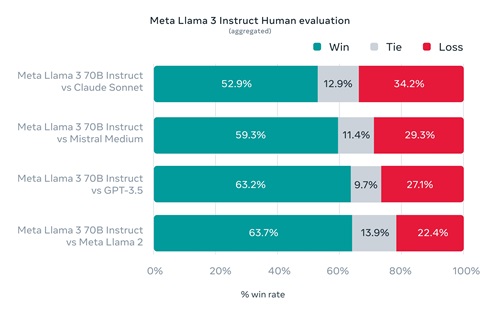

Meta also compared Llama 3 with other models on a new human evaluation set, which yields real-world rankings somewhat like those of Chatbot Arena:

Meta Llama 3 Instruct Human Evaluation (source: Meta).

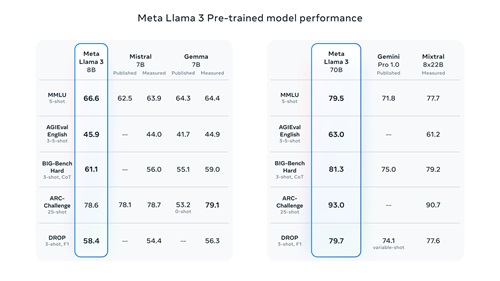

The company also claimed its pretrained model establishes a new state of the art for LLMs at its scale.

Meta Llama 3 Pre-trained Model Performance (source: Meta).

Meta did not disclose training costs for Llama 3. When we asked Meta AI, it said: "Llama 2, a large language model developed by Meta, was trained on a cluster of 512 NVIDIA A100 GPUs for 21 days, with an estimated cost of around $1.8 million," going on to infer that "it's likely that training Llama 3 would have cost significantly more. For context, Llama 3 has 70 billion parameters, compared to Llama 2's 35 billion parameters." That answer should be taken with caution: Llama 2 was released with 7 billion, 13 billion and 70 billion parameters (there was no 35 billion version), and Llama 3 launched with 8 billion and 70 billion parameters.

Key takeaways from Meta's announcement, as presented by the company, include:

- Today, we're introducing Meta Llama 3, the next generation of our state-of-the-art open source large language model.

- Llama 3 models will soon be available on AWS, Databricks, Google Cloud, Hugging Face, Kaggle, IBM WatsonX, Microsoft Azure, NVIDIA NIM, and Snowflake, and with support from hardware platforms offered by AMD, AWS, Dell, Intel, NVIDIA, and Qualcomm.

- We're dedicated to developing Llama 3 in a responsible way, and we're offering various resources to help others use it responsibly as well. This includes introducing new trust and safety tools with Llama Guard 2, Code Shield, and CyberSec Eval 2.

- In the coming months, we expect to introduce new capabilities, longer context windows, additional model sizes, and enhanced performance, and we'll share the Llama 3 research paper.

- Meta AI, built with Llama 3 technology, is now one of the world's leading AI assistants that can boost your intelligence and lighten your load -- helping you learn, get things done, create content, and connect to make the most out of every moment. You can try Meta AI here.

Users can also try the 70-billion-parameter and 8-billion-parameter Llama 3 models on the site of AI chip specialist Nvidia.

About the Author

David Ramel is an editor and writer at Converge 360.