Cloud Giant AI Models: Microsoft Goes Small with Phi-3-Mini, AWS Goes Big with Meta's Llama 3

Cloud giants continue to up their AI games, with brand-new developments including a tiny language model suitable for a phone from Microsoft, while Amazon's Bedrock AI service now sports Meta's Llama 3 models and much more.

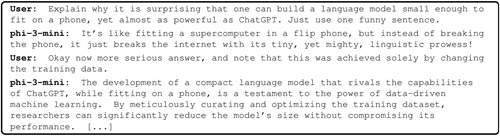

A Microsoft paper yesterday (April 22) introduced a new language model, phi-3-mini, which is a 3.8 billion parameter model trained on 3.3 trillion tokens. The model's performance is claimed to be on par with much larger models such as Mixtral 8x7B and GPT-3.5, despite being small enough to be deployed on a phone. The model is based on a scaled-up version of the dataset used for phi-2, which includes heavily filtered web data and synthetic data. The model is also aligned for robustness, safety, and chat format. Microsoft has also introduced phi-3-small and phi-3-medium models, which are significantly more capable than phi-3-mini.
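The "small enough for a phone" claim can be sanity-checked with back-of-the-envelope arithmetic on the weights alone. The sketch below is only an estimate: the numeric precisions it tries (16, 8, and 4 bits per weight) are assumptions not stated in this article, and it ignores activations, the attention cache, and runtime overhead.

```python
def weight_memory_gib(num_params: float, bits_per_weight: int) -> float:
    """Approximate memory needed to hold the model weights, in GiB."""
    total_bytes = num_params * bits_per_weight / 8
    return total_bytes / 2**30


# Parameter count reported for phi-3-mini.
PHI3_MINI_PARAMS = 3.8e9

# Assumed precisions; the article does not say which one a phone build uses.
for bits in (16, 8, 4):
    gib = weight_memory_gib(PHI3_MINI_PARAMS, bits)
    print(f"{bits:>2}-bit weights: {gib:.2f} GiB")
```

Under these assumptions the weights need roughly 7 GiB at 16 bits but under 2 GiB at 4 bits, which is why aggressive quantization matters for on-device use.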

Phi-3-Mini Explains Itself (source: Microsoft).

Phi-2 was introduced last December when Microsoft touted the "surprising power of small language models." Phi-2 was a 2.7 billion parameter model claimed to match the performance of models 25 times larger trained on regular data.

While the "go small" approach provides benefits, there are trade-offs involved.

"In terms of LLM capabilities, while phi-3-mini model achieves similar level of language understanding and reasoning ability as much larger models, it is still fundamentally limited by its size for certain tasks," the paper said. "The model simply does not have the capacity to store too much 'factual knowledge', which can be seen for example with low performance on TriviaQA. However, we believe such weakness can be resolved by augmentation with a search engine."
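The search-engine augmentation the paper suggests can be illustrated with a toy example: look up relevant text first, then place it in the prompt so the small model does not have to recall the fact itself. Everything here is hypothetical and for illustration only. The in-memory document list stands in for a real search engine, the word-overlap scoring is a deliberately crude stand-in for real ranking, and the prompt wording is not taken from the paper.

```python
import re


def tokenize(text: str) -> set:
    """Lowercase a string and split it into a set of word tokens."""
    return set(re.findall(r"\w+", text.lower()))


def retrieve(query: str, documents: list, k: int = 2) -> list:
    """Return the k documents sharing the most words with the query."""
    query_tokens = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_tokens & tokenize(doc)),
        reverse=True,
    )
    return ranked[:k]


def build_augmented_prompt(question: str, documents: list, k: int = 2) -> str:
    """Prepend retrieved snippets to the question as context for the model."""
    context = "\n".join(f"- {snippet}" for snippet in retrieve(question, documents, k))
    return (
        "Answer the question using the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )
```

The resulting string would then be passed to the language model, which only needs to read the answer from the supplied context rather than store it in its weights.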

Amazon Bedrock

Amazon Web Services (AWS), meanwhile, boosted its AI service in several ways, including adding the hot new Llama 3 from Meta, an open foundation model that debuted in the top five of an AI leaderboard, the only open model to reach that rank (see "Meta's Llama 3 Cracks Top 5 of AI Leaderboard, Only Non-Proprietary Model").

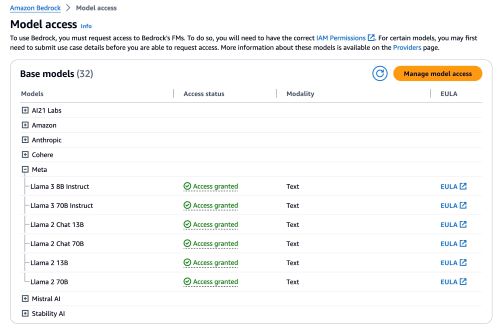

"To bring even more model choice to customers, today, we are making Meta Llama 3 models available in Amazon Bedrock," AWS said in a blog post today. "Meta's Llama 3 8B and Llama 3 70B models are designed for building, experimenting, and responsibly scaling generative AI applications. These models were significantly improved from the previous model architecture, including scaling up pretraining, as well as instruction fine-tuning approaches."

Amazon Bedrock Model Access (source: AWS).

Another post summarizes the two models:

- Llama 3 8B is ideal for limited computational power and resources, and edge devices. The model excels at text summarization, text classification, sentiment analysis, and language translation.

- Llama 3 70B is ideal for content creation, conversational AI, language understanding, research development, and enterprise applications. The model excels at text summarization and accuracy, text classification and nuance, sentiment analysis and nuance reasoning, language modeling, dialogue systems, code generation, and following instructions.
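For readers who want to try either model, the following is a minimal sketch of calling Llama 3 through the Bedrock runtime API with `boto3`. It rests on several assumptions not covered in this article: the model identifiers, the request fields (`prompt`, `max_gen_len`, `temperature`), the `generation` response field, and the `us-east-1` region should all be checked against current AWS documentation, and the account must already have been granted access to the models. The helper names are hypothetical.

```python
import json

# Assumed Bedrock model identifiers; confirm them in the Bedrock console.
MODEL_IDS = {
    "8b": "meta.llama3-8b-instruct-v1:0",
    "70b": "meta.llama3-70b-instruct-v1:0",
}


def format_llama3_prompt(user_message: str) -> str:
    """Wrap a single user message in the Llama 3 instruct chat template."""
    return (
        "<|begin_of_text|><|start_header_id|>user<|end_header_id|>\n\n"
        f"{user_message}<|eot_id|>"
        "<|start_header_id|>assistant<|end_header_id|>\n\n"
    )


def build_request(user_message: str, size: str = "8b",
                  max_gen_len: int = 256, temperature: float = 0.5) -> dict:
    """Build the keyword arguments for the Bedrock invoke_model call."""
    body = {
        "prompt": format_llama3_prompt(user_message),
        "max_gen_len": max_gen_len,
        "temperature": temperature,
    }
    return {"modelId": MODEL_IDS[size], "body": json.dumps(body)}


def invoke(user_message: str, size: str = "8b", region: str = "us-east-1") -> str:
    """Send the request to Bedrock; needs AWS credentials and model access."""
    import boto3  # imported here so the helpers above work without the AWS SDK

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.invoke_model(**build_request(user_message, size))
    return json.loads(response["body"].read())["generation"]
```

A call such as `invoke("Summarize this paragraph: ...", size="8b")` would target the smaller model, while `size="70b"` selects the larger one for more demanding tasks.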

Meta's models aren't the only new additions to Amazon Bedrock, as AWS summarized several other new enhancements for the cloud service:

- More model choice:

  - Amazon Titan Text Embeddings V2, which is optimized for working with RAG use cases (announced today with availability next week)

  - Amazon Titan Image Generator, which applies an invisible watermark to all images it creates to help reduce the spread of disinformation (released in general availability today)

  - Cohere's Command R and Command R+ models (announced today, coming soon)

- Custom Model Import capability, which helps organizations bring their proprietary models to Amazon Bedrock, reducing operational overhead and accelerating application development (announced today, in preview)

- Model Evaluation, which helps customers assess, compare, and select the best model for their application (released in general availability today)

- Guardrails for Amazon Bedrock, which implements safeguards to block harmful content (released in general availability today)

All of these are explained in more detail in the long-titled post, "Significant new capabilities make it easier to use Amazon Bedrock to build and scale generative AI applications -- and achieve impressive results."

About the Author

David Ramel is an editor and writer at Converge 360.