News

Default Settings Cause AI Cloud Security Woes: Report

Cloud misconfigurations, specifically the blind acceptance of default resource settings, continue to cause cybersecurity issues in the cloud, with AI systems being a new factor.

"The default settings of AI services tend to favor development speed rather than security, which results in most organizations using insecure default settings," said Orca Security in announcing its inaugural "2024 State of AI Security Report."

"For example, 45% of Amazon SageMaker buckets are using non-randomized default bucket names, and 98% of organizations have not disabled the default root access for Amazon SageMaker notebook instances," said the company in a Sept. 18 post.

That's one of the key report findings presented by the specialist in agentless cloud security, with another noting most vulnerabilities in AI models are low to medium risk -- for now.

Orca said that while AI packages help developers create, train and deploy AI models without developing brand-new routines, most of those packages are susceptible to known vulnerabilities.

"62% of organizations have deployed an AI package with at least one CVE [Common Vulnerabilities and Exposures]. However, most of these vulnerabilities are low to medium risk with an average CVSS score of 6.9, and only 0.2% of the vulnerabilities have a public exploit (compared to the 2.5% average)," said the report, based on data captured from billions of cloud assets on AWS, Azure, Google Cloud, Oracle Cloud, and Alibaba Cloud scanned by the Orca Cloud Security Platform.
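For context on that 6.9 average, here is a minimal sketch of how a base score maps to a severity label. It assumes the scores follow the CVSS v3.x qualitative rating scale; the report excerpt does not say which CVSS version was used, and the function name is illustrative only.

```python
def cvss_v3_severity(score: float) -> str:
    """Map a CVSS v3.x base score to its qualitative severity rating."""
    if not 0.0 <= score <= 10.0:
        raise ValueError("CVSS base scores range from 0.0 to 10.0")
    if score == 0.0:
        return "None"
    if score <= 3.9:
        return "Low"
    if score <= 6.9:
        return "Medium"
    if score <= 8.9:
        return "High"
    return "Critical"


# The report's average of 6.9 sits at the very top of the Medium band.
print(cvss_v3_severity(6.9))
```

Under that assumption, a score just one-tenth of a point higher would be rated High, which may be part of why the report frames the risk as low to medium "for now."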

For years, similar reports have reached similar conclusions, agreeing that most cybersecurity problems persist because of human error (see the July article, "Cloud Security: Despite All the Tech, It's Still a People Problem").

Other key findings from the Orca report include:

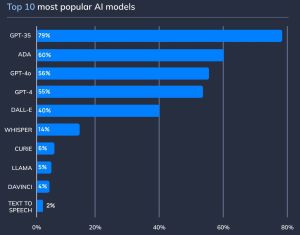

- 56% [of respondent organizations] have adopted their own AI models to build custom applications and integrations specific to their environment(s). Azure OpenAI is currently the front runner among cloud provider AI services (39%); Scikit-learn is the most used AI package (43%) and GPT-35 is the most popular AI model (79%).

Models (source: Orca Security).

- 98% of organizations using Google Vertex AI have not enabled encryption at rest for their self-managed encryption keys. This leaves sensitive data exposed to attackers, increasing the chances that a bad actor can exfiltrate, delete, or alter the AI model.

Encryption (source: Orca Security).

- Cloud AI tooling surges in popularity. Nearly four in 10 organizations using Azure also leverage Azure OpenAI, which first became available in November 2021. Amazon SageMaker and Vertex AI are growing in popularity.

AI Services (source: Orca Security).

Other data points culled from the report include:

- 27% of organizations have not configured Azure OpenAI accounts with private endpoints. This increases the risk that attackers can access, intercept, or manipulate data transmitted between cloud resources and AI services.

- 77% of organizations using Amazon SageMaker have not configured metadata session authentication (IMDSv2) for their notebook instances. Not having IMDSv2 enabled leaves notebook instances and their sensitive data potentially exposed to high-risk vulnerabilities.

- 20% of organizations using OpenAI have at least one access key saved in an unsecure location. A single leaked key can lead to a breach and risk the integrity of the OpenAI account.
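The Amazon SageMaker findings cited in this article (default bucket names, root access and IMDSv2) lend themselves to a simple automated check. The following is a rough sketch, not Orca's method: it assumes a notebook description shaped like the response from boto3's `describe_notebook_instance` call, and it assumes "default bucket name" means the `sagemaker-<region>-<account ID>` pattern used by the SageMaker Python SDK. The function names and sample values are hypothetical.

```python
import re

# Assumed default naming pattern: sagemaker-<region>-<12-digit account ID>
DEFAULT_BUCKET = re.compile(r"^sagemaker-[a-z]{2}(?:-[a-z]+)+-\d-\d{12}$")


def is_default_bucket_name(name: str) -> bool:
    """Return True if the bucket name matches the assumed default pattern."""
    return DEFAULT_BUCKET.match(name) is not None


def audit_notebook(desc: dict) -> list[str]:
    """Flag risky settings in a notebook-instance description.

    `desc` is assumed to look like the dict returned by boto3's
    SageMaker client method describe_notebook_instance().
    """
    findings = []
    # Assumption: a missing RootAccess field is treated as enabled.
    if desc.get("RootAccess", "Enabled") == "Enabled":
        findings.append("root access is enabled")
    imds = desc.get("InstanceMetadataServiceConfiguration", {})
    # Assumption: a missing value is treated as IMDSv1 still being allowed.
    if imds.get("MinimumInstanceMetadataServiceVersion", "1") != "2":
        findings.append("IMDSv2 is not enforced")
    return findings


# Hypothetical sample data for illustration only.
sample = {
    "NotebookInstanceName": "demo-notebook",
    "RootAccess": "Enabled",
    "InstanceMetadataServiceConfiguration": {
        "MinimumInstanceMetadataServiceVersion": "1"
    },
}
print(audit_notebook(sample))
print(is_default_bucket_name("sagemaker-us-east-1-123456789012"))
```

In a real environment the description would come from the AWS API rather than a hard-coded dictionary, and the results would feed whatever ticketing or alerting process the security team already uses.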

Taken together, the data from the report points to five main challenges in AI security, Orca said:

- Pace of innovation: The speed of AI development continues to accelerate, with AI innovations introducing features that promote ease of use over security considerations. Maintaining pace with these advancements is challenging, requiring ongoing research, development, and cutting-edge security protocols.

- Shadow AI: Security teams lack complete visibility into AI activity. These blind spots prevent the enforcement of best practices and security policies, which in turn increases an organization's attack surface and risk profile.

- Nascent technology: Due to its nascent stage, AI security lacks comprehensive resources and seasoned experts. Organizations must often develop their own solutions to protect AI services without external guidance or examples.

- Regulatory compliance: Navigating evolving compliance requirements requires a delicate balance between fostering innovation, ensuring security, and adhering to emerging legal standards. Businesses and policymakers must be agile and adapt to new regulations governing AI technologies.

- Resource control: Resource misconfigurations often accompany the rollout of a new service. Users overlook properly configuring settings related to roles, buckets, users, and other assets, which introduces significant risks to the environment.

The firm also provided five key recommendations to help:

- Beware of default settings: Cloud provider AI services cater to the needs of developers, providing them with features and settings that enhance efficiency. This often translates into default settings that can produce security risks in a live environment. To limit risk, ensure you change these default settings during the early stages of development.

- Manage vulnerabilities: While the field of AI security is relatively new, most vulnerabilities are not. Often, AI services rely on existing solutions with known vulnerabilities. Detecting and mapping those vulnerabilities in your environments is still essential to managing and remediating them appropriately.

- Isolate networks: It's best practice to always limit network access to your assets. This means opening assets to network activity only when necessary, and precisely defining what type of network to allow in and out. This is especially relevant to AI services, since they are relatively new and untested, and possess significant capabilities.

- Limit privileges: Excessive privileges give attackers freedom of movement and a platform to launch multi-phased attacks -- should bad actors successfully gain initial access. To protect against lateral movement and other threats, eliminate redundant privileges and remove unnecessary access between services, roles, and instances.

- Secure data: Securing data calls for combining several best practices. This includes opting for self-managed encryption keys while also ensuring that you enable encryption at rest. Additionally, favor more restrictive settings for data protection and offer awareness training that instructs users on data security best practices.

The report is based on cloud workload and configuration data from billions of real-world production assets on the cloud platforms named above. The data was collected during the first eight months of 2024.

About the Author

David Ramel is an editor and writer at Converge 360.