In-Depth

Cybersecurity for Non-Experts

A self-described "one-person Security Operations Center" deeply invested in Microsoft-centric security imparts commonsense advice for different target groups: IT pros, developers and end users.

How often do you hear "cybersecurity is everyone's responsibility" or "I wish our users wouldn't just click on links"? Can you involve everyone in defending your organization against cyberthreats?

What does involving everyone actually look like? How do "normal," non-IT and non-cyber people become part of the solution? And how can you, as an IT pro, developer or technology professional, help in that endeavor?

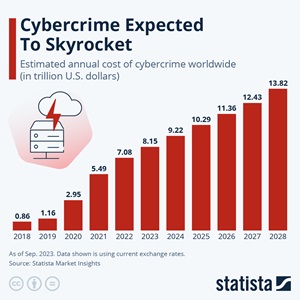

It's definitely needed. According to Statista, cybercrime cost the world an estimated $9.22 trillion in 2024 and is expected to hit $13.8 trillion in 2028.

Cybercrime Expected to Skyrocket (source: Statista).

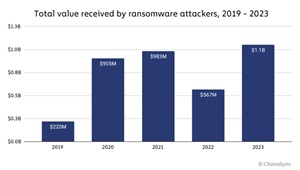

Worldwide ransomware losses (that we know of) were $1.1 billion in 2023, with 2024 likely to break that "record," according to Chainalysis.

Total Value Received by Ransomware Attackers, 2019-2023 (source: Chainalysis).

Essentially, the business world is being pwned sideways by criminal gangs from a small group of countries.

In this article (by an IT Consultant / generalist, not a cybersecurity specialist) I'll cover topics related to cybersecurity for different target groups, helping everyone pitch in to make us all more resilient.

IT Professionals

Let's start with my people. These are the desktop, server, application, cloud, identity or networking specialists who keep the lights on in businesses. In the SMB space we wear several of those hats, whereas in larger organizations there are IT pros who just do networking, for example, leaving everything else to other specialists.

But we should all know the basics of common cybersecurity attacks and understand how our configuration settings, daily processes and awareness of the threats influence our organization's overall security posture.

The first place to look is the device on which you do your administrative tasks. Most IT pros don't do admin tasks continuously; there are meetings to attend, emails to check, reports to write, troubleshooting steps to research, upgrades to plan and so forth. If your organization isn't enforcing the use of Privileged Access Workstations (PAWs) or Secured Access Workstations (SAWs), consider raising this as a critical issue. You should be doing all that normal day-to-day work on a different machine from the one you use to access admin interfaces and perform highly privileged tasks. This minimizes the risk that inadvertently clicking a link you shouldn't have, or downloading something that looked safe but wasn't, compromises your admin sessions. If having two physical devices is cost prohibitive, you can use virtualization, with the host PC being the locked-down OS used for admin work and a VM running email, browser applications and so on.

This also extends to your user account. If you have any type of administrative privileges in Active Directory or Entra ID (or your third-party identity solution), your account is like a bowl of sugar for flies. One option is to use separate accounts, one for day-to-day work and one for admin tasks, with different authentication methods and definitely phishing-resistant authentication for the admin side. This requires user training and discipline to keep the two sides completely separate. Another option is Privileged Identity Management (PIM) in Entra ID, which requires P2 licensing (for your admin accounts only). With PIM, your single account is just an ordinary user most of the time. When you need to perform admin tasks you have to elevate, which can involve signing in to the Entra ID portal, entering a case number, performing an extra MFA step-up or having your elevation approved by another admin, before you get access for a few hours. This requires less discipline from your administrators, as the system forces them to elevate every time.

Another simple step is to start every new project, whether that's migrating workloads to a public cloud, rolling out a new SaaS application or redesigning a network, with threat modeling. There are in-depth frameworks (STRIDE, for example) and deep training on how to use them, but that can be overkill, at least for smaller projects. These four questions are often cited as the starting point:

- What are we working on?

- What can go wrong?

- What are we going to do about it?

- Did we do a good job?

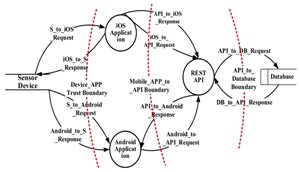

That first question often results in Data Flow Diagrams (DFDs), which show how data moves through your system.

DFD diagram in Microsoft Threat Modeling Tool. (source: Image by Pangkaj Paul et al., CC BY 4.0.).

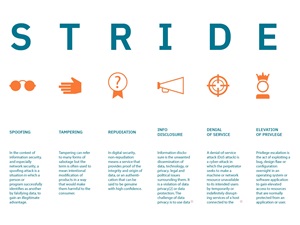

Document each part of the system to understand its scope, then look at potential attacks on each part. These attacks fall into the following categories (hence the STRIDE name):

| Threat Category | Violates | Examples |
| --- | --- | --- |
| Spoofing | Authenticity | A stolen authentication token is used to impersonate a legitimate user |
| Tampering | Integrity | Abusing access to perform malicious updates to a database |
| Repudiation | Non-repudiability | Manipulating logs to cover up malicious actions |
| Information disclosure | Confidentiality | Exfiltrating data from a database containing sensitive user data |
| Denial of Service | Availability | Locking a legitimate user out of a system by performing many failed authentication attempts |
| Elevation of Privileges | Authorization | Tampering with a token to gain more privileges in a system |

The "what can go wrong" question is about identifying where an attacker could subvert the system or design, followed by what you can do to mitigate each of these risks. The final question is about continuous improvement: checking back to see whether a good, secure design on day one resulted in a stronger solution in the end.

STRIDE (source: IBM).

STRIDE is focused on software development, but the basic questions can be used for threat modeling almost anything. For example (based on a current scenario at one of my clients), you've got a new classroom on campus and you're planning to provide Wi-Fi and wired Ethernet access in the room. Questions to consider include attacks against the Wi-Fi authentication. Yes, wardriving (or biking, or walking) is still a risk to wireless infrastructure, and the car park is right next to the new building. This could lead to information disclosure, a denial-of-service situation or, in the worst case, a breach of the network followed by elevation of privileges.

Mitigations for these risks include video surveillance of the car park, modern authentication for the Wi-Fi network itself and thorough monitoring of network traffic using Microsoft Sentinel & XDR to detect attempted or successful attacks.

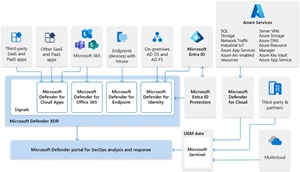

Microsoft XDR Integrated with Microsoft Sentinel (source: Microsoft).
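For small projects, the four questions and the STRIDE categories can be captured in something as light as a short script or spreadsheet. The following is a minimal sketch in Python, not a replacement for a real threat modeling tool. The class and function names are hypothetical, and the way the classroom threats are paired with mitigations is an assumption made for illustration (the third threat is deliberately left without a mitigation to show how gaps surface).

```python
from dataclasses import dataclass, field
from enum import Enum


class Stride(Enum):
    """STRIDE categories, each mapped to the security property it violates."""
    SPOOFING = "Authenticity"
    TAMPERING = "Integrity"
    REPUDIATION = "Non-repudiability"
    INFORMATION_DISCLOSURE = "Confidentiality"
    DENIAL_OF_SERVICE = "Availability"
    ELEVATION_OF_PRIVILEGE = "Authorization"


@dataclass
class Threat:
    component: str    # What are we working on?
    category: Stride  # What can go wrong?
    description: str
    mitigations: list = field(default_factory=list)  # What are we going to do about it?
    reviewed: bool = False                            # Did we do a good job?


def unmitigated(threats):
    """Threats that still have no planned mitigation."""
    return [t for t in threats if not t.mitigations]


def uncovered_categories(threats):
    """STRIDE categories nobody has considered yet, in S-T-R-I-D-E order."""
    seen = {t.category for t in threats}
    return [c for c in Stride if c not in seen]


# Illustrative register for the classroom example (pairings are assumptions).
register = [
    Threat("Classroom Wi-Fi", Stride.INFORMATION_DISCLOSURE,
           "Wireless traffic captured from the car park",
           ["Modern authentication for the Wi-Fi network",
            "Video surveillance of the car park"]),
    Threat("Classroom Wi-Fi", Stride.DENIAL_OF_SERVICE,
           "Wireless access disrupted from the car park",
           ["Network monitoring with Microsoft Sentinel and XDR"]),
    Threat("Campus network", Stride.ELEVATION_OF_PRIVILEGE,
           "Network breach followed by elevation of privileges"),
]

for threat in unmitigated(register):
    print(f"No mitigation yet: {threat.component} ({threat.category.name})")
for category in uncovered_categories(register):
    print(f"Not yet considered: {category.name} (violates {category.value})")
```

Listing the categories nobody has looked at yet is the useful part: it prompts the "what can go wrong?" conversation for spoofing, tampering and repudiation rather than assuming they don't apply.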

Developers

Code warriors have a tough challenge in most businesses. Productivity is rewarded and agility and speed to market are king, so adding new features quickly keeps the boss, customers and the bottom line happy. The fastest way to do that is to build on existing Open-Source Software (OSS) packages, a foundation of blocks that someone else has already built. The problem arises when security is an afterthought, or something that's checked by a different team just before publishing a new application version. "Write perfect software, with no vulnerabilities, on top of OSS packages that bring no risk, and do it fast" isn't a realistic ask of anyone.

It starts with education; it still boggles my mind that most universities churn out graduates with perhaps only some elective courses on how to write secure code. It's like training engineers to design bridges without worrying too much about whether they're strong enough not to break when people drive on them. Then business leaders need to understand the risks of OSS. Yes, "given enough eyeballs, all bugs are shallow" when the source code is available for inspection, but there aren't enough volunteer eyeballs to go around for every package.

We've also seen some very interesting attacks against the OSS ecosystem over the last few years. In one, attackers (widely suspected, though not publicly confirmed, to be state-sponsored) spent around three years using fake personas to build up rapport and trust with the developer of the xz utility until they were given publishing rights. They then shipped a back-doored version, which would have given attackers holding the right key unfettered access through SSH to Linux systems running the compromised release. These types of attacks, along with more garden-variety vulnerabilities and crypto mining code inclusions, are a real risk to businesses that don't take OSS supply chain attacks seriously.

If you're a developer, learn the difference between a malicious OSS package, a vulnerable package, typosquatting and dependency confusion, learn how to understand the dependency tree of all your building blocks, and have a plan to update packages regularly. That last one is important because it's common for developers to include the then-current version of a package when writing the software but never upgrade it after that. If vulnerabilities are found and patched but the package is never updated in your application, the risk is multiplied, because now the bad guys know about the vulnerability and are more likely to exploit it. Here's a good presentation on the topic of OSS security that I watched recently.
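To make typosquatting concrete, here is a minimal sketch in Python that flags dependency names that look suspiciously similar to, but are not the same as, packages you actually intend to use. It relies only on the standard library's `difflib`. The allow-list, the function name and the 0.85 similarity threshold are assumptions chosen for illustration; dedicated dependency scanning tools do far more than this.

```python
from difflib import SequenceMatcher

# Hypothetical allow-list of packages the team actually intends to use.
KNOWN_PACKAGES = {"requests", "numpy", "pandas", "cryptography"}


def possible_typosquats(dependencies, known=KNOWN_PACKAGES, threshold=0.85):
    """Return (dependency, lookalike) pairs for names that are close to,
    but not exactly, an entry on the allow-list."""
    findings = []
    for name in dependencies:
        candidate = name.lower()
        if candidate in known:
            continue  # exact match with a trusted name, nothing to flag
        for trusted in sorted(known):
            similarity = SequenceMatcher(None, candidate, trusted).ratio()
            if similarity >= threshold:
                findings.append((name, trusted))
    return findings


# Example: two misspelled names hiding among legitimate ones.
declared = ["requests", "reqeusts", "numpyy", "flask"]
for suspicious, lookalike in possible_typosquats(declared):
    print(f"'{suspicious}' looks like '{lookalike}': check before installing")
```

Note that an unfamiliar name such as `flask` is not flagged here, because it doesn't resemble anything on the allow-list. A check like this only catches lookalikes, so it complements rather than replaces reviewing what each new dependency is and where it comes from.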

End Users

This group of people is the hardest to bring onboard. Unlike developers and IT pros, they generally don't have the technical background to understand the risks or how their actions can contribute to something going horribly wrong.

For my clients, step one is making sure everyone knows (and I ensure I have the business owner's backing for this) that they'll never be in trouble for speaking up if they've clicked a link or downloaded a file that turns out to be malicious. Having an attacker in your network is really bad; having end users lie about how the attacker got there because they're afraid of getting into trouble is worse.

Secondly, I find it helpful to get the users onboard (in my regular training sessions) by explaining the risks and bringing in some examples from recent news. In most cases abstract risks such as ransomware or data loss are hard to relate to, but a news story about a business similar to yours, outlining the difficulty they went through during a breach and how it started, brings the message home convincingly.

Users are much more likely to adopt a behavior if they understand why it's important. For example: you have to perform MFA in the authentication app on your phone if you sign in on a different device or from a different location, because we need to know that it's you and not someone impersonating you.

Another tactic that I find works really well is not just talking about being cybersecurity aware at work but also relating it to people's personal lives. We're all online nowadays, and nearly every part of our lives has some connection to the digital world. Pointing people to sites such as Have I Been Pwned?, or talking about protecting social media profiles with MFA and setting sharing to private by default, really helps, as does introducing the concept of a password manager.

Finally, don't run these training sessions once a year to tick the compliance box. Short, regular sessions with new and different content are better absorbed, and the repetition of the basics helps everyone adopt them.

Conclusion

I heard an interesting take on a popular podcast (Darknet Diaries) a while ago. Host Jack Rhysider compared indigenous tribes in a region of Canada, struggling with alcohol abuse in their communities because they lacked a strong common ground in understanding the risks (something that can take generations to build up), to the way most people today are largely oblivious to the cyber risks they're facing and to how their own actions can protect them. Hopefully it won't take generations until most people are aware of cybersecurity risks and act accordingly. Just as we teach kids to look both ways when crossing the road, we should all be aware of the cyber risks we face in our increasingly digital lives.