A report released today by Skyhigh Networks paints a scary picture of the exploding cloud services space, with rising security risks, huge exposure to malware, too much Windows XP use and no safe haven in Europe for companies concerned about government spying.

The quarterly "Skyhigh Cloud Adoption and Risk Report" from the Cupertino, Calif.-based "cloud visibility and enablement company" collates data from more than 8.3 million users in more than 250 companies. It's the third report since the series started last fall.

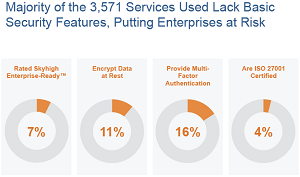

The number of cloud services in use since last quarter increased 33 percent, from 2,675 to 3,571. On average, organizations used 759 cloud services, compared with 626 last quarter, a 21 percent increase.
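The growth figures quoted above can be checked with a quick calculation. The sketch below is only an arithmetic check on the numbers cited from the report; the helper name `percent_increase` is an illustrative choice, not something from Skyhigh.

```python
def percent_increase(old: float, new: float) -> float:
    """Return the percentage increase from old to new."""
    return (new - old) / old * 100.0


# Figures quoted from the report.
total_services = percent_increase(2675, 3571)   # all cloud services in use
per_org_services = percent_increase(626, 759)   # average per organization

print(f"Total services in use: {total_services:.1f}% increase")
print(f"Average per organization: {per_org_services:.1f}% increase")
```

Both results round to the 33 percent and 21 percent increases stated in the report.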

Cloud service enterprise security risk factors

(source: Skyhigh Networks)

But while more cloud services are being used, the percentage of those deemed "enterprise ready" from a security perspective decreased from 11 percent to 7 percent. Enterprise-ready services meet "the most stringent requirements for data protection, identity verification, service security, business practices and legal protection," according to the company. The decrease in the share of enterprise-ready services "suggests that a majority of new cloud services used by employees are exposing organizations to risk," the company said.

The risk of each service is rated with the company's CloudTrust Program, which takes into account more than 50 attributes of risk in the categories of users and devices, services, business and legal.

Skyhigh Networks said a notable security risk is the fragmented use of cloud services, with organizations on average subscribing to 24 file sharing services and 91 collaboration services. "This not only impedes collaboration and leads to employee frustration, but also results in greater risk since 60 percent of the file sharing services used are high risk services," the report stated.

Of the top 10 file sharing services, only one, Box, was deemed enterprise ready. Services such as Dropbox and Google Drive were rated at a medium risk, while high-risk services included Yandex.Disk, 4shared and Solidfiles.

In the collaboration category, Skyhigh rated Microsoft's Office 365 and Cisco WebEx as enterprise ready, while AOL was the lone high-risk service. Services such as Google's Gmail, Google Docs and Google Drive were medium risk, along with Microsoft services Skype and Yammer, among others.

Of all the services reported, the top 10 were:

- Facebook

- Amazon Web Services

- Twitter

- YouTube

- Salesforce

- LinkedIn

- Gmail

- Office 365

- Google Docs

- Dropbox

The report stated that 18 percent of companies surveyed were using at least 1,000 devices running Windows XP, which lost official support -- and security updates and patches -- from Microsoft last month. Some 90 percent of the cloud services accessed by Windows XP were rated as medium or high risk.

On the positive side, a surprising vulnerability to the Heartbleed bug found in the open source OpenSSL cryptography library was reported early in April -- with one-third of cloud services in use being exposed to the bug -- but remediation steps taken by cloud services providers quickly brought that down to less than 1 percent later in the month.

But other security scares remained in full effect. "The malware problem is alive and well, as 29 percent of organizations had anomalous cloud access indicative of malware," the company said. "In addition, 16 percent of organizations had anomalous cloud access to services that store business critical data, introducing an even higher level of risk."

Finally, a last warning highlighted by Skyhigh relates to U.S. companies' concerns about government spying, with the National Security Agency revelations still in the news. "Given the concerns around the U.S. Patriot Act and U.S. government-issued blind subpoenas, there is a growing school of thought advocating the use of cloud services headquartered in privacy-friendly countries (that is, the European Union)," the company said. "However, 9 percent of cloud services headquartered in the EU are high risk, compared to only 5 percent of cloud services headquartered in the U.S. So, while EU-based cloud services provide protection from the U.S. Patriot Act, they do expose organizations to greater security risks."

The Skyhigh report gathered data from 10 vertical industries: education, financial services, health care, high tech, media, oil and gas, manufacturing, retail, services and utilities.

"With this report, we uncovered trends beyond the presence of shadow IT in the enterprise," said CEO Rajiv Gupta. "We provide real data around cloud usage, adoption of enterprise-ready services, the category of services demanded by employees, as well as malware and other vulnerabilities from these cloud services. It’s this type of data and analysis that CIOs use to maximize the value of cloud services and help drive an organized, productive, and safe movement to the cloud."

Posted by David Ramel on 05/07/2014 at 2:18 PM

Big Blue is buying into this cloud thing in a big way, having invested $7 billion in 17 cloud-related acquisitions since 2010, leading up to this week's announcement of the IBM Cloud marketplace, which is chock-full of services from the company, its partners and others.

Chasing a $250 billion cloud market opportunity, IBM debuted the new one-stop shopping destination targeting enterprise developers, IT managers and business leaders. It features all kinds of "x-as-a-service" offerings, including the traditional Infrastructure as a Service (IaaS), Platform as a Service (PaaS) and Software as a Service (SaaS) -- and some new ones such as Capabilities as a Service and even IBM as a Service. The marketplace will serve as a place where members of the targeted groups can learn, try software out before purchasing and buy services from the company and its global partner ecosystem.

The marketplace includes more than 100 SaaS applications alone, IBM said, along with PaaS offerings such as the company's BlueMix and its composable services and the SoftLayer IaaS product.

"Increasingly cloud users from business, IT and development across the enterprise are looking for easy access to a wide range of services to address new business models and shifting market conditions," said Robert LeBlanc, senior vice president of IBM Software & Cloud Solutions. "IBM Cloud marketplace puts Big Data and analytics, mobile, social, commerce, integration -- the full power of IBM as a Service and our ecosystem -- at our clients' fingertips to help them quickly deliver innovative services to their constituents."

Besides the company's own products, partners with a presence in the marketplace include SendGrid, Zend, Redis Labs, Sonian, Flow Search, Deep DB, M2Mi and Ustream.

"Most cloud marketplaces are tied to one specific product offering," said Jim Franklin, CEO of SendGrid. "If you don't use the particular service for which the marketplace was built -- even if you're a customer of other products by the same company, that marketplace is irrelevant for you. But the IBM Cloud marketplace will be available to all IBM and non-IBM customers. Whether you're using BlueMix or SoftLayer or another IBM product, the IBM marketplace will be there to serve you. As a vendor, being able to reach all IBM customers from one place is very exciting."

The marketplace serves up content depending on job roles, with service pages designed to intuitively guide customers to areas of their interest, such as start-ups, mobile, gaming and so on. For example, line-of-business IT professionals -- one of the three targeted groups -- might be interested in the myriad SaaS applications in categories such as Marketing, Procurement, Sales & Commerce, Supply Chain, Customer Service, Finance, Legal and City Managers.

Developers can immediately take advantage of an integrated, open, cloud-based dev environment where they can create enterprise apps by exploring and choosing open source or third-party tools and integrating them as needed.

"IBM has brought together a full suite of enterprise-class cloud services and software and made these solutions simple to consume and integrate, whether you are an enterprise developer or forward looking business exec," said Andi Gutmans, Zend CEO and co-founder. "We will support the rapid delivery of our applications through the IBM Cloud marketplace, enabling millions of Web and mobile developers, and many popular PHP applications to be consumed with enterprise services and service levels on the IBM Cloud."

IT managers -- the third targeted group -- can peruse the Ops category for secure cloud services built with SoftLayer. Services include Big Data, Disaster Recovery, Hybrid Environments, Managed Security Services, Cloud Environments for Small and Medium Business, and more. The Big Data and Analytics portfolio, Watson Foundation, includes more than 15 solutions, including two new offerings called InfoSphere Streams and InfoSphere BigInsights. The former helps enterprises analyze and share data on the fly to aid real-time decision-making. The latter is designed to help developers build secure big data apps with Hadoop.

"The launch of IBM Cloud marketplace represents the next major step in IBM's cloud leadership," IBM said in a statement. "This single online destination will serve as the digital front door to cloud innovation bringing together IBM's Capabilities-as-a-Service and those of partners and third-party vendors with the security and resiliency enterprises expect."

Posted by David Ramel on 05/01/2014 at 11:21 AM

Similar to the bring-your-own-device (BYOD) problem that enterprise IT and security managers have to deal with, today's plethora of cloud-based applications and services presents another security problem.

To deal with possible security risks from unauthorized use of the cloud, CipherCloud on Wednesday announced the CipherCloud for Cloud Discovery solution, designed to give enterprises real-time and granular visibility into whatever cloud applications might be in use throughout an organization.

"Rather than waiting for traditional IT services, many employees have adopted a wide range of cloud applications," the company said. "Yet, this adoption of cloud applications introduces significant risks for enterprises when there is no assurance that they are legitimate, well managed and follow security best practices."

The free solution helps CIOs, CSOs and other IT staffers discover, analyze and rate the risk of thousands of cloud apps in areas such as CRM, finance, human resources, file sharing, collaboration, productivity and others.

Enterprise IT pros can use the Cloud Discovery product to analyze network logs, providing visibility into all the cloud apps in use and comprehensive risk ratings.

Using a drill-down dashboard, users can spot the top cloud apps being used and associated risk levels using a range of security metrics. They can then decide what actions to take, if any, to mitigate risks.

CipherCloud contrasted its service with alternative solutions that it said require users to send sensitive logs outside the organization for analysis. Instead, customers retain full control of those audit logs, the company said.

"Raising the bar for cloud information protection today requires security to be in lock step with visibility," said Pravin Kothari, founder and CEO of CipherCloud, in a statement. "Insight into cloud applications usage helps companies create intelligent data protection and user monitoring strategies. We are pleased to offer this capability free to the market."

IT administrators can output data in a range of formats by using Splunk, a popular software offering that searches, monitors and analyzes machine-generated data.

Besides requiring the use of Splunk, users must be able to provide logs from a Blue Coat proxy, the company said. The new product is offered free of charge to qualified customers, who can register here.

Posted by David Ramel on 05/01/2014 at 9:44 AM

Like most cloud providers, the SoftLayer service IBM acquired last year for $2 billion is made up of commodity x86/x64-class compute servers. IBM recently said it will add compute infrastructure based on its high-performance and more scalable Power processors to the SoftLayer cloud, and this week the OpenPOWER Foundation that IBM established released technical specifications of the next iteration of the platform.

In addition to launching three new Power servers aimed at processing big data analytics, IBM claims its latest Power-based systems can process data 50 times faster than x86-based systems based on its own tests. The company also said some customers have reported Power can process queries 1,000 times faster and within seconds rather than hours.

IBM's decision to open the Power architecture was a move to ensure it didn't meet the fate of the Sun Sparc platform. Founding members of the OpenPOWER Foundation include Google, IBM, Mellanox Technologies, Nvidia and Tyan. The group said 25 members have joined since it was formed, including Canonical, Samsung Electronics, Micron, Hitachi, Emulex, Fusion-IO, SK Hynix, Xilinx, Jülich Supercomputer Center and Oregon State University.

Getting Google on board last year was a coup for the effort, though it remains to be seen whether it will have broad appeal. "Google is clearly the king maker here, and placing it at the head of OpenPOWER was a brilliant move," wrote analyst Rob Enderle, of the Enderle Group, in this week's Pund-IT review newsletter. "Still, it will have to put development resources into this and fully commit to the effort to assure a positive outcome. If this is accomplished, Power will be as much a Google product as an IBM product and engineering companies like Google tend to favor technology they have created over that created by others, giving Power an inside track against Intel."

Looking to flesh out its own hybrid cloud offering, IBM announced a partnership with Canonical, which will immediately offer the new Ubuntu Server 14.04 LTS distribution released last week, which includes support for Ubuntu OpenStack and the Juju service orchestration tools. IBM also said PowerKVM, based on the Linux-based KVM virtual machine platform for Power, will work on IBM's Power8 systems.

The Canonical partnership adds to existing pacts IBM had with Red Hat and SUSE, whose respective Linux distributions will also run on Power8. The company has two new S Class servers, the S812L and S822L, that only run Linux, along with the three new systems announced this week -- the S814, S822 and S824 -- that will run both Linux and AIX, IBM's iteration of Unix. IBM is offering them in 1- and 2-socket configurations and 1, 2 and 4U form factors. Pricing starts at $7,973.

Look for other OpenPOWER developments at IBM's Impact conference next week in Las Vegas. Among them, Mellanox will demonstrate RDMA on Power, which will show 10x improvements in latency and throughput. Nvidia will announce plans to integrate its CUDA software for its GPU Accelerators with IBM Power processors. The two companies will demonstrate what they claim is the first GPU accelerator framework for Java and a major performance boost when running Hadoop analytics apps. Nvidia will also offer its NVLink high-speed interconnect product, launched last month, to the OpenPOWER Foundation.

Posted by Jeffrey Schwartz on 04/23/2014 at 3:39 PM

Microsoft wants to test the limits of flash storage. To that end, the company has been working with startup Violin Memory to develop the new Windows Flash Array, a converged storage-server appliance that has every component of Windows Storage Server 2012 R2, including SMB 3.0 Direct over RDMA, built in and is powered by dual Intel Xeon E5-2448L processors.

The two companies spent the past 18 months developing the new 3U dual-cluster arrays that IT can use as network-attached storage (NAS), according to Eric Herzog, Violin's new CMO and senior VP of business development. Microsoft wrote custom code in Windows Server 2012 R2 and Windows Storage Server 2012 R2 that interfaces with the Violin Windows Flash Array, Herzog explained. The Windows Flash Array comes with an OEM version of Windows Storage Server.

"Customers do not need to buy Windows Storage Server, they do not need to buy blade servers, nor do they need to buy the RDMA 10-gig-embedded NICs. Those all come prepackaged in the array ready to go and we do Level 1 and Level 2 support on Windows Server 2012 R2," Herzog said.

Based on feedback from 12 beta customers (which include Microsoft), the company claims its new array has double the write performance with SQL Server of any other array, with a 54 percent improvement when measuring SQL Server reads, a 41 percent boost with Hyper-V and 30 percent improved application server utilization. It's especially well-suited for any business application using SQL Server and it can extend the performance of Hyper-V and virtual desktop infrastructure implementations. It's designed to ensure latencies of under 500 microseconds.

Violin is currently offering a 64-terabyte configuration with a list price of $800,000. Systems with less capacity are planned for later in the year. It can scale up to four systems, which is the outer limit of Windows Storage Server 2012 R2 today. As future versions of Windows Storage Server offer higher capacity, the Windows Flash Array will scale with them, according to Herzog. Customers do need to use third-party tiering products, he noted.

Herzog said the two companies will be giving talks on the Windows Flash Array at next month's TechEd conference in Houston. "Violin's Windows Flash Array is clearly a game changer for enterprise storage," said Scott Johnson, Microsoft's senior program manager for Windows Storage Server, in a blog post. "Given its incredible performance and other enterprise 'must-haves,' it's clear that the year and a half that Microsoft and Violin spent jointly developing it was well worth the effort."

Indeed, investor enthusiasm for enterprise flash shows no sign of cooling. The latest round of high-profile investments goes to flash storage array supplier Pure Storage, which today bagged another round of venture funding.

T. Rowe Price and Tiger Global, along with new investor Wellington Management, added $275 million in funding to Pure Storage, which the company says gives it a valuation of $3 billion. But as reported in a recent Redmond magazine cover story, Pure Storage is in a crowded market of incumbents, including EMC, IBM and NetApp, that have jumped on the flash bandwagon, as well as quite a few newer entrants including Flashsoft (recently acquired by SanDisk), SolidFire and Violin.

Posted by Jeffrey Schwartz on 04/23/2014 at 12:41 PM

The OpenStack Foundation on Thursday released its planned Icehouse build of the open source cloud Infrastructure as a Service (IaaS) operating system.

New OpenStack builds are released in April and October of every year, and the latest Icehouse build boasts tighter platform integration and a slew of new features, including single sign-on support, discoverability and support for rolling upgrades.

In all, the Icehouse release includes 350 new features and 2,902 bug fixes. The OpenStack Foundation credited the tighter platform integration in Icehouse to a focus on third-party continuous-integration (CI) development processes, which led to 53 compatibility tests across different hardware and software configurations.

"The evolving maturation and refinement that we see in Icehouse make it possible for OpenStack users to support application developers with the services they need to develop, deploy and iterate on apps at the speeds they need to remain competitive," said Jonathan Bryce, executive director of the OpenStack Foundation, in a statement.

In concert with the Icehouse release, Canonical on Thursday issued the first long-term support (LTS) release of its Ubuntu Linux server in two years. LTS releases are supported for five years, and Canonical describes Ubuntu 14.04 as the most OpenStack-ready version to date. In fact, OpenStack has extended Ubuntu's reach in the enterprise, said Canonical cloud product manager Mark Baker.

"OpenStack has really legitimized Ubuntu in the enterprise," Baker said, adding that the first wave of OpenStack deployments the company has observed were largely cloud service providers. Now, technology companies with highly trafficked sites -- such as Comcast, Best Buy and Time Warner Cable -- are trying OpenStack. Also, some large financial services firms are starting to deploy OpenStack with Ubuntu, according to Baker.

Ubuntu 14.04 includes the new Icehouse release. Following are some of the specific features in Icehouse emphasized by the OpenStack Foundation:

- Rolling upgrade support to OpenStack Compute (Nova) is aimed at minimizing the impact of running workloads while an upgrade is taking place. The foundation has implemented more stringent testing of third-party drivers and improved scheduler performance. The compute engine is also said to boot more reliably across platform services.

- Discoverability has been added to OpenStack Storage (Swift). Users can now determine what object storage capabilities are available via API calls. It also includes a new replication process, which the foundation claims substantially improves performance, thanks to the addition of "s-sync," for more efficient data transport.

- Federated identity service (Keystone) for authentication is now supported for the first time, providing single sign-on to private and public OpenStack clouds.

- Orchestration (Heat) can now automatically scale compute, storage and networking resources across the platform.

- OpenStack Telemetry (Ceilometer) simplifies billing and chargeback, with improved access to metering data.

- In addition to the added language support, the OpenStack Horizon dashboard offers redesigned navigation, including in-line editing.

- A new database service (Trove) supports the management of RDBMS services in OpenStack.
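
The Swift discoverability item in the list above can be illustrated with a short sketch. Swift publishes its capabilities as a JSON document at an `/info` path on the cluster root; the code below assumes that layout. The endpoint URL, the sample response body and the helper names are hypothetical and are not taken from the foundation's announcement.

```python
import json
from urllib.parse import urlsplit, urlunsplit


def info_url(storage_url: str) -> str:
    """Build the cluster-level /info URL from an account storage URL.

    Assumes the discoverability document lives at the root of the same
    host, for example https://swift.example.com/info.
    """
    parts = urlsplit(storage_url)
    return urlunsplit((parts.scheme, parts.netloc, "/info", "", ""))


def list_capabilities(info_json: str) -> list[str]:
    """Return the sorted capability names from an /info response body."""
    return sorted(json.loads(info_json).keys())


# Hypothetical response body, for illustration only.
SAMPLE_INFO = '{"swift": {"max_file_size": 5368709122}, "tempurl": {}, "slo": {}}'

print(info_url("https://swift.example.com/v1/AUTH_demo"))
print(list_capabilities(SAMPLE_INFO))
```

In practice the body would come from an HTTP GET against the URL that `info_url` returns; the sample string stands in for that response so the sketch runs without a live cluster.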

In addition to the foundation, key contributors in the OpenStack community to the Icehouse release include Red Hat, IBM, HP, Rackspace, Mirantis, SUSE, eNovance, VMware and Intel. The foundation said that Samsung, Yahoo and Comcast -- key users of OpenStack clouds -- also contributed code, which is now available for download.

Looking ahead, key features targeted for a future release include OpenStack Bare Metal (Ironic), OpenStack Messaging (Marconi) and OpenStack Data Processing (Sahara).

Posted by Jeffrey Schwartz on 04/17/2014 at 1:35 PM

Of all the major software companies, SAP is regarded as a laggard when it comes to transitioning its business from a traditional supplier of business applications to offering them as a service in the cloud. Lately, it has stepped up that effort.

SAP this week took the plunge, making its key software offerings available in the cloud as subscription-based services. Specifically, its HANA in-memory database is now available as a subscription service. Also available as a service is the SAP Business Suite, which includes the company's ERP, financial and industry-specific applications. The SAP Business Suite also runs on HANA, which provides business intelligence and real-time key performance indicators. The HANA-based SAP Business Warehouse is also now available as a service. The company announced its plans to offer the SAP Business Suite and HANA as a service last year.

The subscription-based apps follow last month's announcement that the company is allowing organizations to extend their existing SAP installations to the cloud as hybrid implementations. The company noted that some apps may have restrictions in those scenarios. Offering its flagship business apps as a service is a key move forward for the company.

"Today is a significant step forward for SAP's transformation, as we are not only emphasizing our commitment to the cloud with new subscription offerings for SAP HANA, we are also increasing the choice and simplicity to deploy SAP HANA," said Vishal Sikka, member of SAP's executive board overseeing products and innovation, in a statement.

While SAP has taken steps over the years to embrace the cloud, it has lagged behind its rivals. Making matters worse, it has faced a growing number of new competitors born in the cloud, including Salesforce.com and Workday, which don't have legacy software issues to address. Those rivals and their partners have tools that can connect to existing legacy systems. SAP's biggest rival, Oracle, has made the transition with some big-ticket acquisitions along the way. For its part, SAP has made a few acquisitions of its own, including those of SuccessFactors and Ariba.

SAP said it is expanding its datacenter footprint across four continents. It currently has 16 datacenters worldwide and said it is extending its footprint to comply with local regulations, to address data sovereignty requirements. The global expansion includes SAP's first two datacenters in the Asia-Pacific, adding facilities in Tokyo and Osaka, Japan.

SAP said it has also extended its cloud migration service offering for existing customers. In addition to running its own cloud, SAP has partnerships with major cloud providers, including Amazon Web Services, Hewlett-Packard and IBM.

Posted by Jeffrey Schwartz on 04/10/2014 at 11:54 AM

Microsoft has strong ambitions for its Azure public cloud service, with 12 datacenters now in operation around the globe -- including two launched last week in China -- and an additional 16 datacenters planned by year's end.

At this week's Build conference in San Francisco, Microsoft showed how serious it is about advancing Azure.

Scott Guthrie, Microsoft's newly promoted executive VP for cloud and enterprise, said Azure is already used by 57 percent of Fortune 500 companies and has 300 million users (with most of them enterprise users registered with Active Directory). Guthrie also boasted that Azure runs 250,000 public-facing Web sites, hosts 1 million SQL databases with 20 trillion objects now stored in the Azure storage system, and processes 13 billion authentications per week.

Guthrie also claimed that 1 million developers have registered with the Azure-based Visual Studio Online service since its debut in November. This would be great if the vast majority have done more than just register. While Amazon gets to tout its large users, including its showcase Netflix account, Guthrie pointed to the scale of Azure, which hosts the popular "Titanfall" game launched last month for the Xbox gaming platform and PCs. Titanfall kicked off with 100,000 virtual machines on launch day, he noted.

Guthrie also brought NBC executive Rick Cordella to talk about the hosting of the Sochi Olympic games in February. More than 100 million people viewed the online service with 2.1 million concurrently watching the men's U.S.-vs.-Canada hockey match, which was "a new world record for HD streaming," Guthrie said.

Cordella noted that NBC invested $1 billion on this year's games and said it represented the largest digital event ever. "We need to make sure that content is out there, that it's quality [and] that our advertisers and advertisements are being delivered to it," he told the Build audience. "There really is no going back if something goes wrong," Cordella said.

Now that Azure has achieved scale, Guthrie and his team are rolling out a bevy of significant enhancements aimed at making the service appealing to developers, operations managers and their administrators. As IT teams move to a more "DevOps" model, Microsoft is taking that into consideration as it builds out the Azure service, which promises to broaden its appeal.

Among the Infrastructure as a Service (IaaS) improvements, Guthrie pointed to the availability of auto-scaling as a service, point-to-site VPN support, dynamic routing, subnet migration, static internal IP addressing and Traffic Manager for Web sites. "We think the combination of this really gives you a very flexible environment, a very open environment, and lets you run pretty much any Windows or Linux workload in the cloud," Guthrie said.

Azure is a more flexible environment for those overseeing DevOps, thanks to the new support for configuring virtual machine images using the popular Puppet and Chef configuration management and automation tools used on other services such as Amazon and OpenStack. IT can also now use Microsoft's PowerShell and VSD tools.

Mark Russinovich, a Microsoft cloud and enterprise group technical fellow, demonstrated how to create VMs using Visual Studio and templates based on Chef and Puppet. He was joined by Puppet Labs CEO Luke Kanies during the demo.

"These tools enable you to avoid having to create and manage lots of separate VM images," Guthrie said. "Instead, you can define common settings and functionality using modules that can cut across every type of VM you use."

Perhaps the most significant criticism of Azure is that it's still a proprietary platform. In a move to shake that image, Guthrie announced a number of significant open source efforts. Notably, Microsoft made its Roslyn .NET compiler and other components of its .NET Framework open source through the aptly titled .NET Foundation.

"It's really going to be the foundation upon which we can actually contribute even more of our projects and code into open source," Guthrie said of the new .NET Foundation. "All of the Microsoft contributions have standard open source licenses, typically Apache 2, and none of them have any platform restrictions, meaning you can actually take these libraries and you can run them on any platform. We still have, obviously, lots of Microsoft engineers working on each of these projects. This now gives us the flexibility where we can actually look at suggestions and submissions from other developers, as well, and be able to integrate them into the mainline products."

Among some other notable announcements from Guthrie regarding Azure:

- Revamped Windows Azure Portal: Now available in preview form, the new portal is "designed to radically speed up the software delivery process by putting cross-platform tools, technologies and services from Microsoft and its partners in a single workspace," wrote Azure general manager Steven Martin in a blog post. "The new portal significantly simplifies resource management, so you can create, manage, and analyze your entire application as a single resource group rather than through standalone resources like Azure Web Sites, Visual Studio Projects or databases. With integrated billing, a rich gallery of applications and services and built-in Visual Studio Online you can be more agile while maintaining control of your costs and application performance."

- Azure Mobile Services: Offline sync is now available. "You can now write your mobile backend logic using ASP.NET Web API and Visual Studio, taking full advantage of Web API features, third-party Web API frameworks and local and remote debugging," Martin noted. "With Active Directory Single Sign-on integration (for iOS, Android, Windows, or Windows Phone apps) you can maximize the potential of your mobile enterprise applications without compromising on secure access."

- New Azure SDK: Microsoft released the Azure SDK 2.3, making it easier to deploy VMs or sites.

- Single sign-on to SaaS apps via Azure Active Directory Premium is now generally available.

- Each site instance now includes one IP address-based SSL certificate and five SNI-based SSL certs at no additional cost.

- The Visual Studio Online collaboration as a service is now generally available and free for up to five users in a team.

- While Azure already supported .NET, Node.js, PHP and Python, it now supports the native Java language thanks to its partnership with Oracle that was announced last year.

My colleague Keith Ward, editor in chief of sister publication Visual Studio Magazine, has had trouble in the past finding developers who embraced Azure. But he now believes that could change. "Driving all this integration innovation is Microsoft Azure; it's what really allows the magic to happen," he said in a blog post Friday. Furthermore, he Tweeted: "At this point, I can't think of a single reason why a VS dev would use Amazon instead of Azure."

Are you finding Microsoft Azure and the company's Cloud OS hybrid platforms more appealing?

Posted by Jeffrey Schwartz on 04/04/2014 at 1:21 PM

Some of the largest enterprises are moving from monolithic datacenter architectures to private and hybrid clouds, according to the third-annual Open Data Center Alliance (ODCA) membership survey (.PDF).

More than half of the ODCA's members (52 percent) are actively shifting their application development plans toward cloud architectures, and are already running a significant portion of their operations in private clouds.

Meanwhile, security remains the leading inhibitor to cloud adoption, according to this year's report. Security was a key barrier two years ago, when the ODCA presented the findings from its first membership survey at a one-day conference in New York. Regulatory issues and fear of vendor lock-in, coupled with a dearth of open solutions and lack of confidence in the reliability of cloud services, were other inhibitors.

While only 124 ODCA members participated in the survey, the membership consists of blue-chip global organizations, including BMW, Deutsche Bank, Disney, Lockheed Martin, Marriott, UBS and Verizon. While Intel serves as a technical advisor, the group is not run or sponsored by equipment suppliers or service providers (though some of them have joined as user members), meaning the users set the organization's agenda.

One quarter of respondents said 40 to 60 percent of their operations are now run in an internal cloud, up from 10 percent of respondents last year. Meanwhile, 26 percent of respondents said 20 to 39 percent of their operations have moved to internal clouds, up from 17 percent.

By 2016, 38 percent of the overall respondents expect more than 60 percent of their operations to run in private clouds, up from 18 percent last year. As for public cloud adoption, most respondents (79 percent) said about 20 percent of their operations now run using external services. That's about the same as last year (81 percent), though last year's survey had half as many respondents as this year's.

Only 3 percent envision running everything in the public cloud, and 5 percent see running 40 to 60 percent of their operations using a public cloud provider. Thirteen percent forecast that 20 to 39 percent of their operations will run in the public cloud.

Among the most popular cloud service types are:

- Infrastructure as a Service (IaaS): 86 percent

- Service orchestration: 49 percent

- Virtual machine (VM) interoperability: 48 percent

- Service catalog: 39 percent

- Scale-out storage: 39 percent

- Information as a Service: 29 percent

All of the members will use the ODCA's cloud usage models in their procurement processes, the survey showed. The technologies from the usage models that members value most include (in order):

- Software-defined networking (SDN)

- Secure federation in IaaS

- Service orchestration

- Automation

- VM/interoperability

- Secure federation/security monitoring

- Scale-out storage

- Compute as a service

- Secure federation/security provider assurance

- Transparency/standard units measurement for IaaS

- Secure federation/cloud-based identity governance

- Information as a Service

- Transparency/service catalog

- Automation/IO control

- Secure federation/single sign-on authentication

- Secure federation/identity management

- Common management and policy/regulatory framework

- Secure federation/cloud-based identity provisioning

- Transparency/carbon footprint

- Commercial framework master usage model transparency/carbon footprint

- Automation/long-distance workload migration

Posted by Jeffrey Schwartz on 04/03/2014 at 1:02 PM

The price war between Microsoft and Amazon continued Monday, when Microsoft responded to Amazon's recent round of cloud compute and storage price cuts by slashing the rates for its Windows Azure -- soon to be Microsoft Azure -- cloud service.

Microsoft is lowering the price of its Azure compute services by 35 percent and its storage service by 65 percent, the company announced.

"We recognize that economics are a primary driver for some customers adopting cloud, and stand by our commitment to match prices and be best-in-class on price performance," said Steven Martin, general manager of Microsoft Azure business and operations, in a blog post.

Microsoft's price cuts come on the heels of last week's launch of Azure in China.

In addition to cutting prices, Microsoft is adding new tiers of service to Azure. On the compute side, a new tier of instances called Basic consists of virtual machine configurations similar to those in the current Standard tier, but won't include the load balancing or auto-scaling offered in the Standard package. The existing Standard tier will now consist of a range of instances from "extra small" to "extra large." Those instances will cost as much as 27 percent less than they do now.

Martin noted that some workloads, including single instances and those using their own load balancers, don't require the Azure load-balancer. Also, batch processing, dev and test apps are better suited to the Basic tier, which will be comparable to Amazon Web Services (AWS)-equivalent instances, Martin said. Basic instances will be available starting April 3.

Pricing for Azure's memory-intensive instances will be cut by up to 35 percent for Linux instances and 27 percent for Windows Server instances. Microsoft said it will also offer the Basic tier for memory-intensive instances in the coming months.

On the storage front, Microsoft is cutting the price of its Block Blobs by 65 percent, and by 44 percent for Geo Redundant Storage (GRS). Microsoft is also adding a new redundancy tier for Block Blob storage called Zone Redundant Storage (ZRS).

With the new ZRS tier, Microsoft will offer redundancy that stores the equivalent of three copies of a customer's data across multiple locations. GRS, by comparison, lets customers store their data in two regions that are hundreds of miles apart and keeps the equivalent of three copies per region. ZRS, the new middle tier, will be available in the next few months and costs 37 percent less than GRS.
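To make the comparison concrete, here is a minimal sketch of the copy counts and relative pricing described above. The class and variable names are hypothetical, GRS pricing is normalized to 1.0 because no per-gigabyte rate is given here, and treating ZRS as a single region is a modeling assumption (Microsoft says only that the copies span "multiple locations").

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RedundancyTier:
    """Illustrative model of a storage redundancy tier (hypothetical names)."""
    name: str
    regions: int            # number of regions holding the data
    copies_per_region: int  # equivalent full copies kept in each region

    @property
    def total_copies(self) -> int:
        return self.regions * self.copies_per_region


# Assumption: ZRS is modeled as one region with three copies.
zrs = RedundancyTier("ZRS", regions=1, copies_per_region=3)
# GRS: two regions, the equivalent of three copies in each.
grs = RedundancyTier("GRS", regions=2, copies_per_region=3)

# Normalized baseline, not a real rate.
GRS_RELATIVE_PRICE = 1.0
# ZRS is described as costing 37 percent less than GRS.
zrs_relative_price = GRS_RELATIVE_PRICE * (1 - 0.37)

print(zrs.total_copies, grs.total_copies)  # 3 6
print(f"{zrs_relative_price:.2f}")         # 0.63
```

Under these assumptions, ZRS keeps half as many copies as GRS for 63 percent of the price.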

Though Microsoft has committed to matching price cuts by Amazon, the company faced a two-pronged attack last week from Amazon and Google, which not only slashed prices for the first time but finally started offering Windows Server support. While Microsoft has its eyes on Amazon, it needs to look over its shoulder as Google steps up its focus on enterprise cloud computing beyond Google Apps.

One area in which both Amazon and Google have a leg up on Microsoft is their respective Desktop as a Service (DaaS) offerings. As noted last week, Amazon's WorkSpaces DaaS offering, which it announced back in November at its re:Invent customer and partner conference, became generally available. And as reported in February, Google and VMware are working together to offer Chromebooks via the new VMware Horizon DaaS service.

It remains to be seen how big the market is for DaaS and whether Microsoft's entrée is imminent.

Posted by Jeffrey Schwartz on 04/01/2014 at 1:02 PM

Amazon Web Services (AWS) this week made its new cloud-based Desktop as a Service (DaaS) offering generally available.

The release of Amazon WorkSpaces, announced in November at its re:Invent customer and partner conference in Las Vegas, will provide a key measure for whether there's a broad market for DaaS.

But VMware and Google also have ambitious plans with their DaaS services, which they launched last month. VMware Horizon, a DaaS offering priced similarly to Amazon WorkSpaces, is the result of VMware's Desktone acquisition last year. Giving VMware more muscle in the game, the company teamed up with Google to enable the latter to offer its Chromebooks with the VMware Horizon View service.

When announcing Amazon WorkSpaces, Amazon only offered a "limited preview" of the service, which provides a virtual Windows 7 experience. A wide variety of use cases were tested, from corporate desktops to engineering workstations, said AWS evangelist Jeff Barr in a blog post this week announcing the general availability of the service. Barr identified two early testers, Peet's Coffee & Tea and ERP supplier WorkWise.

The company also added a new feature called Amazon WorkSpaces Sync. "The Sync client continuously, automatically, and securely backs up the documents that you create or edit in a WorkSpace to Amazon S3," Barr said. "You can also install the Sync client on existing client computers (PC or Mac) in order to have access to your data regardless of the environment that you are using."

While Amazon is by far the dominant Infrastructure as a Service (IaaS) provider, it's too early to say whether it -- or anyone -- will upend the traditional desktop market. But as Microsoft gets ready to pull the plug on Windows XP in less than two weeks, DaaS providers are anticipating an upsurge.

Meanwhile, Amazon once again yesterday slashed the price of its core cloud computing and storage services. That is price cut No. 42 since 2008, Barr noted in a separate blog post, and it includes EC2, S3, RDS, ElastiCache and Elastic MapReduce. The price cuts come just two days after Google cut the price of its cloud services.

Amazon's price cuts take effect April 1.

Posted by Jeffrey Schwartz on 03/27/2014 at 4:43 PM

Google is the latest major IT provider looking to gain wider usage of its enterprise cloud services with the addition of new Managed Virtual Machines, designed to offer the best attributes of IaaS and PaaS.

The company also added several new offerings to its cloud portfolio, and -- looking to make a larger dent in the market -- slashed the price of its compute, storage and other service offerings.

Speaking at the Google Platform Live event in San Francisco, Urs Hölzle, the senior vice president at Google overseeing the company's datacenter and cloud services, talked up the appeal of PaaS services like Google App Engine, which provides a complete managed stack but lacks the flexibility of services like IaaS. The downside of IaaS, of course, is it requires extensive management.

Google's Managed VMs give enterprises the complete managed platform of PaaS, while allowing a customer to add VMs. Those using Google App Engine who need more control can add a VM to the company's PaaS service. This is not typically offered with PaaS offerings and often forces companies to use IaaS, even though they'd rather not, according to Hölzle.

"They are virtual machines that run on Compute Engine but they are managed on your behalf with all of the goodness of App Engine," Hölzle said, explaining Managed VMs. "This gives you a new way to think about building services. So you can start with an App Engine application and if you ever hit a point where there's a language you want to use or an open source package that you want to use that we don't support, with just a few configuration line changes you can take part of that application and replace it with an equivalent virtual machine. Now you have control."

Google also used the event to emphasize lower and more predictable pricing. Given that Amazon Web Services (AWS) and Microsoft frequently slash their prices, Google had little choice but to play the same game. That's especially the case given its success so far.

"Google isn't a leader in the cloud platform space today, despite a fairly early move in Platform as a Service with Google App Engine and a good first effort in Infrastructure as a Service with Google Compute Engine in 2013," wrote Forrester analyst James Staten in a blog post. "But its capabilities are legitimate, if not remarkable."

Hölzle said 4.75 million active applications now run on the Google Cloud Platform, while the Google App Engine PaaS sees 28 billion requests per day, with the data store processing 6.3 trillion transactions per month.

While Google launched one of the first PaaS offerings in 2008, it was one of the last major providers to add an IaaS and only made the Google Compute Engine generally available back in December. Meanwhile, just about every major provider is trying to catch up with AWS, both in terms of services offered and market share.

"Volume and capacity advantages are weak when competing against the likes of Microsoft, AWS and Salesforce," Staten noted. "So I'm not too excited about the price cuts announced today. But there is real pain around the management of the public cloud bill."

To that point, Google announced Sustained-Use discounts. Rather than requiring customers to predict future usage and sign on for reserved instances for anywhere from one to three years, Google applies discounts of 30 percent off on-demand pricing once usage exceeds 25 percent of a given month, Hölzle said.

"That means if you have a 24x7 workload that you use for an entire month like a database instance, in effect you get a 53 percent discount over today's prices. Even better, this discount applies to all instances of a certain type. So even if you have a virtual machine that you restart frequently, as long as that virtual machine in aggregate is used more than 25 percent of the month, you get that discount."

Overall, price cuts for the other pay-as-you-go services range from 30 to 50 percent, depending on the service. Some of the reductions are as follows:

- Compute Engine is reduced by 32 percent across all sizes, regions and classes.

- App Engine pricing for instance-hours is reduced by 37.5 percent, dedicated memcache by 50 percent and data store writes by 33 percent. Other services, including SNI SSL and PageSpeed, are now available with all applications at no added cost.

- Cloud Storage is now priced at a consistent 2.6 cents per gigabyte, approximately 68 percent lower.

- Google BigQuery on-demand prices are reduced by 85 percent.
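Hölzle's 53 percent figure for a 24x7 workload can be roughly reproduced by compounding two of the numbers above. The sketch below assumes the figure combines the 32 percent Compute Engine reduction with the 30 percent Sustained-Use discount; that reading is an interpretation, not something Google spelled out here, and the function name is hypothetical.

```python
def combined_discount(*cuts: float) -> float:
    """Compound successive fractional price cuts into one overall cut."""
    remaining = 1.0
    for cut in cuts:
        remaining *= 1.0 - cut
    return 1.0 - remaining


list_price_cut = 0.32  # Compute Engine reduction across all sizes
sustained_use = 0.30   # Sustained-Use discount for a full month of usage

overall = combined_discount(list_price_cut, sustained_use)
print(f"{overall:.1%}")  # 52.4%
```

The result, about 52.4 percent, is in the neighborhood of the 53 percent quoted, which suggests the two discounts stack multiplicatively rather than simply adding up to 62 percent.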

Google also extended the streaming capability of its BigQuery big data service, raising the ingest rate from 1,000 rows per second at its December launch to 100,000 now. "What that means is you can take massive amounts of data that you generate and as fast as you can generate them and send it to BigQuery," Hölzle said. "You can start analyzing it and drawing business conclusions from it without setting up data warehouses, without building sharding, without doing ETL, without doing copying."

The company expanded its menu of server operating system instances available with the Google Compute Engine IaaS with the addition of SUSE Linux, Red Hat Enterprise Linux and Windows Server.

"If you're an enterprise and you have workloads that depend upon Windows, those are now open for business on the Google Cloud Platform," said Greg DeMichillie, a director of product management at Google. "Our customers tell us they want Windows, so of course we are supporting Windows." Back in December when Google's IaaS launched, I noted Windows Server wasn't an available option.

Now it is. There is a caveat, though: The company, at least for now, is only offering Windows Server 2008 R2 Datacenter Edition, noting it's still the most widely deployed version of Microsoft's server OS. There was no mention of whether or when Google will add newer versions of Windows Server. The "limited preview" is available now.

Posted by Jeffrey Schwartz on 03/26/2014 at 9:30 AM