How-To

Hyper-V Deep Dive, Part 4: More Networking Enhancements

Enhancements to the newest version of Hyper-V can affect the design of your networks. Here's a look at NIC Teaming and some new data center design options.

In the last few parts, we looked at network virtualization and some virtual machine networking improvements. Let's look at one more network enhancement, NIC Teaming, then take a quick look at some of Hyper-V's new data center design options.

Currently, approximately 75 percent of all servers in medium and large businesses use NIC teaming to provide aggregated bandwidth and fault tolerance. Microsoft's support stance has always been a bit difficult, however, since each vendor has its own implementation of NIC teaming (and will only team NICs of its own make). When troubleshooting network issues, a common request was to disable the team to see if it was part of the problem. NIC teaming, also known as Load Balancing and Failover (LBFO), is now natively built into the platform and can team up to 32 NICs from different vendors and with different speeds; you could even team wired and wireless interfaces together, although mixing speeds or media types isn't really recommended.

Teams can be configured in a switch-independent mode, which is suitable where you have unmanaged switches or in situations where you don't have access to change the configuration of switches. If your only aim is redundancy, this works fine -- just use two NICs and set one of them in standby mode and it'll take over when the cleaner accidentally rips out a cable from the active NIC. This mode also has the benefit that each NIC can be connected to a different physical switch, providing redundancy at the switch level.
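The active/standby behavior described above can be sketched as a toy model in Python. This is only an illustration of the idea, not how Windows implements it; the `TeamMember` class and the `carrying_traffic` function are hypothetical names invented for this sketch.

```python
from dataclasses import dataclass

@dataclass
class TeamMember:
    name: str
    standby: bool = False   # True for the designated standby adapter
    link_up: bool = True    # False once the cable is pulled

def carrying_traffic(members):
    """Return the names of the team members that currently carry traffic."""
    configured_active = [m for m in members if not m.standby]
    healthy_active = [m for m in configured_active if m.link_up]
    result = [m.name for m in healthy_active]
    # The standby adapter steps in only when an active member has lost its link.
    if len(healthy_active) < len(configured_active):
        result += [m.name for m in members if m.standby and m.link_up]
    return result

team = [TeamMember("NIC1"), TeamMember("NIC2", standby=True)]
print(carrying_traffic(team))   # only the active adapter

team[0].link_up = False         # the cleaner strikes
print(carrying_traffic(team))   # the standby adapter takes over
```

Because neither adapter depends on switch-side configuration in this mode, each one can be plugged into a different physical switch.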

If you need load balancing and would like all NICs in the team to be active, switch-independent mode can be set to use either the Address Hash or the Hyper-V Port load balancing mode, and each works well in one particular situation. With Address Hash, outgoing traffic is balanced across all interfaces but incoming traffic only goes through one NIC. This works for scenarios such as Web servers and media servers, where there is a lot of outgoing traffic but little incoming traffic. The other mode, Hyper-V Port, works well if you have many VMs on one host but no single VM needs more inbound or outbound bandwidth than a single NIC in the team can offer. This mode distributes incoming and outgoing traffic across the team NICs by assigning each VM to an individual NIC.
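To make the difference between the two load balancing modes concrete, here is a minimal Python sketch. It is a toy model under stated assumptions, not Microsoft's algorithm: the CRC32 hash, the round-robin VM placement, and the names `address_hash_nic` and `hyperv_port_nic` are all invented for illustration.

```python
import zlib

NICS = ["NIC1", "NIC2"]

def address_hash_nic(src_ip, src_port, dst_ip, dst_port, nics=NICS):
    """Address hash: pick the outbound NIC per flow from a hash of its endpoints.

    Assumption: CRC32 stands in for whatever hash the real team uses.
    """
    key = f"{src_ip}:{src_port}-{dst_ip}:{dst_port}".encode()
    return nics[zlib.crc32(key) % len(nics)]

def hyperv_port_nic(vm_names, nics=NICS):
    """Hyper-V port: pin each VM's switch port to one NIC.

    Assumption: simple round-robin placement in the order the VMs are listed.
    """
    return {vm: nics[i % len(nics)] for i, vm in enumerate(vm_names)}

# Address hash: different flows from the same server can leave on different NICs,
# but a given flow always maps to the same NIC.
for client_port in (50001, 50002, 50003):
    print(client_port, address_hash_nic("10.0.0.5", 80, "192.168.1.20", client_port))

# Hyper-V port: all traffic for a VM, in both directions, stays on its assigned NIC.
print(hyperv_port_nic(["web01", "web02", "sql01"]))
# {'web01': 'NIC1', 'web02': 'NIC2', 'sql01': 'NIC1'}
```

The second mapping shows why Hyper-V Port caps each VM at the speed of a single team member: a VM never spreads its traffic over more than the one NIC it is pinned to.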

If you have other network situations, switch-dependent mode is probably a better choice, and it also comes in two flavors: static or Link Aggregation Control Protocol (LACP, standardized as IEEE 802.1AX and formerly known as IEEE 802.3ad). Static provides no recognition of incorrectly connected cables and is only practical in a very static (pardon the pun) environment where the network configuration doesn't change very often. LACP identifies teams automatically and should be able to recognize expansion and changes to a team as well.

|

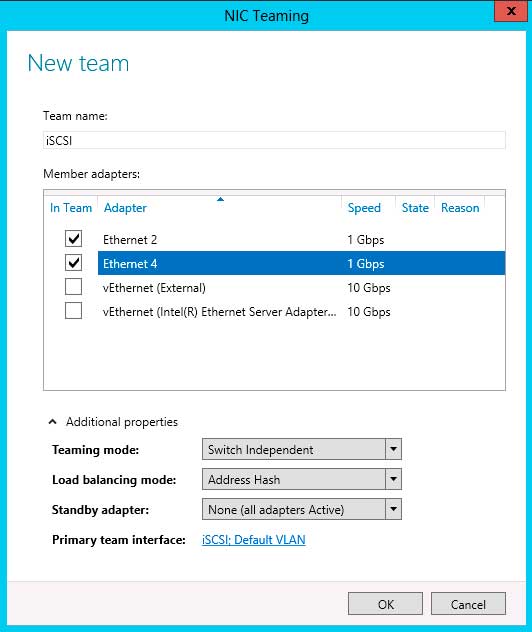

Figure 1. Creating a team from one or more NICs is easy with just a few clicks. (Click image to view larger version.) |

Configuring NIC teaming is straightforward: in the Local Server part of Server Manager there's a link for NIC teaming, which opens the team manager when you click it. Click Tasks, then New Team, and select which adapters should be part of the team. Click Additional properties to choose the team mode, the load balancing mode and whether you'd like a standby adapter. Once a NIC is part of a team, its properties only list the Microsoft Network Adapter Multiplexor Protocol as an enabled protocol, whereas the new team appears as an interface with configurable protocols.

If you need to use VLANs together with a NIC team, you can create multiple team interfaces (up to 32) for a team, each of which can respond to a particular VLAN ID, as long as you put your switch ports into trunk mode. You can even create a NIC team with a single NIC and then use team interfaces to separate traffic based on VLANs. If you have multiple VMs on a host that you want to respond to different VLAN IDs, don't use team interfaces; rather, set up VLAN access through the Hyper-V switch and the properties of each VM's virtual NIC.

If you want to use a NIC team inside a VM, perhaps because you're using SR-IOV adapters (see part three), make sure that each Hyper-V switch port connected to a teamed VM adapter either allows MAC address spoofing or has the AllowTeaming parameter set to On. You can do this with Set-VMNetworkAdapter in PowerShell or in the GUI.

As with almost everything in Windows Server 2012, you can configure NIC teaming using PowerShell; the cmdlets live in the NetLbfo module (New-NetLbfoTeam, for example).

|

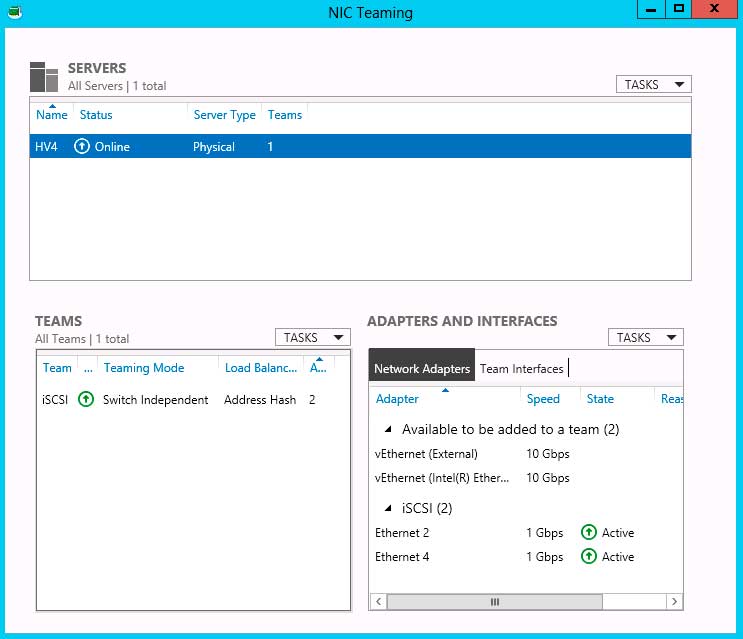

Figure 2. Once you've created teams they can easily be managed from this simple UI. (Click image to view larger version.) |

New Options for Hyper-V Data Center Design

In large data centers, be aware that Windows Server 2012 offers support for DCTCP (Data Center TCP), which works with switches that support ECN (Explicit Congestion Notification, RFC 3168). This lets TCP detect the extent of congestion, not just its presence as ordinary TCP does. The effect, especially in networks with large amounts of data traffic, is improved throughput as well as much lower buffer space used in switches.
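The phrase "extent of congestion" is easier to see with numbers. The Python sketch below follows the published DCTCP design (as described in RFC 8257), in which the sender keeps a running estimate, alpha, of the fraction of ECN-marked packets and shrinks its congestion window in proportion to it. It is a simplified illustration, not the Windows implementation; the function names are invented here, and the gain g = 1/16 is the value suggested in the RFC rather than anything taken from this article.

```python
def update_alpha(alpha, marked, total, g=1 / 16):
    """Update the running estimate of the fraction of ECN-marked packets.

    alpha  : previous estimate, between 0 and 1
    marked : packets acknowledged with an ECN mark in the last window
    total  : packets acknowledged in the last window
    g      : smoothing gain (assumed value, see lead-in)
    """
    fraction = marked / total if total else 0.0
    return (1 - g) * alpha + g * fraction

def dctcp_cwnd(cwnd, alpha):
    """DCTCP: shrink the window in proportion to the measured congestion."""
    return max(1.0, cwnd * (1 - alpha / 2))

def classic_cwnd(cwnd):
    """Ordinary TCP with ECN: any congestion signal halves the window."""
    return max(1.0, cwnd / 2)

# Light congestion: 2 of 10 packets marked in every window for 50 windows.
alpha = 0.0
for _ in range(50):
    alpha = update_alpha(alpha, marked=2, total=10)

print(round(alpha, 3))          # estimate settles near the marked fraction
print(dctcp_cwnd(100.0, alpha)) # gentle reduction
print(classic_cwnd(100.0))      # always halves
```

With alpha at 0.2, a 100-segment window shrinks to about 90 segments, where a classic halving would drop it to 50. When every packet is marked (alpha of 1), both approaches halve the window, so DCTCP only backs off as hard as ordinary TCP when congestion is severe.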

If you're looking to implement IPAM (IP Address Management), a new feature in Windows Server 2012 for managing IP addresses by integrating with your DHCP and DNS servers (and saying goodbye to that Excel spreadsheet), and you're using SCVMM 2012 to manage your virtualized infrastructure, SP1 will offer a script (Ipamintegration.ps1) to export all IP addresses assigned by SCVMM to IPAM on a scheduled basis.

The network enhancements we have covered in these four parts, along with other new and improved features for storage and scalability we'll cover in the next few parts (such as running VMs from file shares), bring new options when you're designing clusters. Many new clusters are moving from separate networks for storage and other network traffic to a single fabric, and also from multiple 1 Gb/s Ethernet connections to fewer 10 Gb/s (or faster) connections. This converged fabric principle can take many forms, and different vendors have different approaches, but overall Hyper-V in Windows Server 2012 has this new world covered: Network Virtualization and SDN; a powerful, extensible virtual switch that can be centrally managed using SCVMM 2012 SP1; support for network virtualization gateways; SR-IOV, dVMQ and QoS; and built-in NIC teaming.

About the Author

Paul Schnackenburg has been working in IT for nearly 30 years and has been teaching for over 20 years. He runs Expert IT Solutions, an IT consultancy in Australia. Paul focuses on cloud technologies such as Azure and Microsoft 365 and how to secure IT, whether in the cloud or on-premises. He's a frequent speaker at conferences and writes for several sites, including virtualizationreview.com. Find him at @paulschnack on Twitter or on his blog at TellITasITis.com.au.