In-Depth

Hyper-V: It's All About the Storage

The biggest update yet to the Microsoft virtualization solution makes major improvements in the way it uses storage.

There's no doubt Microsoft is serious about making Hyper-V a contender for enterprise virtualization and private cloud. The Windows Server 2012 release was full of new features and scalability increases. Now, less than 12 months later, Hyper-V in Windows Server 2012 R2 Preview comes with a number of new features and improvements, particularly with storage. In this article, I'll cover those features and how they fit into common business scenarios.

Before I look at storage options, I'll cover some other features that relate to storage, such as Generation 2 VMs, zero-downtime upgrade scenarios and Hyper-V Replica improvements.

Generation 2 VMs

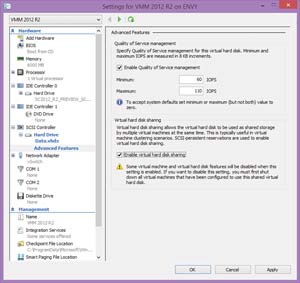

If you've been managing vSphere, you're used to new virtual hardware versions arriving with each release. Now Hyper-V 2012 R2 is following suit. Generation 2 VMs are based on the Unified Extensible Firmware Interface (UEFI) instead of a traditional BIOS. More important, they use less emulated hardware. Gone are serial ports, PS/2 ports and other devices the OS needed in order to "think" it was running on physical hardware (see Figure 1). Also, only 64-bit versions of Windows Server 2012 and Windows Server 2012 R2, along with Windows 8 and Windows 8.1, are supported as guest OSes.

Secure Boot is available (and turned on by default) in Generation 2 VMs, and the synthetic network interface controller (NIC) now supports Preboot Execution Environment (PXE) booting. In first-generation VMs, you had to use the legacy NIC to PXE boot and install the OS, then swap over to a synthetic NIC. There's no longer an option to use IDE-connected virtual hard drives (VHDs), and you can now boot from virtual SCSI. While a Generation 2 VM boots about 20 percent faster and the OS installs about 50 percent faster, there's no performance benefit once the VM is running.

Cross-Version Upgrading

Application downtime affects other teams besides the infrastructure team, so Microsoft needed to provide a smoother upgrade path from Windows Server 2012 to Windows Server 2012 R2. That's exactly what cross-version live migration offers. You can create a new cluster with a couple of Hyper-V 2012 R2 hosts, then live migrate VMs from a Hyper-V 2012 cluster to the Hyper-V 2012 R2 cluster. This gives you high availability (HA) throughout the process with no application downtime.

You should start by upgrading your System Center infrastructure to System Center 2012 R2. Upgrading System Center Virtual Machine Manager (VMM) is particularly important; you do this by disconnecting the SQL Server database from the VMM 2012 SP1 server and attaching it to a new VMM 2012 R2 server. Then upgrade the compute nodes and, finally, the storage if you're using Server Message Block (SMB) file shares. You can upgrade the Hyper-V hosts in a rolling fashion, moving each host from Windows Server 2012 to Windows Server 2012 R2 as its VMs are migrated off. At TechEd 2013, an informative session, "Upgrading Your Private Cloud with Windows Server 2012 R2," covered the proper upgrade steps; it's now online at bit.ly/193NwOA.
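
The rolling approach can be modeled in a few lines of Python. This is a hypothetical sketch, not a VMM or Hyper-V API: the `Host` class, the `rolling_upgrade` function and the least-loaded placement rule are assumptions made for illustration. It shows the key property of cross-version live migration: each 2012 host is drained into the 2012 R2 cluster before it's upgraded, so every VM keeps running on some host throughout.

```python
from dataclasses import dataclass, field

@dataclass
class Host:
    name: str
    version: str                          # "2012" or "2012R2"
    vms: list = field(default_factory=list)

def rolling_upgrade(old_cluster, new_cluster):
    """Drain each Hyper-V 2012 host into the 2012 R2 cluster, then upgrade it
    and add it to that cluster. Returns a log of the steps taken."""
    log = []
    for host in list(old_cluster):        # iterate over a copy; we remove as we go
        while host.vms:
            vm = host.vms.pop()
            # Assumption: place each VM on the least-loaded 2012 R2 host.
            target = min(new_cluster, key=lambda h: len(h.vms))
            target.vms.append(vm)         # stands in for a cross-version live migration
            log.append(f"live migrate {vm}: {host.name} -> {target.name}")
        host.version = "2012R2"           # the now-empty host is upgraded
        old_cluster.remove(host)
        new_cluster.append(host)
        log.append(f"upgraded {host.name}, joined 2012 R2 cluster")
    return log
```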

Hyper-V Replica

Hyper-V Replica, introduced in Windows Server 2012, lets you replicate the configuration and virtual hard disks of a running VM to another host for standby disaster recovery.

In Hyper-V 2012, replication frequency was fixed at every five minutes, and each VM could be replicated only from the primary to a single secondary host. Hyper-V 2012 R2 is more flexible. Besides five minutes, you can set the replication frequency to every 30 seconds when you have plenty of bandwidth available (for example, within the same datacenter) or every 15 minutes when bandwidth is limited or unreliable.
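
To put the three frequencies in perspective, this Python sketch counts replication cycles per hour and gives a rough upper bound on how much recent change you could lose if the primary fails just before a cycle finishes. The function names and the simple interval-plus-transfer-time model are assumptions for illustration, not how Hyper-V Replica itself reports recovery points.

```python
# Replication frequencies available in Hyper-V 2012 R2, in seconds.
# (Hyper-V 2012 offered only the 300-second setting.)
REPLICATION_INTERVALS_S = (30, 300, 900)

def cycles_per_hour(interval_s: int) -> int:
    """How many replication cycles run per hour at a given frequency."""
    return 3600 // interval_s

def worst_case_loss_s(interval_s: int, transfer_s: float = 0.0) -> float:
    """Rough upper bound on the window of changes lost if the primary fails just
    before a cycle completes: one full interval plus the time to ship a cycle.
    This simple model is an assumption, not Hyper-V's own calculation."""
    return interval_s + transfer_s

for interval in REPLICATION_INTERVALS_S:
    print(f"{interval:>4}s: {cycles_per_hour(interval):>3} cycles/hour, "
          f"up to ~{worst_case_loss_s(interval):.0f}s of changes at risk")
```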

You can also make replication a multistep process, with a VM replicated from one host to another (perhaps in the same datacenter) and then again to an off-site host. Hyper-V Replica also lets you inject IP addressing on the replica host so your VM can function on a different network. In Hyper-V 2012, this worked only for Windows Server 2012 VMs; in Hyper-V 2012 R2, it also works for Linux VMs.

If you're considering Hyper-V Replica, you should also look at the free capacity planning tool, which helps you work out your CPU, storage and networking requirements. If you have limited WAN link capacity, its best feature is the throughput calculator, which replicates a temporary 10GB VM from the primary to the secondary host. Let it run for at least eight hours to get a good picture. It will clearly show how much bandwidth is available for Hyper-V replication, along with latency figures.
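
Once the throughput calculator has given you a realistic bandwidth figure, a back-of-the-envelope check is to compare it with the rate at which your VMs change. In this hypothetical Python sketch, `changed_bytes_per_interval` is a number you'd estimate for your own workload, and the 80 percent headroom is an arbitrary assumption, not a Microsoft recommendation.

```python
def required_mbps(changed_bytes_per_interval: float, interval_s: int) -> float:
    """Average bandwidth (megabits/s) needed to ship one interval's changes
    before the next interval starts."""
    return changed_bytes_per_interval * 8 / interval_s / 1_000_000

def fits_link(changed_bytes_per_interval: float, interval_s: int,
              measured_mbps: float, headroom: float = 0.8) -> bool:
    """True if replication would use no more than `headroom` (an assumed 80%)
    of the throughput measured by the calculator."""
    return required_mbps(changed_bytes_per_interval, interval_s) <= measured_mbps * headroom

# Hypothetical workload: 150MB of changed data per five-minute interval.
print(required_mbps(150_000_000, 300))   # -> 4.0 (Mbps)
```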

You can use Windows network Quality of Service (QoS) to manage replication traffic. If your environment includes WAN accelerators, Microsoft has tested Hyper-V replication with several of them and seen performance gains. For upgrade scenarios, you can replicate from Windows Server 2012 Hyper-V to Windows Server 2012 R2 Hyper-V, but only at the standard five-minute interval.

The icing on the cake is the new Hyper-V Recovery Manager. It's a Windows Azure service currently available only in limited preview. It integrates with VMM 2012 R2 to orchestrate multiple VM failovers from one location to another for testing or for actual disaster recovery. The VMs aren't stored in Windows Azure -- Recovery Manager simply coordinates the process between sites.

Where to Store VMs?

For HA, you need shared storage and clustering. Hyper-V works great for this with either Fibre Channel or iSCSI SANs. Another option that first became available in Windows Server 2012 was using SMB 3.0 shares on continuously available Scale-Out File Server (SOFS) clusters.

Microsoft is continuing to strengthen the option of low-cost, commodity hard drives in shared serial-attached SCSI (SAS) enclosures in Windows Server 2012 R2 by adding storage tiering support. You can achieve excellent performance by adding a small number of fast solid-state drives (SSDs) to a larger number of ordinary, slow hard drives in the same storage space. The Windows Server 2012 R2 file server will automatically move frequently used blocks of storage to the faster SSD tier and move them back to the hard drive tier when other blocks get "hotter."

When you really need a particular file to be on the SSD tier (such as a virtual desktop infrastructure [VDI] gold master image), you can manually pin it to that tier. Clustered Windows file servers, connected to shared JBOD SAS enclosures with tiered storage -- and combining the performance of SSD with the capacity of SAS hard disk drives through SMB 3.0 file shares -- can give you SAN-comparable performance and management at a fraction of the cost.
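
The placement decision behind tiering and pinning can be sketched as follows. This is a simplified model, not the Storage Spaces tiering engine: the block IDs, the access log and the `plan_tiers` function are hypothetical, and the real engine tracks heat and moves data on its own schedule. Pinned items (such as a VDI gold master) always claim SSD space first, and the remaining capacity goes to the most frequently accessed blocks.

```python
from collections import Counter

def plan_tiers(access_log, ssd_capacity_blocks, pinned=frozenset()):
    """Return the set of block IDs to place on the SSD tier.

    Pinned items always go to SSD; the rest of the SSD capacity goes to the
    most frequently accessed ("hottest") blocks in access_log. Blocks not
    returned stay on (or move back to) the hard drive tier.
    """
    ssd = set(pinned)
    if len(ssd) > ssd_capacity_blocks:
        raise ValueError("pinned data exceeds SSD tier capacity")
    heat = Counter(access_log)                 # block ID -> access count
    for block, _count in heat.most_common():   # hottest first
        if len(ssd) >= ssd_capacity_blocks:
            break
        ssd.add(block)
    return ssd
```

Re-running the plan with a newer access log is what moves a block back down when other blocks get hotter.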

Storage spaces are now more resilient, with the option to select three-way mirroring (along with the existing two-way mirroring and parity). If you aren't familiar with how storage spaces protect data, note that protection isn't disk-to-disk. Instead, the data is divided into slabs that are spread across all available disks.
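
A toy model makes the slab idea concrete. This Python sketch is an illustration under simplifying assumptions, not the actual Storage Spaces allocator: it deals out each slab's mirror copies round-robin across all disks, never placing two copies of the same slab on one disk, then checks whether every slab survives a given set of disk failures. With three-way mirroring, any two disks can fail.

```python
def allocate_slabs(num_slabs, disks, copies):
    """Deal each slab's copies round-robin across all disks so that no disk
    holds two copies of the same slab (copies=2 or 3 for two- or three-way mirroring)."""
    if copies > len(disks):
        raise ValueError("need at least as many disks as copies")
    layout, cursor = [], 0
    for _ in range(num_slabs):
        layout.append([disks[(cursor + i) % len(disks)] for i in range(copies)])
        cursor = (cursor + copies) % len(disks)
    return layout

def survives(layout, failed_disks):
    """True if every slab still has at least one copy on a healthy disk."""
    return all(any(d not in failed_disks for d in slab) for slab in layout)

disks = ["d0", "d1", "d2", "d3", "d4"]
three_way = allocate_slabs(5, disks, copies=3)
print(three_way[:2])   # [['d0', 'd1', 'd2'], ['d3', 'd4', 'd0']]
```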

Controlling storage I/O for VMs can be crucial, especially in a multi-tenant environment, and this capability is finally coming to Hyper-V 2012 R2. You can define maximum and minimum IOPS for each virtual hard disk. IOPS are normalized to 8KB units: any I/O of 8KB or less counts as one, and larger I/Os count as one per 8KB, rounded up (a 20KB I/O, for instance, counts as 3 IOPS). This is I/O management on an individual host basis. If you have a large cluster where one or more hosts have "hungry" VMs, there's no central mechanism for controlling I/O at the cluster level.
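
The 8KB normalization rule is simple arithmetic: an I/O counts as the number of 8KB units it spans, rounded up, with a minimum of one. This Python sketch reproduces that counting rule; the function names and the one-second check against a maximum IOPS setting are hypothetical and don't reflect how Hyper-V enforces the limit internally.

```python
import math

NORMALIZATION_BYTES = 8 * 1024  # the 8KB counting unit

def normalized_ios(io_size_bytes: int) -> int:
    """Count an I/O as ceil(size / 8KB); anything 8KB or smaller counts as one."""
    return max(1, math.ceil(io_size_bytes / NORMALIZATION_BYTES))

def over_maximum(io_sizes_in_one_second, max_iops: int) -> bool:
    """Hypothetical check: does one second of I/O exceed a disk's maximum IOPS?"""
    return sum(normalized_ios(size) for size in io_sizes_in_one_second) > max_iops

print(normalized_ios(20 * 1024))  # -> 3, matching the 20KB example
```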

No modern storage solution is complete without deduplication. Windows Server 2012 offered it with excellent results for files not in use, especially VHD libraries. In Windows Server 2012 R2, deduplication is also supported for running VMs, but only in a VDI scenario.

This caveat is a bit of a letdown, but my guess is Microsoft simply hasn't yet been able to test deduplication across all the possible server VM scenarios. Full support for any running VM should be coming. Interestingly, deduplication uses buffered I/O (which goes through the file system cache), whereas Hyper-V normally uses unbuffered I/O. Because VDI VMs share many identical chunks, a chunk cached for one VM can serve reads from many others, so performance can actually be better with deduplication turned on.
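
This toy deduplicating store in Python illustrates both points: identical chunks are stored once, and because VMs built from the same image share chunks, a chunk read into the cache for one VM serves later reads from others. The fixed 4KB chunk size, SHA-256 hashing and simple set-based cache are assumptions for illustration; Windows Server deduplication uses its own chunking and storage format.

```python
import hashlib

CHUNK = 4096  # fixed-size chunks: a simplification, not how Windows dedup chunks data

class ChunkStore:
    """Toy deduplicating store: each unique chunk is stored once and every file
    is just a list of chunk digests. Reads go through a simulated cache."""

    def __init__(self):
        self.chunks = {}        # SHA-256 digest -> chunk bytes
        self.files = {}         # file name -> ordered list of digests
        self.cache = set()      # digests already read into the simulated cache
        self.cache_hits = 0

    def put(self, name: str, data: bytes) -> None:
        digests = []
        for offset in range(0, len(data), CHUNK):
            piece = data[offset:offset + CHUNK]
            digest = hashlib.sha256(piece).hexdigest()
            self.chunks.setdefault(digest, piece)   # keep only the first copy
            digests.append(digest)
        self.files[name] = digests

    def get(self, name: str) -> bytes:
        out = []
        for digest in self.files[name]:
            if digest in self.cache:
                self.cache_hits += 1                 # shared chunk served from cache
            else:
                self.cache.add(digest)
            out.append(self.chunks[digest])
        return b"".join(out)

    def stored_bytes(self) -> int:
        return sum(len(c) for c in self.chunks.values())
```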

Guest Clustering Using Shared VHDX

Clustering your hypervisors alone can't guarantee the availability of services and applications. If a host goes down, the VMs running on that host will automatically restart on other hosts in the cluster. As far as your users are concerned, though, this is still an outage. Even if a file server VM comes back a few minutes later, all unsaved changes in open documents are lost.

For true continuous availability, you can create guest clusters with multiple VMs housed on different hosts. That way, if a host goes down, users shouldn't notice any downtime because another VM in the guest cluster continues providing service. Hyper-V has supported this since the first version, but guest clusters could connect only to iSCSI SANs, because iSCSI was the only way to give VMs the required shared storage.

Hyper-V in Windows Server 2012 added virtual Fibre Channel, which provides full support for connecting VMs to a Fibre Channel SAN, including during live migrations. However, this type of guest clustering requires some planning and configuration, and it isn't suitable for hosted environments because it requires direct access to the underlying hardware.

Hyper-V 2012 R2 adds a new option called shared VHDX, which provides shared storage for guest clusters through a single shared virtual hard disk file (see Figure 2). Store this file on highly available storage, such as a Cluster Shared Volume or an SOFS file share. Failover clustering's anti-affinity class names let you ensure guest cluster nodes aren't co-located on the same host. Both the Hyper-V hosts and the file storage need to be running Windows Server 2012 R2.

Figure 2. Storage traffic is controlled at the virtual hard drive level. This is also where you enable shared VHDX for guest clusters.
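
The anti-affinity class names mentioned above can be sketched as a placement rule: no two guest cluster nodes that share a class name should land on the same host. In this hypothetical Python sketch, the function, the class name `SQLGuest` and the least-loaded tiebreak are all assumptions. For simplicity it treats the class as a hard rule, so check the Failover Clustering documentation for how the cluster actually behaves when no separate host is available.

```python
def place_guest_nodes(nodes, hosts, load):
    """Place guest cluster nodes so that no two nodes sharing an anti-affinity
    class land on the same host, preferring the least-loaded eligible host.

    nodes: list of (vm_name, anti_affinity_class) pairs
    hosts: list of host names
    load:  dict of host name -> current VM count (updated in place)
    """
    placement = {}
    hosts_by_class = {}  # class name -> hosts already running a node of that class
    for vm, cls in nodes:
        taken = hosts_by_class.setdefault(cls, set())
        eligible = [h for h in hosts if h not in taken]
        if not eligible:
            # Simplification: treat anti-affinity as a hard rule and fail.
            raise RuntimeError(f"not enough hosts to keep {cls} nodes apart")
        host = min(eligible, key=lambda h: load[h])
        placement[vm] = host
        taken.add(host)
        load[host] += 1
    return placement
```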

There are some limitations to shared VHDX. You can resize the file only when all guest cluster nodes are shut down. You can safely back it up only from a node within the guest cluster, not at the host level. Your VHDX file can be fixed-size or dynamically expanding, but not a differencing disk. Guest cluster nodes support normal live migration; however, you can't do a live storage migration of a VM with a shared VHDX file. To move that file, you need to shut down all cluster nodes, relocate the file and reconnect it to all guest nodes.

Because live migrated VMs experience a short brownout period, Microsoft has raised the default number of missed heartbeats that triggers a failover from five to 10. The heartbeat interval is set to 1 second by default, and you can increase it to a maximum of two seconds.
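
The time before a failover is triggered is roughly the number of missed heartbeats multiplied by the heartbeat interval, so the new default stretches detection from about 5 to about 10 seconds, or up to 20 seconds with a two-second interval. The Python helper below is only that arithmetic. For same-subnet traffic, these settings correspond to the failover cluster properties SameSubnetThreshold and SameSubnetDelay, but the function itself is hypothetical.

```python
MAX_INTERVAL_S = 2.0  # maximum heartbeat interval described above

def detection_time_s(missed_heartbeats: int, interval_s: float = 1.0) -> float:
    """Approximate seconds of silence before a failover is triggered."""
    if not 0 < interval_s <= MAX_INTERVAL_S:
        raise ValueError("heartbeat interval must be above 0 and at most 2 seconds")
    return missed_heartbeats * interval_s

print(detection_time_s(5), detection_time_s(10), detection_time_s(10, 2.0))  # 5.0 10.0 20.0
```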

Enhanced VM Interaction

In Hyper-V 2012, copying files and text to and from VMs meant setting up a Remote Desktop connection to the VM. That doesn't work during OS installation and never worked for Linux VMs. In less security-conscious environments, you can now enable enhanced VM interaction (Enhanced Session Mode) for Windows Server 2012 R2 and Windows 8.1 guests. It offers audio, copying of text, images, files and folders both in and out of VMs, and USB redirection.

Automatically Activating VMs

The Standard edition of Windows Server includes the rights to run two Windows Server VMs, and Windows Server Datacenter allows unlimited Windows Server VMs per host. But even on Datacenter hosts, you've had to enter product keys and activate each VM individually. In Hyper-V 2012 R2 Datacenter, your Windows Server 2012 R2 VMs (and only those) will be activated automatically, regardless of the underlying type of license on the host.

Live Migration at Warp Speed

When you're moving VMs from one host to another, you need to copy the memory state while changes are tracked. This is a network-intensive operation, especially for large VMs.
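
The copy-while-tracking-changes loop is usually called iterative pre-copy. This Python simulation is a simplified model, not Hyper-V's implementation: `stop_mb` and `max_rounds` are hypothetical parameters, and a constant dirty rate stands in for real dirty-page tracking. It shows why network speed matters: on a faster link each round is shorter, so fewer pages get dirtied and the loop converges, whereas a VM that dirties memory faster than the link can carry it never converges.

```python
def precopy_rounds(memory_mb, dirty_mb_per_s, link_mb_per_s,
                   stop_mb=64, max_rounds=10):
    """Simulate iterative pre-copy live migration.

    Round 1 copies all memory while the VM keeps running; each later round
    re-copies the pages dirtied during the previous round. When the remainder
    drops to stop_mb (or max_rounds is reached), the VM would be paused briefly
    and the remainder copied. Returns the (MB copied, seconds) per round and
    the MB left for that final pause.
    """
    to_copy = memory_mb
    rounds = []
    for _ in range(max_rounds):
        seconds = to_copy / link_mb_per_s
        rounds.append((to_copy, seconds))
        # Pages dirtied while this round was in flight; can't exceed total memory.
        to_copy = min(memory_mb, dirty_mb_per_s * seconds)
        if to_copy <= stop_mb:
            break
    return rounds, to_copy
```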

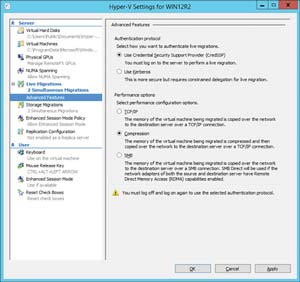

There are two options to speed up the process in Hyper-V 2012 R2. The first is live migration compression, which trades some CPU overhead on the source and destination hosts for less network traffic (see Figure 3). Hyper-V monitors your CPU load and will only use compression when it won't compromise overall host performance.

Figure 3. Hyper-V in Windows Server 2012 R2 offers a lot more flexibility in managing live migrations.
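
The compression trade-off can be illustrated with Python's standard `zlib` module. zlib is only a stand-in, since the compression algorithm Hyper-V uses isn't covered here, and the 70 percent CPU threshold is an arbitrary assumption. Memory pages that are mostly zeros or repeated data compress very well, which is where the network savings come from.

```python
import zlib

PAGE = 4096  # bytes per memory page

def bytes_on_wire(pages, cpu_load, cpu_threshold=0.7):
    """Return (bytes sent, whether compression was used).

    Compress only when the host has spare CPU; cpu_threshold is an assumed
    cut-off, and zlib stands in for whatever algorithm Hyper-V actually uses.
    """
    raw = b"".join(pages)
    if cpu_load >= cpu_threshold:
        return len(raw), False               # host busy: send pages as-is
    return len(zlib.compress(raw, 1)), True  # level 1 favors speed over ratio
```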

If your hosts have Remote Direct Memory Access (RDMA) network adapters, you can use them for live migration traffic with excellent performance. In internal testing, the Hyper-V team used one, two, three and four 56Gbps RDMA adapters with SMB 3.0 Multichannel. Performance increased linearly up to three adapters, but the fourth provided no extra performance. This bottleneck was due to the bandwidth of the host's DDR3 RAM: the network could deliver more speed than the RAM itself could supply.
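
The plateau is easy to model: aggregate throughput is the smaller of the combined adapter bandwidth and what host memory can supply. In this Python sketch, `memory_limit_gbps` is a placeholder value chosen only so that a fourth adapter adds nothing, as in the testing described above; it is not a measured DDR3 figure.

```python
def effective_gbps(adapters: int, gbps_per_adapter: float, memory_limit_gbps: float) -> float:
    """Aggregate throughput grows linearly with RDMA adapters until it reaches
    the rate at which host memory can supply data; beyond that, extra NICs add nothing."""
    return min(adapters * gbps_per_adapter, memory_limit_gbps)

def migration_seconds(vm_memory_gb: float, gbps: float) -> float:
    """Lower bound for one full memory copy: GB -> gigabits, divided by link rate."""
    return vm_memory_gb * 8 / gbps

# memory_limit_gbps=170 is a placeholder, not a measured figure.
for n in range(1, 5):
    print(n, "adapter(s):", effective_gbps(n, 56, memory_limit_gbps=170), "Gbps")
```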

Microsoft recommends using compression if you have less than 10Gbps of bandwidth. If you have more than 10Gbps, RDMA transfers are more appropriate.
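
That guidance fits in a small decision function. The returned strings correspond to two of the live migration performance options in Hyper-V Settings (TCP/IP, Compression and SMB, where SMB is the option that can use RDMA). The function itself is hypothetical, and the fallback for a fast link without RDMA adapters is an assumption, since the recommendation above doesn't cover that case.

```python
def choose_performance_option(bandwidth_gbps: float, rdma_capable: bool) -> str:
    """Suggest a live migration performance option from the guidance above."""
    if bandwidth_gbps < 10:
        return "Compression"   # trade spare CPU for fewer bytes on a slower link
    if rdma_capable:
        return "SMB"           # SMB 3.0 over RDMA-capable adapters
    return "Compression"       # assumption: a fast link without RDMA keeps compression
```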

Better Linux VM Support

Hyper-V 2012 R2 offers full dynamic memory support for Linux VMs, the ability to resize VHDX files while the VM is running (just as for a Windows VM), support for online backup of running Linux VMs through the host's Volume Shadow Copy Service (VSS) and a new video driver for an enhanced GUI experience. The backup support is particularly interesting because many environments have relied on a simple script that copies essential files to another drive on a schedule. Now any VSS-capable backup software can create full image-based backups of running Linux VMs.

Other Improvements

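You can resize the VHDX files of running VMs, both increasing and decreasing their size (though you can't shrink below the space the existing data occupies). Online resizing requires the VHDX to be attached to a virtual SCSI controller. Because Generation 2 VMs boot from virtual SCSI, you can resize both the OS VHDX and data drives; Generation 1 VMs boot from IDE, so you can resize only SCSI-attached data drives.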
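
These resize rules can be captured in a small check that encodes the SCSI requirement and the floor set by existing data. This Python sketch is hypothetical: the `AttachedVhdx` class and its `min_size_gb` field (the smallest size the disk's existing data allows) are assumptions for illustration, not a Hyper-V API.

```python
from dataclasses import dataclass

@dataclass
class AttachedVhdx:
    controller: str       # "IDE" or "SCSI"
    size_gb: int
    min_size_gb: int      # smallest size the disk's existing data allows (assumed known)

def can_resize_online(disk: AttachedVhdx, new_size_gb: int) -> bool:
    """Online resize of a running VM's VHDX: SCSI-attached disks only, and a
    shrink can't cut into existing data."""
    if disk.controller != "SCSI":
        return False      # e.g. a Generation 1 VM's IDE boot disk
    return new_size_gb >= disk.min_size_gb

# Generation 2 boot disk (SCSI) vs. Generation 1 boot disk (IDE)
gen2_os = AttachedVhdx("SCSI", size_gb=127, min_size_gb=40)
gen1_os = AttachedVhdx("IDE", size_gb=127, min_size_gb=40)
```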

Dynamically expanding VHDX files can now grow up to 10 times faster than VHD files, so Microsoft now recommends dynamically expanding disks instead of fixed disks for production workloads. You can also clone a running VM, which is useful for troubleshooting when you need to find out why software in a production VM is misbehaving but don't want to disturb that VM.

There are some interesting improvements in Hyper-V in this coming release, particularly the shared VHDX feature and the storage QoS settings. There's also increased flexibility in Hyper-V Replica.

The thorough support for Linux VMs (particularly the backup story) is cross-pollination from Windows Azure and shows an understanding of the challenges many businesses face. The improvements in Hyper-V are, of course, only half the story. The management and monitoring that System Center 2012 R2 brings to the table will be the subject of a future article.