News

Feds Learn About Shifting to Software-Defined Networking

Move to SDN said inevitable in new world of mobility, social, Big Data and cloud computing.

Software-defined networking (SDN) is an emerging and evolving technology, but "it's the place where we'll all end up at," speaker Anil Karmel told an audience of federal agency IT staffers in a recent webinar.

While such a shift to SDN might well be inevitable, it entails many questions and concerns, with security being foremost in an event geared toward government IT. Karmel, himself a former federal agency deputy CTO, told the audience that security and other SDN considerations in the new age of mobile computing are closely related to context-aware IT.

He spoke about SDN and related issues last week in a webinar titled "Evolution or Revolution? The Software-Defined Shift in Federal IT," along with Steven Hagler of Hewlett-Packard Co. The event was produced by MeriTalk and sponsored by UNICOM Government IT and HP.

As systems and applications evolve and organizational investments move outside the datacenter along with mobile workers, context awareness becomes more important, said Karmel, who was deputy CTO at the National Nuclear Security Administration (NNSA) before recently leaving to form cloud security company C2 Labs Inc.

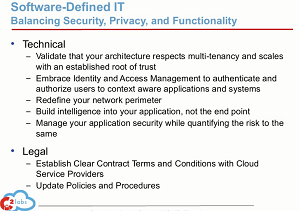

The software-defined balance act

(source: MeriTalk webinar)

"As you extend those investments outside your datacenter, you need to redefine your network perimeter," Karmel said. "Because we're very comfortable as IT practitioners with having your boundaries around the data that you have in your datacenter. But as soon as that data leaves your datacenter -- how you define the network perimeter and the security controls that are in these external environments -- you have to redefine that network perimeter and the security controls that are in it, so as your workloads move, your security moves with it."

Part of that entails building intelligence into applications as opposed to endpoints or devices that are consuming data, Karmel said. Intelligence that lets applications be more aware of the type of data they're handling and the underlying infrastructure will enable context-aware decisions on where to store the data and where security should be employed.

To achieve this, it's paramount to design apps with users in mind and with security considerations built in from the beginning, rather than have them "bolted on" later, Karmel said. "Understanding the user, understanding what data they're trying to access, where they're trying to access that information, with security that's baked in to the beginning, is absolutely key," he said.

Along with security, software-defined computing offers many other benefits, including simple energy savings, said Hagler, who was on hand to discuss the HP Americas Moonshot server, which the company claims is the first software-defined server, in service for about a year. Issues such as power consumption become more problematic for companies tied to doing things the old way in the new age of mobility, social engagement, Big Data, cloud computing and more.

IT shops need more servers to handle more users, so power consumption becomes more of an issue, said Hagler, who noted that cloud computing, when compared to entire nations, is the fifth-largest consumer of electricity on the planet. Focusing on workload-specific infrastructure rather than more general-purpose systems and bringing functionality from clients to the servers results in equipment acquisition cost savings, lower power consumption and less space required for infrastructure.

The newness of SDN and related technologies was apparent in the following questions from the audience, who mostly asked about the basics of the much-hyped but still little-used computing model:

"How does an SDN or software-defined environment differ from a virtualized environment?"

"Good question," Hagler said, "and one we run into a lot." As compute capacity increased beyond what was needed -- following Moore's Law -- it became apparent that a lot of server resources weren't being heavily utilized, and virtualization allowed IT to "carve up" that broad computing resource into smaller segments so organizations could do more with less, he said. Now, with SDN, specifically as it relates to servers, "we now have the ability to right-size it right from the get-go," he said, so smaller servers purposely built for specific use cases can be used from the beginning.

Hagler sees virtualization and SDN as coexisting rather than competing, with each having its place, letting IT choose the right tool to do the right job.

Karmel answered the question from a three-point perspective: compute, storage and networking.

Hagler's explanation of the benefits of using Moonshot concerned the compute side of things, Karmel said, letting organizations deploy and scale that compute based on needs. Networking and storage are also evolving to support applications, he said. "Because at the end of the day, it's all about the users and the applications. That really is what will drive agility, flexibility and transparency in your environment and allow you to realize the benefits of a software-defined enterprise."

"How do you think a software-defined approach is going to foster more innovation in government?"

"We have these existing investments in IT, and the users of services that are delivered by government agencies have these incredible thoughts about what they want to do with information," Karmel answered. "But, unfortunately, IT has been bound by the existing investments that they have and the inability to evolve those investments to capitalize and deliver new applications and systems and resources that our users need -- whether they're public servants, whether they're citizens. So having an environment that can quickly adapt and flex and scale to meet the needs of our constituents is key to drive agility, to drive flexibility, to drive transparency from a cost and service-delivery point of view ... for you to understand that around context-aware IT is how you get there.

"One of the foundations of that is understanding and determining the value of your information so that you can understand how to protect and deploy it. So once you have that value -- that information -- and you understand how to protect and deploy it, you can then set the foundation for context-aware IT to deliver the applications of tomorrow."

Hagler answered the question in terms of opportunities resulting from freed-up resources. "We routinely see customers in the enterprise or in the federal space ... bound by keeping the lights running on what we've got," he said. "So 70 to 80 percent of the environment is used to manage or try to keep what I've got going, and the innovation cycle gets delayed because I just don't have the time, I don't have the energy, I don't have the resources for it.

"So being able to get more efficiency, being able to do the right thing, being able to effectively have the right tool for the right job -- again, I can now free up those resources so not only are we as manufacturers bringing innovation to the marketplace, but the federal agency customers or enterprise accounts can now spend more time doing what's right for the business versus just trying to keep their own legacy equipment running."

"Isn't SDN about programming the network components control plane from the data plane?"

"Software-defined networking is taking advantage of the technology that we've seen in the server environment before," Hagler replied. "If you think about it, we all had proprietary server capabilities in the past and you really couldn't mix and match very often. In the broad sense of industry standard servers that we have today, what we've been able to do is create that standard hardware platform by which we can do these different capabilities -- kind of what I'm trying to evolve from now.

"In the software-defined network, what I see is now we get that basic building block upon which we can start doing lots of different workloads, lots of different capabilities. So I'm not bound by an appliance-type of device. I'm bound now by ... let me get some infrastructure that's got some standards to it so now if I need a router one day or I need a switch another day, I have the ability to effect it, so that software-defined nature kind of allows me to mix and match and take advantage of the flexibilities that didn't exist before."

Karmel again answered in terms of compute, storage and networking. "The power that software-defined networking brings is the ability to dynamically build Layer 2 networks across this breadth of platforms, connecting, for example, compute and storage in new innovative ways," he said. "Delivering new capabilities to constituents that you would typically have to deploy a router or a physical switch or an appliance to an endpoint to get that network up and running.

"In a software-defined network, you are in essence defining the control plane or defining the network at Layer 2 across Layer 3 where you're able to connect these disparate environments in a secure enterprise way ... connect compute and storage in any way and dynamically deliver applications very quickly and effectively. So that really is the power that SDN brings to the table ... but it really is a holistic marriage between compute, network and storage to deliver the applications of tomorrow."
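The control-plane idea the speakers describe can be illustrated with a minimal Python sketch. It is not taken from the webinar and does not model any vendor's product: the `Switch` and `Controller` classes, the `connect` method and the names `s1`, `s2` and `storage-01` are all hypothetical, chosen only to show forwarding decisions being made centrally in software and pushed to simple forwarding devices.

```python
class Switch:
    """Data plane: forwards by table lookup only and holds no path logic."""

    def __init__(self, name):
        self.name = name
        self.flow_table = {}  # destination name -> output port

    def install_flow(self, dst, out_port):
        self.flow_table[dst] = out_port

    def forward(self, dst):
        # Returns the output port, or None when no rule has been installed.
        return self.flow_table.get(dst)


class Controller:
    """Control plane: decides paths centrally and pushes rules to switches."""

    def __init__(self):
        self.switches = {}

    def register(self, switch):
        self.switches[switch.name] = switch

    def connect(self, dst, path):
        # path is a list of (switch_name, out_port) hops leading toward dst.
        for switch_name, out_port in path:
            self.switches[switch_name].install_flow(dst, out_port)


# Hypothetical topology: two switches between a compute node and storage.
controller = Controller()
s1, s2 = Switch("s1"), Switch("s2")
controller.register(s1)
controller.register(s2)
controller.connect("storage-01", [("s1", 2), ("s2", 1)])
```

In this sketch, changing how compute reaches storage means calling `connect` again with a different path rather than reconfiguring each device by hand, which is the flexibility both speakers point to.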

"If the servers are now purposely built, what happens if the needs change for those servers?"

Both Hagler and Karmel said traditional general-purpose servers and the new smaller, more targeted servers will coexist, as the new technology won't replace the existing technology but rather will be another tool to be used when needed.

Both also agreed there's a trade-off when using the purpose-built servers. "I'm trading flexibility for efficiency," Hagler said, admitting that the more targeted servers won't allow him to run Exchange one day and SAP HANA the next. Karmel said the trade-off also concerned performance and scalability versus efficiency, and the decision about which type of server to use was a "deployment choice" that needs to be made depending on requirements and the apps being used.

The federal agency IT audience circled back to issue No. 1, security considerations, with moderator Caroline Boyd admitting that "government agencies have many of them."

"What specific security considerations should we keep in mind when looking at SDN?"

Karmel said the question relates to redefining context in view of four key paradigms: understanding who the user is; what data they are trying to access; where the user is; and where the data is and how they get there.

"When you have a very user-centric data/application-centric approach, in delivering context-aware IT, you can then place the appropriate controls around the information so that when the workload moves or scales, the security envelope moves with it," Karmel said. "So you're not going to apply the same level of security controls to data that's not inherently sensitive -- it just doesn't make any sense."

You wouldn't use a sledgehammer to drive a tiny nail, Karmel said, so security controls need to be managed holistically, being employed according to the value of the information being protected.
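As a rough illustration of matching controls to context, the Python sketch below selects security controls from the four questions Karmel lists. Everything in it is an assumption made for illustration: the sensitivity tiers, the control names, the `AccessContext` fields and the rule that off-premises access to non-public data adds multi-factor authentication are hypothetical, not drawn from the webinar or from any federal standard.

```python
from dataclasses import dataclass

# Hypothetical sensitivity tiers and control names, for illustration only.
CONTROLS_BY_SENSITIVITY = {
    "public": ["tls"],
    "internal": ["tls", "authentication"],
    "sensitive": ["tls", "authentication", "encryption_at_rest", "audit_logging"],
}


@dataclass(frozen=True)
class AccessContext:
    user_role: str         # who the user is
    data_sensitivity: str  # what data they are trying to access
    user_location: str     # where the user is: "datacenter" or "external"
    data_location: str     # where the data is: "datacenter" or "external"


def required_controls(ctx):
    """Return the controls to apply, scaled to the value of the data."""
    # Assumed rule: anonymous users may only reach public data.
    if ctx.user_role == "anonymous" and ctx.data_sensitivity != "public":
        raise PermissionError("anonymous users may only access public data")

    controls = list(CONTROLS_BY_SENSITIVITY[ctx.data_sensitivity])

    # Assumed rule: once the user or the data is outside the datacenter,
    # non-public data gets an extra control because the old perimeter
    # no longer covers it.
    off_premises = "external" in (ctx.user_location, ctx.data_location)
    if off_premises and ctx.data_sensitivity != "public":
        controls.append("multi_factor_auth")
    return controls
```

Because the controls are computed from the context rather than tied to a fixed network location, the same function yields a lighter set for public data and a heavier set for sensitive data accessed from outside the datacenter.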

"What is the linkage between SDN and [software-defined storage] or [software-defined anything]? What is the relationship, if any?"

Karmel again spoke about the importance of using SDN in conjunction with software-defined storage. "Software-defined networking is that control plane for a network ... to dynamically instrument and reconfigure networks or deploy networks of varying sizes and shapes across multiple environments," he said.

"Software-defined storage is dynamically reconfiguring your storage environment, or that storage tier, based on the needs of your application, data and organization. Now, those two are synergistic. You need to have that software-defined storage plane tied to that software-defined network plane to deliver a software-defined future or software-defined enterprise. You could use them independently, but the value is delivered when they're deployed holistically. We do believe in the value of having that control plane to manage an SDN network or a software-defined storage network or software-defined servers."

"What one step should agencies take as they begin to look at a software-defined environment?"

Hagler advised that agencies think about the problems they're trying to solve and not try to solve them all with the same technology, as many more tools are now available. He said a major issue faced by customers is how to go from a general-purpose approach to a workload-specific approach, and they should challenge their vendors to help in that transition. "Think a little bit more granular about what we're trying to solve," he concluded.

As for the inevitable shift to SDN, Karmel explained more. "The space is definitely emerging and evolving, but it is the place that we will all end up at," he said. "SDN is that new paradigm; it is that new paradigm shift."