In-Depth

Windows Server 2016: Virtualization Unleashed

Microsoft has made a host of improvements to its core virtualization stack in the latest version of its flagship server.

The next version of Windows Server is finally here! It's been a long time coming -- the first preview was back in October 2014.

This long gestation brings many new and improved features across most areas of Windows Server -- this article will focus on what's coming for Hyper-V and related technologies.

Storage Quality of Service (QoS)

Storage is arguably the biggest challenge when designing virtualization fabrics. It's no coincidence that public clouds such as Microsoft Azure and Amazon Web Services (AWS) put a big emphasis on designing your virtual machines (VMs) with IOPS performance in mind. (Of course, if you're implementing a private cloud virtualization infrastructure, the same considerations apply.)

Windows Server 2012 R2 allows you to specify a minimum IOPS setting, a maximum IOPS setting or both on a per-virtual-disk basis. These IOPS are normalized at 8KB (you can change the amount), so a 12KB storage operation would be counted as two IOPS. This works well as long as the underlying storage (DAS, SAN, SMB 3.0 NAS or Scale Out File Server [SOFS] with Storage Spaces) has enough IOPS performance to satisfy all the hosts. But because there's no central storage policy controller, the individual Storage QoS limits won't be honored when resources become scarce.
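A minimal Python sketch of the normalization arithmetic follows. It assumes the default 8KB unit and that a partial unit rounds up to a whole IOP, which is consistent with the 12KB example above; the function name is hypothetical, and it models only the accounting, not a Windows API.

```python
import math

# Default normalization unit described above (configurable in Windows).
DEFAULT_NORMALIZATION_BYTES = 8 * 1024


def normalized_iops(io_size_bytes: int,
                    unit_bytes: int = DEFAULT_NORMALIZATION_BYTES) -> int:
    """Return how many normalized IOPS one storage operation counts as.

    Assumption: partial units round up, so any I/O up to 8KB counts as one,
    an I/O larger than 8KB and up to 16KB counts as two, and so on.
    """
    if io_size_bytes <= 0 or unit_bytes <= 0:
        raise ValueError("sizes must be positive")
    return math.ceil(io_size_bytes / unit_bytes)


if __name__ == "__main__":
    for size_kb in (4, 8, 12, 64):
        # Prints 1, 1, 2 and 8 normalized IOPS respectively.
        print(f"{size_kb}KB operation -> {normalized_iops(size_kb * 1024)} IOPS")
```

Rounding up means small I/Os are never "free", while large sequential I/Os are charged in proportion to their size.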

That centralized storage policy controller is now available in Windows Server 2016 SOFS. Remember that SOFS nodes can be deployed in front of a SAN or as part of a software-based storage fabric using Storage Spaces. You can define two types of policies. In one, all the VMs to which the policy applies share a single pool of IOPS. In the other, each VM within the policy gets the same minimum and maximum limits.
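To illustrate the difference between the two policy types, here's a hypothetical Python model that applies only maximums. The class and function names are invented, and the proportional scaling used for the shared pool is a simplification chosen for clarity; it isn't a description of how the SOFS policy manager actually schedules I/O.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class QosPolicy:
    """Hypothetical model of a Storage QoS policy (maximums only)."""
    maximum_iops: int
    shared_pool: bool  # True: VMs share one pool; False: each VM gets the limit


def apply_policy(policy: QosPolicy, demand: Dict[str, int]) -> Dict[str, float]:
    """Return the IOPS each VM is allowed, given what it is asking for.

    Shared pool: the *sum* across all VMs is capped; if total demand exceeds
    the pool, every VM is scaled down proportionally (an illustrative choice).
    Per-VM: each VM is capped independently at the same maximum.
    """
    if policy.shared_pool:
        total = sum(demand.values())
        if total <= policy.maximum_iops:
            return {vm: float(iops) for vm, iops in demand.items()}
        scale = policy.maximum_iops / total
        return {vm: iops * scale for vm, iops in demand.items()}
    return {vm: float(min(iops, policy.maximum_iops)) for vm, iops in demand.items()}


if __name__ == "__main__":
    demand = {"vm1": 600, "vm2": 300, "vm3": 100}
    # Shared pool of 500: the three VMs together get 500 IOPS.
    print(apply_policy(QosPolicy(500, shared_pool=True), demand))
    # Per-VM limit of 500: only vm1 is throttled.
    print(apply_policy(QosPolicy(500, shared_pool=False), demand))
```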

This new storage QoS doesn't just apply limits to eliminate the "noisy neighbor" problem or guarantee minimum IOPS for VMs; it also actively monitors storage traffic, providing good insight into the IOPS requirements of different VMs and workloads. These policies are set using PowerShell in Windows Server 2016, but System Center Virtual Machine Manager (VMM) 2016 provides a GUI to create and apply them.

Dipping a Toe into the Hyper-Convergence Pool

Windows Server 2016 is also taking a shot at the hyper-converged space with the new Storage Spaces Direct (S2D), which uses internal storage in nodes to create Storage Spaces volumes. Storage can be SAS, SATA, SSD or NVMe, but note that SATA drives need to be connected to a SAS interface.

A direct competitor to VMware's VSAN, S2D requires a minimum of three nodes and can provide two- or three-way mirroring to protect the data (only two-way mirroring in a three-node cluster). S2D can be used either in a hyper-converged deployment, where each storage node is also a Hyper-V node, or in a disaggregated one, where the storage nodes provide storage services to separate Hyper-V nodes. The latter lets you scale storage and compute independently, offering flexibility at the cost of more hardware.
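Mirroring trades capacity for resiliency, because every write is stored on more than one node. This back-of-the-envelope Python sketch estimates usable capacity by dividing raw capacity by the number of copies; it's a simplification that ignores reserve capacity, metadata and any other S2D overhead, and the function name is hypothetical.

```python
def usable_capacity_tb(nodes: int, raw_tb_per_node: float, copies: int) -> float:
    """Estimate usable capacity of a mirrored S2D pool.

    Simplification: usable = total raw capacity / number of data copies.
    Reserve capacity and metadata overhead are deliberately ignored.
    """
    if nodes <= 0 or raw_tb_per_node <= 0:
        raise ValueError("nodes and capacity must be positive")
    if copies not in (2, 3):
        raise ValueError("S2D mirroring keeps two or three copies of the data")
    return nodes * raw_tb_per_node / copies


if __name__ == "__main__":
    # Four nodes with 10TB of raw capacity each (40TB raw in total).
    for copies in (2, 3):
        print(f"{copies}-way mirror: {usable_capacity_tb(4, 10.0, copies):.1f}TB usable")
```

So a two-way mirror yields roughly 50 percent of raw capacity and a three-way mirror roughly 33 percent, in exchange for tolerating more simultaneous failures.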

If you're looking at a stretched Hyper-V cluster with nodes in two geographically separate locations, Windows Server 2016 now comes with Storage Replica (SR), letting you replicate any type of storage from one location to another, either synchronously or asynchronously.
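The practical difference between the two modes is when the application's write is acknowledged. This toy Python model is only an illustration of those acknowledgment semantics; the class is hypothetical and says nothing about how Storage Replica is actually implemented.

```python
from collections import deque
from typing import Deque, Dict, Tuple


class ReplicatedVolume:
    """Toy model of synchronous vs. asynchronous replication.

    Illustrative only: it mimics when a write is acknowledged relative to the
    remote copy, not how Storage Replica works internally.
    """

    def __init__(self, synchronous: bool) -> None:
        self.synchronous = synchronous
        self.source: Dict[int, bytes] = {}       # blocks at the primary site
        self.destination: Dict[int, bytes] = {}  # blocks at the secondary site
        self._pending: Deque[Tuple[int, bytes]] = deque()

    def write(self, block: int, data: bytes) -> None:
        """Write a block; returning from this call models the acknowledgment."""
        self.source[block] = data
        if self.synchronous:
            # Synchronous: acknowledge only after the remote copy is written,
            # so the sites never diverge, at the cost of extra write latency.
            self.destination[block] = data
        else:
            # Asynchronous: acknowledge at once and ship the change later;
            # anything still queued is lost if the primary site fails.
            self._pending.append((block, data))

    def flush(self) -> None:
        """Ship queued writes to the secondary site (asynchronous mode)."""
        while self._pending:
            block, data = self._pending.popleft()
            self.destination[block] = data

    def lag(self) -> int:
        """Number of writes not yet present at the secondary site."""
        return len(self._pending)


if __name__ == "__main__":
    for mode in (True, False):
        volume = ReplicatedVolume(synchronous=mode)
        volume.write(0, b"order-1")
        print("sync" if mode else "async", "lag after write:", volume.lag())
```

Synchronous replication suits sites close enough that the extra round trip is tolerable; asynchronous replication accepts some potential data loss in exchange for distance.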

Software-Defined Networking (SDN)

SDN was introduced in Windows Server 2012, but its underlying encapsulation standard, NVGRE, never really took hold in the industry. This is why the new SDN stack in Windows Server 2016 builds on VXLAN. If you do a new deployment of virtual networks in Windows Server 2016, VXLAN will be the default, but you can still select NVGRE.
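For a sense of what VXLAN adds to each packet, this Python sketch builds and parses the 8-byte VXLAN header defined in RFC 7348: a flags byte with the I bit set, reserved bits and a 24-bit VXLAN Network Identifier (VNI), carried over UDP port 4789. It shows the on-the-wire format only, not any Windows SDN API; the function names are illustrative. NVGRE (RFC 7637), by contrast, carries a 24-bit virtual subnet ID inside a GRE header.

```python
import struct

VXLAN_UDP_PORT = 4789        # IANA-assigned destination port (RFC 7348)
VXLAN_FLAG_VNI_VALID = 0x08  # the "I" flag: the VNI field is valid


def vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header for a given 24-bit VNI."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI is a 24-bit value")
    # Word 1: flags (8 bits) followed by 24 reserved bits.
    # Word 2: VNI (24 bits) followed by 8 reserved bits.
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)


def parse_vni(header: bytes) -> int:
    """Extract the VNI from a VXLAN header, checking the I flag."""
    flags_word, vni_word = struct.unpack("!II", header[:8])
    if not (flags_word >> 24) & VXLAN_FLAG_VNI_VALID:
        raise ValueError("I flag not set")
    return vni_word >> 8


if __name__ == "__main__":
    hdr = vxlan_header(5001)
    print(hdr.hex())       # 0800000000138900
    print(parse_vni(hdr))  # 5001
```

The 24-bit VNI is what lets a fabric carry roughly 16 million isolated virtual networks over a shared physical network.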

If you've already implemented SDN in Hyper-V in 2012 or 2012 R2, be aware that picking NVGRE won't give you interoperability between Windows Server 2012 and Windows Server 2016 virtual networks. The reason is where each version stores the information about which VM runs on which host, and in which virtual network: in 2012 this was held in WMI, while in 2016 Microsoft has adopted the OVSDB protocol, which is used by other virtual network providers.
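Conceptually, the information in question is a lookup table: given a virtual network and a VM's address inside it (the customer address), which physical host (the provider address) is the VM running on? The Python class below is a hypothetical in-memory version of that table, just to show why every host must read it from the same place; it models neither the WMI nor the OVSDB representation, and the addresses are made up.

```python
from typing import Dict, Tuple


class MappingTable:
    """Hypothetical lookup table for network virtualization.

    Key:   (virtual network ID, customer address of the VM)
    Value: provider address of the host the VM currently runs on
    """

    def __init__(self) -> None:
        self._entries: Dict[Tuple[int, str], str] = {}

    def publish(self, vnet_id: int, customer_ip: str, host_ip: str) -> None:
        # Called when a VM starts or is Live Migrated to another host.
        self._entries[(vnet_id, customer_ip)] = host_ip

    def resolve(self, vnet_id: int, customer_ip: str) -> str:
        # The sending host asks where to tunnel a packet for this VM.
        try:
            return self._entries[(vnet_id, customer_ip)]
        except KeyError:
            raise LookupError(
                f"no mapping for {customer_ip} in virtual network {vnet_id}"
            ) from None


if __name__ == "__main__":
    table = MappingTable()
    # The same customer address can exist in two isolated virtual networks.
    table.publish(5001, "10.0.0.5", "192.168.1.11")
    table.publish(5002, "10.0.0.5", "192.168.1.12")
    print(table.resolve(5001, "10.0.0.5"))  # 192.168.1.11
    # After a Live Migration, the VM's new location is published.
    table.publish(5001, "10.0.0.5", "192.168.1.13")
    print(table.resolve(5001, "10.0.0.5"))  # 192.168.1.13
```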

Eventually, this should lead to virtual network connectivity between VMs running on VMware and Hyper-V hosts. Note that the NVGRE stack sees no new development in Windows Server 2016; instead, the new stack gets a whole host of improvements based on technologies born in Azure, such as the Network Controller, Software Load Balancer (SLB), Network Function Virtualization (NFV) and RAS Gateway for SDN.

RDMA networking has also gotten an overhaul. It's been in Windows Server since 2012 (under the name of SMB Direct), but only for communicating with back-end storage (to match Fibre Channel SAN performance). Windows Server 2012 R2 added the ability to do Live Migration over RDMA. But the two uses couldn't be combined on the same adapters, so you needed two separate RDMA fabrics, one for storage access and one for Live Migration (and other traffic). Windows Server 2016 introduces Switch Embedded Teaming (SET), which allows the same physical NICs to carry both types of traffic.

Nano, Nano

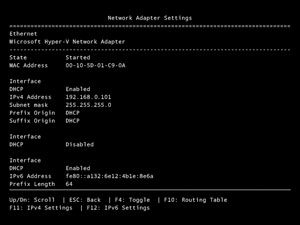

The biggest architectural change ever in Windows Server is Nano Server (Figure 1). As an alternative deployment option to Server Core and Server with a GUI, Nano Server is a GUI-less, minimal disk footprint server that lets you add only the functionality you need. It supports three roles today: Hyper-V host, SOFS storage host and an application platform for modern cloud applications. It's only available through Volume Licensing channels, which makes sense because the appeal is for large enterprises and cloud providers with large farms of servers.

Figure 1. The small-footprint Nano Server.

The Upgrade Story

The upgrade story has been gradually improving for Hyper-V clusters, although it can't yet match the ease with which VMware clusters can be upgraded. Windows Server 2012 allowed Live Migration of VMs to a new Windows Server 2012 R2 cluster, resulting in zero downtime for application workloads. The downside is that you need extra hardware to create the new Windows Server 2012 R2 cluster.

The story in Windows Server 2016 is better (for all clusters, not just Hyper-V): you can now add Windows Server 2016 nodes to a Windows Server 2012 R2 cluster. As with Active Directory, the cluster has a functional level, so the basic process is to drain VMs from one node, evict the node from the Windows Server 2012 R2 cluster, format it, install Windows Server 2016 and Hyper-V and then join the node back into the cluster. It operates as a Windows Server 2012 R2 node, and VMs can be Live Migrated back to it. Rinse and repeat for each host until all hosts are running Windows Server 2016. Finally, run Update-ClusterFunctionalLevel, which unlocks the new Windows Server 2016 features; from then on, Windows Server 2012 R2 hosts can no longer be added to the cluster.
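The rolling-upgrade rules above can be modeled as a small state machine. This Python sketch is hypothetical: the version labels are plain strings rather than the cluster's real functional-level values, and draining VMs and reinstalling the OS are reduced to comments. It captures the two rules the text describes: the level can be raised only once every node runs Windows Server 2016, and after that, Windows Server 2012 R2 nodes can't join.

```python
from dataclasses import dataclass, field
from typing import Dict

# Illustrative labels only; not the cluster's real functional-level numbers.
WS2012R2 = "2012 R2"
WS2016 = "2016"


@dataclass
class Cluster:
    functional_level: str = WS2012R2
    nodes: Dict[str, str] = field(default_factory=dict)  # node name -> OS version

    def join(self, name: str, os_version: str) -> None:
        if self.functional_level == WS2016 and os_version != WS2016:
            raise RuntimeError("2012 R2 nodes can't join once the level is raised")
        # A 2016 node joining a 2012 R2-level cluster runs in 2012 R2 mode.
        self.nodes[name] = os_version

    def evict(self, name: str) -> None:
        del self.nodes[name]

    def update_functional_level(self) -> None:
        """Analogous to running Update-ClusterFunctionalLevel."""
        if any(version != WS2016 for version in self.nodes.values()):
            raise RuntimeError("every node must run Windows Server 2016 first")
        self.functional_level = WS2016


def rolling_upgrade(cluster: Cluster) -> None:
    for name in list(cluster.nodes):
        # 1. Drain VMs by Live Migrating them to other nodes (not modeled).
        # 2. Evict the node, format it and install Windows Server 2016.
        cluster.evict(name)
        # 3. Join it back; VMs can be Live Migrated onto it again.
        cluster.join(name, WS2016)
    # 4. Only now can the functional level be raised.
    cluster.update_functional_level()


if __name__ == "__main__":
    cluster = Cluster(nodes={"host1": WS2012R2, "host2": WS2012R2, "host3": WS2012R2})
    rolling_upgrade(cluster)
    print(cluster.functional_level)  # 2016
```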

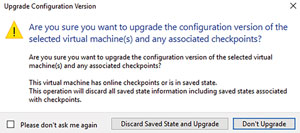

There are also new configuration file formats for VMs: .vmcx files store the configuration, and .vmrs files hold the running state. A mixed-mode cluster will use the old file formats until the cluster functional level has been upgraded. You can then upgrade VMs to the new format during a maintenance window; this requires shutting down each VM and either running Update-VMVersion or right-clicking the VM in Hyper-V Manager (Figure 2). Once upgraded, a VM will only run on Windows Server 2016 hosts.
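The constraints on VM upgrades can be written down as a few explicit checks. In this hypothetical Python sketch, `upgrade_configuration` stands in for the PowerShell cmdlet or the Hyper-V Manager action, and the `new_format` flag is an invented stand-in for the configuration version; the checks encode only what's stated above.

```python
from dataclasses import dataclass


@dataclass
class VirtualMachine:
    name: str
    running: bool = False
    new_format: bool = False  # True once the VM uses .vmcx/.vmrs files


def upgrade_configuration(vm: VirtualMachine, cluster_level_is_2016: bool) -> None:
    """Hypothetical stand-in for upgrading a VM's configuration version."""
    if not cluster_level_is_2016:
        raise RuntimeError("raise the cluster functional level first")
    if vm.running:
        raise RuntimeError(f"shut down {vm.name} before upgrading it")
    vm.new_format = True  # this model offers no way back to the old format


def can_run_on(vm: VirtualMachine, host_os: str) -> bool:
    """Old-format VMs run on either host version; upgraded VMs only on 2016."""
    return host_os == "2016" or not vm.new_format


if __name__ == "__main__":
    vm = VirtualMachine("sql01")
    upgrade_configuration(vm, cluster_level_is_2016=True)
    print(can_run_on(vm, "2012 R2"), can_run_on(vm, "2016"))  # False True
```

This is why it pays to leave VM upgrades until every host has been moved to Windows Server 2016.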

Figure 2. Upgrading virtual machines to the newer format.

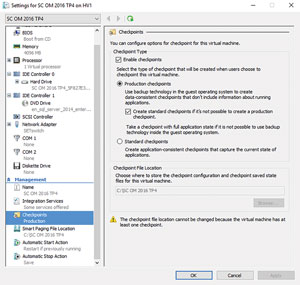

Backup and Checkpoints

Until now, Hyper-V VM backups have relied on the Volume Shadow Copy Service (VSS), just like other backup tools in Windows. Windows Server 2016 removes this reliance, making VM backup a feature of Hyper-V itself, and its Resilient Change Tracking (RCT) gives third-party backup vendors a leg up in supporting Hyper-V.
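The value of change tracking is that a backup tool can ask which blocks have changed since the last backup instead of reading the whole virtual disk. This toy Python model illustrates that idea; it's a hypothetical sketch of changed-block tracking in general, not RCT's actual file format or the Hyper-V backup API.

```python
from typing import Dict, List, Set


class TrackedDisk:
    """Toy virtual disk that records which blocks changed since the last backup."""

    def __init__(self, block_count: int) -> None:
        self.blocks: List[bytes] = [b"\0"] * block_count
        self._changed: Set[int] = set()

    def write(self, index: int, data: bytes) -> None:
        self.blocks[index] = data
        self._changed.add(index)  # remember the block, not every individual write

    def full_backup(self) -> Dict[int, bytes]:
        """Copy every block and start a new change-tracking baseline."""
        self._changed.clear()
        return dict(enumerate(self.blocks))

    def incremental_backup(self) -> Dict[int, bytes]:
        """Copy only the blocks written since the previous backup."""
        delta = {i: self.blocks[i] for i in sorted(self._changed)}
        self._changed.clear()
        return delta


if __name__ == "__main__":
    disk = TrackedDisk(block_count=1024)
    baseline = disk.full_backup()
    disk.write(7, b"new data")
    disk.write(512, b"more data")
    print(len(baseline), sorted(disk.incremental_backup()))  # 1024 [7, 512]
```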

This new platform also unlocks a new type of VM snapshot called Production Checkpoints. Unlike checkpoints in Windows Server 2012 R2 and earlier, these use VSS inside the VM, which brings two improvements. First, the VM is aware that it's being backed up and potentially restored (a restored VM always boots, rather than resuming from a saved running state). Second, this eliminates the risk of corrupting distributed databases (Active Directory, Exchange DAGs and so on), making these checkpoints much safer (see Figure 3).

Figure 3. A new, safer virtual machine checkpoint snapshot.

Compute Resiliency

Clustering virtualization hosts together offers protection against both planned and unplanned downtime. For planned downtime, you simply Live Migrate VMs to other hosts so there's no application workload downtime; for unplanned downtime, it's a matter of restarting VMs on other hosts if a host fails.

There are times, however, when clustering can be detrimental to high availability. Take the example of a short network outage affecting one or more hosts. If it exceeds the heartbeat timeout value, the cluster will assume the nodes are down and restart all the VMs running on those hosts elsewhere, leading to potential data loss and reduced availability.

Similarly, in Windows Server 2012 R2, losing storage connectivity for longer than a minute will cause VMs to crash. In Windows Server 2016, a network disconnect places a host in isolation mode for up to four minutes (the period is configurable), and failover occurs only after that. A storage connectivity outage places VMs in a frozen state instead of crashing them; if storage connectivity is restored, they simply resume where they left off.
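The network-isolation behavior can be summarized as a decision based on outage length. This Python sketch is an approximation: it assumes the isolation window begins once the heartbeat timeout has been exceeded, uses the four-minute default from the text, and takes the heartbeat timeout as a parameter because its real value depends on the cluster's heartbeat settings. The storage freeze-and-resume behavior isn't modeled, and the names are hypothetical.

```python
from enum import Enum


class NodeState(Enum):
    HEALTHY = "healthy"
    ISOLATED = "isolated"        # VMs keep running while the cluster waits
    FAILED_OVER = "failed over"  # VMs are restarted on other hosts


DEFAULT_ISOLATION_SECONDS = 4 * 60  # "up to four minutes", configurable


def node_state_after_outage(outage_seconds: float,
                            heartbeat_timeout_seconds: float,
                            isolation_seconds: float = DEFAULT_ISOLATION_SECONDS) -> NodeState:
    """Classify a host after a network outage of the given length (simplified)."""
    if outage_seconds <= heartbeat_timeout_seconds:
        return NodeState.HEALTHY
    if outage_seconds <= heartbeat_timeout_seconds + isolation_seconds:
        return NodeState.ISOLATED
    return NodeState.FAILED_OVER


if __name__ == "__main__":
    # 10 seconds is an assumed heartbeat timeout, purely for illustration.
    for outage in (5, 60, 300):
        print(outage, node_state_after_outage(outage, heartbeat_timeout_seconds=10).value)
```

Passing `isolation_seconds=0` approximates the older behavior described above, where exceeding the heartbeat timeout triggers failover straight away.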

Balancing VMs across hosts in a Hyper-V cluster is crucial; in previous versions Microsoft provided this functionality as Dynamic Optimization in System Center 2012 R2 VMM. If you didn't have VMM, you were out of luck, but Windows Server 2016 provides this as inbox functionality.
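To show what automatic balancing involves, here's a greedy Python sketch: while the busiest host is above a threshold, it Live Migrates that host's smallest VM to the least-loaded host, as long as the move narrows the gap. The algorithm, threshold and load units (percent of one host's capacity) are illustrative assumptions; neither VMM's Dynamic Optimization nor the inbox Windows Server 2016 feature is claimed to work exactly this way.

```python
import copy
from typing import Dict, List, Tuple

# host name -> {VM name: load as a percentage of one host's capacity}
Placement = Dict[str, Dict[str, float]]
Move = Tuple[str, str, str]  # (VM, source host, destination host)


def host_load(placement: Placement, host: str) -> float:
    return sum(placement[host].values())


def balance(placement: Placement, threshold: float) -> Tuple[Placement, List[Move]]:
    """Greedy rebalancing sketch; returns the new placement and the moves made."""
    placement = copy.deepcopy(placement)  # leave the caller's data untouched
    moves: List[Move] = []
    while True:
        busiest = max(placement, key=lambda h: host_load(placement, h))
        idlest = min(placement, key=lambda h: host_load(placement, h))
        busy_load = host_load(placement, busiest)
        # Only VMs with a nonzero load can reduce the imbalance.
        candidates = {vm: load for vm, load in placement[busiest].items() if load > 0}
        if busy_load <= threshold or not candidates:
            break
        vm = min(candidates, key=candidates.get)
        load = candidates[vm]
        # Stop if the move would simply turn the destination into the new hotspot.
        if host_load(placement, idlest) + load >= busy_load:
            break
        del placement[busiest][vm]
        placement[idlest][vm] = load
        moves.append((vm, busiest, idlest))
    return placement, moves


if __name__ == "__main__":
    cluster = {"host1": {"a": 40, "b": 30, "c": 20}, "host2": {"d": 10}, "host3": {}}
    new_placement, moves = balance(cluster, threshold=60)
    for vm, src, dst in moves:
        print(f"Live Migrate {vm}: {src} -> {dst}")
```

Each accepted move strictly reduces the spread of load across hosts, so the loop always terminates.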

Guards and Shields

Hyper-V in Windows Server 2016 is the only virtualization platform that offers a solution to the problem of host administrators' unlimited control. If I'm the virtualization admin, I can take copies of virtual hard disks, mount them and perform offline attacks against sensitive data such as the AD database; I could also attach a debugger to a running VM and inspect memory contents or inject code into a VM's memory. This is a concern for public clouds and hosting providers, but even in private clouds many enterprises won't virtualize their domain controllers for this very reason.

Shielded VMs solve this problem. A Shielded VM relies on several different technologies working in tandem: Generation 2 VMs with virtual TPM chips enable full disk encryption through dm-crypt in Linux and BitLocker in Windows VMs. Generation 2 VMs boot from UEFI using Secure Boot (both Windows and Linux), which protects the firmware and OS startup files.

On the host side is the Host Guardian Service (HGS), running on three or more protected servers in an AD forest separate from the rest of your network. HGS checks the health of each approved Hyper-V host, with two levels of attestation:

- Administrator attestation relies on adding trusted hosts to a group in AD.

- Hardware attestation mode relies on a TPM 2.0 chip in each host to ensure the hypervisor is secure, and that no malicious code has been loaded prior to the OS starting.

Finally, the application or VM owner gives the fabric administrators encrypted files with shielding data that Hyper-V uses to unlock the VMs during their boot process. This occurs without host administrators having access to the secrets inside the shielding data. Because Shielded VMs only run on specific trusted hosts, a copy of a VM can't be started on any other host.
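The trust chain can be pictured as a gatekeeper that releases a VM's key only to hosts that pass attestation. The Python below is a hypothetical toy: the class, the AD group name and the single hash standing in for a TPM measured-boot log are all invented, and real HGS attestation and key protection are considerably more involved. It does show why a copied VM is useless on an untrusted host: without the key, it can't boot.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Optional, Set


def measure(boot_chain: bytes) -> str:
    """Stand-in for a TPM measured-boot digest (illustrative only)."""
    return hashlib.sha256(boot_chain).hexdigest()


@dataclass
class Host:
    name: str
    ad_groups: Set[str] = field(default_factory=set)
    boot_measurement: str = ""


@dataclass
class ToyGuardianService:
    mode: str                             # "admin" or "hardware"
    trusted_group: str = "Guarded Hosts"  # hypothetical AD group name
    approved_measurements: Set[str] = field(default_factory=set)

    def attest(self, host: Host) -> bool:
        if self.mode == "admin":
            # Administrator attestation: membership in a trusted AD group.
            return self.trusted_group in host.ad_groups
        if self.mode == "hardware":
            # Hardware attestation: boot measurements must match a known-good baseline.
            return host.boot_measurement in self.approved_measurements
        raise ValueError(f"unknown attestation mode: {self.mode}")

    def release_key(self, host: Host, vm_key: bytes) -> Optional[bytes]:
        # The key that unlocks the shielded VM goes only to attested hosts.
        return vm_key if self.attest(host) else None


if __name__ == "__main__":
    good = measure(b"approved hypervisor + boot chain")
    hgs = ToyGuardianService(mode="hardware", approved_measurements={good})
    trusted = Host("hv01", boot_measurement=good)
    rogue = Host("lab-pc", boot_measurement=measure(b"tampered boot chain"))
    print(hgs.release_key(trusted, b"vm-key") is not None)  # True
    print(hgs.release_key(rogue, b"vm-key") is not None)    # False
```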

This solution also encrypts all Live Migration traffic and disables VM crash dumps; if you enable them, they're encrypted, as are host crash dumps. Today the guest VMs need to run Windows Server 2012 or later, as earlier OSes aren't supported in Generation 2 VMs.

There are built-in tools for converting existing VMs to Shielded VMs, and Azure Pack provides the end-user interface for providing the shielding data and provisioning VMs.

Because this solution requires new hardware (TPM 2.0 is very new), it's unlikely that this technology is going to see rapid adoption; but in today's security landscape it's an innovative approach to a crucial problem.

Containers as Far as the Eye Can See

Another big innovation in Windows Server 2016 is containers. These are already available on your Windows 10 PC if you have the Anniversary Update. The ability to virtualize the OS into lightweight copies, instead of having the whole virtual server and OS stack in each VM, provides numerous benefits. Containers start in less than a second and use significantly fewer resources than VMs. I've seen reports of businesses trialing Windows containers with more than 800 containers running per host.

But the security isolation of Windows (and Linux) containers is a concern. Even though each container looks like a separate OS to the applications running in it, writing malicious code that breaks out of a container into the host OS should be significantly easier than breaking out of a VM.

Because of this, Windows Server 2016 offers another flavor of container in the form of Hyper-V containers. Here, each container runs in its own lightweight VM. They still start very quickly, but provide the security isolation of a full VM.

Developers don't need to worry about which is being used; they simply develop using containers, and at deployment time Windows or Hyper-V containers can be selected. Windows Server 2016 also comes with Docker built in for managing containers. Note that this is not an emulation or something "Docker-like"; it's the Docker engine built into Windows. Alternatively, you can use Windows PowerShell to manage containers. All this container goodness also enables Hyper-V to do nested virtualization for the first time, so you can run a VM inside a VM and so forth.
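Because the isolation choice is made at deployment time, the same image can be launched either way. This Python sketch only builds a Docker command line; `--isolation` is a real `docker run` option on Windows hosts, with `process` giving a Windows Server container and `hyperv` a Hyper-V container, while the helper name and the image name in the example are placeholders.

```python
from typing import List

ISOLATION_MODES = ("process", "hyperv")


def docker_run_command(image: str, isolation: str) -> List[str]:
    """Return a `docker run` argument list for the chosen isolation mode."""
    if isolation not in ISOLATION_MODES:
        raise ValueError(f"isolation must be one of {ISOLATION_MODES}")
    return ["docker", "run", "--rm", f"--isolation={isolation}", image]


if __name__ == "__main__":
    image = "example/app:latest"  # placeholder image name
    for mode in ISOLATION_MODES:
        print(" ".join(docker_run_command(image, mode)))
```

On a Windows container host, the resulting list could be handed to `subprocess.run(..., check=True)`; nothing about the application image changes between the two modes.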

New File Systems and More

Resilient File System (ReFS), introduced in Windows Server 2012, was rarely used because of its limitations. Those limits are now gone, and ReFS is the recommended file system for hosting VMs, offering very fast checkpoint merges and incredibly fast creation of large, fixed virtual disks.

PowerShell Direct lets you run cmdlets in VMs over the VMBus, obviating the need for PowerShell Remoting -- provided you have appropriate credentials. Hyper-V Replica makes it easier to add virtual disks to replicated VMs without upsetting ongoing replication, and lets you optionally add the new disk to the replicated set.

Adding and removing virtual NICs are now online operations; if you've assigned a fixed amount of memory to a VM, you can now change that amount while the VM is running. If you're doing guest clustering of VMs with the common storage provided through Shared VHDX, you can now back it up from the host, as well as resize the Shared VHDX file while the VMs are running.

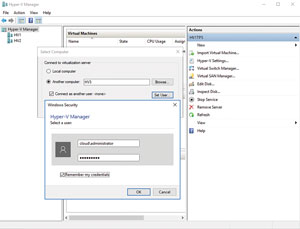

The new Hyper-V Manager, shown in Figure 4, lets you connect to hosts using alternate credentials. It can also manage Windows Server 2012 and 2012 R2 hosts. Integration components for VMs are now distributed through Windows Update instead of being installed from the host.

Figure 4. Hyper-V Manager is now more powerful.

Hyper-V in Windows Server 2016 is a great platform on which to build a private cloud fabric, thanks to the inclusion of so much SDN technology born in Azure. It's also the best stepping stone to a hybrid cloud, especially with the forthcoming Azure Stack, which builds on the Windows Server 2016 Hyper-V platform.