The 2016 Virtualization Review Editor's Choice Awards

Our picks for the best of the best.

As we close the book on 2016 and start writing a new one for 2017, it's a good time to reflect on the products we've liked best over the past year. In these pages, you'll find old friends, stalwart standbys and newcomers you may not have even thought about.

Our contributors are experts in the fields of virtualization and cloud computing. They work with and study this stuff on a daily basis, so a product has to be top-notch to make their lists. But note that this isn't a "best of" type of list; it's merely an account of the technologies they rely on to get their jobs done, or maybe products they think are especially cool or noteworthy.

There are no criteria in use here, nor any agenda at work. There was no voting, no "popularity contest" mindset. These are simply each writer's individual choices for the most useful or interesting technology they used in 2016. As you can see, it was a fine year to work in virtualization.

Trevor Pott

HC3

Scale Computing

Why I love it: Compute and storage together with no fighting; this is the promise of hyper-convergence, and Scale succeeds at it. Scale clusters just work, are relatively inexpensive, and deal with power outages and other unfortunate scenarios quite well.

What would make it even better: Scale needs a self-service portal, a virtual marketplace and a UI that handles 1,000-plus virtual machines (VMs) more efficiently.

The next best product in this category: There are many hyper-converged infrastructure (HCI) competitors in this space, and each has its own charms. Try before you buy to find the right one for you.

Remote Desktop Manager (RDM)

Devolutions

Why I love it: RDM changed my universe. Once, my world was a series of administrative control panels and connectivity applications, each separate and distinct. After RDM, I connect to the world in well-organized tabbed groups. A single click opens a host of different applications into a collection of servers, allowing me to bring up a suite of connectivity options with no pain or fuss.

What would make it even better: The remote desktop protocol (RDP) client can be a little glitchy, but when it is, there's usually something pretty wrong with the graphics drivers on the system.

The next best product in this category: Royal TSX

Hyperglance

Hyperglance Ltd.

Why I love it: Hyperglance makes visualizing and exploring complex infrastructure easy. It makes identifying and diagnosing issues with infrastructure or networks very simple. Nothing else on the market quite compares. Humans are visual animals, and solving problems visually is just easier.

What would make it even better: Hyperglance is an early startup, so there's a lot of room to improve; however, they're doing work that no one else seems to be doing, so it's kind of hard to complain.

The next best product in this category: So far as I know, there are no relevant competitors that aren't still in the experimental stage.

NSX

VMware Inc.

Why I love it: NSX is the future. Love it or hate it, VMware has set the market for software-defined networking (SDN) for the enterprise. More than just networking, NSX is the scaffolding upon which next-generation IT security will be constructed.

What would make it even better: Price and ease of use are the perennial bugbears of VMware, but time and competition will solve this.

The next best product in this category: OpenStack Neutron

5nine Manager

5nine Software Inc.

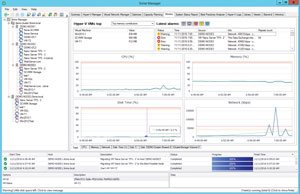

Why I love it: 5nine Manager makes Hyper-V usable for organizations that don't have the bodies to dedicate specialists to beating Microsoft System Center into shape.

What would make it even better: At this point, 5nine Manager is a mature product. There are no major flaws of which I am aware, and the vendor works hard to patch the product regularly.

The next best product in this category: Microsoft Azure Stack

Mirai IoT Botnet

(If I told you the URL, I'd probably go to jail)

Why I love it: The Mirai IoT botnet has already changed the world, and those were just the proof-of-concept tests! After successful attacks against high-profile targets, the Mirai botnet has become a fixture of the "dark Web": a cloud provider to ne'er-do-wells that reportedly rivals major legal cloud providers in ease of use. While the Mirai botnet would get recognition on its own for actually finding a use for all the IoT garbage we keep connecting to the Internet, its true benefit is its service as both a sharp stick in the eye to the world's apathetic legislators and an enabler for unlimited "I told you sos" from the tech community.

What would make it even better: As an IoT botnet, Mirai's compute nodes are somewhat limited. It has no virtualization support (either containers or hypervisor-based), requiring users to script their attacks using commands native to the botnet. As the compute nodes are almost all Unix derivatives, however, this isn't a huge impediment, and they generally respond to the same commands.

The next best product in this category: The Nitol Botnet

Trevor Pott is a full-time nerd from Edmonton, Alberta, Canada. He splits his time between systems administration, technology writing and consulting. As a consultant he helps Silicon Valley startups better understand systems administrators and how to sell to them.

Jon Toigo

[Jon decided to forgo categories. In the holiday spirit, we've decided to not punish him for doing so. -- Ed.] The good news about 2016 is that the year saw the emergence of promising technologies that could really move the ball toward realizing the vision and promise of both cloud computing and virtualization. Innovators like DataCore Software, Acronis Software, StrongBOX Data Solutions, StarWind Software, and ioFABRIC contributed technology that challenged what has become a party line in the software-defined storage (SDS) space and expanded notions of what could be done with cloud storage from the standpoint of agility, resiliency, scalability and cost containment.

Adaptive Parallel I/O Technology

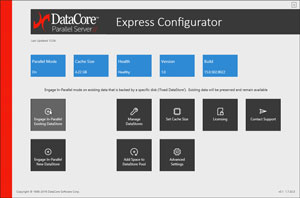

DataCore Software

In January, DataCore Software provided proof that the central marketing rationale for transitioning shared storage (SAN, NAS and so on) to direct-attached/software-defined kits was inherently bogus. DataCore Adaptive Parallel I/O technology was put to the test on multiple occasions in 2016 by the Storage Performance Council, always with the same result: parallelization of RAW I/O significantly improved the performance of VMs and databases without changing storage topology or storage interconnects. This flew in the face of much of the woo around converged and hyper-converged storage, whose pitchmen attributed slow VM performance to storage I/O latency -- especially in shared platforms connected to servers via Fibre Channel links.

While it is true that I like DataCore simply for being an upstart that proved all of the big players in the storage and the virtualization industries to be wrong about slow VM performance being the fault of storage I/O latency, the company has done something even more important. Its work has opened the door to a broader consideration of what functionality should be included in a properly defined SDS stack.

In DataCore's view, SDS should be more than an instantiation on a server of a stack of software services that used to be hosted on an array controller. The SDS stack should also include the virtualization of all storage infrastructure, so that capacity can be allocated independently of hypervisor silos to any workload in the form of logical volumes. And, of course, any decent stack should include RAW I/O acceleration at the north end of the storage I/O bus to support system-wide performance.

DataCore hasn't engendered a lot of love, however, from the storage or hypervisor vendor communities with its demonstration of 5 million IOPS from a commodity Intel server using SAS/SATA and non-NVMe flash devices, all connected via a Fibre Channel link. But it is well ahead of anyone in this space. IBM may have the capabilities in its Spectrum portfolio to catch up, but the company would first need to get a number of product managers of different component technologies to work and play well together.

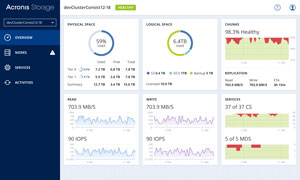

Acronis Storage

Acronis International GmbH

Most server admins of the last decade remember Acronis as the source of must-have server administration tools. More recently, it seemed that the company was fighting for a top spot in the very crowded server backup space.

Recently, however, the company released its own SDS stack, Acronis Storage, which caught my attention and made my brain cells jiggly. The reason traces back to problems with SDS architecture since the idea was pushed into the market (again) by VMware a couple of years back.

Many have bristled at the arbitrary and self-serving nature of VMware's definition of SDS, which much of the industry seemed to adopt whole cloth and without much pushback. Simply put, VMware's choices about which storage functionality to instantiate in a server-side software stack and which to leave on the array controller made little technical sense.

RAID, for example, was a function left on the controller, probably to support the architecture of VMware's biggest stockholder at the time, EMC. From a technical standpoint, this choice didn't make much sense because RAID was beginning to trend downward, losing its appeal to consumers in the face of larger storage media and longer rebuild times following a media failure.

With Acronis Storage, smart folks at Acronis were able to rewrite that choice by introducing CloudRAID into the company's SDS stack. CloudRAID enables a more robust and reliable data protection scheme than one can expect from traditional software or hardware RAID functionality implemented in siloed arrays. Acronis Storage's use of the term RAID may strike some as confusing, especially given that the company has embraced erasure coding as a primary method of data protection. Regardless of the moniker, creating data recoverability via the disassembly and reassembly of objects from distributed piece parts makes more sense than RAID parity striping given the large sizes of storage media today and the potentially huge delays in recovering petabytes and exabytes of data through traditional RAID recovery methods.
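For readers who haven't worked with erasure coding, the idea of rebuilding an object from distributed pieces can be sketched in a few lines of Python. This is only an illustration, not a description of how CloudRAID works internally: it uses the simplest possible scheme (k data fragments plus one XOR parity fragment, which survives the loss of any single fragment), and the function names `encode` and `decode` are hypothetical. Production systems typically use stronger codes that tolerate multiple simultaneous losses.

```python
from functools import reduce
from typing import List, Optional


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """XOR two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def encode(data: bytes, k: int) -> List[bytes]:
    """Split an object into k equal-size fragments plus one XOR parity fragment."""
    frag_len = -(-len(data) // k)  # ceiling division
    padded = data.ljust(frag_len * k, b"\0")  # pad so all fragments match in size
    fragments = [padded[i * frag_len:(i + 1) * frag_len] for i in range(k)]
    parity = reduce(xor_bytes, fragments)
    return fragments + [parity]


def decode(fragments: List[Optional[bytes]], original_len: int) -> bytes:
    """Reassemble the object when at most one fragment (data or parity) is lost."""
    missing = [i for i, frag in enumerate(fragments) if frag is None]
    if len(missing) > 1:
        raise ValueError("a single-parity code tolerates only one lost fragment")
    rebuilt = list(fragments)
    if missing:
        # The XOR of all surviving fragments equals the missing one.
        survivors = [frag for frag in fragments if frag is not None]
        rebuilt[missing[0]] = reduce(xor_bytes, survivors)
    # Drop the parity fragment and strip the padding.
    return b"".join(rebuilt[:-1])[:original_len]
```

In use, each of the five fragments returned by `encode(obj, 4)` would live on a different node; if any one node disappears, `decode` rebuilds the object from the other four without reading an entire failed disk, which is the property that makes this approach attractive as media sizes grow.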

Acronis also introduced some forward-looking technology to support BlockChain directly in its stack. This is very interesting because it reflects an investment in one of the hottest new cloud memes: a systemic distributed ledger and trust infrastructure. IBM and others are also hot for BlockChain, but Acronis has put the technology within reach of the smaller firm (or cloud services provider) that can't afford the price tag for big iron.

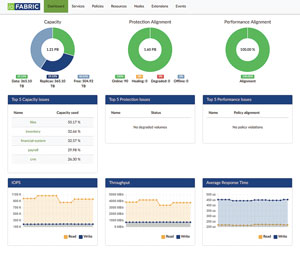

Vicinity

ioFabric Inc.

Another disruptor in the SDS space is ioFabric, whose Vicinity product is called "software-defined storage 2.0" by its vendor. The primary value of the approach taken by this vendor is the automation of the pooling of storage and the migration of data between pools, whether for load balancing or for hierarchical storage management.
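To make the idea of automated migration between pools concrete, here is a minimal Python sketch of an activity-based tiering pass. Everything in it is an assumption made for illustration: the `Volume` and `Pool` classes, the `reads_per_day` metric and the `retier` function are hypothetical, and nothing here reflects how Vicinity actually decides what to move.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Volume:
    name: str
    reads_per_day: int  # hypothetical activity metric


@dataclass
class Pool:
    name: str
    volumes: List[Volume] = field(default_factory=list)


def retier(fast: Pool, slow: Pool, hot_threshold: int) -> List[str]:
    """Promote busy volumes to the fast pool, demote idle ones; return a move log."""
    # Decide everything first so a volume is never moved twice in one pass.
    promote = [v for v in slow.volumes if v.reads_per_day >= hot_threshold]
    demote = [v for v in fast.volumes if v.reads_per_day < hot_threshold]
    moves = []
    for vol in promote:
        slow.volumes.remove(vol)
        fast.volumes.append(vol)
        moves.append(f"{vol.name}: {slow.name} -> {fast.name}")
    for vol in demote:
        fast.volumes.remove(vol)
        slow.volumes.append(vol)
        moves.append(f"{vol.name}: {fast.name} -> {slow.name}")
    return moves
```

A real product would weigh far more than one counter (capacity, latency targets, cost and protection policy, for a start), but the shape is the same: measure, compare against policy and move data without an administrator in the loop.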

ioFabric's addition of automated pooling and tiering to the SDS functional stack is very interesting, in part because it counters the rather ridiculous idea of "flat and frictionless" storage that has cropped up in some corners of the IT community, courtesy of over-zealous marketing by all-flash array vendors.

Flat storage advocates argue that all data movement after initial write produces unwanted latency. So, backup and archive are out, as is hierarchical storage and periodic data migrations between tiers. As an alternative to archive and backup, advocates suggest a strategy of shelter-in-place; when the data on a flash array is no longer being re-referenced, just power down the array, leave it in place and roll out another storage node. Such an architectural model fails to acknowledge the vulnerability of keeping archival or backup data in the same location as active production data, where it will be exposed to common threats and disaster potentials.

Tacitly, Vicinity seems to acknowledge that many hypervisor computing jockeys know next to nothing about storage, so even the basic functions of data migration across storage pools need to be automated. Vicinity does a nice job of this.

My only concern is whether Vicinity is sufficiently robust to provide the underlayment for intelligent and granular data movement -- based on the business value of data -- across infrastructure. But, at least, the technology provides an elegant starter solution to the knotty problem of managing data across increasingly siloed storage over time.

Virtual Tape Library (VTL)

StarWind Software Inc.

As with ioFabric, StarWind Software is another independent SDS developer. Its virtual SAN competes well in every respect with VMware vSAN and similar products, but StarWind has gone beyond the delivery of generic virtual SAN software (a very crowded field) with the introduction in 2016 of a VTL solution.

Basically, the StarWind VTL is a software-defined virtual tape library created as a VM with an SDS stack. The Web site offers a somewhat predictable business case for VTL, including its potential as a gateway to cloud-based data backup, and the VTL itself is available on some clouds as an "app."

What I like about the idea (which has a long pedigree back to software VTLs in the mainframe world three decades ago) is the convenience it provides for backing up data, especially in branch offices and remote offices that may lack the staff skills to make a good job of backup.

The StarWind VTL can be plugged into clusters of SDS storage, whether converged or hyper-converged, and used as a copy target. Then the appliance can replicate its data without creating latency in the production storage environment to another VTL, whether in the corporate datacenter or in a cloud. It's a cool and purposeful implementation of a data protection service leveraging what's good about SDS. And thus far, I haven't seen a lot of competitors in the market.

StrongLINK

StrongBox Data Solutions

All of my previous picks have fallen within the boundaries of the marketing term "software-defined storage." SDS is important insofar as it enables greater agility, elasticity and resiliency, plus lower costs in storage platform and storage service delivery. However, while all products discussed thus far have made meaningful improvements in the definition, structure, and function of storage platform and service management, they do not address the key challenge of data management, which is where the battle to cope with the coming zettabyte wave will ultimately be waged.

With projections of between 10ZB and 60ZB of new data requiring storage by 2020, more than just capacity pools of flash and disk will be required. Also, more than simple load management will be required to economize on storage costs. Companies and cloud services providers are finally going to need to get real about managing data itself.

That's the main reason I'm so keen on technologies like StrongLINK from StrongBox Data Solutions. StrongLINK can best be described as cognitive data management technology. It combines a cognitive computing processor with an Internet of Things architecture to collect real-time data about data, and about storage infrastructure, interconnects, and services in order to implement policy-based rules for hosting, protecting, and preserving file and object data over the life of the data.

StrongLINK is a pioneering technology for automating the granular management of data itself, regardless of the characteristics, topology or location of data storage assets. Only IBM, at this point, appears to have the capabilities to develop a competing technology, but only if Big Blue can cajole numerous product managers to work and play well together.

For now, if you want to see what can be done with cognitive data management, StrongLINK is ahead of the curve.

Jon Toigo is a 30-year veteran of IT, and the managing partner of Toigo Partners International, an IT industry watchdog and consumer advocacy firm. He is also the chairman of the Data Management Institute, which focuses on the development of data management as a professional discipline.

Brien M. Posey

Virtualization Manager

SolarWinds Worldwide LLC

Why I love it: SolarWinds Virtualization Manager is the best tool around for managing heterogeneous virtualized environments. The software works equally well for managing both VMware and Hyper-V.

In version 7.0, SolarWinds introduced the concept of recommendations. The SolarWinds Orion Console displays a series of recommendations of things you can do to make your virtualization infrastructure perform better. The software also monitors the environment's performance and generates alerts (in a manner similar to Microsoft System Center Operations Manager) when there's an outage, or when performance drops below a predefined threshold. Virtualization Manager also has a rich reporting engine and can even help with capacity planning.

What would make it even better: The software works really well, but sometimes lags behind the environment, presumably because of the way the polling process works. It would be great if the software responded to network events in real time.

The next best product in this category: Splunk Enterprise

Turbonomic

Turbonomic Inc.

Why I love it: Turbonomic (which was previously VMTurbo) is probably the best solution out there for virtualization infrastructure automation. The software monitors hosts, VMs and dependencies in an effort to assess application performance. Because the software has such a thorough understanding of the environment, it can add or remove resources such as CPU or memory on an as-needed basis. The software can also automatically scale workloads by spinning VM instances up (or down) when necessary. These types of operations can be performed for on-premises workloads, or for workloads running in the public cloud.

What would make it even better: Nothing

The next best product in this category: Jams Enterprise Job Scheduling

System Center 2016 VM Manager

Microsoft

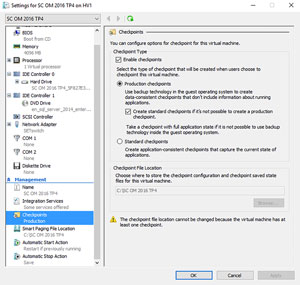

Why I love it: VM Manager is hands down the best tool for managing Microsoft Hyper-V. Of course, this is to be expected, because VM Manager is a Microsoft product. Microsoft has added quite a bit of new (and very welcome) functionality to the 2016 version. For example, the checkpoint feature has been updated to use the Volume Shadow Copy Services, making checkpoints suitable for production use. Microsoft has also added support for Nano Server, and has even made it possible to deploy the SDN stack from a service template.

What would make it even better: VM Manager 2016 would be even better if it had better support for managing VMware environments. Microsoft has always provided a degree of VMware support, but this support exists more as a convenience feature than as a viable management tool.

The next best product in this category: The second best tool for managing Hyper-V is PowerShell, with Microsoft's Hyper-V Manager being third best. Both PowerShell and Hyper-V Manager are natively included with Windows.

Brien M. Posey is a seven-time Microsoft MVP with over two decades of IT experience. As a freelance writer, Posey has written many thousands of articles and written or contributed to several dozen books on a wide variety of IT topics.

Paul Schnackenburg

Operations Management Suite

Microsoft

Why I love it: Operations Management Suite (OMS) has been on a cloud-fueled growth spurt since it was born just 18 months ago. From its humble beginnings in basic log analysis, it has grown to 25 "solutions": packs that take log data and give you actionable insight for Active Directory, SQL Server, Containers, Office 365, patch status, antimalware status, Azure Site Recovery and networking, as well as VMware monitoring (plus a lot more). Both Linux and Windows workloads are covered and they can be anywhere: on-premises, in Azure or in another public cloud.

If you have System Center Operations Manager (SCOM), OMS will use the SCOM agents to upload the data to your central SCOM server and from there to the cloud.

There's no doubt that as public cloud and hybrid cloud become the new normal, being able to monitor your workloads -- no matter where they're running -- will be crucial. And OMS continues to surprise with the depth of insight into network latency, how long it takes to install patches or Office 365 activity that can be had using log data. Recently, the ability to upload custom log data from any source was added, increasing the scope of OMS.

Add to this Azure Backup and Automation and OMS is now the best way to monitor, protect and manage any workload in any cloud.

The best reason to love OMS, however, is the "free forever" (max 500MB/day, seven-day data retention) tier, which means you don't have to take my word for it; you can simply sign up, connect a few servers and see how easy it is to gain insights.

What would make it even better: The ability to monitor more third-party workloads would be beneficial and is probably coming, given the cloud cadence development pace.

The next best product in this category: Splunk

Windows Server 2016

Microsoft

Why I love it: After a very long gestation, Windows Server 2016 is finally here. There are many new and improved features; top of the list are improvements in Hyper-V such as 24TB memory and up to 512 cores with hyperthreading in a host, along with 240 virtual processors and up to 12TB of memory in a VM. On the storage side, there's now Storage Spaces Direct, which uses internal HDD, SSD or NVMe devices in each host to create a pool of storage on two to 16 hosts.

Creating fixed-size virtual hard disks and merging checkpoints are lightning fast with the new file system, ReFS, which has been substantially improved since 2012 and is now the preferred file system for VM storage and backup.

Backup is completely rewritten with Resilient Change Tracking now a feature of the platform itself. Fast networking, 10 Gbps or 40 Gbps RDMA NICs, can now be used for both storage and VM traffic simultaneously, minimizing the number of interfaces each host requires.

PowerShell Direct allows you to run cmdlets in VMs from the host without having to set up remoting. Upgrading is easier than ever with Rolling Cluster Upgrades where you can gradually add Windows Server 2016 nodes to a 2012 R2 cluster until all nodes are upgraded. And then there are Shielded VMs, which bar fabric administrators from access to VMs, a totally unique feature to Hyper-V.

And all this can be done on the new deployment flavor of Windows Server -- Nano server, a headless, streamlined server with minimal disk and memory footprint.

Hyper-V was already the most innovative of the main virtualization platforms and this new version dials it up to 11, especially when you include Windows Server/Hyper-V Containers.

What would make it even better: Support for Live Migration to and from Azure would be cool.

The next best product in this category: VMware vSphere

System Center 2016 VM Manager

Microsoft

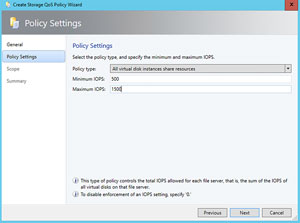

Why I love it: Building on all the new features in Windows Server 2016, System Center VM Manager lets you bring in bare metal servers and automatically deploy them as Hyper-V or storage hosts. This also adds the ability to create hyper-converged clusters where the Hyper-V hosts also act as storage hosts using Storage Spaces Direct (S2D).

VM Manager also manages the Host Guardian Service to build Guarded Fabric and Shielded VM, as well as the new Storage Replica (SR) feature. SR lets you replicate any storage (DAS or SAN) to any other location, either synchronously (zero data loss) or asynchronously for longer distances.

Software-defined everything is the newest buzzword, and VM Manager doesn't disappoint with full orchestration of the new Network Controller role, which provides a Software Load Balancer (SLB) and Windows Server Gateway (WSG) for tenant VPN connectivity. VM Manager also manages the new centralized storage Quality of Service policies that can be used to control the storage IOPS hunger of "noisy neighbors."

Data Protection Manager lets you back up VMs on S2D clusters; when combined with the ReFS file system, it provides 30 percent to 40 percent storage space savings, along with 70 percent faster backups.

What would make it even better: VM Manager 2016 can manage vSphere 5.5 and 5.8, but support for 6.0 would be nice.

The next best product in this category: VMware vCenter

Azure Stack

Microsoft

Why I love it: Even though Azure Stack is only in its second Technical Preview (the finished product is due out mid-2017), it promises to be a game-changer for private cloud. Today, public Azure makes no secret of the fact that it runs on Hyper-V, which provides for easy portability of on-premises workloads. But the deployment and management stacks around on-premises and public Azure are mostly different. Azure Stack provides a true Azure experience every step of the way, with ARM templates working identically across both platforms. Combined with the fulfillment of a true hybrid cloud deployment model, that's something large enterprises will appreciate.

What would make it even better: The ability to run it on the hardware of your own choosing, instead of only prebuilt OEM systems.

The next best product in this category: Azure Pack provides an "Azure like" experience, but not true code compatibility.

Paul Schnackenburg, MCSE, MCT, MCTS and MCITP, started in IT in the days of DOS and 286 computers. He runs IT consultancy Expert IT Solutions, which is focused on Windows, Hyper-V and Exchange Server solutions.

Dan Kusnetzky

SANsymphony and Hyper-converged Virtual SAN

DataCore Software

Why I love it: DataCore is a company I've tracked for a very long time. The company's products include ways to enhance storage optimization, improve storage efficiency and make the most flexible use of today's hyper-converged systems.

The technology supports physical storage, virtual storage or cloud storage in whatever combination fits the customer's business requirements. The technology supports workloads running directly on physical systems, in VMs or in containers.

The company's Parallel I/O technology, by breaking down OS-based storage silos, makes it possible for customers to get higher levels of performance from a server than many would believe possible (just look at the benchmark data if you don't believe me). This, by the way, also means that smaller, less-costly server configurations can support large workloads.

What would make it even better: I can't think of anything.

The next best product in this category: VMware vSAN

Apollo Cloud

PROMISE Technology Inc.

Why I love it: I've had the opportunity to try out cloud storage services, and some left me concerned about the terms and conditions under which the service was supplied, how private the data I stored there would be and how secure that data really was.

Apollo Cloud is based on the use of a local storage device. This means that my data stays local even though it can be accessed remotely. Furthermore, the device offers easy-to-use local management software, as well. Data can be accessed by systems running Windows, Linux, macOS, iOS and Android. I found it quite easy to share documents between and among all of these different types of systems and between staff and clients.

What would make it even better: At this time, special client software must be installed on some systems to overcome limitations built into the OSes that support those environments. A way to connect to the storage server without having to add an app or driver to each and every device would make this solution far easier to use for midsize companies.

The next best product in this category: Nexsan Transporter

DxEnterprise

DH2i

Why I love it: DH2i has focused on making it possible for SQL Server-based applications to move freely and transparently among physical, virtual and cloud environments. Most recently, it announced an approach to enable SQL Server-based application mobility based on containers. RackSpace and DH2i have been working together to provide a SQL Server Container-as-a-Service offering, which should be of interest to companies interested in allowing their workloads to burst out of their internal network into a RackSpace-hosted cloud environment.

What would make it even better: I'm always surprised when a client whose infrastructure is based on SQL Server hasn't heard of DH2i and its products. Clearly, the company would do better if it could just make the industry aware of what it's doing.

The next best product in this category: Microsoft offers SQL Server as an Azure service, and also makes it possible for its database to execute on physical or virtual systems.

Red Hat Containers

Red Hat Inc.

Why I love it: The IT industry really took notice of containers in 2016. Red Hat has integrated containers into many of its products (Red Hat Linux, Red Hat OpenShift, Red Hat Virtualization and so on) and made them production-ready for customers. Red Hat did the hard work of integrating containers into its management, security and cloud computing environments. And if a customer really wants to conduct a computer science project, Red Hat is there, too.

What would make it even better: Nothing, really. What impressed me was the company's desire to move this technology from a computer science project into a production-ready tool without removing customer choice. If a customer wants to tweak internal settings or do its own integration and testing work, Red Hat is happy to help them do it.

The next best product in this category: Others in the community, including Docker Inc., IBM, HP and SUSE, are offering containers. Red Hat just did a better job of putting together a production-ready computing environment.

Daniel Kusnetzky, a reformed software engineer and product manager, founded Kusnetzky Group LLC in 2006. He's literally written the book on virtualization and often comments on cloud computing, mobility and systems software. He has been a business unit manager at a hardware company and head of corporate marketing and strategy at a software company. In his spare time, he's also the managing partner of Lux Sonus LLC, an investment firm.