In-Depth

Intel Releases GA of 2nd-Gen 'Cascade Lake' Xeon CPUs

Support for Optane DC Persistent Memory is one of many new features in Intel's latest generation of chips.

Today (April 2, 2019), Intel Corp. announced the general availability of its latest generation of Xeon CPUs, which the company has code-named "Cascade Lake." What makes this generation of CPUs so interesting isn't the new features in the chips (although the chips do have some interesting new features), but the new tier of memory that they support. In this article, I'll first talk about this new tier of memory, then I'll investigate the new features of the chips that I find most interesting, and finally I'll close with a discussion on how the Cascade Lake CPU architecture will impact the datacenter.

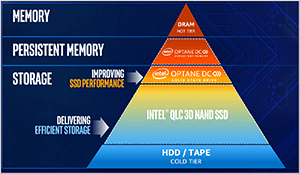

The big news with Cascade Lake is that the chips support Optane DC Persistent Memory (DCPM). Optane is a new tier of memory (Figure 1) that's faster than solid-state drive (SSD) storage and less expensive than Dynamic Random Access Memory (DRAM). Unlike DRAM, however, Optane is persistent, meaning that it retains the data stored on it across a system reboot. As a side note, Intel has been shipping SSDs that use Optane for a while, but these are the first CPUs that support Optane DCPM attached to the system via a Double Data Rate (DDR) memory slot rather than through a storage interface.

Figure 1. The New Optane DC Persistent Memory tier of memory. Source: Intel Corp.

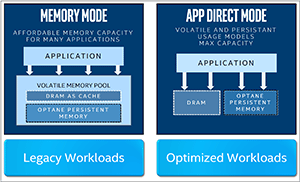

Although there hasn't been a lot of third-party verification of Optane DCPM's performance, Intel says that the latency of 3D XPoint (the technology behind Optane) is about 10 times higher than that of DRAM but about 1,000 times lower than that of NAND SSDs. Because Optane DCPMMs plug directly into DDR4 slots, the path to the CPU is considerably shorter. As this is a new tier of memory, the community is still devising techniques to squeeze the most out of it. In the meantime, Optane DCPM can be used in two ways (Figure 2): Application Direct Mode, which requires applications to be rearchitected, and Memory Mode, which lets legacy workloads use it without any application re-architecture.

Figure 2. Optane DC Persistent Memory workloads. Source: Intel Corp.
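
What "rearchitected" means in practice: in Application Direct Mode, software typically memory-maps a file on a DAX-capable file system that sits on the persistent memory, then uses ordinary loads and stores instead of read/write system calls. The Python sketch below only illustrates that pattern. The path `/mnt/pmem/counter.bin` is a hypothetical mount point, and `mmap.flush()` (an `msync` under the hood) stands in for the finer-grained cache-flush primitives that libraries such as Intel's Persistent Memory Development Kit (PMDK) provide. On an ordinary disk, the same code simply behaves as a normal memory-mapped file.

```python
import mmap
import os
import struct

# Hypothetical mount point of a DAX file system on an Optane DCPM namespace.
DEFAULT_PMEM_FILE = "/mnt/pmem/counter.bin"
SIZE = 8  # room for one unsigned 64-bit counter


def bump_persistent_counter(path: str = DEFAULT_PMEM_FILE) -> int:
    """Increment a counter kept in a memory-mapped file and return the new value."""
    fd = os.open(path, os.O_RDWR | os.O_CREAT, 0o644)
    try:
        # Size the backing file on first use so the mapping has something to map.
        if os.fstat(fd).st_size < SIZE:
            os.ftruncate(fd, SIZE)
        with mmap.mmap(fd, SIZE) as region:
            (value,) = struct.unpack_from("<Q", region, 0)
            value += 1
            # A plain store into the mapping, with no write() system call.
            struct.pack_into("<Q", region, 0, value)
            # Ask the OS to make the update durable before returning.
            region.flush()
            return value
    finally:
        os.close(fd)
```

Calling the function repeatedly, even from separate process runs, keeps counting up, because the value lives in the mapped file rather than in volatile process memory.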

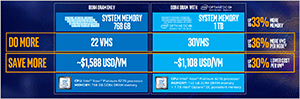

One of the most interesting claims made regarding Optane DCPM, albeit unverified by a third party, is that it can drive down the cost of each virtual machine (VM) on a server by one-third (Figure 3).

Figure 3. Optane DC Persistent Memory cost per virtual machine. Source: Intel Corp.
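
Cost-per-VM claims like this generally rest on simple arithmetic. If VM density on a host is limited by memory, replacing most of the DRAM with persistent memory that costs less per gigabyte lowers the host's price for the same number of VMs. The sketch below shows that calculation. Every price and capacity in it is a hypothetical placeholder, not Intel's data, and the model assumes memory is the only limit on VM count. It also ignores performance differences between DRAM and Optane.

```python
from dataclasses import dataclass


@dataclass
class HostConfig:
    base_cost: float               # chassis, CPUs, storage, etc. (hypothetical)
    dram_gb: int
    dram_cost_per_gb: float        # hypothetical price
    pmem_gb: int = 0
    pmem_cost_per_gb: float = 0.0  # hypothetical price


def usable_memory_gb(host: HostConfig) -> int:
    # In Memory Mode the DRAM acts as a cache in front of the Optane modules,
    # so only the Optane capacity is visible to the hypervisor.
    return host.pmem_gb if host.pmem_gb > 0 else host.dram_gb


def cost_per_vm(host: HostConfig, gb_per_vm: int) -> float:
    """Total host cost divided by the number of VMs that fit in its memory."""
    vms = usable_memory_gb(host) // gb_per_vm
    if vms == 0:
        raise ValueError("host cannot fit a single VM")
    total_cost = (host.base_cost
                  + host.dram_gb * host.dram_cost_per_gb
                  + host.pmem_gb * host.pmem_cost_per_gb)
    return total_cost / vms


if __name__ == "__main__":
    # Hypothetical hosts with the same base cost and 1.5TB of visible memory.
    dram_only = HostConfig(base_cost=10_000, dram_gb=1536, dram_cost_per_gb=10.0)
    memory_mode = HostConfig(base_cost=10_000, dram_gb=192, dram_cost_per_gb=10.0,
                             pmem_gb=1536, pmem_cost_per_gb=5.0)
    for name, host in (("DRAM only", dram_only), ("Memory Mode", memory_mode)):
        print(f"{name}: ${cost_per_vm(host, gb_per_vm=32):,.2f} per VM")
```

How large the saving turns out to be depends entirely on the real price gap per gigabyte and on how much DRAM the host keeps as cache.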

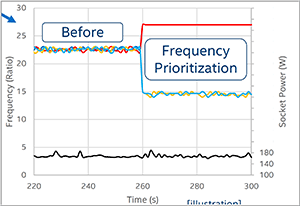

Some of the CPUs announced in the Cascade Lake generation support Intel Speed Select Technology (SST), which lets you prioritize workloads by running the cores that host high-priority workloads at a higher frequency than the other cores (Figure 4). Because the remaining cores run at lower frequencies, the CPU stays within its total power (watt) rating.

Figure 4. Intel Speed Select Technology. Source: Intel Corp.
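
The trade-off can be illustrated with a deliberately crude toy model. It assumes each core's power is proportional to its frequency, so keeping the sum of core frequencies constant keeps the package at its rated power. Real power scales faster than linearly with frequency and voltage, and this is not how Intel's firmware actually allocates frequencies. The function name and the example frequencies are hypothetical.

```python
def split_frequencies(n_cores: int, nominal_ghz: float,
                      high_priority_cores: int, high_ghz: float) -> list[float]:
    """Toy Speed Select-style split of a fixed frequency budget.

    Assumes power is proportional to frequency, so the budget is simply
    n_cores * nominal_ghz. High-priority cores get high_ghz, and the
    remaining cores share what is left equally.
    """
    if not 0 <= high_priority_cores < n_cores:
        raise ValueError("need at least one low-priority core")
    budget = n_cores * nominal_ghz
    remaining = budget - high_priority_cores * high_ghz
    low_ghz = remaining / (n_cores - high_priority_cores)
    if low_ghz <= 0:
        raise ValueError("budget cannot support that many high-priority cores")
    return [high_ghz] * high_priority_cores + [low_ghz] * (n_cores - high_priority_cores)


# Hypothetical 20-core part at 2.1GHz with four cores boosted to 2.7GHz.
freqs = split_frequencies(20, 2.1, 4, 2.7)
print(freqs[:4], round(freqs[4], 2))
```

In this model, boosting four cores by 0.6GHz costs each of the other 16 cores 0.15GHz, which is the essence of staying inside a fixed power envelope.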

This generation of Xeon CPUs differs from the first generation of Xeon Scalable processors (released in 2017) in two distinct ways. First, it's geared not only toward high-performance computing (HPC) but also toward the fast-growing fields of analytics and artificial intelligence (AI). Second, it comes in many variants optimized for a wide variety of workloads.

The Cascade Lake architecture can support up to 56 cores and 112 threads, 1MB of L2 cache per core, 38.5MB of shared L3 cache, servers with up to eight sockets, 48 PCIe 3.0 lanes, 16Gb DDR4 DRAM devices and 4.5TB of memory (DRAM plus Optane DCPM) per processor.
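
The 4.5TB per-processor figure is easiest to understand as per-socket arithmetic. The sketch below assumes six memory channels per socket, each populated with one 256GB DDR4 DIMM and one 512GB Optane DCPM module. Those configuration details are assumptions for illustration, not figures taken from this article.

```python
# Assumed per-socket configuration (illustrative only):
CHANNELS_PER_SOCKET = 6
DRAM_DIMM_GB = 256   # assumed largest DDR4 DIMM per channel
PMEM_DIMM_GB = 512   # assumed largest Optane DCPM module per channel
GB_PER_TB = 1024


def max_memory_per_socket_gb(channels: int, dram_dimm_gb: int, pmem_dimm_gb: int) -> int:
    """Capacity with one DRAM DIMM and one Optane module on every channel."""
    return channels * (dram_dimm_gb + pmem_dimm_gb)


total_gb = max_memory_per_socket_gb(CHANNELS_PER_SOCKET, DRAM_DIMM_GB, PMEM_DIMM_GB)
print(total_gb, total_gb / GB_PER_TB)  # prints: 4608 4.5
```

Under these assumptions, 3TB of the total comes from Optane (6 x 512GB) and 1.5TB from DRAM (6 x 256GB).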

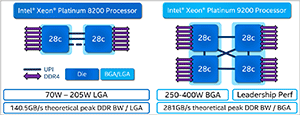

Looking at the two most powerful series of CPUs, the 8200 and 9200, we can see that they have two and four dies, respectively (Figure 5). The theoretical DDR4 memory bandwidth of 281GB/s is truly remarkable.

Figure 5. The 8200 and 9200 CPUs. Source: Intel Corp.
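
Theoretical peak memory bandwidth is simply channels x transfer rate x bytes per transfer. The sketch below assumes 12 DDR4-2933 channels per 9200-series package, a six-channel DDR4-2933 configuration for comparison, and the standard 64-bit (8-byte) DDR4 channel width. With those assumptions, the calculation lands on the 281GB/s figure.

```python
def peak_bandwidth_gbs(channels: int, mega_transfers_per_s: int,
                       bytes_per_transfer: int = 8) -> float:
    """Theoretical peak = channels x transfers per second x bytes per transfer."""
    bytes_per_s = channels * mega_transfers_per_s * 1_000_000 * bytes_per_transfer
    return bytes_per_s / 1e9  # decimal gigabytes per second


# Assumed: 12 DDR4-2933 channels per 9200 package; 6 channels for comparison.
print(round(peak_bandwidth_gbs(12, 2933), 1))  # prints: 281.6
print(round(peak_bandwidth_gbs(6, 2933), 1))   # prints: 140.8
```

These are ceilings. Sustained bandwidth in real workloads is lower.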

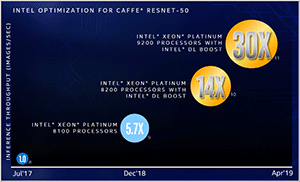

Intel also introduced DL Boost (Figure 6), which fuses a three-instruction sequence commonly used in AI inference into a single instruction, yielding up to a theoretical 3x performance boost for common AI frameworks such as Caffe2, TensorFlow and PaddlePaddle. According to Intel, this, coupled with the other technology in the Cascade Lake CPU architecture, can yield up to a 30x increase in throughput.

Figure 6. Intel DL Boost. Source: Intel Corp.
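
The fused operation is an INT8 multiply-accumulate. Without DL Boost, AVX-512 code typically multiplies unsigned 8-bit activations by signed 8-bit weights and accumulates the results into 32-bit lanes with VPMADDUBSW, VPMADDWD and VPADDD. The VNNI instruction VPDPBUSD does the same work in one step. The Python sketch below emulates both paths on a few 32-bit lanes to show that they compute the same dot products. It ignores SIMD vector widths and 32-bit wraparound, so it illustrates the arithmetic rather than how any framework implements it. The helper names are hypothetical.

```python
def saturate_int16(x: int) -> int:
    """Clamp to the signed 16-bit range, as VPMADDUBSW does."""
    return max(-32768, min(32767, x))


def _products(acc, a_u8, b_s8):
    if not len(a_u8) == len(b_s8) == 4 * len(acc):
        raise ValueError("need four byte pairs per 32-bit accumulator lane")
    return [a * b for a, b in zip(a_u8, b_s8)]


def legacy_int8_dot(acc, a_u8, b_s8):
    """Emulates VPMADDUBSW + VPMADDWD (with ones) + VPADDD on 32-bit lanes."""
    prods = _products(acc, a_u8, b_s8)
    # Step 1 (VPMADDUBSW): adjacent u8*s8 products summed into saturated int16.
    pairs16 = [saturate_int16(prods[i] + prods[i + 1]) for i in range(0, len(prods), 2)]
    # Step 2 (VPMADDWD with a vector of ones): adjacent int16 values summed to int32.
    quads32 = [pairs16[i] + pairs16[i + 1] for i in range(0, len(pairs16), 2)]
    # Step 3 (VPADDD): add into the 32-bit accumulators.
    return [x + y for x, y in zip(acc, quads32)]


def vnni_int8_dot(acc, a_u8, b_s8):
    """Emulates the single VNNI instruction VPDPBUSD on 32-bit lanes."""
    prods = _products(acc, a_u8, b_s8)
    # Four u8*s8 products per lane, summed without any 16-bit saturation.
    return [acc[j] + sum(prods[4 * j:4 * j + 4]) for j in range(len(acc))]


acc = [0, 10]
a = [1, 2, 3, 4, 5, 6, 7, 8]        # unsigned 8-bit activations
b = [1, -1, 2, -2, 3, -3, 4, -4]    # signed 8-bit weights
print(legacy_int8_dot(acc, a, b), vnni_int8_dot(acc, a, b))  # prints: [-3, 3] [-3, 3]
```

The emulation also exposes one real difference between the two paths. The legacy sequence saturates its 16-bit intermediate, so two products of 255 x 127 clamp to 32767. VPDPBUSD keeps the full sum of 64770.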

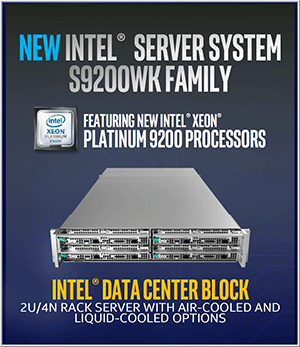

Intel announced the 9200 series of CPUs based on Cascade Lake, which range from 32 to 56 cores. They achieve these core counts by combining and interlinking two dies in a single package. This series of CPUs will only be available as part of a complete system: you won't be able to buy just the CPU or a motherboard with this processor on it. Instead, you'll need to buy an entire system, which Intel calls a Data Center Block, a 2U chassis that can house up to four compute nodes (Figure 7).

Figure 7. The Intel 9200 Data Center Block. Source: Intel Corp.

Wrapping Up

In this article, I touched on the basics of what Intel announced around the release of Cascade Lake, its second generation of Xeon CPUs.

Intel has unlocked a lot of potential with the Cascade Lake chips, and it will be interesting to see how this potential is used in the real world. As with other technologies of this type, the first adopters of Cascade Lake will be those who need the latest technology to handle complex workloads, including HPC centers, financial institutions and, yes, gamers. However, as the cost of these technologies stabilizes, general datacenters typically adopt them. It's also worth noting that Intel has updated its compiler and other tools to take advantage of this generation of CPUs.

The CPUs range in price from $213 for the Xeon Bronze 3204, a six-core CPU, to $10,009 for the Xeon Platinum 8280, which has 28 cores and a base speed of 2.7GHz and supports Optane DCPM. Pricing for the 9200 series, however, has not yet been announced.

About the Author

Tom Fenton has a wealth of hands-on IT experience gained over the past 30 years in a variety of technologies, with the past 20 years focusing on virtualization and storage. He previously worked as a Technical Marketing Manager for ControlUp. He also previously worked at VMware in Staff and Senior level positions. He has also worked as a Senior Validation Engineer with The Taneja Group, where he headed the Validation Service Lab and was instrumental in starting up its vSphere Virtual Volumes practice. He's on X @vDoppler.