AI's New Frontier: Why the PC Is Stealing the Cloud's AI Thunder

For years, the promise of AI has largely resided in the cloud, with powerful services and massive datasets driving innovation from a centralized vantage point. But a recent Intel-commissioned report signals a significant shift, revealing that the next wave of AI adoption is moving closer to the user. Businesses are increasingly turning to the specialized hardware of AI PCs, recognizing their potential not just for productivity gains, but for revolutionizing IT efficiency, fortifying data security, and delivering a compelling return on investment by bringing AI capabilities directly to the edge.

All that and more is detailed in the July 17 AI PC Global Report from Intel, which surveyed 5,050 business and IT decision-makers across 23 countries and points to growing momentum for on-device AI acceleration.

Of specific importance to readers of Virtualization & Cloud Review is the increasing buzz around non-cloud AI, with the report noting, "One of the most powerful advantages of AI PCs is the ability to run AI workloads locally, offline and privately, without sending sensitive data to the cloud."

In fact, the report's introduction specifically sets up the diminishing importance of the cloud in the AI landscape:

Businesses are already well-versed in the productivity gains that AI delivers for tasks like search optimization or language translation. Consumed as a cloud service, this type of day-to-day AI use is driving adoption. But for businesses looking to extend IT efficiency, better protect sensitive data, and reduce long-term costs, AI PCs are fast becoming the go-to option.

Some data points to back that up include:

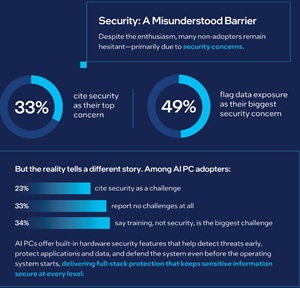

- Security benefits of local AI: AI PCs come with built-in hardware security features that help detect threats early, protect applications and data, and defend the system even before the operating system starts. This provides full-stack protection and is especially valuable for regulated industries like healthcare, finance, and legal services, where data privacy and compliance are paramount.

- 49% of non-adopters cite data exposure as their top security concern: This concern was flagged by nearly half of respondents who haven't yet adopted AI PCs. Intel counters this by emphasizing that one of the most powerful advantages of AI PCs is the ability to run AI workloads locally, offline and privately, without sending sensitive data to the cloud. By keeping data on the device, organizations can reduce exposure risks while still benefiting from real-time AI capabilities.

Data Exposure (source: Intel).

- Only 23% of adopters report security as a challenge, and 33% report no challenges at all: While a third of non-adopters cite security as their biggest AI PC concern, only 23% of those already using the technology highlight security as a challenge. A third of adopters (33%) have not experienced any challenges, including security issues, when using AI PCs. This suggests that concerns about replacing cloud AI with endpoint AI may be overstated in practice.

- AI workloads are already being run locally for core tasks: The report highlights that Independent Software Vendors (ISVs) are optimizing their applications for AI PCs, integrating AI features like real-time transcription, intelligent editing, and enhanced collaboration tools that take advantage of on-device AI acceleration. Common use cases for AI currently include optimized search (73%), real-time translation (72%), and predictive text input (71%).

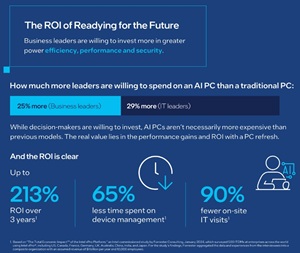

- Strong ROI demonstrated for AI PCs: AI PCs built on the Intel vPro platform and powered by Intel Core Ultra processors can deliver up to 213% ROI over three years, with a payback period of less than six months.

The ROI (source: Intel).

- Willingness to invest in AI PCs: IT leaders are willing to spend up to 29% more for AI PCs compared to traditional PCs, and business leaders are willing to spend up to 25% more.

- Training crucial for effective AI PC use: 95% of respondents believe employees will need training to effectively use AI PCs.

More Training (source: Intel).

- Existing gap in AI PC training provision: Only 42% of organizations provide ongoing AI training, with 33% relying on one-off sessions, and 35% of organizations reporting no training provided at all.

- On-device AI supports data sovereignty and compliance: The ability to run AI workloads locally and privately is "especially critical for regulated industries like healthcare, finance, and legal services, where data privacy and compliance are paramount." This local processing helps organizations adhere to regulations that might restrict cloud-based data handling.

- 65% less time spent on device management and 90% fewer on-site IT visits: Organizations leveraging AI PCs built on the Intel vPro platform can expect to spend 65% less time on device management. Furthermore, IT staff can drastically reduce the amount of time spent physically visiting sites for maintenance interventions, potentially reducing hardware-related on-site visits by as much as 90%. This suggests a more autonomous, self-sustaining endpoint experience, further minimizing reliance on centralized services.

- Independent Software Vendors (ISVs) are building apps that assume local AI capability: The report states that "Another key factor in readying for the future is the growing ecosystem of Independent Software Vendors (ISVs) that are optimizing their applications for AI PCs." Leading ISVs are already integrating AI features like real-time transcription, intelligent editing, and enhanced collaboration tools that leverage on-device AI acceleration.

- Performance improvements of up to 100% for local workloads: AI PCs can deliver "Up to 100% performance gains running workloads on the latest Intel vPro platform when compared with the Intel Core 1000 series processors." These workloads include data visualization and insights, content creation, local AI assistance, and digital marketing. This reflects a shift in architecture that favors endpoint execution over cloud round-tripping.
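To make the ROI and price-premium figures in the list above more concrete, here is a minimal arithmetic sketch. It assumes the common simple definition ROI = (total benefit - cost) / cost, which may differ from the methodology behind Intel's figure, and the per-device cost and baseline price are hypothetical numbers chosen only for illustration.

```python
def total_benefit_from_roi(cost: float, roi_percent: float) -> float:
    """Total benefit implied by an ROI figure, assuming ROI = (benefit - cost) / cost."""
    return cost * (1 + roi_percent / 100)


def price_with_premium(baseline_price: float, premium_percent: float) -> float:
    """Highest price a buyer would accept given a willingness-to-pay premium."""
    return baseline_price * (1 + premium_percent / 100)


# Hypothetical inputs, not taken from the report.
hypothetical_cost = 1000.0
hypothetical_baseline_price = 1000.0

# 213% is the "up to" three-year ROI cited in the report.
benefit = total_benefit_from_roi(hypothetical_cost, 213)
net_gain = benefit - hypothetical_cost
print(f"Implied three-year benefit: {benefit:,.0f}")
print(f"Implied three-year net gain: {net_gain:,.0f}")

# 29% and 25% are the premiums IT and business leaders said they would pay.
print(f"IT leader ceiling: {price_with_premium(hypothetical_baseline_price, 29):,.0f}")
print(f"Business leader ceiling: {price_with_premium(hypothetical_baseline_price, 25):,.0f}")
```

Under these assumptions, a 213% ROI means every unit of cost returns a little over three units of benefit across the three-year window.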

The Intel report clearly positions AI PCs as a pivotal shift in the enterprise AI landscape, moving significant processing capabilities from centralized cloud environments to the endpoint. For IT professionals deeply involved in virtualization and cloud infrastructure, this evolution presents both challenges and opportunities.

Implications for Virtualization and Cloud Professionals

- Rethinking Workload Distribution: The rise of AI PCs necessitates a re-evaluation of where AI workloads are best executed. While complex, large-scale AI model training and massive data analytics will likely remain cloud-based, edge-based inference and day-to-day productivity tasks can increasingly leverage on-device NPUs, reducing network latency and cloud compute costs.

- Enhanced Endpoint Security Strategies: With sensitive data processing occurring locally, the focus on endpoint security becomes even more critical. IT professionals will need to leverage the hardware-level security features of AI PCs and integrate them into a holistic security posture that complements existing cloud security frameworks.

- Optimizing Network Traffic: Shifting AI workloads to the client side can significantly reduce data egress from cloud environments, potentially lowering bandwidth consumption and associated costs, freeing up network resources for other critical operations.

- New Management Paradigms: The report highlights reduced device management time and fewer on-site IT visits with AI PCs. This points to a need for IT teams to adapt to more autonomous, self-sustaining endpoint management strategies, potentially freeing up resources to focus on more strategic cloud and infrastructure initiatives.

- Addressing the Skills Gap: Despite the benefits, the report underscores a significant training gap. IT leaders will be instrumental in developing targeted training programs that empower end-users to fully leverage on-device AI capabilities and educate teams on the nuances of managing a hybrid AI landscape.

- Collaboration with ISVs: As Independent Software Vendors (ISVs) increasingly build applications optimized for local AI acceleration, IT professionals will need to collaborate closely with these vendors to integrate these new capabilities seamlessly into their enterprise software ecosystems.
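The workload-distribution point above can be sketched as a simple placement rule. This is an illustrative policy only, not something described in the report: the `Workload` fields, the `choose_target` function, and the 8 GB on-device model budget are all hypothetical assumptions.

```python
from dataclasses import dataclass
from enum import Enum


class Target(Enum):
    DEVICE = "device"
    CLOUD = "cloud"


@dataclass(frozen=True)
class Workload:
    name: str
    is_training: bool      # model training vs. inference
    model_size_gb: float   # rough memory footprint of the model


def choose_target(workload: Workload, device_budget_gb: float = 8.0) -> Target:
    """Pick where a workload runs under a hypothetical placement policy."""
    # Large-scale training stays in the cloud.
    if workload.is_training:
        return Target.CLOUD
    # Inference that does not fit the assumed on-device budget also goes to the cloud.
    if workload.model_size_gb > device_budget_gb:
        return Target.CLOUD
    # Everything else (day-to-day inference) runs locally.
    return Target.DEVICE


examples = [
    Workload("real-time translation", is_training=False, model_size_gb=1.5),
    Workload("model fine-tuning", is_training=True, model_size_gb=2.0),
    Workload("large-model chat", is_training=False, model_size_gb=40.0),
]
for w in examples:
    print(f"{w.name}: {choose_target(w).value}")
```

A real policy would likely also weigh data sensitivity, offline requirements, and battery or thermal limits; those are left out here to keep the rule easy to follow.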

The Future of Enterprise AI: A Hybrid Approach

Intel's push to ship a cumulative 100 million AI PCs powered by Core Ultra processors by the end of 2025 underscores a clear vision for the future: a robust, hybrid AI environment where cloud and edge intelligence complement each other. This means IT strategies must evolve to embrace distributed AI, ensuring that the right workloads run in the right place -- be it the cloud or the AI-powered endpoint -- for optimal performance, security, and cost-efficiency.

Other Tech Giants Join the On-Device AI Frontier

While Intel champions the AI PC, other technology giants are also making significant moves to bring AI capabilities closer to the user, challenging the cloud's exclusive dominance in AI processing. This trend signals a broader industry shift towards distributed AI, where various devices -- from PCs to smartphones and specialized edge hardware -- become powerful AI engines.

Microsoft has been particularly aggressive in this space, launching its Copilot+ PCs, a new category of Windows PCs designed with dedicated AI acceleration to run AI features natively on the device. These PCs leverage powerful neural processing units (NPUs) to deliver enhanced performance for AI-driven tasks, aiming to transform user productivity and creativity directly on the desktop. This strategic move was detailed in the Redmond Magazine article "Microsoft Announces New AI PCs at Pre-Build Event."

Similarly, Google is investing in on-device AI, primarily through its Gemini Nano model, designed for efficiency on mobile devices. Gemini Nano enables features like on-device summarization, smart replies, and advanced scam detection, allowing AI tasks to run privately and without an internet connection on devices like Pixel phones. Google's broader AI Edge initiative provides developers with tools and frameworks to deploy AI across mobile, web, and embedded applications, emphasizing low latency, offline functionality, and local data privacy. This foundational strategy for on-device models is further elaborated in Google's developer resources, including "Introducing Gemma 3n: The developer guide."

These efforts from industry leaders like Microsoft and Google, alongside Intel's focus on the AI PC, underscore a clear industry trajectory: AI is becoming increasingly localized, embedded directly into the devices we use daily. This evolution promises to enhance security, reduce latency, and unlock new levels of efficiency and personalization, fundamentally reshaping the enterprise AI landscape and how IT professionals manage their computing environments.